Modern telemetry is incredibly complex, particularly at enterprise scale. Between disparate data sources and formats, segmentation across several downstream destinations, and skyrocketing data volumes, organizations are increasingly looking for better solutions to help achieve efficient data collection, processing, routing, and visibility across all their environments and services.

Edge Delta’s next-generation Telemetry Pipelines leverage a novel architecture to intelligently collect, process, and forward data as it’s created, helping teams improve clarity, extract signal from noise, and enhance downstream monitoring and analysis.

Want Faster, Safer, More Actionable Root Cause Analysis?

With Edge Delta’s out-of-the-box AI agent for SRE, teams get clearer context on the alerts that matter and more confidence in the overall health and performance of their apps.

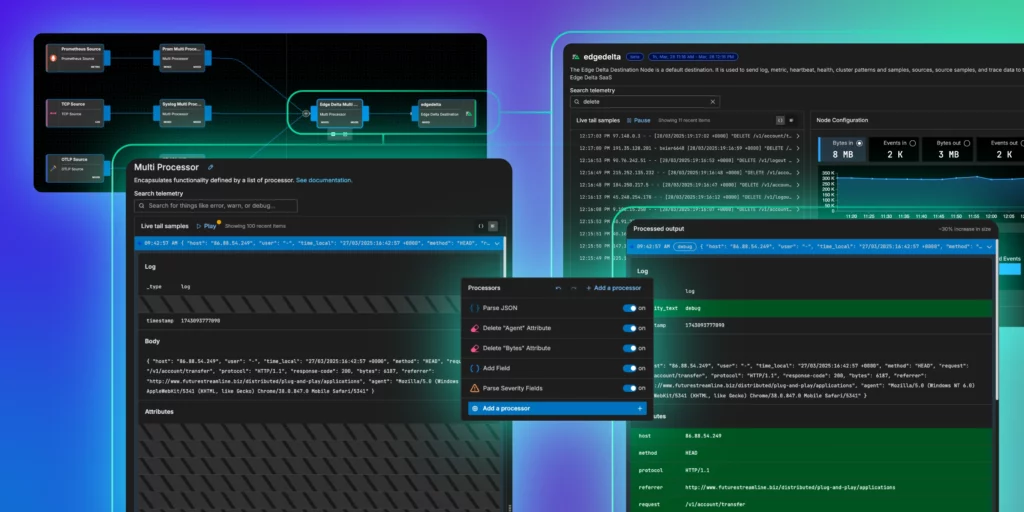

To continue our mission of providing full telemetry data control and visibility, we’re excited to announce the release of two new features, Multi-Processor Nodes and Live Capture, that revolutionize pipeline management through Edge Delta’s Pipeline interface. With these tools, users can create elaborate pipeline configurations with ease, greatly simplifying pipeline construction and modification, all while visualizing how their data is being processed in real time, each step of the way.

In this post, we’ll walk through both features in detail and demonstrate how they provide an unparalleled pipeline management experience.

Build Better with Multi-Processor Nodes

Optimally structuring raw data for downstream analysis often requires multiple distinct processing steps. For example, to properly analyze logs generated in the Common Event Format (CEF), teams must parse each log body and extract its key fields, enrich the log’s metadata, filter out noise, and map the result into the appropriate schema for backend ingestion. However, adding each of these processors as a separate node is tedious and can lead to misconfiguration, particularly in large-scale deployments.
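To make those steps concrete, here is a minimal Python sketch of the same parse, enrich, filter, and map sequence written by hand. It is not Edge Delta’s implementation: the function names, the `environment` tag, the severity threshold, and the output schema are all assumptions made for illustration, and the sketch assumes numeric CEF severities.

```python
import re

# CEF header layout:
# CEF:Version|Vendor|Product|Device Version|Signature ID|Name|Severity|Extension
HEADER_FIELDS = ["cef_version", "vendor", "product", "device_version",
                 "signature_id", "name", "severity"]


def parse_cef(line):
    """Split a CEF line into its seven header fields plus key=value extensions."""
    body = line[line.index("CEF:") + len("CEF:"):]
    # Split on unescaped pipes only; everything after the 7th pipe is the extension.
    parts = re.split(r"(?<!\\)\|", body, maxsplit=len(HEADER_FIELDS))
    record = dict(zip(HEADER_FIELDS, parts))
    extension = parts[len(HEADER_FIELDS)] if len(parts) > len(HEADER_FIELDS) else ""
    # Values may contain spaces, so each value runs until the next " key=" or the end.
    record["extensions"] = dict(re.findall(r"(\w+)=(.*?)(?=\s+\w+=|$)", extension))
    return record


def enrich(record, metadata):
    """Attach extra metadata (hypothetical tags such as the environment)."""
    return {**record, **metadata}


def is_signal(record, min_severity=5):
    """Keep only events at or above an assumed severity threshold."""
    return int(record["severity"]) >= min_severity


def map_to_schema(record):
    """Rename fields into a hypothetical backend schema."""
    ext = record["extensions"]
    return {
        "event": record["name"],
        "severity": int(record["severity"]),
        "source_ip": ext.get("src"),
        "dest_ip": ext.get("dst"),
        "message": ext.get("msg"),
        "environment": record.get("environment"),
    }


def process(lines, metadata):
    """Run parse -> enrich -> filter -> map over each raw line."""
    out = []
    for line in lines:
        record = enrich(parse_cef(line), metadata)
        if is_signal(record):
            out.append(map_to_schema(record))
    return out


sample = [
    "CEF:0|Acme|Firewall|1.0|100|Login failed|7|src=10.0.0.1 dst=10.0.0.2 msg=Failed login attempt",
    "CEF:0|Acme|Firewall|1.0|200|Heartbeat|1|src=10.0.0.1",
]
for event in process(sample, {"environment": "prod"}):
    print(event)
```

Even in this toy form, four separate functions have to agree on field names, which is the kind of coupling that becomes error-prone when every step is configured on its own.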

With Multi-Processor Nodes, users can consolidate sequential processing steps into a single, unified node. Instead of treating each processor as a completely separate entity, users create logical groupings of processors and edit them together under one node view, streamlining pipeline configuration.

Take the example pipeline below, where server and web traffic logs are:

- Collected via HTTP and OTLP input nodes respectively

- Structured, enriched, and filtered

- Shipped downstream into Splunk and Edge Delta

Though its configuration is relatively straightforward, the pipeline is hard to manage and understand, and if the user wants to add more processing steps or new data flows, the visual complexity will only grow.

We can improve pipeline structure and functionality by using Multi-Processor Nodes to combine these sequential processors into logical groupings of work.

Configuring individual processors within a Multi-Processor Node is straightforward: simply choose the processors you’d like to include, specify which field(s) in the data item you’d like to modify, and Edge Delta will automatically construct the appropriate logic.

In this case, we can roll the ParseJSON and Log Enrichment nodes into an Apache Logs Multi-Processor Node, the Grok Pattern Extraction and Field Removal nodes into a Common Log Format Multi-Processor Node, and the Filter 4xx and Splunk Mapper nodes into a Splunk Mapper Multi-Processor Node.
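As a rough mental model of this grouping (not Edge Delta’s actual node format), a Multi-Processor Node can be thought of as an ordered list of processors that runs as one unit. In the Python sketch below, the `MultiProcessorNode` class, the item shape (`body`, `attributes`, `resource`), and the destination mapping are all hypothetical; only the node names come from the example pipeline.

```python
import json
from dataclasses import dataclass, field
from typing import Callable, List, Optional

Item = dict
# A processor returns the (possibly modified) item, or None to drop it.
Processor = Callable[[Item], Optional[Item]]


@dataclass
class MultiProcessorNode:
    """An ordered group of processors applied as a single pipeline step."""
    name: str
    processors: List[Processor] = field(default_factory=list)

    def process(self, item: Item) -> Optional[Item]:
        for step in self.processors:
            item = step(item)
            if item is None:  # a filter dropped the item; stop early
                return None
        return item


def parse_json(item):
    """Stand-in for ParseJSON: parse the raw body into attributes."""
    return {**item, "attributes": json.loads(item["body"])}


def enrich(item):
    """Stand-in for Log Enrichment: attach assumed resource metadata."""
    return {**item, "resource": {"service": "web"}}


def filter_4xx(item):
    """Stand-in for Filter 4xx: drop items whose status is 400-499."""
    status = item["attributes"].get("status", 0)
    return None if 400 <= status < 500 else item


def map_for_destination(item):
    """Stand-in for Splunk Mapper: reshape into a hypothetical output format."""
    return {"event": item["attributes"], "fields": item.get("resource", {})}


apache_logs = MultiProcessorNode("Apache Logs", [parse_json, enrich])
splunk_mapper = MultiProcessorNode("Splunk Mapper", [filter_4xx, map_for_destination])


def run(item):
    """Send one item through both grouped nodes in order."""
    item = apache_logs.process(item)
    return None if item is None else splunk_mapper.process(item)
```

With this shape, adding a processing step means appending one function to a node’s list instead of wiring another box into the pipeline graph.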

The modified pipeline is far more streamlined and, as a result, much easier to work with.

By attaching to each distinct source and destination, Multi-Processor Nodes:

- Simplify pipeline visualization

- Appropriately apply bespoke processing requirements to each data source and destination

- Create a standardized format to simplify intermediary processing and routing

Additional Multi-Processor Nodes can easily be added between pipeline inputs and outputs for aggregation across multiple sources and routing into multiple destinations. What’s more, each individual processor within Multi-Processor Nodes utilizes the highly efficient OpenTelemetry Transformation Language (OTTL) under the hood, simplifying processing configuration through logical OTTL statements and accelerating processing time.

Visualize Processing Previews with Live Capture

Having an in-depth understanding of how new processing rules affect your telemetry data during configuration is a critical part of the pipeline construction process. Without it, teams struggle with troubleshooting processing steps before pushing changes into production, which can lead to inaccurate transformations, unexpected data loss, and ultimately reduced downstream monitoring and analysis quality.

With Live Capture, Edge Delta users gain deep visibility over their data in real time as it’s flowing through their pipeline — and can thoroughly test their processing steps to remain confident in the efficacy of their modifications.

With Live Capture’s three-panel view, users receive:

- A context-rich live tail of data entering and exiting the chosen node

- Information on which fields are impacted by the associated processing steps within the viewed node

- A percentage delta of data volume increase or decrease post-processing
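The last two items in that list boil down to simple comparisons between what enters a node and what leaves it. The following Python sketch shows one way such numbers could be computed. It is an illustration only: measuring size as compact JSON bytes and the helper names `volume_delta_percent` and `impacted_fields` are assumptions, not a description of how Live Capture works internally.

```python
import json


def size_bytes(items):
    """Total size of the items, measured as compact UTF-8 JSON (an assumption)."""
    return sum(len(json.dumps(i, separators=(",", ":")).encode("utf-8")) for i in items)


def volume_delta_percent(before, after):
    """Percentage change in volume from input to output (negative means reduction)."""
    base = size_bytes(before)
    if base == 0:
        return 0.0
    return (size_bytes(after) - base) / base * 100.0


def impacted_fields(before_item, after_item):
    """Report which top-level fields a processing step added, removed, or changed."""
    before_keys, after_keys = set(before_item), set(after_item)
    return {
        "added": sorted(after_keys - before_keys),
        "removed": sorted(before_keys - after_keys),
        "changed": sorted(k for k in before_keys & after_keys
                          if before_item[k] != after_item[k]),
    }


incoming = [{"status": 200, "debug": "verbose trace"}, {"status": 404, "debug": "x"}]
outgoing = [{"status": 200, "level": "info"}]

print(volume_delta_percent(incoming, outgoing))
print(impacted_fields(incoming[0], outgoing[0]))
```

A negative percentage here means the node is shrinking the stream, for example because a filter dropped items or a field was removed.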

From here, users can experiment with a variety of processing options and visualize how the flow of outgoing data is impacted from a structure or overall volume perspective.

Multi-Processor Nodes and Live Capture – Bringing It Together

Though powerful individually, Multi-Processor Nodes and Live Capture function best when working together.

By integrating Live Capture into Multi-Processor Node configuration workflows, Edge Delta users gain a clear understanding of how their processors work together. Within this combined view, users can add new individual processors to their Multi-Processor Node and immediately visualize how they’re modifying the live data flowing through it.

Users can, for example, easily see how output volume changes as new processors are added, and can adjust them accordingly.

Conclusion

Telemetry pipelines are an effective tool for controlling data volumes, strengthening insight quality, and reducing tooling and downstream ingestion costs. Edge Delta’s Telemetry Pipelines take things one step further, pairing these benefits with deep visibility into both pipeline health and telemetry data as it travels from source to destination.

With the release of our new Multi-Processor Node and Live Capture features, Edge Delta gives teams an unparalleled view of their data streams, enabling them to optimize their processing flows with confidence and ease.

Want to try out our next-generation Telemetry Pipelines yourself? Check out our playground.

Think our Pipelines can help you level up your observability or security? Book a demo to get started.