Implement a Data Tiering Strategy

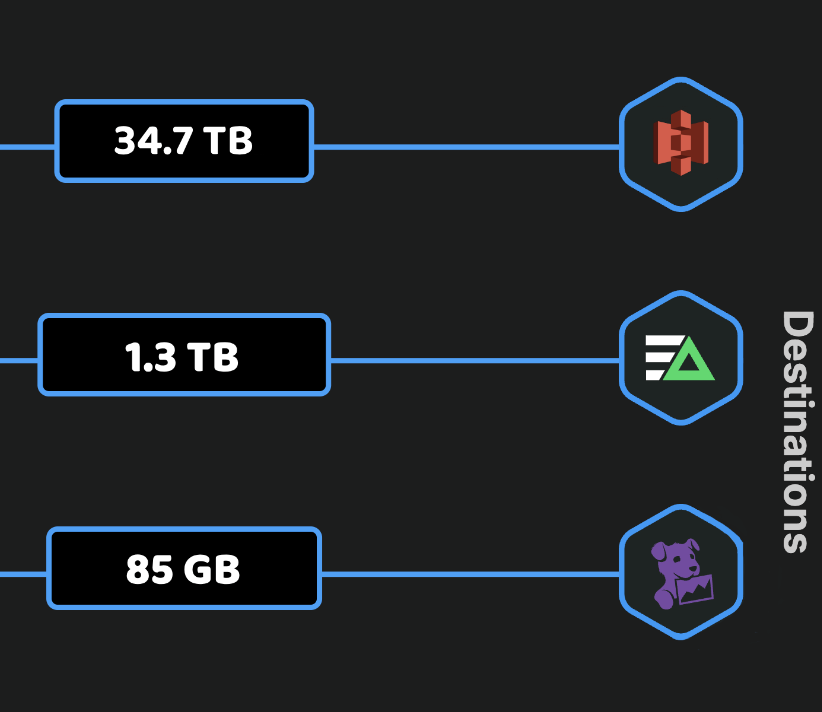

Tier observability and security data across multiple vendors with ease. Route the highest-value data to premium platforms while shipping a copy of all raw data to more cost-effective search or archival destinations. Control your data, reduce costs, and minimize noise.

The Challenge

Vast quantities of low-value data are draining budgets

Companies are overflowing with telemetry data and struggling to limit how much they ingest. As a result, teams have cobbled together imperfect, homegrown solutions that filter out large swaths of logs, metrics, and APM data, hoping to extract valuable insights from the smaller, more manageable portion that remains. This approach is fundamentally flawed and creates a number of problems:

Critical Data Loss

It’s difficult to manually filter without risking loss of essential data.

Blurred Context

Filtering telemetry data strips away context, which reduces the quality of insights and analytics.

Alignment Difficulties

Stakeholders don’t always agree on which methodology to use for filtering.

The Solution

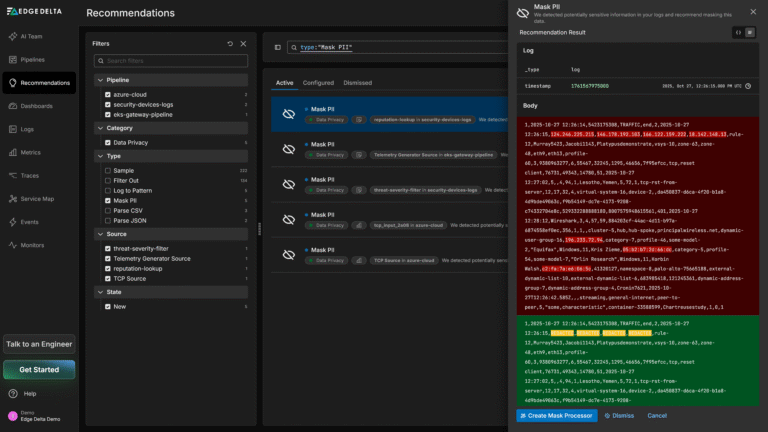

End-to-end control over your telemetry data

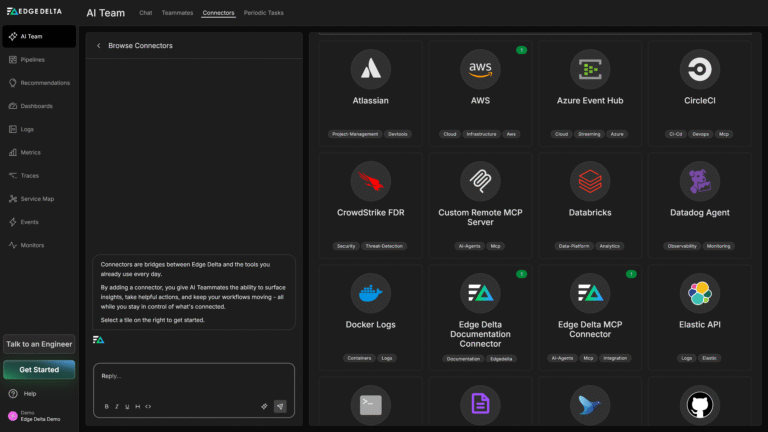

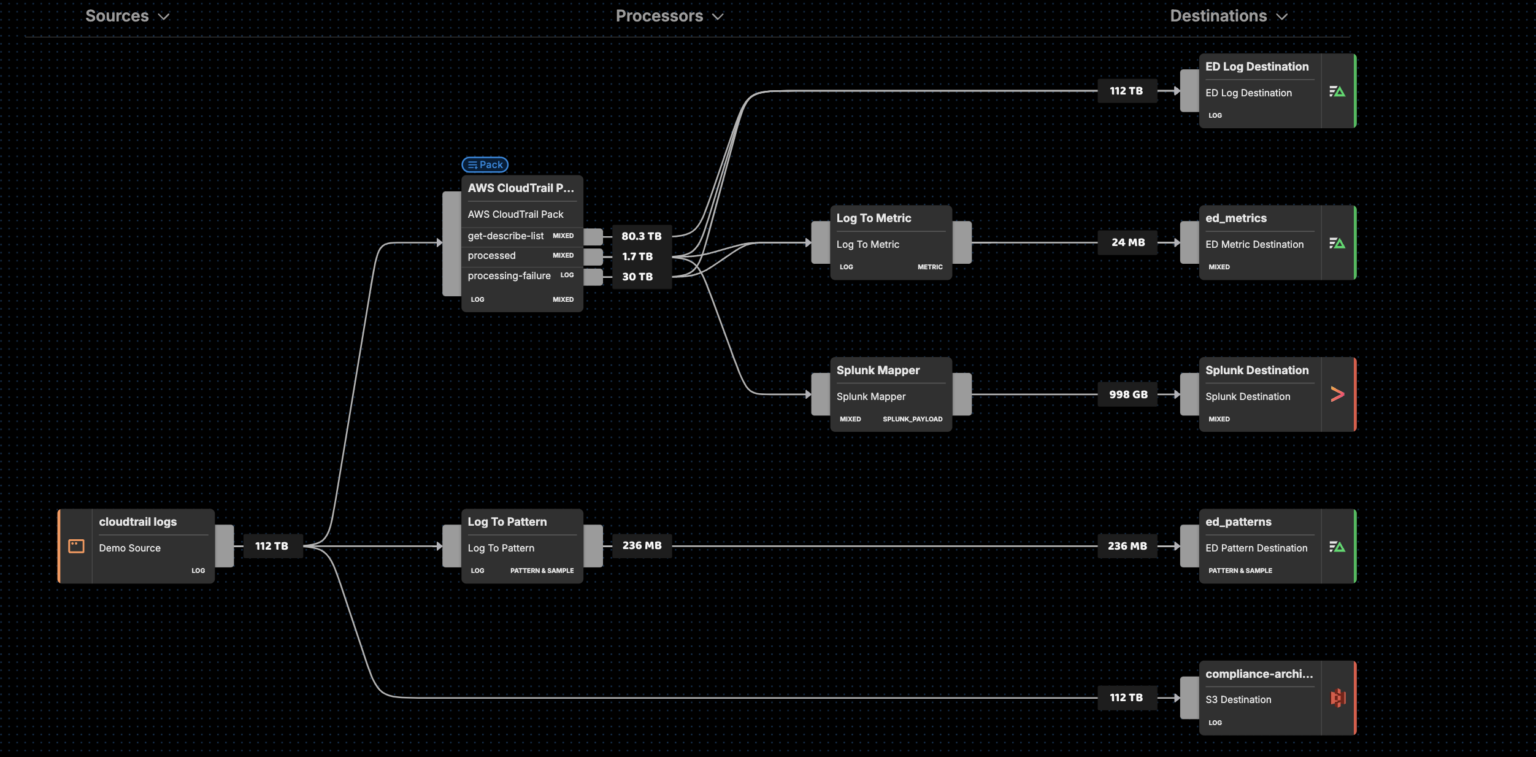

Edge Delta processes your log data as it’s created, and our Visual Pipeline interface lets you easily route it to any number of downstream destinations, including other monitoring platforms and cold storage.

Pre-process logs at the source to adhere to platform-specific requirements and extract high-level patterns for analysis of the bigger picture.

Reduce costs by sending only high-value data to premium platforms, and ship a full copy of all raw data into S3 to support audit, compliance, and investigation use cases.

Limit noise by sending each platform only the data it needs, so that insights and analysis are built on relevant signals.
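The tiering idea described above can be sketched in a few lines of Python. This is a minimal illustration, not Edge Delta's API or pipeline configuration: the function names `destinations_for` and `tier`, the tier labels `"archive"` and `"premium"`, and the rule that treats warnings and errors as high-value are all assumptions made for the example.

```python
from collections import defaultdict

# Hypothetical rule: treat warnings and errors as high-value data.
HIGH_VALUE_LEVELS = {"WARN", "ERROR", "FATAL"}


def destinations_for(record: dict) -> list[str]:
    """Return the tiers a single record should be sent to."""
    # Every raw record is copied to low-cost archival storage (for example, S3).
    tiers = ["archive"]
    level = (record.get("level") or "").upper()
    # Only high-value records are also routed to the premium platform.
    if level in HIGH_VALUE_LEVELS:
        tiers.append("premium")
    return tiers


def tier(records):
    """Group records by destination tier."""
    routed = defaultdict(list)
    for record in records:
        for dest in destinations_for(record):
            routed[dest].append(record)
    return dict(routed)


sample = [
    {"level": "INFO", "msg": "health check ok"},
    {"level": "ERROR", "msg": "payment failed"},
    {"level": "debug", "msg": "cache miss"},
]
routed = tier(sample)
print(len(routed["archive"]), len(routed["premium"]))  # prints: 3 1
```

All three sample records land in the archive tier, while only the error reaches the premium tier. Nothing is dropped, so the full raw history stays available for audit and investigation even though the premium platform receives a fraction of the volume.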

Ready to take the next step?

Learn more about our use cases and how we fit into your observability stack.

Trusted By Teams That Practice Observability at Scale

“This is not just about doing what you used to do in the past, and doing it a little bit better. This is a new way to see this world of how we collect and manage our observability pipelines.”

Ben Kus, CTO, Box


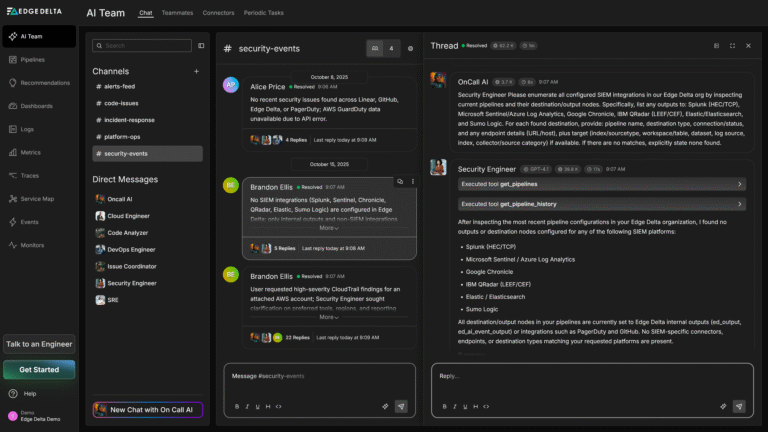

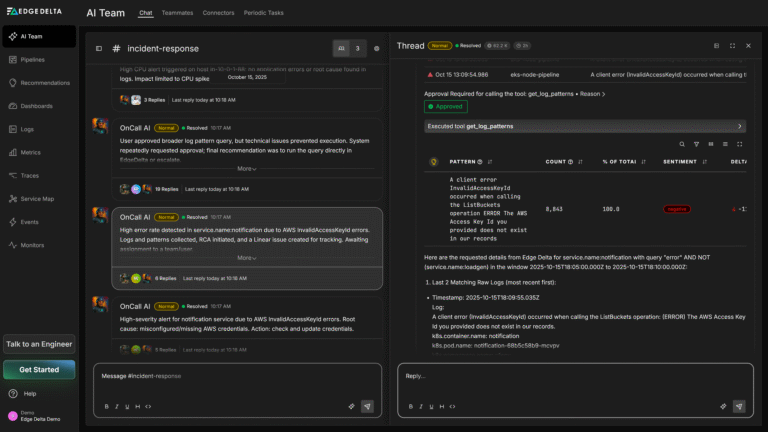

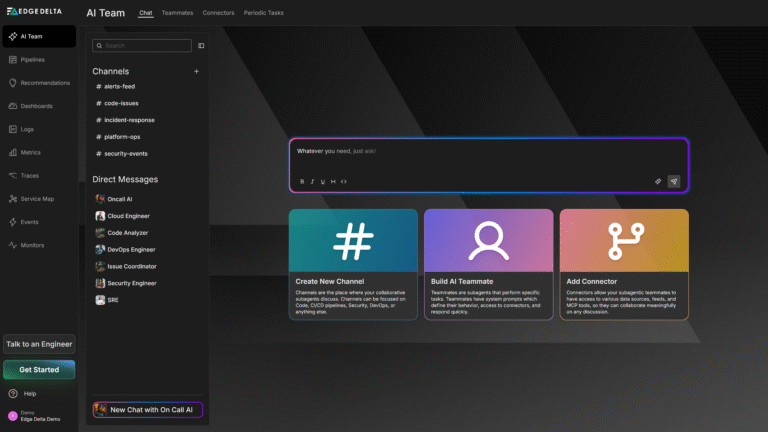
