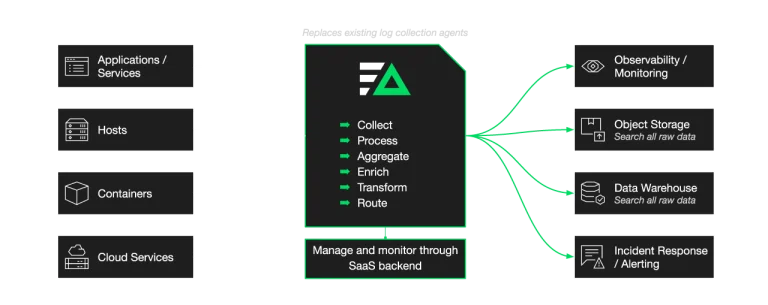

Unified Logging Architecture

Use a single agent to collect, pre-process, and route log data.

The Challenge

Logging Architectures Are Increasingly Complex

Teams use several tools to stay on top of data growth and meet the needs of different users. As a byproduct, logging architectures have become sprawling and difficult to scale. It’s not uncommon for teams to use a combination of:

Open-Source Agents

Lack performance and offer no fleet management capabilities.

Platform-Specific Agents

Create vendor lock-in issues and hinder flexibility.

Data Pipelines

Homegrown versions filter out data in anticipation of what you’ll need.

The Solution

Collect, Pre-Process, and Route Data to Anywhere

Edge Delta supersedes the functionality of existing log collection and routing mechanisms. At the same time, it analyzes your log data upstream and provides visibility to help you build a scalable and sustainable architecture.

Collect data from any source and route to any – or multiple – destinations.

Reliably collect and route petabytes of data while supporting real-time analytics.

Manage and roll out agent configurations, and monitor the health of agents – all from our SaaS backend.

Ready to take the next step?

Learn more about our use cases and how we fit into your observability stack.

Data Pipelines

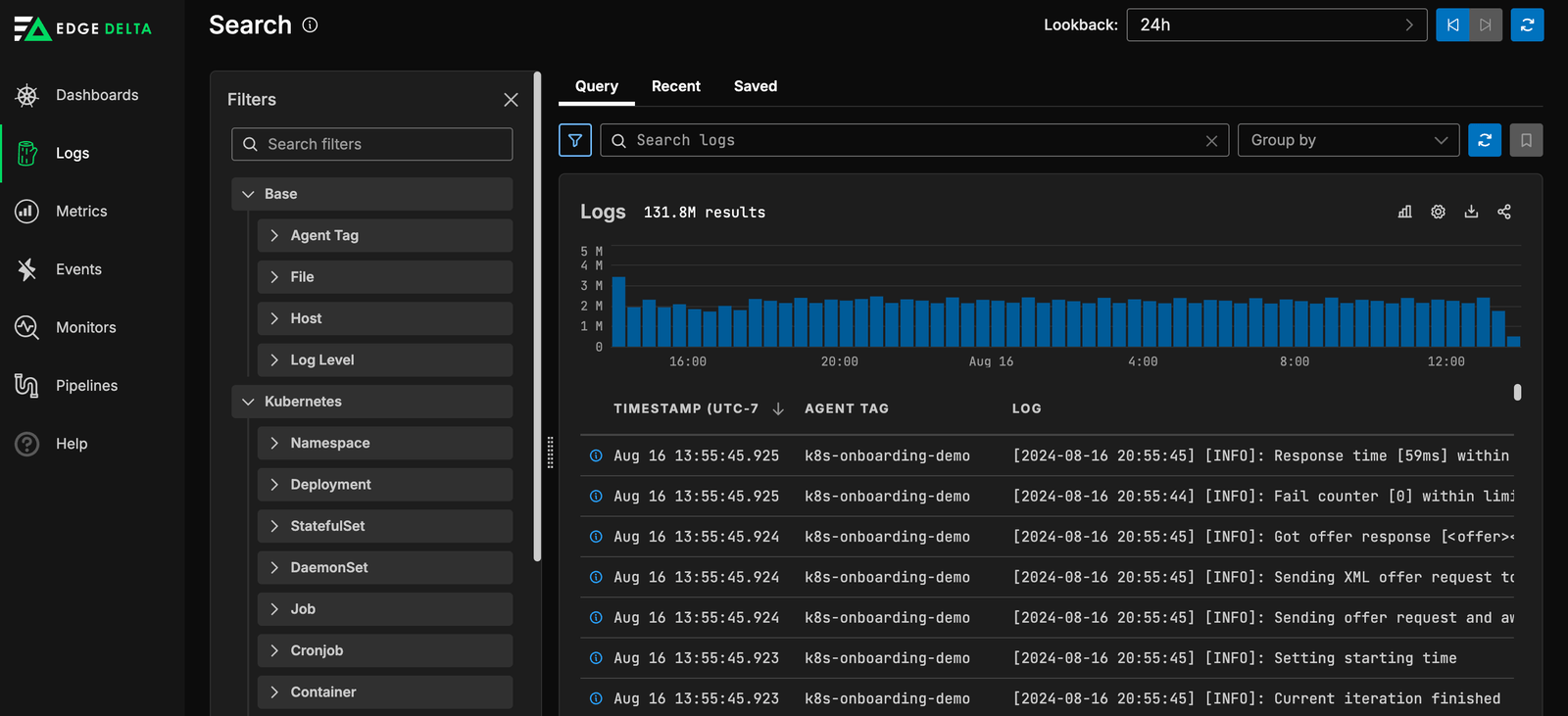

Gain Visibility Into All Log Data

Analyze data at the source – before anything is indexed downstream.

Index Any Level of Fidelity

Meet each team’s needs with optimized datasets, full-fidelity logs, or anything in between.

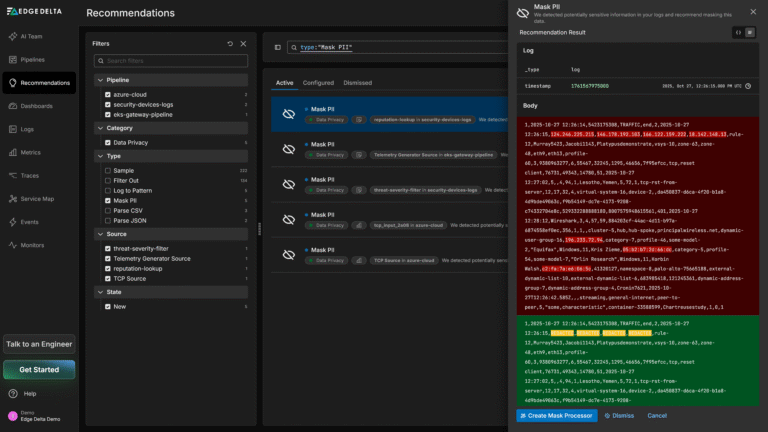

Transform Datasets

Filter, parse, mask, or sample your data as it passes through Edge Delta.
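
To make the four transformations concrete, here is a minimal, generic sketch in Python. It is not Edge Delta's API or configuration format. The function names, the JSON log shape, the `level` and `message` fields, and the `sample_every` parameter are all assumptions chosen for illustration.

```python
import json
import re
from typing import Iterable, Iterator, Optional

# Hypothetical pattern for illustration: a simple email matcher.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def parse(line: str) -> Optional[dict]:
    """Parse: turn one JSON log line into a dict; return None if malformed."""
    try:
        record = json.loads(line)
    except json.JSONDecodeError:
        return None
    return record if isinstance(record, dict) else None


def keep(record: dict) -> bool:
    """Filter: drop DEBUG-level records (assumed 'level' field)."""
    return record.get("level") != "DEBUG"


def mask(record: dict) -> dict:
    """Mask: redact email addresses in the assumed 'message' field."""
    masked = dict(record)
    if "message" in masked:
        masked["message"] = EMAIL_RE.sub("[REDACTED]", str(masked["message"]))
    return masked


def transform(lines: Iterable[str], sample_every: int = 1) -> Iterator[dict]:
    """Parse, filter, and mask each line, keeping every Nth surviving record."""
    kept = 0
    for line in lines:
        record = parse(line)
        if record is None or not keep(record):
            continue
        kept += 1
        # Sample: emit the 1st, (N+1)th, (2N+1)th ... surviving record.
        if (kept - 1) % sample_every == 0:
            yield mask(record)


if __name__ == "__main__":
    raw = [
        '{"level": "INFO", "message": "login by a@b.com"}',
        "not json",
        '{"level": "DEBUG", "message": "cache miss"}',
        '{"level": "ERROR", "message": "upstream timeout"}',
    ]
    for rec in transform(raw):
        print(rec)
```

Running each step at the point of collection, in the order parse, filter, sample, mask, means dropped records never incur masking cost and nothing unredacted leaves the source.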

“This is not just about doing what you used to do in the past, and doing it a little bit better. This is a new way to see this world of how we collect and manage our observability pipelines.”

Ben Kus, CTO, Box

Read Case Study

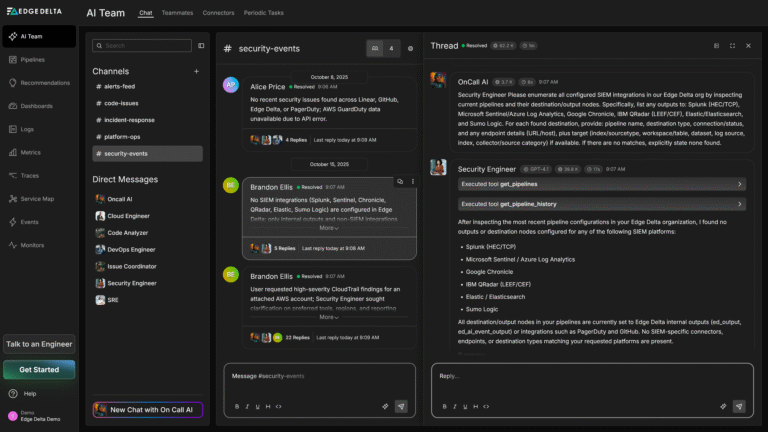

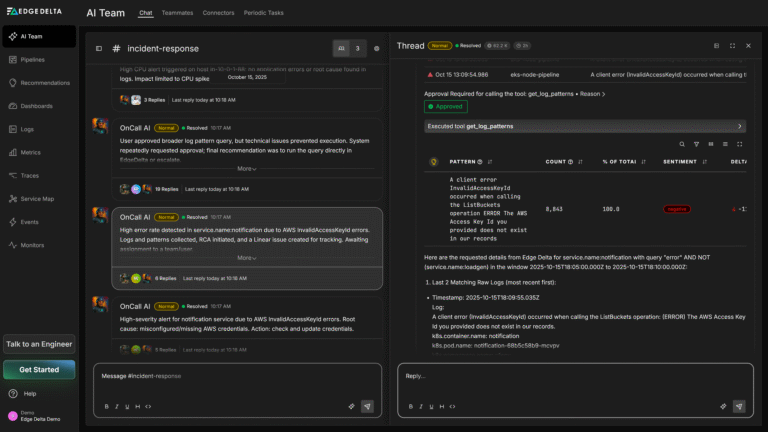

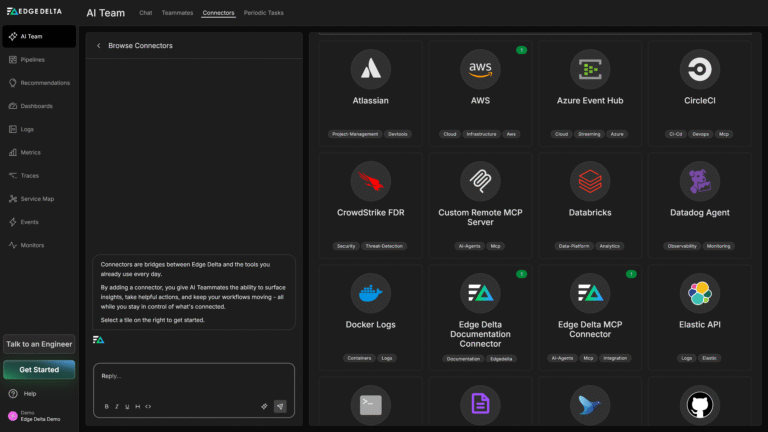

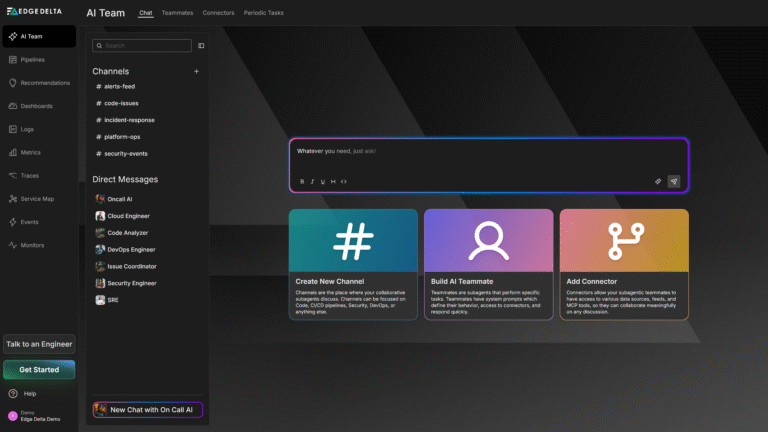

Join Engineering Teams That Are Embracing AI

Get started with minimal effort: add connectors and start talking with your out-of-the-box teammates right away.