Security Data Pipelines

The foundation that gives you control and visibility over your security data. Standardize, enrich, and stream data to security platforms and archives, and get a clear view into how data streams are configured, all in real time to outpace adversaries.

Safeguard your Environment

Control Your Security Data and Strengthen Compliance

Route Data Anywhere

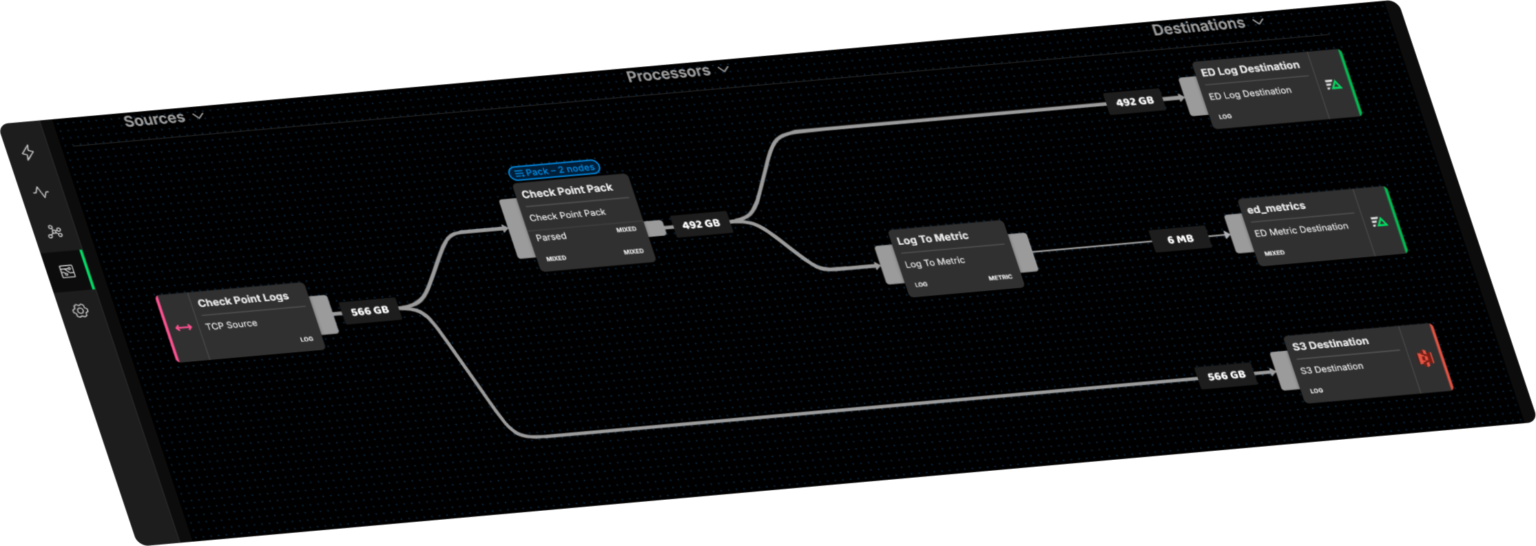

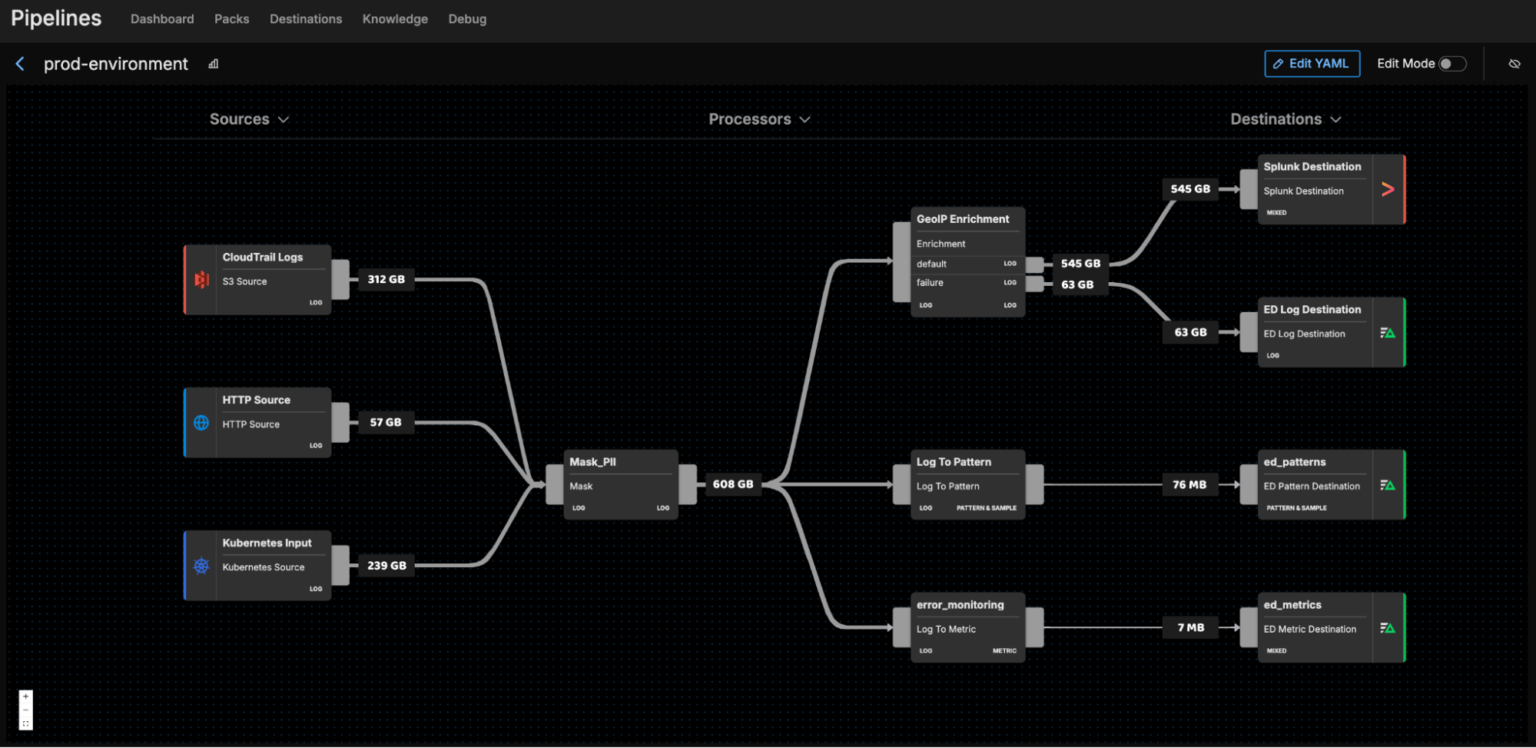

Tier data across multiple downstream destinations, including top-tier SIEM vendors as well as archival storage. Route a copy of all raw data to secure and efficient object storage for compliance and long-term analysis.
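As a rough illustration of the tiering idea above, the sketch below copies every raw event to an archive and forwards only higher-severity events to a SIEM. It is not Edge Delta's configuration or API: the `route_event` function, the `archive` and `siem` destination names, the `severity` field, and the severity ranking are all hypothetical and chosen only for this example.

```python
from typing import Dict, List

# Hypothetical severity ranking, assumed for illustration only.
SEVERITY_RANK: Dict[str, int] = {
    "debug": 0,
    "info": 1,
    "warning": 2,
    "error": 3,
    "critical": 4,
}


def route_event(event: Dict[str, str], siem_min_severity: str = "warning") -> List[str]:
    """Return the (hypothetical) destination names an event should be sent to."""
    # Every raw event is copied to archival object storage.
    destinations = ["archive"]

    # Events with a missing or unknown severity are treated as "info".
    rank = SEVERITY_RANK.get(event.get("severity", "info"), SEVERITY_RANK["info"])

    # Only events at or above the threshold are also forwarded to the SIEM.
    if rank >= SEVERITY_RANK[siem_min_severity]:
        destinations.append("siem")
    return destinations
```

With these assumptions, an `error` event is sent to both destinations, while a `debug` event is archived only.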

Meet Compliance Standards Effortlessly

Mask PII and other sensitive data locally as it’s created to minimize surface area. Satisfy compliance standards with ease and safeguard your customers, endpoints, and organization from cyber threats.
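To make the masking step concrete, here is a minimal sketch of redacting sensitive values from a log line before it leaves the host. It is an assumption-laden example, not Edge Delta's implementation: the `mask_pii` function, the two regular expressions, and the `[EMAIL]` and `[SSN]` tokens are hypothetical, and production rules would need to be vetted against your own data.

```python
import re

# Hypothetical patterns for illustration; they are intentionally simple.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def mask_pii(line: str) -> str:
    """Replace email addresses and US SSN-shaped strings with fixed tokens."""
    line = EMAIL_RE.sub("[EMAIL]", line)
    line = SSN_RE.sub("[SSN]", line)
    return line
```

Applying the mask at the source means downstream platforms and archives only ever receive the redacted line.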

Implement Hybrid Security Architectures

Keep all your security data on-prem and fully within your purview, combining the privacy and security of on-prem services with the power of the cloud.

Full Data Control

Defend Your Environment with Efficient Data Management

Enhance Monitoring Insights

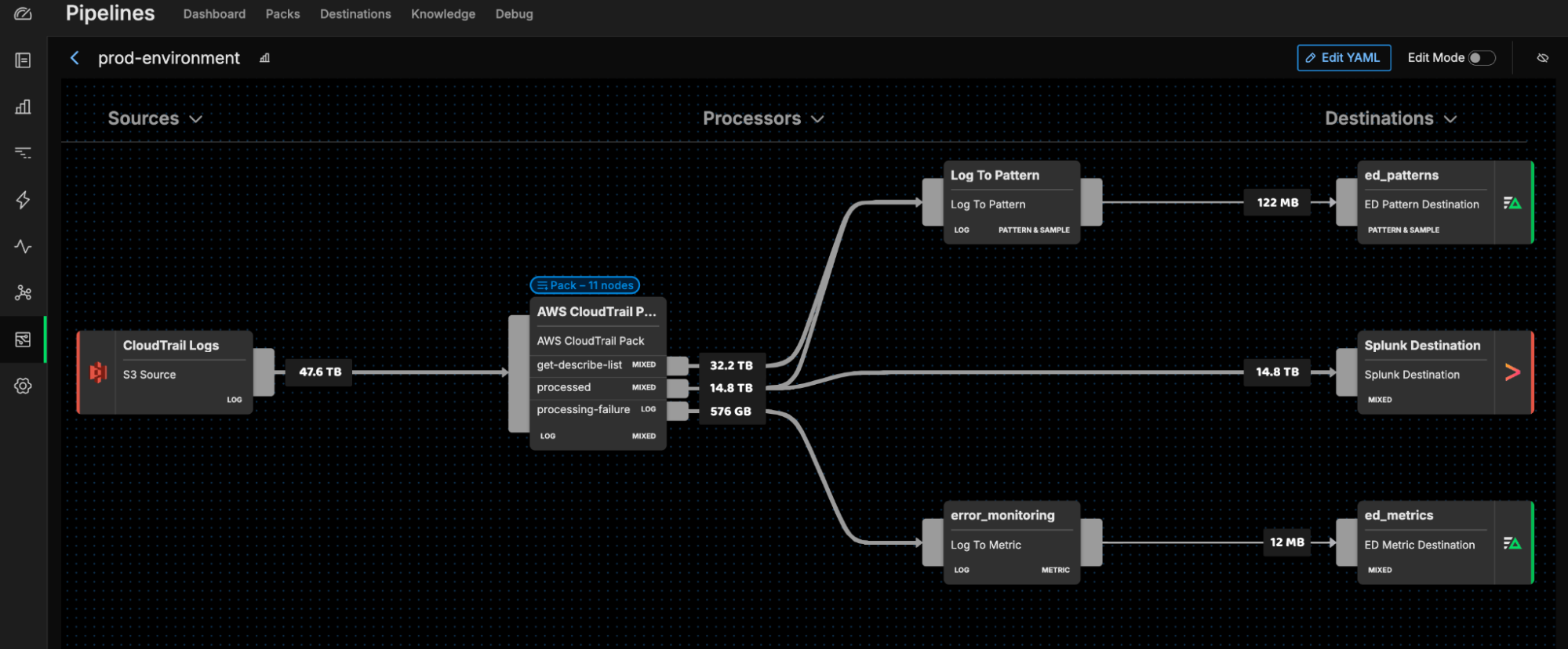

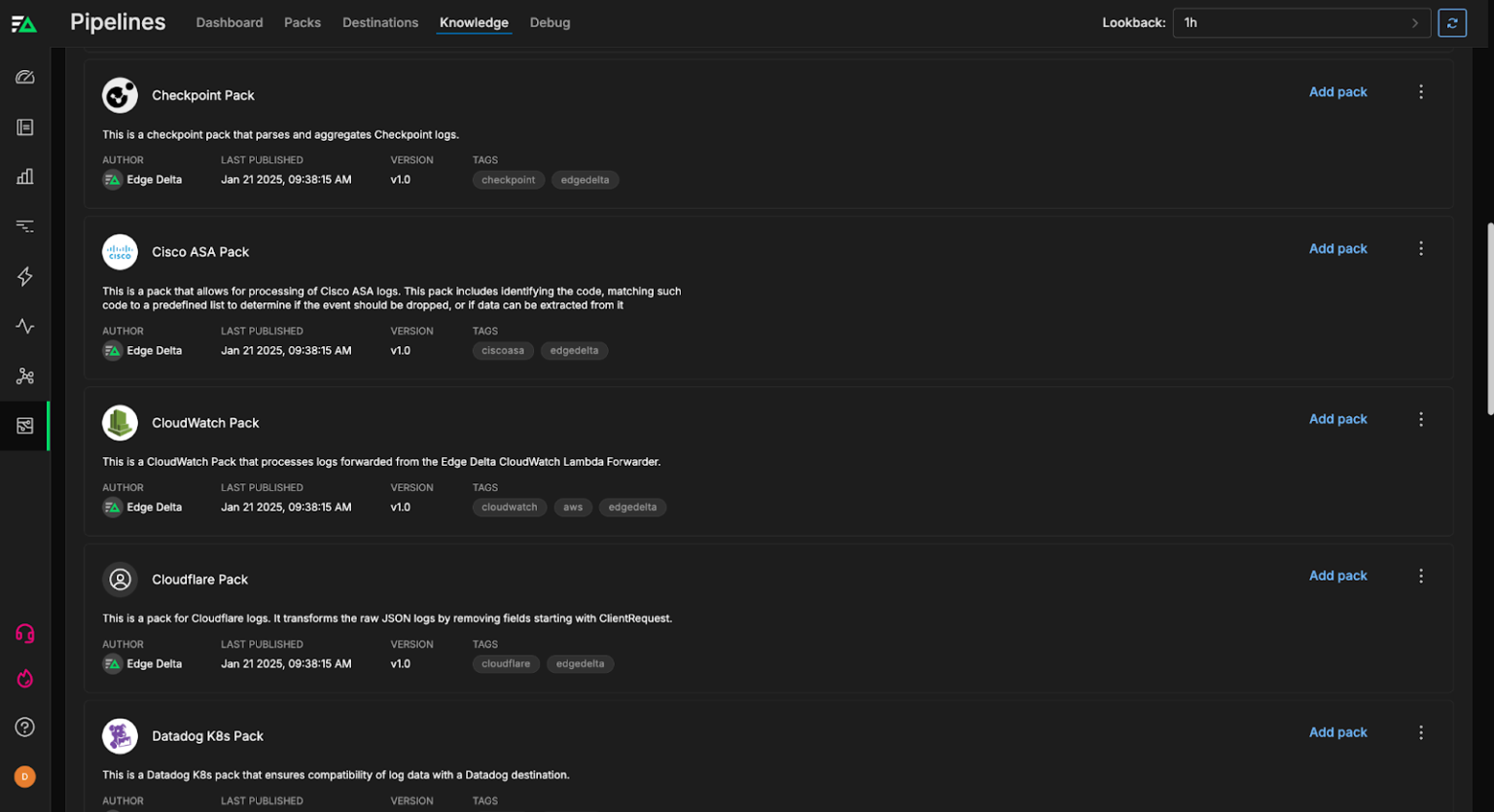

Leverage our collection of pre-built pipeline packs for security — including our CloudTrail pack, Palo Alto pack, FortiGate pack, and many more — to automatically process security data and improve analysis.

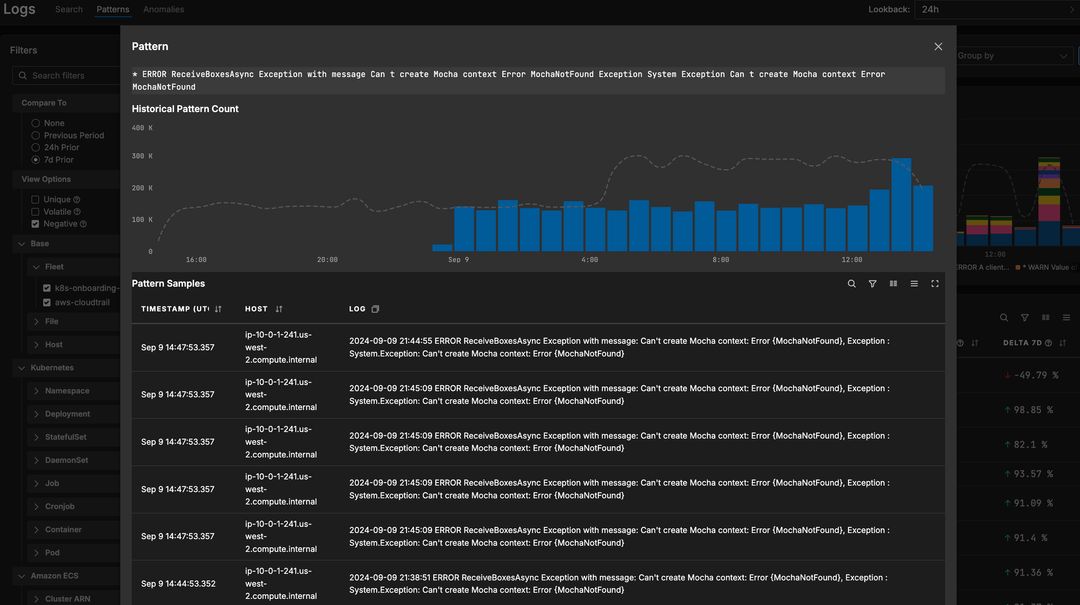

Unlock Pipeline Visibility

Easily modify and maintain your Security Data Pipelines. Test and deploy configuration changes in minutes to ensure consistent real-time monitoring and incident prevention.

Control Permissions

Enforce granular role-based access control (RBAC) on your data streams, a proactive and modern approach to securing your enterprise data from adversaries.

Learn more about Edge Delta Pipeline Packs

See Edge Delta in Action

Meet with an engineer to learn how you can control your security data and reduce risk with Edge Delta Pipelines.

Trusted By Teams That Manage Telemetry Data at Scale

“Edge Delta’s approach to this problem is key to keeping up with your rapidly growing footprint and ensuring full visibility and the ability to correlate across all machine data.”

Joan Pepin, CISO, Bigeye

“When we deployed Edge Delta, we saw anomalies and useful data immediately. Edge Delta helped us find things hours faster than we would have otherwise.”

Justin Head, VP of DevOps, Super League

Read Case Study

Securely Route Data from Source to Destination with Visibility and Control

Edge Delta supports 50+ integrations across analytics, alerting, storage, and more. We can help you consolidate agents and routing infrastructure and improve your cybersecurity data foundation.

Explore Integrations

Additional Resources

Resources

Next Generation Telemetry Pipelines: Low Cost, High Scalability

Use Edge Delta's Telemetry Pipelines to reduce spend and maximize telemetry efficiency.

Read Whitepaper

Resources

How to Reduce Observability Costs at Scale

Modern telemetry data volumes are exploding — are your costs too?

Read Ebook

Blog

Introducing Visual Pipelines: A Better Way to Manage Telemetry Pipelines

Today, we’re excited to announce Visual Pipelines.

Read blog

Join Engineering Teams That Are Embracing AI

Get started with minimal effort: add connectors and start working with your out-of-the-box teammates now.