Akamai is a cloud-based content delivery network (CDN) and security platform that enhances the performance, protection, and reliability of web applications and services. It is used by companies around the world, and helps over 7 million sites serve billions of users every day.

Akamai products produce large quantities of log data, which serve as detailed records that capture critical information about web traffic, user interactions, system performance, and much more.

However, analyzing Akamai logs can be quite tricky, as customers aren’t able to instrument source code to change the logs’ generated format. Instead, Akamai users must first properly process Akamai logs after they’ve been created before performing any log-based analysis.

This processing step can be rather cumbersome, which is why we’re excited to announce the release of our new pre-built Akamai Pipeline Pack.

Edge Delta’s Akamai Pipeline Pack is a specialized collection of processors built specifically to handle logs in the Akamai format, enabling you to transform your log data out of the box to fuel analysis. Our packs are built to easily slot into your Edge Delta pipelines — all you need to do is route the Akamai source data into the Akamai pack and let it begin processing.

If you’re unfamiliar with Edge Delta’s Telemetry Pipelines, they are the only pipeline product on the market built to handle log, metric, trace, and event data. They are an edge-based pipeline solution that begins processing data as it’s created at the source, providing you with greater control over your telemetry data at a far lower cost.

How Does the Akamai Pack Work?

The Edge Delta Akamai Pack streamlines the transformation process by automatically modifying Akamai-structured JSON log data to extract only what you need. Once processed, these logs can be easily filtered, aggregated, and analyzed within the observability platforms of your choosing.

The Akamai Pack consists of a few different processing steps, each of which plays a vital role in allowing teams to use Akamai logs to gain crucial insights into their web systems and security events.

Here is a quick breakdown of each processor node within the pack:

Drop Empty Fields

The Akamai pack features two nodes that enhance log efficiency by eliminating all empty JSON fields, resulting in cleaner data and more efficient transfer and storage. With these nodes, you can:

- Handle all empty log fields by leveraging a regex pattern to identify fields with “-” values and replace them with a comma. This reduces log size by eliminating all non-existent data.

- Remove the empty field at the end of each JSON object and replace it with a closing brace via regex, to ensure each object is cleanly terminated.
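To make the two cleanup steps concrete, here is a minimal Python sketch of the same idea. The regex patterns and the `drop_empty_fields` name are illustrative assumptions, not the pack's actual configuration, and the sketch deletes each empty field together with its trailing comma rather than reproducing the pack's exact replacement.

```python
import json
import re

# Hypothetical patterns for illustration only; the pack's real regexes may differ.
# Step 1: a "key":"-" pair that is followed by a comma (an empty field mid-object).
EMPTY_FIELD = re.compile(r'"[^"]+"\s*:\s*"-"\s*,\s*')
# Step 2: a "key":"-" pair sitting right before the closing brace.
EMPTY_LAST_FIELD = re.compile(r',?\s*"[^"]+"\s*:\s*"-"\s*\}')


def drop_empty_fields(raw: str) -> str:
    """Remove fields whose value is "-" from a raw JSON log line."""
    cleaned = EMPTY_FIELD.sub("", raw)            # drop empty fields and their commas
    return EMPTY_LAST_FIELD.sub("}", cleaned)     # terminate the object cleanly


raw = '{"reqPath":"/index.html","UA":"-","cacheable":"1","cookie":"-","queryStr":"-"}'
cleaned = drop_empty_fields(raw)
print(cleaned)              # {"reqPath":"/index.html","cacheable":"1"}
print(json.loads(cleaned))  # still valid JSON after both passes
```

Working on the raw string keeps the step cheap, but a simplified pattern like this assumes values do not contain escaped quotes.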

Parse JSON Attributes

The Parse JSON node flattens JSON objects with nested attributes to simplify future analysis. With this node, you can:

- Convert nested JSON attributes from the `item["body"]` field into standalone JSON object attributes, which simplifies access to individual fields for further processing and querying.
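As a rough illustration of this step, the sketch below parses a JSON string held in `item["body"]` and promotes its keys to standalone attributes. The `parse_body` function and the choice to store results under `item["attributes"]` are assumptions made for the example; the pack itself is configured rather than coded this way.

```python
import json


def parse_body(item: dict) -> dict:
    """Promote the JSON fields inside item["body"] to item["attributes"]."""
    try:
        parsed = json.loads(item["body"])
    except (KeyError, TypeError, json.JSONDecodeError):
        return item  # leave logs without a parseable body untouched
    if isinstance(parsed, dict):
        # Assumed target location: item["attributes"], created if missing.
        item.setdefault("attributes", {}).update(parsed)
    return item


log = {"body": '{"reqPath":"/index.html","statusCode":"200"}'}
print(parse_body(log)["attributes"]["statusCode"])  # 200
```

Once the fields live outside the body string, later steps can address them by name instead of re-parsing the log.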

Drop Unnecessary Fields

This filter node further reduces data size and noise by removing any extraneous fields. With this node, you can:

- Remove fields which aren’t necessary for analysis, including the `item.attributes.cacheable` and `item.attributes.version` fields. Removing these fields helps focus on the most relevant data, conserving resources and optimizing performance.
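In code terms, the effect is roughly the following. This is a hedged sketch: `drop_fields` and the `UNNEEDED` tuple are hypothetical names, and only the two field names come from the description above.

```python
# Field names taken from the post; everything else here is illustrative.
UNNEEDED = ("cacheable", "version")


def drop_fields(item: dict, fields=UNNEEDED) -> dict:
    """Remove attributes that are not needed for downstream analysis."""
    attributes = item.get("attributes", {})
    for name in fields:
        attributes.pop(name, None)  # ignore fields that are already absent
    return item


log = {"attributes": {"reqPath": "/index.html", "cacheable": "1", "version": "2"}}
print(drop_fields(log))  # {'attributes': {'reqPath': '/index.html'}}
```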

Extract Path Field

The Akamai pack concludes with a node which extracts key path information from the JSON object. With this node, you can:

- Extract the `reqPath` field from the JSON logs, a crucial field that indicates which resources were requested in association with the given Akamai log. Extracting this field aids in deeper analysis such as web traffic pattern recognition or security event tracking. Importantly, logs where this extraction fails are still retained (`keep_log_if_failed: true`), ensuring no potentially valuable data is lost.
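The retain-on-failure behavior is easiest to see in a small sketch. The regex, the `extract_req_path` function, and reading from `item["body"]` are all assumptions for illustration; only the `reqPath` field name and the `keep_log_if_failed` setting come from the pack description.

```python
import re

# Hypothetical pattern; the pack's actual extraction rule is not shown in this post.
REQ_PATH = re.compile(r'"reqPath"\s*:\s*"(?P<reqPath>[^"]*)"')


def extract_req_path(item: dict, keep_log_if_failed: bool = True):
    """Copy reqPath into item["attributes"]; optionally drop logs without it."""
    match = REQ_PATH.search(item.get("body", ""))
    if match:
        item.setdefault("attributes", {})["reqPath"] = match.group("reqPath")
        return item
    # Extraction failed: keep the log unchanged, or drop it by returning None.
    return item if keep_log_if_failed else None


ok = extract_req_path({"body": '{"reqPath":"/index.html","statusCode":"200"}'})
print(ok["attributes"]["reqPath"])                 # /index.html
print(extract_req_path({"body": "no path here"}))  # {'body': 'no path here'}
```

With `keep_log_if_failed=False` the second call would return `None`, which is the data loss the pack's default avoids.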

For a more in-depth understanding of these processors and the Akamai Pipeline Pack, check out our full Akamai Pipeline Pack documentation.

Akamai Pack in Practice

Once you’ve added the Akamai Pack to your Edge Delta pipeline, you can route the processed log streams anywhere you choose.

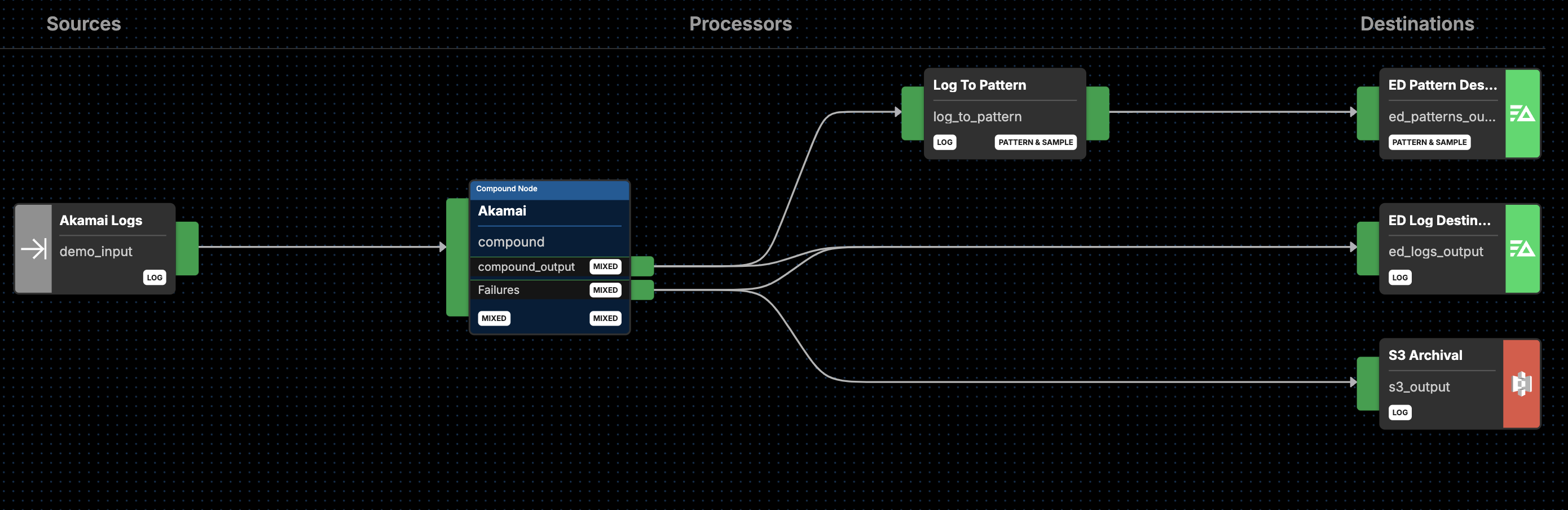

For instance, you can route your newly formatted Akamai logs to ed_patterns_output to leverage Edge Delta’s unique pattern analysis, and to ed_logs_output for log search and analysis with Edge Delta’s Log Management platform, as shown below:

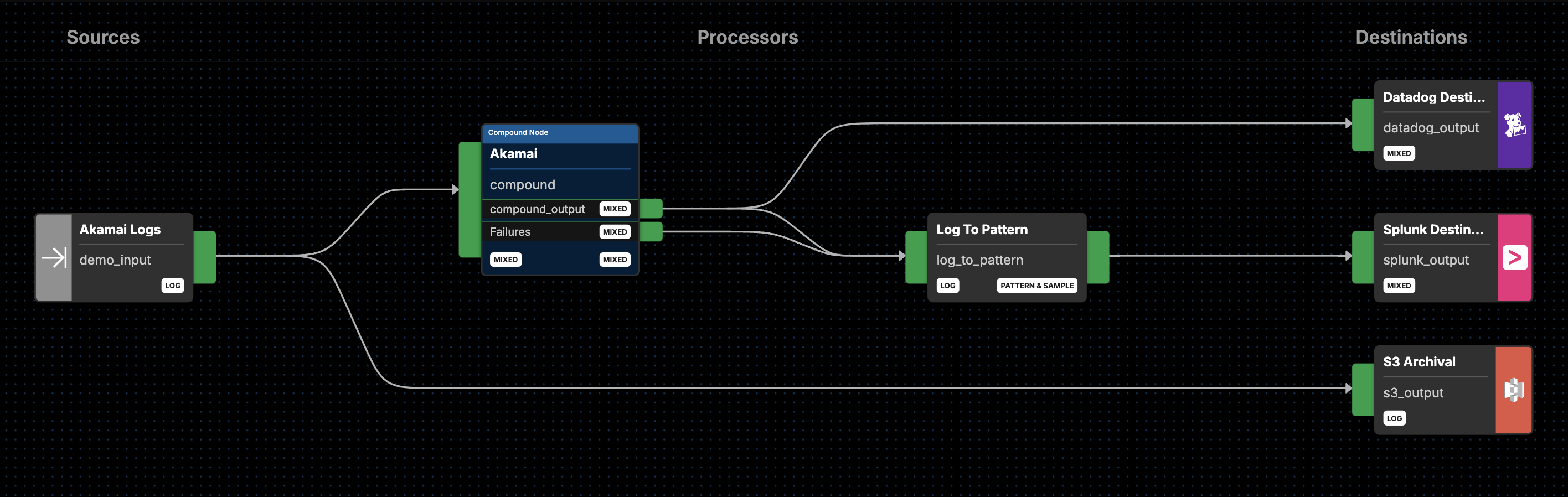

Alternatively, you can route your Akamai logs to other downstream destinations, including (but not limited to) Datadog and Splunk. As always, you can easily route a copy of all raw data directly into S3 as well.

Getting Started

Ready to see it in action? Get started with our Akamai Pipeline Pack today. Check out our packs list and add the Akamai pack to any running pipeline, or visit our pipeline sandbox to try it out for free!