Webinar

Observability Pipelines: Monitoring Tomorrow’s Applications at Scale

Sep 14, 2023

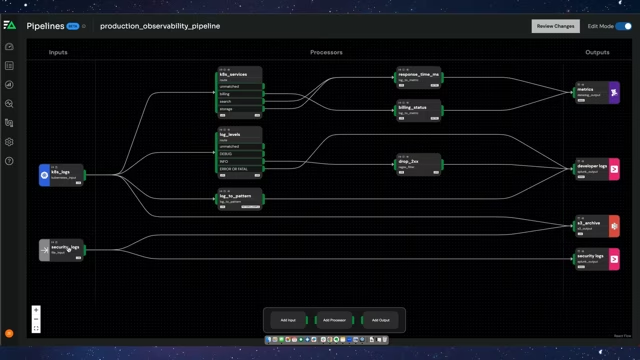

David Wynn, Principal Solutions Architect at Edge Delta, discusses a real bottleneck in monitoring applications at scale and explains why DevOps teams have been turning to observability pipelines.

More Videos