After three decades in cybersecurity, I’ve come to accept that log pipelines are rarely anyone’s favorite part of the SOC. They’re complex, fragile, and endlessly frustrating. Yet they’re also foundational — because everything else, from detection to compliance to forensics, depends on the quality of the telemetry data we collect.

The most persistent obstacle I have seen across security teams is chaotic data. There has been an explosion of modern tools and schemas, but firewalls, VPNs, IDS sensors, endpoint agents, and appliances all still emit logs in proprietary or poorly structured formats. These logs aren’t going away anytime soon. And they’re often the most valuable data we have.

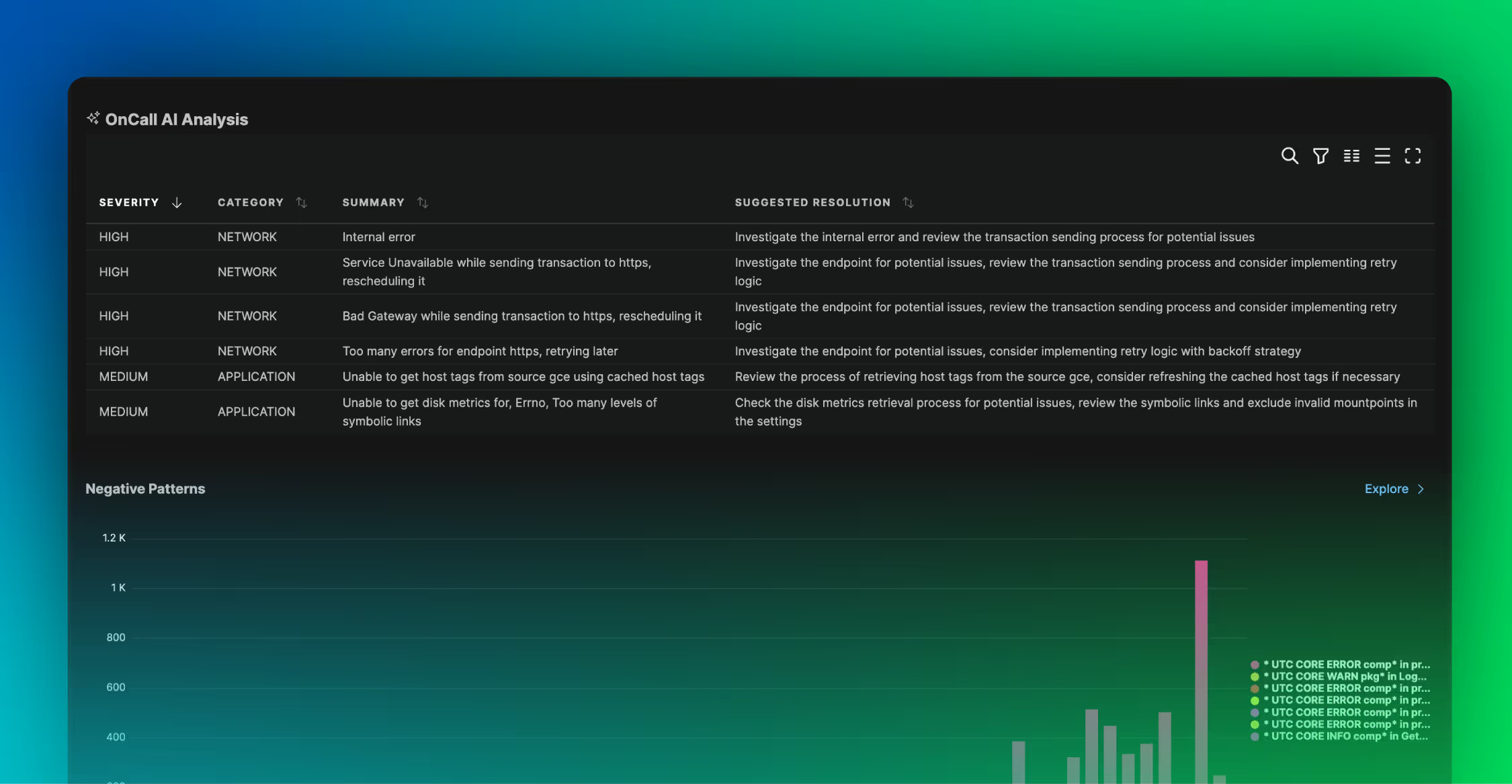

Want Faster, Safer, More Actionable Root Cause Analysis?

With Edge Delta’s out-of-the-box AI agent for SRE, teams get clearer context on the alerts that matter and more confidence in the overall health and performance of their apps.

But here’s the problem: these formats were never built for analytics. They were built for human eyes, device consoles, and telnet sessions. They don’t play well with modern detection content, open schemas like OCSF, or cloud-native SIEMs that expect JSON and structured fields. And trying to build correlation logic across these disparate formats is a recipe for inconsistency and missed detections.

This is the real-world challenge Edge Delta solves: making legacy telemetry data usable in modern security operations.

The Problem with Proprietary and Legacy Logs

Security data today is both richer and messier than ever. While cloud and SaaS platforms increasingly emit well-structured logs using standards like OCSF or ECS, the vast majority of security telemetry data still originates from devices and systems built long before schema convergence was a thing.

Legacy log formats — whether it’s syslog from a perimeter firewall, custom XML from a VPN concentrator, or CSV from an older DLP appliance — pose a few critical challenges:

- Lack of structure: Unstructured or semi-structured logs are difficult to parse consistently, leading to fragile detection rules and false negatives.

- Incompatibility with modern SIEMs: Many cloud-native SIEMs rely on well-formed JSON and open schemas. Proprietary logs require custom connectors or brittle parsing rules to even be ingestible.

- Operational overhead: Security teams waste countless hours writing regex, tuning connectors, and remapping fields — time that could be spent improving detection workflows or investigating incidents.

- Inconsistent field naming: Different log sources use different labels for the same concept (e.g., `src_ip` vs. `source-address`), making correlation difficult.

- Cost inefficiency: Sending redundant or unparsed logs to your SIEM consumes licensing volume without delivering analytical value.
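
To make the field-naming problem concrete, here is a minimal Python sketch of alias-based renaming. The alias table, the sample events, and the `canonicalize` helper are all hypothetical and exist only for illustration; this is not Edge Delta’s implementation.

```python
# Hypothetical alias table: each vendor-specific label maps to one canonical name.
FIELD_ALIASES = {
    "src_ip": "src_ip",
    "source-address": "src_ip",
    "dst_ip": "dst_ip",
    "destination-address": "dst_ip",
}


def canonicalize(record):
    """Rename known aliases to canonical names; leave unknown fields unchanged."""
    return {FIELD_ALIASES.get(key, key): value for key, value in record.items()}


# Two made-up events describing the same host under different labels.
firewall_event = {"src_ip": "10.0.0.5", "action": "deny"}
vpn_event = {"source-address": "10.0.0.5", "user": "alice"}

normalized = [canonicalize(e) for e in (firewall_event, vpn_event)]

# After renaming, a single lookup on "src_ip" covers both sources.
matches = [e for e in normalized if e.get("src_ip") == "10.0.0.5"]
```

Without the renaming step, the same lookup would silently miss the VPN event, which is exactly the kind of false negative described above.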

Most teams respond either by filtering these logs out entirely or by sending them “as-is” and accepting the analytics blind spots (and potentially huge costs).

Edge Delta offers a third option: normalize them at the edge.

Format-Neutral Normalization with Edge Delta

Edge Delta’s Security Data Pipelines are built to ingest logs from any source and in any format — structured, unstructured, proprietary, or open — and map that data onto a clean, standardized schema before it hits your downstream tools.

This transformation is powered by three core capabilities:

- Source-Aware Parsing: Edge Delta ships with a library of pre-built packs for hundreds of known log sources, including legacy firewalls, networking gear, endpoint agents, and identity systems. These packs automatically parse, normalize, and enrich security data to extract meaningful structure from even the messiest syslog or appliance-specific formats. For custom or uncommon logs, you can build and tune your own pack using YAML-based logic or the intuitive UI.

- Real-Time Schema Mapping: Parsed events are immediately mapped into structured schemas, including OCSF, ECS, or any custom schema your organization defines. This mapping ensures that logs from legacy systems use the same field names, types, and structures as logs from modern cloud-native platforms. The result is a unified data stream your SIEM can actually use.

- Enrichment and Context Injection: Logs can be enriched in-stream with external context, such as GeoIP lookups, threat intel tags, user metadata, or asset information, before they reach the SIEM. This enables richer detections and faster investigations without adding cost to your ingestion pipeline.

These capabilities turn legacy log formats from a liability into a strength — providing valuable context and coverage in investigations, detections, and compliance workflows.
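
For readers who think in code, the three stages can be sketched generically in Python. This is an illustration under stated assumptions, not Edge Delta’s pack format or API: the sample log line, the `parse`, `map_to_schema`, and `enrich` helpers, and the asset table are all hypothetical, and the nested field names only loosely follow OCSF conventions rather than forming a complete, validated OCSF event.

```python
import re

# Hypothetical legacy firewall line in key=value form (not from a real device).
RAW = "<134>Jan 12 10:15:01 fw01 action=deny src=10.0.0.5 spt=51512 dst=203.0.113.9 dpt=443"

KV_PATTERN = re.compile(r"(\w+)=(\S+)")


def parse(line):
    """Stage 1: pull key=value pairs out of the unstructured line."""
    return dict(KV_PATTERN.findall(line))


def map_to_schema(fields):
    """Stage 2: rename and type the fields into an OCSF-style nested layout (simplified)."""
    return {
        "src_endpoint": {"ip": fields["src"], "port": int(fields["spt"])},
        "dst_endpoint": {"ip": fields["dst"], "port": int(fields["dpt"])},
        "action": fields["action"],
    }


# Hypothetical asset inventory used as the enrichment source.
ASSETS = {"10.0.0.5": {"owner": "alice", "criticality": "high"}}


def enrich(event):
    """Stage 3: attach asset context when the source IP is known."""
    asset = ASSETS.get(event["src_endpoint"]["ip"])
    return {**event, "asset": asset} if asset else event


event = enrich(map_to_schema(parse(RAW)))
```

Keeping the stages as separate functions mirrors the separation described above: a new log source only needs a new `parse` step, while mapping and enrichment stay shared.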

Normalize Once, Route Anywhere

A key differentiator of Edge Delta’s architecture is its vendor-agnostic routing model. Once logs are parsed and normalized, they can be delivered to any downstream destination:

- SIEMs (Splunk, Sentinel, QRadar, Elastic, Exabeam, etc.)

- Data lakes and cold storage (Amazon S3, Snowflake, BigQuery)

- Real-time alerting tools (Slack, PagerDuty, Teams)

- Dual destinations (e.g., raw logs to archive + normalized events to SIEM)

This flexibility allows security teams to route only high-value, normalized events to their SIEM — reducing license consumption — while archiving full-fidelity logs for compliance or future forensic needs.
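
A rough Python sketch of that dual-destination pattern follows. The two in-memory lists stand in for an archive bucket and a SIEM endpoint, and the `is_high_value` rule is a made-up example of a filter; none of this reflects Edge Delta’s actual routing configuration.

```python
archive = []  # stands in for object storage such as Amazon S3
siem = []     # stands in for a SIEM ingestion endpoint


def is_high_value(event):
    """Hypothetical filter: forward denied connections, skip routine allows."""
    return event.get("action") == "deny"


def route(raw_line, normalized):
    """Always archive the raw line; forward the normalized event only if it matters."""
    archive.append(raw_line)
    if is_high_value(normalized):
        siem.append(normalized)


# Made-up (raw line, normalized event) pairs.
pairs = [
    ("fw01 action=allow src=10.0.0.5", {"action": "allow", "src_ip": "10.0.0.5"}),
    ("fw01 action=deny src=10.0.0.7", {"action": "deny", "src_ip": "10.0.0.7"}),
    ("fw01 action=allow src=10.0.0.9", {"action": "allow", "src_ip": "10.0.0.9"}),
]

for raw_line, normalized in pairs:
    route(raw_line, normalized)
```

In this toy run, all three raw lines land in the archive while only the single denied connection reaches the SIEM.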

Why Normalization at the Edge Is Strategic

Too often, normalization happens after the fact — inside the SIEM, or not at all. But shifting that process upstream, closer to the source, offers several key advantages:

- Better performance: SIEMs ingest less noise and perform better on searches and alerts.

- Lower costs: Filtering and summarizing at the edge reduces ingest volume by up to 95% in some environments.

- Stronger detections: Unified schemas enable more consistent, reusable detection logic across data sources.

- Faster time to value: Onboarding a new log source doesn’t require a new connector or parser inside the SIEM.

- Migration readiness: Because Edge Delta is format-neutral, it decouples your data pipeline from your SIEM, easing transitions or hybrid strategies.

This architecture aligns directly with the “pipeline-first” model now emerging as the new SOC backbone.
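
To illustrate how summarizing at the edge can shrink what reaches the SIEM, here is a small Python sketch that collapses repeated events into counted records. The input is synthetic and deliberately repetitive, so the resulting ratio is illustrative only and not a benchmark; it also measures event count rather than bytes. The `summarize` helper is hypothetical.

```python
from collections import Counter

# Synthetic input: 1,000 normalized events, mostly repeats of the same flow.
events = (
    [{"src_ip": "10.0.0.5", "dst_ip": "203.0.113.9", "action": "allow"}] * 950
    + [{"src_ip": "10.0.0.7", "dst_ip": "198.51.100.4", "action": "deny"}] * 50
)


def summarize(batch):
    """Collapse identical (src, dst, action) tuples into one record with a count."""
    counts = Counter((e["src_ip"], e["dst_ip"], e["action"]) for e in batch)
    return [
        {"src_ip": src, "dst_ip": dst, "action": action, "count": n}
        for (src, dst, action), n in counts.items()
    ]


summary = summarize(events)

# Fraction of events that no longer need to be sent downstream.
reduction = 1 - len(summary) / len(events)
```

Real traffic is far less uniform than this sample, so actual savings depend heavily on how repetitive a given source is and on which fields you choose to group by.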

Preparing for an Open-Format Future — Without Abandoning the Past

The shift toward open schemas like OCSF is real, and accelerating. OCSF already boasts over 900 contributors and growing adoption across cloud providers, analytics tools, and security vendors. But adoption across legacy infrastructure will take years, if not decades.

Edge Delta helps bridge that gap. It allows security teams to embrace open schemas and modern workflows today, even as they continue to operate within the constraints of legacy hardware, vendor-specific formats, and hybrid environments.

In a world where security data is fragmented by default, normalization is not optional. It’s foundational. Edge Delta delivers that foundation — with the speed, scale, and flexibility that modern SOCs demand.