As organizations accelerate their cloud migrations, many existing applications must be modernized in the process, and this transition creates challenges for DevOps teams.

For example, developers might need to shift to a microservice-based architecture to improve cloud reliability and scalability, while Ops teams deploy those applications across expansive hybrid and multi-cloud environments. Istio, a widely adopted service mesh that acts as a modern networking layer for managing microservices, helps streamline these tasks.

Edge Delta’s Istio Pack for Telemetry Pipelines provides more comprehensive visibility into traffic patterns and enables more proactive monitoring, so teams can further improve the performance of their applications.

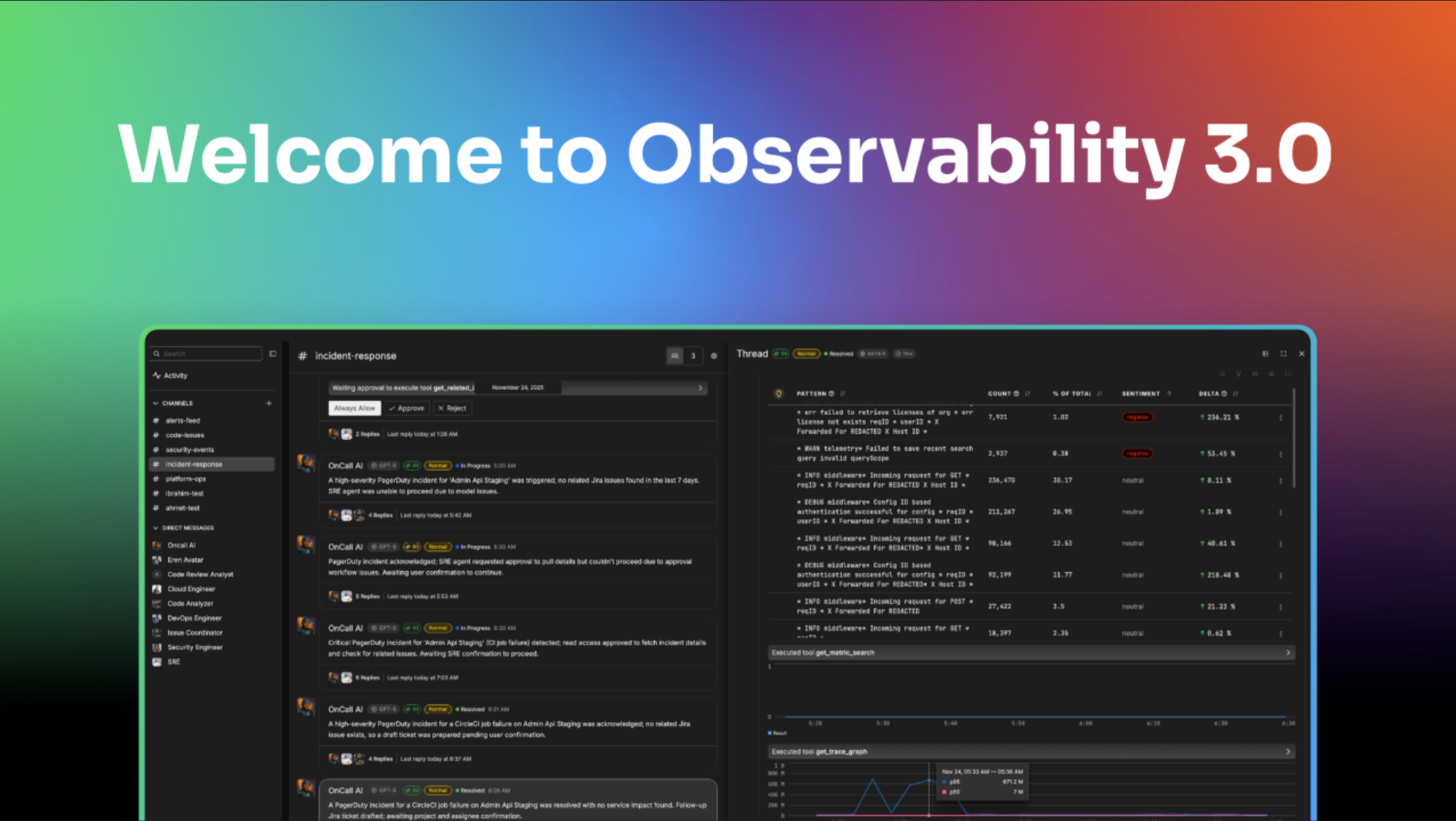

How the Istio Pack Works

The Istio Pack analyzes incoming HTTP logs to deliver deeper insights into service mesh traffic. After first extracting key data fields, the pack classifies them by HTTP status codes, which facilitates focused analysis of successful requests, client errors, and server errors.

Once your Istio logs are fully processed, the pack delivers eight different outputs for exporting to any number of downstream destinations.

Below we’ll outline each processing step, so you can follow the journey of the Istio logs through the transformations that occur within the pack.

Processing Pathway: Extraction

Once your Istio logs begin flowing into Edge Delta, the first stop is the grok_extract_fields node, which uses a Grok pattern to extract fields and structure them as attributes. The extracted fields include:

- timestamp
- verb
- request
- response_code

This transformation makes log fields easier to search, analyze, and visualize.
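To make the extraction step concrete, here is a minimal Python sketch of the same idea using a regular expression with named groups. The pack's actual Grok pattern is not shown in this post, so the pattern, the `extract_fields` helper, and the sample log line are all assumptions for illustration only.

```python
import re
from typing import Optional

# Hypothetical pattern for illustration; it is NOT the pack's Grok pattern.
# It captures only the four fields listed above from an access-log-style line.
ACCESS_LOG = re.compile(
    r'^\[(?P<timestamp>[^\]]+)\]\s+'                      # [2020-11-25T21:26:18.409Z]
    r'"(?P<verb>[A-Z]+)\s+(?P<request>\S+)\s+[^"]*"\s+'   # "GET /status/418 HTTP/1.1"
    r'(?P<response_code>\d{3})\b'                         # 418
)

def extract_fields(line: str) -> Optional[dict]:
    """Return the captured fields as an attributes dict, or None if the line does not match."""
    match = ACCESS_LOG.match(line)
    return match.groupdict() if match else None

# Illustrative sample line (assumed format, truncated for brevity).
sample = '[2020-11-25T21:26:18.409Z] "GET /status/418 HTTP/1.1" 418 - via_upstream - "-" 0 135 4 4'
attributes = extract_fields(sample)
```

Every captured value is a string at this point, which is why later steps convert `response_code` to an integer before comparing it.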

Processing Pathway: Timestamp Transformation

Next, the log_transform_timestamp node converts each log’s timestamp attribute into Unix milliseconds. The result of the conversion is then written directly to the item["timestamp"] field, which keeps log timing accurate.
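As a rough illustration of what this conversion does, the Python sketch below parses a UTC timestamp string and turns it into Unix milliseconds. The `to_unix_millis` helper and the sample value are hypothetical; the sketch only mirrors the behavior described above and is not Edge Delta's implementation.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

def to_unix_millis(timestamp: str) -> int:
    """Convert a UTC timestamp such as 2020-11-25T21:26:18.409Z to Unix milliseconds.

    Assumption: the input always carries a fractional-seconds part and a trailing Z.
    """
    parsed = datetime.strptime(timestamp, "%Y-%m-%dT%H:%M:%S.%fZ")
    parsed = parsed.replace(tzinfo=timezone.utc)
    # Integer division of timedeltas avoids floating-point rounding errors.
    return (parsed - EPOCH) // timedelta(milliseconds=1)

# Mirror the upsert: copy the converted value into the item's top-level timestamp.
item = {"attributes": {"timestamp": "2020-11-25T21:26:18.409Z"}}
item["timestamp"] = to_unix_millis(item["attributes"]["timestamp"])
```

The pack performs this step with the node configuration that follows.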

    - name: log_transform_timestamp
      type: log_transform
      transformations:
        - field_path: item["timestamp"]
          operation: upsert
          value: convert_timestamp(item["attributes"]["timestamp"], "2006-01-02T15:04:05.999999Z", "Unix Milli")

Processing Pathway: Status Code Based Routing

Logs then pass through the status_code_router, which sorts them into separate routes based on their HTTP response_code:

- Success Path: Log entries with a response_code in the range of 200 to 299 are labeled a success. In this case, the log is routed to the success path, and the evaluation stops there.
- Client Error Path: If a response_code is between 400 and 499, the log is routed to the client_error path.
- Server Error Path: When a response_code lands within the 500 to 599 range, a server-side error has been detected. The log is then sent to the server_error path.
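The routing rules above can be sketched as a short first-match lookup in Python. The `route` function and `ROUTES` table are hypothetical names used only for illustration; the path names and ranges come from the list above.

```python
from typing import Optional

# (path name, lowest code, highest code), evaluated in order.
ROUTES = [
    ("success", 200, 299),
    ("client_error", 400, 499),
    ("server_error", 500, 599),
]

def route(item: dict) -> Optional[str]:
    """Return the first path whose range contains the response code, else None."""
    # Extracted attributes are strings, so convert before comparing.
    code = int(item["attributes"]["response_code"])
    for path, low, high in ROUTES:
        if low <= code <= high:
            return path  # stop at the first match
    return None  # e.g. 1xx or 3xx codes match none of the three paths

example = route({"attributes": {"response_code": "404"}})
```

In this sketch, codes outside the three ranges, such as 301, return None. How the pack itself treats unmatched logs is not covered here.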

    - name: status_code_router
      type: route
      paths:
        - path: success
          condition: int(item["attributes"]["response_code"]) >= 200 && int(item["attributes"]["response_code"]) <= 299
          exit_if_matched: true
        - path: client_error
          condition: int(item["attributes"]["response_code"]) >= 400 && int(item["attributes"]["response_code"]) <= 499
          exit_if_matched: true
        - path: server_error
          condition: int(item["attributes"]["response_code"]) >= 500 && int(item["attributes"]["response_code"]) <= 599
          exit_if_matched: true

Processing Pathway: Log Pattern Identification

The log_to_pattern node detects common patterns within your logs and provides a summary by clustering similar logs together.
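Edge Delta's clustering algorithm is not described in this post, so the Python sketch below swaps in a deliberately naive heuristic: it masks runs of digits and groups lines that share the resulting template. The `cluster` function is hypothetical, and reading the two parameters as "keep the largest N clusters" and "keep up to K samples per cluster" is an assumption based on their names.

```python
import re
from collections import defaultdict

def to_template(line: str) -> str:
    """Mask runs of digits so lines that differ only in numbers share one template."""
    return re.sub(r"\d+", "<NUM>", line)

def cluster(lines, num_of_clusters=10, samples_per_cluster=5):
    """Group lines by template and summarize the largest groups."""
    groups = defaultdict(list)
    for line in lines:
        groups[to_template(line)].append(line)
    # Largest clusters first; sorted() is stable, so ties keep first-seen order.
    largest = sorted(groups.items(), key=lambda kv: len(kv[1]), reverse=True)
    return [
        {
            "pattern": template,
            "count": len(members),
            "samples": members[:samples_per_cluster],
        }
        for template, members in largest[:num_of_clusters]
    ]

summary = cluster(["GET /a 200 12", "GET /a 200 15", "POST /b 500 0"])
```

Real pattern detection is considerably more sophisticated, but the output has the same shape: a small set of patterns, each with a count and a few representative samples.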

    - name: log_to_pattern
      type: log_to_pattern
      num_of_clusters: 10
      samples_per_cluster: 5

Processing Pathway: Log to Metric Conversion

Lastly, remaining logs run through Log to Metric nodes — client_error_l2m, server_error_l2m, and success_l2m — which track and report metrics related to bytes sent, log counts, and status codes.

Each node reports its metrics once per interval, here 1m0s (one minute), and aggregates them over that interval. The metric_name identifies what is being measured: in the client_error_l2m node, for example, the name http_client_errors makes clear that the metrics pertain to client-side HTTP errors.

Each node configures two dimension groups that capture the same attributes from the log entry: downstream_remote_address, verb, request, and response. In the client_error_l2m node, one group sums bytes_sent and the other counts log entries.
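To show what aggregating by dimensions means in practice, here is a minimal Python sketch that processes the logs from a single interval and produces a count and a bytes_sent sum for each unique combination of dimension values. The `aggregate` function and the sample records are hypothetical, and the sketch does not reproduce how Edge Delta names or emits the resulting metrics.

```python
from collections import defaultdict

# Attributes used as metric dimensions, as described above.
DIMENSIONS = ("downstream_remote_address", "verb", "request", "response")

def aggregate(items):
    """Aggregate one interval's logs into per-dimension counts and bytes_sent sums."""
    counts = defaultdict(int)
    byte_sums = defaultdict(float)
    for item in items:
        attrs = item["attributes"]
        # Dimension values are stringified so they can act as a grouping key.
        key = tuple(str(attrs[name]) for name in DIMENSIONS)
        counts[key] += 1
        byte_sums[key] += float(attrs["bytes_sent"])
    return dict(counts), dict(byte_sums)

# Illustrative records for one interval (values are made up).
logs = [
    {"attributes": {"downstream_remote_address": "10.0.0.1", "verb": "GET",
                    "request": "/cart", "response": 404, "bytes_sent": "120"}},
    {"attributes": {"downstream_remote_address": "10.0.0.1", "verb": "GET",
                    "request": "/cart", "response": 404, "bytes_sent": "80"}},
    {"attributes": {"downstream_remote_address": "10.0.0.2", "verb": "POST",
                    "request": "/login", "response": 401, "bytes_sent": "45"}},
]
counts, byte_sums = aggregate(logs)
```

Each distinct combination of dimension values becomes its own series, so high-cardinality attributes such as request can multiply the number of series produced per interval.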

    - name: client_error_l2m
      type: log_to_metric
      pattern: .*
      interval: 1m0s
      skip_empty_intervals: false
      only_report_nonzeros: false
      metric_name: http_client_errors
      dimension_groups:
        - field_dimensions:
            - item["attributes"]["downstream_remote_address"]
            - item["attributes"]["verb"]
            - item["attributes"]["request"]
            - string(item["attributes"]["response"])
          field_numeric_dimension: item["attributes"]["bytes_sent"]
          enabled_stats:
            - sum
        - field_dimensions:
            - item["attributes"]["downstream_remote_address"]
            - item["attributes"]["verb"]
            - item["attributes"]["request"]
            - string(item["attributes"]["response"])
          enabled_stats:
            - count

Afterwards, the processed logs and metrics are sent to the matching compound_output for further processing or storage. This ensures that logs associated with success, client_error, and server_error are captured for archival purposes or further inspection.

Istio Pack in Action

To begin using the Istio Pack, ensure you have an existing pipeline within Edge Delta. If you haven’t created one yet, go to Pipelines and select New Fleet.

Next, choose whether you want your pipeline to be configured with an Edge Fleet or Cloud Fleet, depending on your hosting environment, and follow the on-screen prompts to complete the setup.

Once your pipeline is ready, navigate to the Pipelines menu, select Knowledge, and then click on Packs. Locate the Istio Pack and select Add Pack to add it to your Pack library.

To integrate the Istio Pack into your pipeline, return to the main Pipelines dashboard, choose the pipeline you’d like to modify, and enter Edit Mode using the Visual Pipelines builder. In Edit Mode, go to Add Processor, select Packs, and scroll to the Istio Pack.

You’ll have the option to rename the pack and select sample logs for testing. When you’re ready, click Save Changes to apply the Istio Pack to your pipeline. Then, using the Visual Pipelines builder, simply drag and drop the connection from your Istio log source to the pack.

After processing your Istio logs, Edge Delta Telemetry Pipelines provide flexible routing options. You can send the processed data to your preferred observability vendor — including Datadog, Dynatrace, Splunk, Sumo Logic, and others.

Additionally, you can route data to Edge Delta for advanced log analysis and send a complete copy of your raw log data to archival storage, like S3, for compliance or future investigation.

Getting started with the Istio Pack

Ready to see Edge Delta’s Istio Pack in action? Check out our packs list and add the Istio Pack to any running pipeline. New to Edge Delta? Visit our Playground to get a feel for how we can help you change the way you manage your telemetry data.