Cisco Secure Firewall Threat Defense (SFTD), formerly known as Firepower Threat Defense (FTD), is a network security platform that delivers next-generation firewalling, intrusion prevention, and malware protection. SFTD logs are exported via syslog by default, and they capture a wide range of activity, including network traffic, VPN connections, web access, and DNS queries. Each log type has its own field structure, which makes it challenging to extract insights and monitor network security at scale.

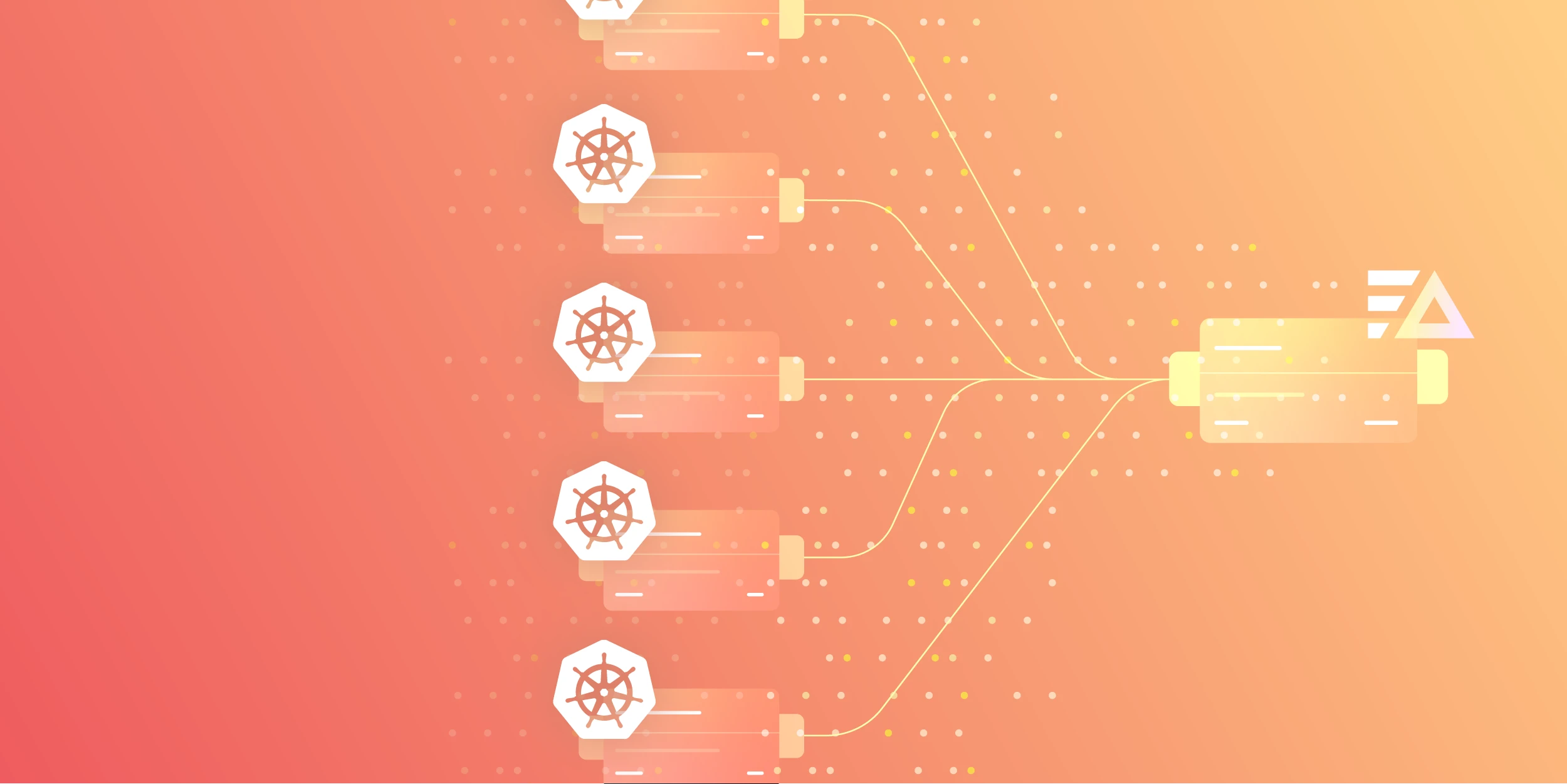

Edge Delta’s pre-built SFTD Pack automatically collects, normalizes, and enriches Cisco SFTD logs within our intelligent Security Data Pipelines. Once processed, these logs can then be routed to the appropriate destinations, such as SIEMs and cost-effective archival storage. By creating a uniform structure across Cisco SFTD log types, the pack streamlines analysis and threat detection workflows, reducing overhead for security teams.

This post will walk through each processing step within the Cisco SFTD Pack and demonstrate how to add it to an existing pipeline.

Log Parsing and Structuring

Edge Delta’s Cisco SFTD Pack performs a sequence of security data processing steps within a single multiprocessor node. Its default configuration is based on best practices for processing Cisco SFTD logs, but it also includes optional steps, which we’ll note throughout this post.

First, the pack uses a regex pattern to extract the syslog message ID, which it stores as ftd_code. This numeric identifier denotes a specific type of network event, such as building a connection (302013), denying traffic by access control (106100), or establishing a VPN tunnel (716001).

Once extracted, the ftd_code is merged into an existing map of attributes using an “upsert” approach, which either updates existing keys or adds new ones. In cases where the attributes field is not yet a map, the same extraction occurs but a new map replaces attributes to ensure all data is consistently structured.
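The extract-then-upsert behavior described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the pack's implementation: the helper name `extract_ftd_code` and the sample log line are hypothetical, while the regex is the one that appears in the pack's configuration.

```python
import re

# Same pattern the pack applies to the log body; Python also supports (?P<name>...) groups.
FTD_CODE_PATTERN = re.compile(r"%FTD-\S*-(?P<ftd_code>\d+):")


def extract_ftd_code(body, attributes):
    """Return attributes with ftd_code merged in (hypothetical helper)."""
    match = FTD_CODE_PATTERN.search(body)
    extracted = match.groupdict() if match else {}
    if isinstance(attributes, dict):
        # "Upsert": update keys that already exist and add the ones that do not.
        merged = dict(attributes)
        merged.update(extracted)
        return merged
    # attributes was not a map, so a new map replaces it.
    return extracted


# Hypothetical sample line, for illustration only.
sample = "Jan 05 2025 10:15:30 fw01 : %FTD-6-302013: Built outbound TCP connection 1234"
print(extract_ftd_code(sample, {"host": "fw01"}))
```

The two branches correspond to the two cases in the text: one for when attributes is already a map, and one for when it is not.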

- type: ottl_transform
  metadata: '{"id":"QrJFHBqwPgjSQKgcYqkpu","type":"parse-regex","name":"Parse FTD code"}'
  data_types:
  - log
  statements: |-
    merge_maps(attributes, ExtractPatterns(body, "%FTD-\S*-(?P<ftd_code>\d+):"), "upsert") where IsMap(attributes)
    set(attributes, ExtractPatterns(body, "%FTD-\S*-(?P<ftd_code>\d+):")) where not IsMap(attributes)

Log Filtering

Next, a filter processor examines the Cisco SFTD logs to determine if they contain an ftd_code. Logs missing an ftd_code are typically system or diagnostic messages with limited security value, so the processor drops them from the pipeline. This ensures only the most pertinent data moves forward for additional processing.

- type: ottl_filter
  metadata: '{"id":"H5deS0wvGroNU1ZYcpdvQ","type":"filter","name":"Filter no FTD code"}'
  condition: attributes["ftd_code"] == nil
  data_types:
  - log
  filter_mode: exclude

As an optional step, the pack can then run the remaining logs that do contain an ftd_code through a lookup processor to flag non-critical events, such as the beginning or end of an SSL handshake. If a match is found, the processor adds a flag (attributes["ftd_drop"]) to the log, marking it for exclusion in the next step (also optional).

- type: lookup
  metadata: '{"id":"uA9Mfy_Z0abLxI8Syp01Y","type":"lookup","name":"Lookup FTD codes to drop"}'
  disabled: true
  data_types:
  - log
  location_path: ed://ftd_drops.csv
  key_fields:
  - event_field: attributes["ftd_code"]
    lookup_field: ftd_code
  out_fields:
  - event_field: attributes["ftd_drop"]
    lookup_field: ftd_code

These two optional steps help consolidate and refine the data you’re sending downstream, ensuring only the most relevant and actionable logs are chosen for deeper analysis.
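As a rough sketch of the filtering stages in this section, the Python below drops logs that have no ftd_code and flags those whose code appears in a lookup table. The CSV rows and function names are assumptions made for illustration; the actual contents of ftd_drops.csv are not shown in this post.

```python
import csv
import io

# Hypothetical stand-in for ftd_drops.csv; the real table's rows are not shown here.
DROPS_CSV = "ftd_code\n725001\n725007\n"


def load_drop_codes(csv_text):
    """Read the ftd_code column of the lookup table into a set."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {row["ftd_code"] for row in reader}


def filter_and_flag(logs, drop_codes):
    """Drop logs without an ftd_code, then flag those found in the lookup table."""
    kept = []
    for log in logs:
        attrs = log.get("attributes", {})
        code = attrs.get("ftd_code")
        if code is None:
            continue  # mirrors the exclude filter on ftd_code == nil
        if code in drop_codes:
            # The lookup's out_field copies the matched ftd_code into ftd_drop.
            attrs["ftd_drop"] = code
        kept.append(log)
    return kept
```

A later stage could then exclude any log where `ftd_drop` is set, which is how the flag marks an event for removal.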

Log Enrichment and Timestamp Normalization

Once the logs have been filtered, the pack uses an add field processor to enrich the remaining logs with vendor and product information, improving clarity, context, and correlation for downstream investigations.

- type: ottl_transform
  metadata: '{"id":"XUf2YmjtV-ZnYjP6wCrWG","type":"add-field","name":"Add vendor product"}'
  data_types:
  - log
  statements: |-
    set(attributes["product"], "FTD")
    set(attributes["vendor"], "Cisco")
    set(attributes["vendor_product"], "Cisco FTD")

Next, a parse grok processor extracts the timestamps from the logs. Two OTTL statements handle this logic: the first checks whether the attributes field is a map and, if so, merges the extracted timestamp into it; the second handles cases where attributes is not a map and creates a new one containing the timestamp.

- type: ottl_transform
  metadata: '{"id":"DLIOorvzHDC47hQaatMjd","type":"parse-grok","name":"Extract Timestamp"}'
  data_types:
  - log
  statements: |-
    merge_maps(attributes, ExtractGrokPatterns(body, "(?<timestamp>%{MONTH} %{MONTHDAY} %{YEAR} %{TIME})"), "upsert") where IsMap(attributes)
    set(attributes, ExtractGrokPatterns(body, "(?<timestamp>%{MONTH} %{MONTHDAY} %{YEAR} %{TIME})")) where not IsMap(attributes)

After extraction, a parse timestamp processor standardizes the timestamp format, converting it to Unix time in milliseconds. This ensures consistent, structured time data for downstream processing.
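To make the conversion concrete, here is a minimal Python sketch of turning an extracted timestamp string into Unix milliseconds. The function name `to_unix_millis` is hypothetical, and treating the timestamp as UTC is an assumption of this sketch, since the extracted text carries no time zone.

```python
from datetime import datetime, timezone


def to_unix_millis(timestamp_text):
    """Parse a 'Jan 02 2006 15:04:05'-style timestamp into Unix milliseconds.

    Assumes UTC, because the extracted text has no time zone information.
    """
    parsed = datetime.strptime(timestamp_text, "%b %d %Y %H:%M:%S")
    seconds = int(parsed.replace(tzinfo=timezone.utc).timestamp())
    return seconds * 1000


print(to_unix_millis("Jan 05 2025 10:15:30"))
```

The `strptime` format `%b %d %Y %H:%M:%S` plays the same role here as the layout string `Jan 02 2006 15:04:05` does in the pack's configuration.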

- type: ottl_transform
  metadata: '{"id":"d5mOENwvune0dVDc6rAcp","type":"parse-timestamp","name":"Parse Timestamp"}'
  condition: attributes["timestamp"] != nil
  data_types:
  - log
  statements: set(timestamp, UnixMilli(Time(attributes["timestamp"], "Jan 02 2006 15:04:05")))

Further Clean Up

The ftd_code within each log is then compared against a second lookup table. If a match is discovered, the pack adds the corresponding regex from the table to the log’s attributes["regex"] to set up more targeted parsing or filtering in later processing stages.

- type: lookup
  metadata: '{"id":"HYLvyh1s01mV5s7MN3XnF","type":"lookup","name":"Lookup regex by FTD code"}'
  data_types:
  - log
  location_path: ed://ftd_parsing.csv
  key_fields:
  - event_field: attributes["ftd_code"]
    lookup_field: ftd_code
  out_fields:
  - event_field: attributes["regex"]
    lookup_field: regex

Logs then enter a parse regex processor for data extraction via regex, and a parse key value processor for added granularity.

In this process, any text in the log body that precedes %FTD- is removed using a non-greedy match, which standardizes the log format and simplifies parsing.

Next, if a regex pattern exists in attributes["regex"], it is used to extract key-value pairs from the log body and merge them into attributes, so each log is parsed with the pattern that was looked up for its ftd_code.
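The two steps above can be approximated in Python as follows. This is a sketch under stated assumptions: the helper names, the sample log line, and the sample pattern stored in `attributes["regex"]` are all hypothetical, and the pack's own statements for this stage are not shown in this post.

```python
import re


def strip_prefix(body):
    """Drop everything before the first '%FTD-' using a non-greedy match."""
    return re.sub(r"^.*?(%FTD-)", r"\1", body, count=1)


def apply_lookup_regex(body, attributes):
    """Merge named groups from attributes['regex'] into attributes, if a pattern is set."""
    pattern = attributes.get("regex")
    if not pattern:
        return attributes
    match = re.search(pattern, body)
    if match:
        attributes.update(match.groupdict())
    return attributes


# Hypothetical log line and lookup pattern, for illustration only.
raw = "Jan 05 2025 10:15:30 fw01 : %FTD-6-302013: Built outbound TCP connection 1234"
attrs = {
    "ftd_code": "302013",
    "regex": r"Built (?P<direction>\w+) (?P<protocol>\w+) connection (?P<connection_id>\d+)",
}
print(apply_lookup_regex(strip_prefix(raw), attrs))
```

The non-greedy `.*?` stops at the first occurrence of `%FTD-`, so a body that happens to contain the marker twice keeps everything from the first one onward.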

Logs whose ftd_code references a critical security event, such as an intrusion or a malware file, are then isolated. The portion of the log following the code is extracted and stored in attributes["43000x"], which sets these security event logs up for more targeted parsing.

To further bolster downstream insights, two conditional statements parse the key-value pairs in attributes["43000x"]["body"] and merge them into attributes, ensuring the attributes field is consistently structured as a map regardless of its initial format.
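A simplified Python sketch of this kind of key-value parsing is shown below, using ":" between key and value and "," between pairs. The function name and sample body are hypothetical, and trimming whitespace and skipping fragments without a delimiter are assumptions of this sketch rather than documented behavior of the pack.

```python
def parse_key_value(text, delimiter=":", pair_delimiter=","):
    """Split text into pairs on pair_delimiter, then each pair on the first delimiter."""
    result = {}
    for pair in text.split(pair_delimiter):
        if delimiter not in pair:
            continue  # assumption: skip fragments that are not key/value pairs
        key, value = pair.split(delimiter, 1)
        result[key.strip()] = value.strip()
    return result


# Hypothetical security event body, for illustration only.
body = "SrcIP: 10.0.0.5, DstIP: 192.0.2.10, DstPort: 443, Protocol: tcp"
attributes = {"ftd_code": "430001"}
attributes.update(parse_key_value(body))  # upsert into the existing map
print(attributes)
```

Splitting each pair only on the first ":" keeps values that themselves contain colons intact.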

- type: ottl_transform
  metadata: '{"id":"NekW9KrFLww7ZOOTy9wko","type":"parse-key-value","name":"Parse 43000x body"}'
  condition: attributes["43000x"]["body"] != nil
  data_types:
  - log
  statements: |-
    merge_maps(attributes, ParseKeyValue(attributes["43000x"]["body"], ":", ","), "upsert") where IsMap(attributes)
    set(attributes, ParseKeyValue(attributes["43000x"]["body"], ":", ",")) where not IsMap(attributes)

To continue improving consistency, the edx_map_keys function renames specific keys in the attributes map, so old field names (e.g., “DstIP”, “DstPort”) are mapped to standardized ones (e.g., “dest_ip”, “dest_port”).
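The renaming step can be pictured with a short Python sketch. The helper `map_keys` is a hypothetical stand-in for what edx_map_keys is described as doing, and leaving absent keys untouched is an assumption of this sketch.

```python
def map_keys(attributes, old_keys, new_keys):
    """Rename each key in old_keys to the key at the same position in new_keys."""
    for old, new in zip(old_keys, new_keys):
        if old in attributes:
            attributes[new] = attributes.pop(old)
    return attributes


attrs = {"SrcIP": "10.0.0.5", "DstIP": "192.0.2.10", "DstPort": "443", "vendor": "Cisco"}
renamed = map_keys(
    attrs,
    ["DstIP", "DstPort", "Protocol", "SrcIP", "SrcPort"],
    ["dest_ip", "dest_port", "protocol", "src_ip", "src_port"],
)
print(renamed)
```

Pairing the two lists by position keeps the mapping easy to audit: the first old name maps to the first new name, and so on.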

- type: ottl_transform
  metadata: '{"id":"RvnYOpuPN_CFkHfy5ZC8j","type":"ottl_transform","name":"Rename attribute keys"}'
  data_types:
  - log
  statements: edx_map_keys(attributes, ["DstIP", "DstPort", "Protocol", "SrcIP", "SrcPort"], ["dest_ip", "dest_port", "protocol", "src_ip", "src_port"])

Finally, any remaining unnecessary fields are eliminated via a delete field processor.

- type: ottl_transform
  metadata: '{"id":"rRJPuizMaHxefNBv8tGXt","type":"delete-field","name":"Delete Field"}'
  data_types:
  - log
  statements: |-
    delete_key(attributes, "43000x")
    delete_key(attributes, "HOUR")
    delete_key(attributes, "MINUTE")
    delete_key(attributes, "MONTH")
    delete_key(attributes, "MONTHDAY")
    delete_key(attributes, "TIME")
    delete_key(attributes, "SECOND")
    delete_key(attributes, "YEAR")
    delete_key(attributes, "timestamp")
    delete_key(attributes, "ftd_code")
    delete_key(attributes, "regex")
    delete_key(attributes, "ftd_drop")

The fully processed SFTD logs can then be routed to any downstream destination through a single output.

See It in Action

To take advantage of the Cisco SFTD Pack, you’ll need an existing pipeline in Edge Delta. To set up a new pipeline, follow these steps.

Once your pipeline is activated, go to the Pipelines menu, select Knowledge, and open the Packs section. Scroll down to locate the Cisco SFTD Pack and click Add Pack. This will move the pack into your library, which you can access anytime through the Pipelines menu under Packs. Once there, you can fine-tune the multiprocessor’s sequencing steps by simply toggling them on or off.

To install the Cisco SFTD Pack, return to your Pipelines dashboard, choose the pipeline where you want to add the pack, and enter Edit Mode. While in Edit Mode, click Add Processor, navigate to Packs, and select the Cisco SFTD Pack.

You can rename the pack from “Cisco SFTD Pack” to something else if you prefer. When you’re finished, click Save Changes. Next, head back to the Pipeline Builder and drag the initial connection from your Cisco SFTD logs source into the pack.

Finally, you’ll need to configure destinations. With Edge Delta Security Data Pipelines, you can route your processed Cisco SFTD logs to any downstream destination, including SIEMs (such as Splunk, Microsoft Sentinel, and CrowdStrike Falcon) as well as archival storage solutions like S3. You can also send logs to Edge Delta’s Observability Platform, which supports real-time anomaly detection and pattern visualization.

Get Started with the Cisco SFTD Pack

The Cisco SFTD Pack is one of many pre-built packs that streamline data processing through automated parsing, enrichment, and optimization — with new packs being added regularly to our growing library.

Check out our free playground if you’d like to explore our next-generation Security Data Pipelines and experiment with packs. You can also schedule a demo with one of our experts to learn more about how Edge Delta enhances analysis while reducing operational costs.