Today’s distributed applications produce large volumes of telemetry data in a variety of formats, and this lack of standardization complicates correlation, analysis, and troubleshooting. Additionally, organizations rely on numerous monitoring tools and platforms that have their own vendor-specific data formats, which adds fragmentation and complexity. Without a unified view, teams struggle to make informed decisions, which can lead to performance degradations that negatively impact the business’s bottom line.

OpenTelemetry (OTel) has emerged as the industry standard for collecting and normalizing telemetry data into a common format that observability platforms can consume. Its OpenTelemetry Transform Language (OTTL) defines rules that modify, filter, and enrich telemetry data before it is routed downstream, without any application code changes. This standardized data can then be used to create dashboards and monitors, enabling engineers, data scientists, business analysts, and marketing teams to make data-driven decisions at scale.

In this post, we’ll explore how OTTL works, discuss Edge Delta’s implementation, and unpack some real-world examples that show OTTL in action.

How Does OTTL Work?

An OTTL statement is made up of three main components: a function, its arguments, and an optional condition. Together, these components define how telemetry data should be modified, enriched, or filtered:

- Function: Defines the action to perform. Editors (lowercase names such as set or delete_key) modify the telemetry data in place, while converters (capitalized names such as Split or ParseJSON) return a computed value without altering the original data.

- Arguments: Tell the function which data to operate on and what values to use.

- Condition: An optional where clause that restricts the statement to records matching an expression.
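As a rough mental model, an OTTL statement behaves like a guarded function call. The Python sketch below only illustrates that idea and is not how OTTL is implemented: apply_statement, set_attribute, is_my_service, and the record layout are hypothetical names chosen for this example.

```python
from typing import Any, Callable, Optional

Record = dict[str, Any]


def apply_statement(
    record: Record,
    function: Callable[..., None],
    args: tuple,
    condition: Optional[Callable[[Record], bool]] = None,
) -> Record:
    """Call function(record, *args) only if the condition is absent or true."""
    if condition is None or condition(record):
        function(record, *args)
    return record


def set_attribute(record: Record, key: str, value: Any) -> None:
    """Toy stand-in for an editor such as set: mutates the record in place."""
    record.setdefault("attributes", {})[key] = value


def is_my_service(record: Record) -> bool:
    """Toy stand-in for a where clause on service.name."""
    return record.get("attributes", {}).get("service.name") == "my_service"


matching = {"attributes": {"service.name": "my_service"}}
other = {"attributes": {"service.name": "billing"}}

apply_statement(matching, set_attribute, ("environment", "production"), is_my_service)
apply_statement(other, set_attribute, ("environment", "production"), is_my_service)

print(matching["attributes"])  # gains the environment key
print(other["attributes"])     # unchanged, because the condition was false
```

The record that fails the condition passes through untouched, which is the behavior to keep in mind when reading the where clauses later in this post.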

These components are assembled into statements like the one below, where the set function is used to assign the production value to the environment attribute when the service.name is my_service:

set(attributes["environment"], "production") where attributes["service.name"] == "my_service"

OTTL Within Edge Delta Telemetry Pipelines

Edge Delta is pioneering a shift within the observability space by bringing both data collection and transformation as close to the source as possible — and our native support for OpenTelemetry and OTTL is central to that approach.

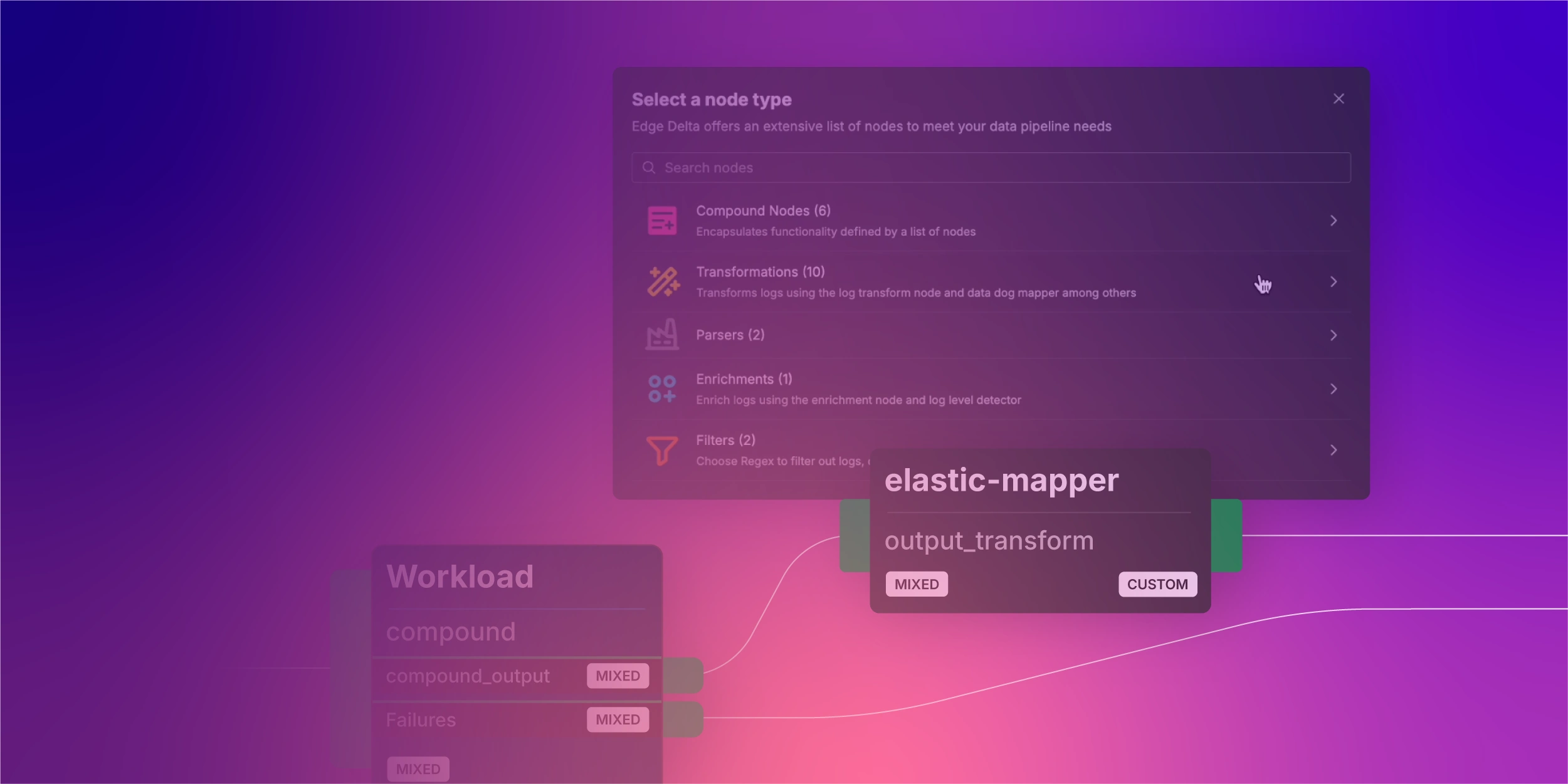

OTTL powers all of the Transformation nodes within Telemetry Pipelines and is also used to route data. This enables organizations to build elegant, low-overhead, and easy-to-use OTTL implementations that deliver the right data, at the right time, and in the right volume.

As a result, Edge Delta Telemetry Pipelines not only deliver telemetry data in a standardized format but also unlock significant cost savings by eliminating unnecessary data at the edge — before it ever reaches expensive storage or processing systems.

See OTTL in Action

In this section, we’ll walk through three different use cases for OTTL: standardizing inconsistent logs, adding tags to distributed traces, and optimizing a native OTel metric.

Log Data in Different Formats

Inconsistent log formats are one of the biggest obstacles to system-wide visibility because they prevent teams from easily aggregating, correlating, and analyzing data across services. When each service logs data differently, it creates fragmentation that obscures patterns, introduces blind spots, and slows down troubleshooting. This undermines observability efforts and makes it harder to maintain reliable, performant systems.

Consider, for example, the following NGINX log that might be scanned from a local log file:

10.0.0.2 - john [21/Apr/2025:14:55:05 +0000] "POST /api/login HTTP/1.1" 401 512 "https://example.com/login" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7)"

Now, compare that log to the following custom JSON access log, which may have been emitted by an NGINX reverse proxy:

{"remote_addr": "192.168.1.10", "remote_user": null, "timestamp": 1745966703596, "request": "GET /api/data HTTP/1.1", "status": 200, "body_bytes_sent": 1024, "http_referer": null, "http_user_agent": "Mozilla/5.0", "request_time": 0.045, "upstream_response_time": 0.03, "upstream_addr": "10.0.0.1:8080", "upstream_status": 200, "upstream_cache_status": "HIT"}

Both logs contain similar data, but they are formatted very differently. We can leverage two separate sets of OTTL statements to standardize them onto OTel’s Logs Data Model.

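For the first log, we can use the following OTTL statements to extract its fields into key/value attributes. The replace_pattern call rewrites the line into pipe-delimited fields, and each Split call then picks one field by index: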

set(cache["body"], Decode(body, "utf-8"))

replace_pattern(cache["body"], "^(\\S+) - (\\S+) \\[([^\\]]+)\\] \"([A-Z]+) (\\S+) ([^\"]+)\" (\\d{3}) (\\d+) \"([^\"]*)\" \"([^\"]*)\"$", "$1|$2|$3|$4|$5|$6|$7|$8|$9|$10")

set(attributes["client.address"], Split(cache["body"], "|")[0])

set(attributes["username"], Split(cache["body"], "|")[1])

set(attributes["timestamp"], Split(cache["body"], "|")[2])

set(attributes["http.request.method"], Split(cache["body"], "|")[3])

set(attributes["path"], Split(cache["body"], "|")[4])

set(attributes["http.version"], Split(cache["body"], "|")[5])

set(attributes["http.response.status_code"], Split(cache["body"], "|")[6])

set(attributes["http.response.size"], Split(cache["body"], "|")[7])

set(attributes["url.full"], Split(cache["body"], "|")[8])

set(attributes["user.agent"], Split(cache["body"], "|")[9])
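The regular expression in the replace_pattern call above can be checked offline before it goes into a pipeline. Here is a minimal Python sketch that applies the same pattern to the sample line; parse_access_line and the constant names are hypothetical, and Python's re module stands in for the regex engine the pipeline actually uses, so treat it as a sanity check and not a guarantee of identical behavior.

```python
import re

# Same pattern as the OTTL statement, written as Python raw strings.
NGINX_COMBINED = re.compile(
    r'^(\S+) - (\S+) \[([^\]]+)\] "([A-Z]+) (\S+) ([^"]+)" '
    r'(\d{3}) (\d+) "([^"]*)" "([^"]*)"$'
)

# Attribute keys in capture-group order, taken from the set() calls above.
FIELD_NAMES = [
    "client.address", "username", "timestamp", "http.request.method", "path",
    "http.version", "http.response.status_code", "http.response.size",
    "url.full", "user.agent",
]


def parse_access_line(line: str) -> dict[str, str]:
    """Return the ten fields as a dict, or an empty dict if the line does not match."""
    match = NGINX_COMBINED.match(line)
    if match is None:
        return {}
    return dict(zip(FIELD_NAMES, match.groups()))


sample = (
    '10.0.0.2 - john [21/Apr/2025:14:55:05 +0000] "POST /api/login HTTP/1.1" '
    '401 512 "https://example.com/login" '
    '"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7)"'
)
attributes = parse_access_line(sample)
for key, value in attributes.items():
    print(f"{key} = {value}")
```

Reading the capture groups directly also sidesteps one edge case of the pipe-and-split approach: a field that itself contains a | character (a user agent string, for instance) would shift the Split indexes.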

Here is the resulting log:

{

"_type": "log",

"attributes": {

"client.address": "10.0.0.2",

"http.request.method": "POST",

"http.response.size": "512",

"http.response.status_code": "401",

"http.version": "HTTP/1.1",

"path": "/api/login",

"timestamp": "21/Apr/2025:14:55:05 +0000",

"url.full": "https://example.com/login",

"user.agent": "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7)",

"username": "john"

},

"body": "10.0.0.2 - john [21/Apr/2025:14:55:05 +0000] \"POST /api/login HTTP/1.1\" 401 512 \"https://example.com/login\" \"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7)\"",

"timestamp": 1746027901803

}

For the JSON log (i.e., the second example above), we can use the following OTTL statements to perform the transformation:

set(cache["body"], Decode(body, "utf-8"))

set(attributes, ParseJSON(cache["body"]))

set(attributes["client.address"], attributes["remote_addr"])

delete_key(attributes, "remote_addr")

set(attributes["username"], attributes["remote_user"])

delete_key(attributes, "remote_user")

replace_pattern(attributes["request"], "^(\\S+) (\\S+) (\\S+)", "$1|$2|$3")

set(attributes["http.request.method"], Split(attributes["request"], "|")[0])

set(attributes["path"], Split(attributes["request"], "|")[1])

set(attributes["http.version"], Split(attributes["request"], "|")[2])

delete_key(attributes, "request")

set(attributes["http.upstream.response.time"], attributes["upstream_response_time"])

delete_key(attributes, "upstream_response_time")

set(attributes["http.request.time"], attributes["request_time"])

delete_key(attributes, "request_time")

set(attributes["http.response.status_code"], attributes["status"])

delete_key(attributes, "status")

set(attributes["http.response.size"], attributes["body_bytes_sent"])

delete_key(attributes, "body_bytes_sent")

set(attributes["user.agent"], attributes["http_user_agent"])

delete_key(attributes, "http_user_agent")

This is what the second log would look like after the transformation:

{

"_type": "log",

"attributes": {

"client.address": "192.168.1.10",

"http.request.method": "GET",

"http.request.time": 0.045,

"http.response.size": 1024,

"http.response.status_code": 200,

"http.upstream.response.time": 0.03,

"http.version": "HTTP/1.1",

"http_referer": null,

"path": "/api/data",

"timestamp": 1745966703596,

"upstream_addr": "10.0.0.1:8080",

"upstream_cache_status": "HIT",

"upstream_status": 200,

"user.agent": "Mozilla/5.0"

},

"body": "{\"remote_addr\": \"192.168.1.10\", \"remote_user\": null, \"timestamp\": 1745966703596, \"request\": \"GET /api/data HTTP/1.1\", \"status\": 200, \"body_bytes_sent\": 1024, \"http_referer\": null, \"http_user_agent\": \"Mozilla/5.0\", \"request_time\": 0.045, \"upstream_response_time\": 0.03, \"upstream_addr\": \"10.0.0.1:8080\", \"upstream_status\": 200, \"upstream_cache_status\": \"HIT\"}",

"timestamp": 1746103827828

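}

As you can see, both logs now expose fields such as http.request.method and client.address under the same attribute keys. This standardization enables us to send this data to a single Log to Metrics processor, simplifying the pipeline and preparing the data for dashboarding. One difference remains: the values extracted from the first log by regex are strings (for example, "401"), while the values parsed from JSON keep their numeric types, so a type conversion may still be needed if downstream processing expects numbers.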
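The set and delete_key pairs used for the JSON log all follow one rename pattern, which is easier to review as a single mapping. The Python sketch below mirrors that logic under a few assumptions: RENAMES and normalize_json_access_log are hypothetical names, and null values are skipped during renaming because the sample output above contains no username key.

```python
import json

# Old key -> new key, taken from the set()/delete_key() pairs above.
RENAMES = {
    "remote_addr": "client.address",
    "remote_user": "username",
    "upstream_response_time": "http.upstream.response.time",
    "request_time": "http.request.time",
    "status": "http.response.status_code",
    "body_bytes_sent": "http.response.size",
    "http_user_agent": "user.agent",
}


def normalize_json_access_log(raw: str) -> dict:
    """Parse a JSON access log and rename its keys to the shared attribute names."""
    attrs = json.loads(raw)
    for old, new in RENAMES.items():
        if old in attrs:
            value = attrs.pop(old)
            # Assumption: a null source value produces no target key.
            if value is not None:
                attrs[new] = value
    # Split "GET /api/data HTTP/1.1" into method, path, and version.
    request = attrs.pop("request", None)
    if request is not None:
        parts = request.split(" ", 2)
        if len(parts) == 3:
            attrs["http.request.method"] = parts[0]
            attrs["path"] = parts[1]
            attrs["http.version"] = parts[2]
    return attrs


raw_log = (
    '{"remote_addr": "192.168.1.10", "remote_user": null, '
    '"timestamp": 1745966703596, "request": "GET /api/data HTTP/1.1", '
    '"status": 200, "body_bytes_sent": 1024, "http_referer": null, '
    '"http_user_agent": "Mozilla/5.0", "request_time": 0.045, '
    '"upstream_response_time": 0.03, "upstream_addr": "10.0.0.1:8080", '
    '"upstream_status": 200, "upstream_cache_status": "HIT"}'
)
normalized = normalize_json_access_log(raw_log)
print(json.dumps(normalized, indent=2, sort_keys=True))
```

Keeping the renames in one table makes it straightforward to spot a source key that was never mapped, such as http_referer and the upstream_* fields in this example.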

Inconsistently Tagged Traces

Tracing is essential for monitoring distributed applications because it reveals how services interact and helps surface performance bottlenecks. Tags provide critical context within traces — such as service names, environments, or request IDs — but to be effective, they must be applied consistently across all services. Otherwise, it’s difficult to connect related spans or extract meaningful insights from trace data.

Consider this example trace from a Java application:

{

"resourceSpans": [

{

"resource": {

"attributes": [

{

"key": "service.name",

"value": {

"stringValue": "example-java-app"

}

},

{

"key": "telemetry.sdk.language",

"value": {

"stringValue": "java"

}

},

{

"key": "host.name",

"value": {

"stringValue": "localhost"

}

}

]

},

"scopeSpans": [

{

"scope": {

"name": "example.instrumentation",

"version": "1.0.0"

},

"spans": [

{

"traceId": "17125e4f5f0a40f88476dc7ae1ef23d7",

"spanId": "d95d1bbb9c414c91",

"parentSpanId": "",

"name": "GET /api/data",

"kind": "SPAN_KIND_SERVER",

"startTimeUnixNano": 1745968037867470592,

"endTimeUnixNano": 1745968039067470592,

"attributes": [

{

"key": "http.method",

"value": {

"stringValue": "GET"

}

},

{

"key": "http.route",

"value": {

"stringValue": "/api/data"

}

},

{

"key": "http.status_code",

"value": {

"intValue": 200

}

},

{

"key": "net.peer.ip",

"value": {

"stringValue": "192.168.1.10"

}

}

],

"status": {

"code": "STATUS_CODE_OK"

}

}

]

}

]

}

]

}

Now, here’s a trace that captures a call from the Java app to a MongoDB instance:

{

"traceId": "17125e4f5f0a40f88476dc7ae1ef23d7",

"spanId": "a1b2c3d4e5f60708",

"parentSpanId": "d95d1bbb9c414c91",

"name": "mongodb.find testdb.testcoll",

"kind": "SPAN_KIND_CLIENT",

"startTimeUnixNano": 1745968038067470592,

"endTimeUnixNano": 1745968038567470592,

"attributes": [

{

"key": "db.system",

"value": {

"stringValue": "mongodb"

}

},

{

"key": "db.name",

"value": {

"stringValue": "testdb"

}

},

{

"key": "db.operation",

"value": {

"stringValue": "find"

}

},

{

"key": "db.mongodb.collection",

"value": {

"stringValue": "testcoll"

}

},

{

"key": "net.peer.name",

"value": {

"stringValue": "localhost"

}

},

{

"key": "net.peer.port",

"value": {

"intValue": 27017

}

}

],

"status": {

"code": "STATUS_CODE_OK"

}

}

The problem with the above traces is that they share no common tag: the Java trace carries a service.name resource attribute, while the MongoDB trace has none. The following OTTL statements add the same application key to both, along with an application-tier key that identifies which part of the application each one came from:

set(attributes, ParseJSON(body))

set(attributes["application"], "myApplication")

set(attributes["application-tier"], "Frontend") where IsMatch(attributes["resourceSpans"][0]["resource"]["attributes"][0]["value"]["stringValue"], "java-app")

set(attributes["application-tier"], "Database") where IsMatch(attributes["attributes"][0]["value"]["stringValue"], "mongodb")

After flowing through these statements, the Java trace will look like this:

{

"_type": "trace",

"attributes": {

"application": "myApplication",

"application-tier": "Frontend",

"resourceSpans": [

{

"resource": {

"attributes": [

{

"key": "service.name",

"value": {

"stringValue": "example-java-app"

}

.....

The database trace will look like this:

{

"_type": "trace",

"attributes": {

"application": "myApplication",

"application-tier": "Database",

"attributes": [

{

"key": "db.system",

"value": {

"stringValue": "mongodb"

}

.......

Notice how the OTTL statements used the information in the traces to add the appropriate application and application-tier key/value pairs. This approach will enable users to filter through the traces by application and tier in order to isolate any potential issues. Logs and metrics can be transformed in a similar way, making searching and filtering much more efficient.
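The tagging rules can also be prototyped outside the pipeline. The Python sketch below is one way to express them, with one deliberate difference from the statements above: it looks attributes up by key (service.name, db.system) instead of by position, so it keeps working if the attribute order changes. The names tag_trace and attribute_value are hypothetical, and re.search is used as an assumed equivalent of IsMatch's unanchored regex match.

```python
import re
from typing import Any, Optional


def attribute_value(attr_list: list, key: str) -> Optional[str]:
    """Return the stringValue stored under the given key in an OTLP-style attribute list."""
    for item in attr_list:
        if item.get("key") == key:
            return item.get("value", {}).get("stringValue")
    return None


def tag_trace(payload: dict[str, Any]) -> dict[str, Any]:
    """Add application and application-tier keys based on what the payload contains."""
    tagged = dict(payload)
    tagged["application"] = "myApplication"

    # Resource-level service.name, present on the Java payload.
    service = None
    resource_spans = payload.get("resourceSpans") or []
    if resource_spans:
        resource = resource_spans[0].get("resource", {})
        service = attribute_value(resource.get("attributes", []), "service.name")

    # Span-level db.system, present on the MongoDB payload.
    db_system = attribute_value(payload.get("attributes", []), "db.system")

    if service and re.search("java-app", service):
        tagged["application-tier"] = "Frontend"
    if db_system and re.search("mongodb", db_system):
        tagged["application-tier"] = "Database"
    return tagged


java_payload = {
    "resourceSpans": [
        {"resource": {"attributes": [
            {"key": "service.name", "value": {"stringValue": "example-java-app"}},
        ]}}
    ]
}
mongo_payload = {
    "attributes": [
        {"key": "db.system", "value": {"stringValue": "mongodb"}},
    ]
}

print(tag_trace(java_payload)["application-tier"])
print(tag_trace(mongo_payload)["application-tier"])
```

A payload that matches neither rule still receives the application key but no application-tier, which makes untagged traffic easy to find with a simple filter.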

Metrics That Aren’t Optimized for Cost-Effective Storage

Native OTel metrics can also benefit from OTTL standardizations — especially those that include unnecessary information. For example, consider the following OTel resource metrics:

{

"resourceMetrics": [

{

"resource": {

"attributes": [

{ "key": "service.name", "value": { "stringValue": "example-java-app" } },

{ "key": "telemetry.sdk.language", "value": { "stringValue": "java" } },

{ "key": "host.name", "value": { "stringValue": "java-prod-node-1" } }

]

},

"scopeMetrics": [

{

"scope": {

"name": "io.opentelemetry.jvm",

"version": "1.30.0"

},

"metrics": [

{

"name": "jvm.memory.used",

"description": "Used JVM memory",

"unit": "bytes",

"gauge": {

"dataPoints": [

{

"attributes": [

{ "key": "memory.type", "value": { "stringValue": "heap" } },

{ "key": "memory.pool", "value": { "stringValue": "G1 Eden Space" } }

],

"timeUnixNano": 1714401256123000000,

"asInt": 38797312

}

]

}

},

{

"name": "http.server.duration",

"description": "HTTP server request duration",

"unit": "ms",

"sum": {

"dataPoints": [

{

"attributes": [

{ "key": "http.method", "value": { "stringValue": "GET" } },

{ "key": "http.route", "value": { "stringValue": "/api/data" } },

{ "key": "http.status_code", "value": { "intValue": 200 } }

],

"startTimeUnixNano": 1714401250000000000,

"timeUnixNano": 1714401256123000000,

"asDouble": 245.5

}

],

"aggregationTemporality": "AGGREGATION_TEMPORALITY_CUMULATIVE",

"isMonotonic": false

}

}

]

}

]

}

]

}

This JSON time series metric is roughly 2 KB in size. This size may not seem significant, but when you multiply it by the number of metrics generated by a given system, and then by the number of systems you have, and then by the number of time series data points needed over time, the financial burden becomes clear, regardless of whether the metric is stored on-prem or in the cloud. Additionally, a third-party observability system might expect the metric in a different format.
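To make that multiplication concrete, here is a back-of-the-envelope calculation in Python. Every input except the roughly 2 KB payload size is a made-up assumption for illustration (100 metrics per system, 50 systems, one data point per minute, 30 days of retention), and stored_bytes is a hypothetical helper.

```python
def stored_bytes(
    bytes_per_point: int,
    metrics_per_system: int,
    systems: int,
    points_per_day: int,
    days: int,
) -> int:
    """Total raw bytes for one retention window, before compression or indexing."""
    return bytes_per_point * metrics_per_system * systems * points_per_day * days


# Illustrative assumptions only; substitute your own fleet's numbers.
total = stored_bytes(
    bytes_per_point=2_000,    # roughly 2 KB per data point, as noted above
    metrics_per_system=100,
    systems=50,
    points_per_day=24 * 60,   # one data point per minute
    days=30,
)
print(f"{total / 1e9:.1f} GB")  # 432.0 GB
```

Because the per-point size is a plain multiplier, any reduction in payload size carries through to the total in the same proportion.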

We can therefore use the following OTTL statements to transform the data and reduce its size:

set(cache["body"], ParseJSON(body))

set(cache["metricname"], cache["body"]["resourceMetrics"][0]["scopeMetrics"][0]["metrics"][0]["name"])

set(cache["metricdata"], cache["body"]["resourceMetrics"][0]["scopeMetrics"][0]["metrics"][0]["gauge"]["dataPoints"][0]["asInt"])

set(cache["metric"], Concat([cache["metricname"], cache["metricdata"]], ":"))

set(cache["metrictimestamp"], cache["body"]["resourceMetrics"][0]["scopeMetrics"][0]["metrics"][0]["gauge"]["dataPoints"][0]["timeUnixNano"])

set(cache["metrictype"], cache["body"]["resourceMetrics"][0]["scopeMetrics"][0]["metrics"][0]["gauge"]["dataPoints"][0]["attributes"][0]["key"])

set(cache["metrictypevalue"], cache["body"]["resourceMetrics"][0]["scopeMetrics"][0]["metrics"][0]["gauge"]["dataPoints"][0]["attributes"][0]["value"]["stringValue"])

set(cache["metrictype"], Concat([cache["metrictype"], cache["metrictypevalue"]], ":"))

set(cache["metricdscr"], cache["body"]["resourceMetrics"][0]["scopeMetrics"][0]["metrics"][0]["gauge"]["dataPoints"][0]["attributes"][1]["key"])

set(cache["metricdscrvalue"], cache["body"]["resourceMetrics"][0]["scopeMetrics"][0]["metrics"][0]["gauge"]["dataPoints"][0]["attributes"][1]["value"]["stringValue"])

set(cache["metricdscr"], Concat([cache["metricdscr"], cache["metricdscrvalue"]], ":"))

set(cache["metrictype"], Concat([cache["metrictype"], cache["metricdscr"]], ","))

replace_pattern(cache["metrictype"], "\\.", "_")

replace_pattern(cache["metrictype"], " ", "_")

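set(body, Concat([cache["metrictimestamp"], cache["metric"], "g", cache["metrictype"]], "|"))

The resulting metric, shown below, is a compact, pipe-delimited line of roughly 100 bytes that any platform able to parse this format can consume. Shrinking the payload by about 20x cuts the storage needed for this metric by roughly 95%. Note that these statements extract only the first metric in the payload (the jvm.memory.used gauge); the http.server.duration sum and the resource attributes are dropped.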

Here is the resulting output:

{

"_type": "metric",

"body": "1714401256123000000|jvm.memory.used:38797312|g|memory_type:heap,memory_pool:G1_Eden_Space",

"timestamp": 1747229716385

}
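For readers who want to reproduce the flattened body shown above, the following Python sketch performs the same extraction on a trimmed copy of the input. It is an approximation of what the OTTL statements do, not Edge Delta's implementation; flatten_gauge is a hypothetical name, and the payload includes only the fields the statements actually read.

```python
def flatten_gauge(payload: dict) -> str:
    """Build 'timestamp|name:value|g|tag:value,...' from the first gauge data point."""
    metric = payload["resourceMetrics"][0]["scopeMetrics"][0]["metrics"][0]
    point = metric["gauge"]["dataPoints"][0]
    # Join each attribute as key:value, then replace dots and spaces with
    # underscores, as the two replace_pattern calls do.
    tags = ",".join(
        f'{attr["key"]}:{attr["value"]["stringValue"]}'.replace(".", "_").replace(" ", "_")
        for attr in point["attributes"]
    )
    return f'{point["timeUnixNano"]}|{metric["name"]}:{point["asInt"]}|g|{tags}'


# Trimmed copy of the resource metrics payload from earlier in this section.
payload = {
    "resourceMetrics": [{
        "scopeMetrics": [{
            "metrics": [{
                "name": "jvm.memory.used",
                "gauge": {"dataPoints": [{
                    "attributes": [
                        {"key": "memory.type", "value": {"stringValue": "heap"}},
                        {"key": "memory.pool", "value": {"stringValue": "G1 Eden Space"}},
                    ],
                    "timeUnixNano": 1714401256123000000,
                    "asInt": 38797312,
                }]},
            }]
        }]
    }]
}

line = flatten_gauge(payload)
print(line)
print(len(line.encode("utf-8")), "bytes")
```

Measuring the encoded length of your own payloads before and after a transformation is a quick way to check the savings you can expect in practice.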

Conclusion

As the volume, variety, and velocity of telemetry data grow, so does the complexity of making it both actionable and cost-effective. OpenTelemetry Transform Language (OTTL) plays a critical role in addressing these challenges by providing a flexible, standards-based framework to transform and normalize telemetry data across formats and systems. Whether it’s harmonizing inconsistent logs, aligning distributed traces, or restructuring metrics to reduce storage overhead, OTTL empowers organizations to make their observability data cleaner, more consistent, and more useful.

By embracing OTTL, Edge Delta enables organizations to operate with greater agility, efficiency, and clarity. Our native support for OTTL not only ensures interoperability and alignment with the broader observability ecosystem but also provides our users with intuitive tools that retain the full power and flexibility of the language when needed.

Ultimately, OTTL is more than just a transformation language—it is a critical enabler of scalable, intelligent observability. Standardization at the telemetry layer is no longer optional; it’s foundational. Edge Delta is proud to be contributing to this shift, helping teams make sense of their data, reduce waste, and deliver real value from observability investments.

Get hands-on with Edge Delta Telemetry Pipelines in our free-to-use Playground.