Modern systems generate enormous volumes of data every day, much of it log data. The total amount of data created worldwide is forecast to reach 181 zettabytes by 2025. The growing scale and complexity of these logs make manual inspection and analysis time-consuming and ineffective.

Traditionally, log analysis relies on the expertise of a data analyst. While this works in some situations, it leaves the whole organization exposed whenever that analyst is unavailable.

Many businesses have turned to machine learning (ML) and artificial intelligence (AI) to identify patterns and anomalies in system logs. ML and AI find these issues by building models from the logs they ingest. The more logs a model ingests, the more information it has to learn from.

In this article, learn more about how log analysis has evolved with ML and AI, its benefits, and the tools used for the AI/ML approach to log analysis.

Key Takeaways:

Log analysis has evolved from the time-consuming processes of traditional log analysis to leveraging AI/ML.

There are two main approaches for training machine learning in log analysis: supervised learning and unsupervised learning.

Integrating AI/ML into log analysis offers many advantages to teams, such as quicker data sorting, early issue identification, and optimized resource allocation.

An In-Depth Look into the Evolution of Log Analysis with AI and ML

Log analysis used to be a process that relied solely on humans. However, as technology advances, data teams can harness the power of AI and ML to read and understand log files. Through this process, they can more easily pinpoint the root cause of a system issue or predict a future error.

Machine learning and artificial intelligence are often used interchangeably in discussions of data and predictive analytics. However, machine learning is only a subset of AI that enables a machine to learn from experience. In general, the more data a model learns from, the better it performs.

Artificial intelligence is a broader field that covers technologies that mimic human intelligence, such as:

- Responding to spoken or written language

- Analyzing data

- Making recommendations

The integration of machine learning algorithms into log analysis has revolutionized the process. These algorithms can now proactively detect patterns and anomalies, significantly reducing the time spent on log analysis.

In the past, IT teams performed log analysis largely by hand, which was time-consuming and inefficient. AI and ML have since made this process easier.

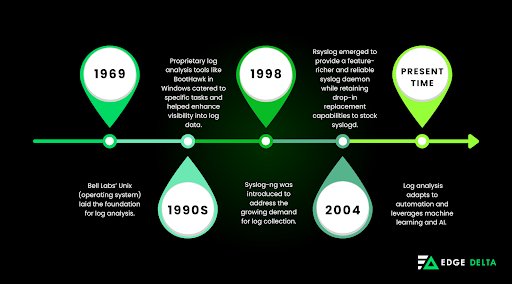

Here’s how log analysis has developed throughout the decades:

- 1969: Bell Labs’ Unix laid the foundation for log analysis. The operating system had no tools for aggregating log files at the time, so admins relied on basic text manipulation tools to understand them. Admins could only perform log analysis on an as-needed basis.

- 1990s: Log analysis evolved and became more complicated. There were separate logs for boot-up and system events, and applications kept their own logs, too. Proprietary log analysis tools like BootHawk in Windows catered to specific tasks and helped enhance visibility into log data.

- 1998: Syslog-ng was introduced to address the growing demand for log collection. Because the waterfall age had caused log analysis to become more complex, teams looked for more reliable solutions. Syslog-ng helped enhance data transmission and provided wide support for various operating systems and applications. This shift allowed IT teams to study data from multiple locations and log types through a unified interface.

- 2004: Rsyslog emerged in the market. It was derived from the standard sysklogd package and aimed to provide a more feature-rich and reliable syslog daemon while remaining a drop-in replacement for the stock syslogd.

- Present day: With the shift from waterfall to agile/DevOps methodologies, log analysis needed to adapt using automation, machine learning and AI. This shift was especially critical given the volume of data created each day. It’s no longer feasible to rely purely on manual operations.

Integrating AI/ML into the log analysis process has helped many data teams automate repetitive tasks so that they can focus on other work. The following section discusses the different approaches to AI/ML log analysis.

Understanding the Current Approaches to AI/ML Powered Log Analysis

Before diving into the main approaches for AI/ML-powered log analysis, it is essential to understand the two main training approaches for machine learning:

- Supervised Machine Learning: In supervised training, datasets are labeled, often by humans. The labels help the model understand the cause-and-effect relationships inside the datasets. For log analysis, labeling the log events tied to a particular incident lets the model recognize and predict similar incidents based on the observed patterns. While it can be effective, this approach can be challenging and demands a lot of effort from data teams.

- Unsupervised Machine Learning: In this approach, the model identifies patterns and correlations inside the dataset on its own. Unsupervised training enables the ML model to make predictions without needing pre-labeled data. Because modern applications are dynamic and frequently updated, unsupervised learning is often the more practical approach for log analysis.

These learning models are then used to deploy ML in monitoring, collecting, and evaluating logs in centralized locations.

Good to Know!

One popular algorithm for unsupervised machine learning is k-means clustering. This algorithm partitions a dataset into k clusters and is used for tasks like customer segmentation and pattern recognition in unlabeled data.
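To make the idea concrete, here is a minimal k-means sketch in plain Python applied to log lines. The feature choice (token count and digit count per line), the sample log lines, and the helper names `featurize` and `kmeans` are all assumptions made for illustration; a production system would use richer features and a tested library.

```python
def featurize(line):
    """Map a log line to (token count, digit-character count). A toy feature choice."""
    return (float(len(line.split())), float(sum(ch.isdigit() for ch in line)))

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(points, k, iterations=20):
    """Plain k-means with a deterministic farthest-first initialization."""
    centroids = [points[0]]
    while len(centroids) < k:
        # Pick the point that is farthest from every centroid chosen so far.
        centroids.append(
            max(points, key=lambda p: min(squared_distance(p, c) for c in centroids))
        )
    assignments = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        assignments = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return assignments, centroids

# Hypothetical log lines, invented for this example.
logs = [
    "user login ok",
    "user logout ok",
    "cache hit ok",
    "ERROR db timeout after 30000 ms on host 10.0.0.12 port 5432",
    "ERROR db timeout after 30000 ms on host 10.0.0.13 port 5432",
    "ERROR db timeout after 45000 ms on host 10.0.0.14 port 5432",
]
assignments, centroids = kmeans([featurize(line) for line in logs], k=2)
for line, cluster in zip(logs, assignments):
    print(cluster, line)
```

With this toy data, the three short lines land in one cluster and the three timeout lines in the other, without any labels being supplied.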

These system-level insights can help data engineers find and fix issues quickly. There are two common approaches for ML-based log analysis:

1. Generalized Machine Learning Algorithms

This approach refers to algorithms that detect anomalies and patterns in string-based data. Popular examples are linear Support Vector Machines (SVM) and Random Forest.

In SVM, the probabilities of certain words in log lines are categorized and correlated with different incidents. For example, words such as “error” or “failure” signal a possible incident and receive a high score in anomaly detection. The scores are then combined and used to detect issues.

SVM and Random Forest use supervised machine learning for training and need large volumes of labeled data to provide accurate predictions, making them quite costly to deploy.
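The word-scoring idea can be sketched without a full classifier. The example below is not an SVM or a Random Forest; it is a simplified stand-in that learns a log-odds score per word from labeled lines and sums those scores for a new line. The training lines, labels, and function names are hypothetical.

```python
import math
from collections import Counter

def tokenize(line):
    return line.lower().split()

def train_word_scores(labeled_lines):
    """Return a log-odds score per word from (line, is_incident) pairs."""
    incident_counts, normal_counts = Counter(), Counter()
    for line, is_incident in labeled_lines:
        target = incident_counts if is_incident else normal_counts
        target.update(tokenize(line))
    vocab = set(incident_counts) | set(normal_counts)
    incident_total = sum(incident_counts.values())
    normal_total = sum(normal_counts.values())
    scores = {}
    for word in vocab:
        # Add-one smoothing keeps words seen in only one class from producing log(0).
        p_incident = (incident_counts[word] + 1) / (incident_total + len(vocab))
        p_normal = (normal_counts[word] + 1) / (normal_total + len(vocab))
        scores[word] = math.log(p_incident / p_normal)
    return scores

def score_line(line, scores):
    """Combine per-word scores; words never seen in training contribute 0."""
    return sum(scores.get(word, 0.0) for word in tokenize(line))

# Hypothetical labeled log lines: True marks lines tied to an incident.
training = [
    ("payment service error connection refused", True),
    ("disk failure detected on node-3", True),
    ("error writing to replica", True),
    ("user login succeeded", False),
    ("health check passed", False),
    ("cache refresh completed", False),
]
scores = train_word_scores(training)
suspicious = score_line("replica error on node-7", scores)
routine = score_line("login check completed", scores)
print(suspicious > 0, routine < 0)
```

A positive combined score means the line looks more like the incident examples than the normal ones. The labeling effort visible in `training` is exactly the cost the supervised approach carries at scale.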

2. Deep Learning (AI)

Deep Learning is a powerful form of machine learning that works by training neural networks on huge volumes of data to find patterns. This approach is often used with supervised training with labeled datasets.

A paper called “DeepLog” from the University of Utah shows one example of using deep learning for logs. In the paper, logs are parsed into event types and deep learning is used to detect anomalies in the resulting event sequences, which can significantly improve the accuracy of log anomaly detection.
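The two stages mentioned here, parsing logs into event types and then checking whether each event is expected, can be illustrated with a small sketch. This is not the method from the paper: the neural network is replaced with a simple table of observed transitions so the example stays short and runnable, and the regular expressions, sample lines, and function names are assumptions.

```python
import re
from collections import Counter, defaultdict

def to_event_type(line):
    """Collapse variable fields (hex ids, numbers) so similar lines share one template."""
    line = re.sub(r"0x[0-9a-fA-F]+", "<HEX>", line)
    return re.sub(r"\d+", "<NUM>", line)

def fit_transitions(normal_lines):
    """Count which event type follows which in a log assumed to be healthy."""
    events = [to_event_type(line) for line in normal_lines]
    follows = defaultdict(Counter)
    for prev, nxt in zip(events, events[1:]):
        follows[prev][nxt] += 1
    return follows

def find_anomalies(lines, follows, top_k=2):
    """Flag a line when its event type is not among the top_k expected successors."""
    events = [to_event_type(line) for line in lines]
    flagged = []
    for i in range(1, len(events)):
        successors = follows.get(events[i - 1])
        if not successors:
            continue  # No history for the previous event type, so nothing to compare.
        expected = [event for event, _ in successors.most_common(top_k)]
        if events[i] not in expected:
            flagged.append(lines[i])
    return flagged

# Hypothetical healthy log used for training.
normal = [
    "open session 101", "read block 7", "close session 101",
    "open session 102", "read block 9", "close session 102",
]
model = fit_transitions(normal)

# New log containing one step that never followed "read block" in training.
new = ["open session 205", "read block 3", "delete block 3", "close session 205"]
flagged = find_anomalies(new, model)
print(flagged)
```

A deep learning model plays the role of `fit_transitions` in the real approach, learning much longer and subtler sequence patterns than a transition table can capture.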

The challenge with deep learning is that it needs large volumes of data to become accurate, meaning it can take longer for new environments to serve accurate predictions.

Deep learning can also be compute-intensive and may require expensive GPU instances to train models quickly. Over time, this can make automated log analysis costly.

As organizations explore different ways to automate log analysis, choosing between the methods mentioned above becomes crucial. The balance between accuracy and real-world applicability can be delicate, so it is important to understand how these methods work.

There are a lot of benefits to integrating AI and machine learning into log analysis. Explore these benefits in the next section.

Fun fact!

Deep learning in data analytics involves using artificial neural networks with multiple layers (deep neural networks) to analyze patterns from large datasets and provide more accurate insights than traditional methods.

Exploring the Benefits of AI and Machine Learning for Log Analysis

Integrating AI/ML in log analysis offers organizations many advantages. These benefits include:

- Quicker Data Sorting: AI can help efficiently group similar logs and streamline data retrieval and navigation.

- Easy Identification of Issues: AI and machine learning are great at automating issue identification, even with a huge volume of logs.

- Early Detection of Anomalies: Machine learning can help prevent incidents by flagging issues at their inception.

- Optimized Resource Allocation: By reducing the time spent on manual log analysis, AI/ML lets teams allocate resources quickly and accurately to high-priority areas.

- Critical Information Alerts: Employing AI and machine learning in log analysis can help minimize false alerts and help organizations ensure that notifications are only triggered by high-priority situations.
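As a rough illustration of early anomaly detection with fewer false alerts, the sketch below flags a minute only when its error count rises well above the recent baseline. It uses a simple statistical rule rather than a trained model, and the window size, threshold, and sample counts are assumptions chosen for the example.

```python
import statistics

def spikes(counts, window=5, threshold=3.0):
    """Return indexes where a count exceeds mean + threshold * stdev of the prior window."""
    flagged = []
    for i in range(window, len(counts)):
        history = counts[i - window:i]
        mean = statistics.mean(history)
        stdev = statistics.pstdev(history)
        # Floor the deviation at 1.0 so a nearly flat history does not flag tiny changes.
        limit = mean + threshold * max(stdev, 1.0)
        if counts[i] > limit:
            flagged.append(i)
    return flagged

# Hypothetical error counts per minute; minute 7 contains a burst.
errors_per_minute = [2, 3, 2, 4, 3, 2, 3, 40, 3, 2]
alerts = spikes(errors_per_minute)
print(alerts)
```

Raising `threshold` trades sensitivity for fewer notifications, which is the same balance an ML-based alerting system has to strike.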

Log analysis with AI and ML can help businesses manage their log data more efficiently. Having the right tool to implement ML with log analysis is crucial. In the next section, learn more about different log analysis tools with AI and ML.

Which Log Analysis Tools are Powered by AI and ML?

Many log analysis tools in the market use AI and machine learning for automation. One of these tools is Edge Delta.

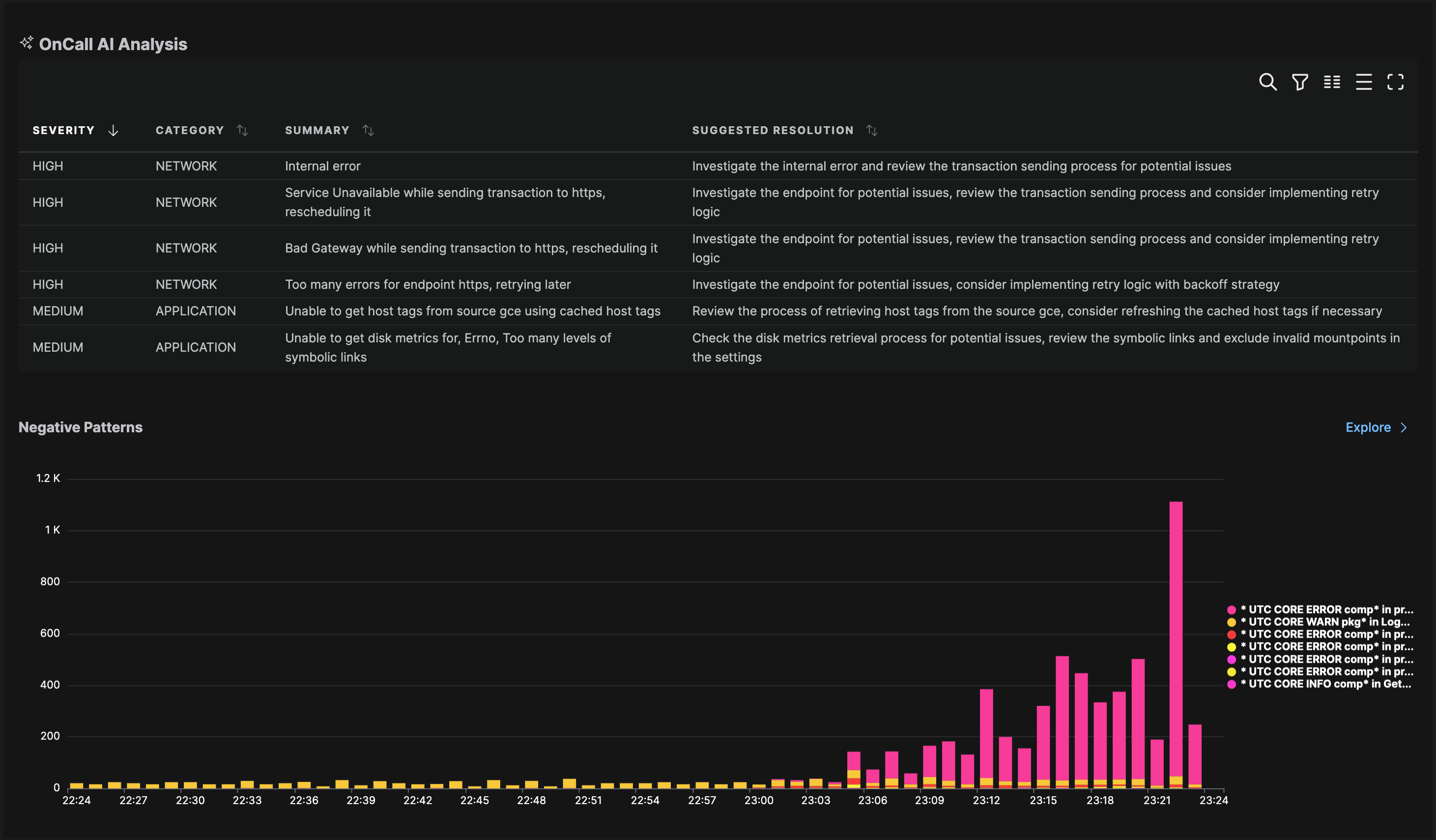

Edge Delta’s Automated Observability uses AI/ML for anomaly detection and troubleshooting issues. It allows teams to analyze 100% of their data at the source and automatically correlate logs to the alerts.

One example of Edge Delta’s Automated Observability in use is its response to the Log4j zero-day RCE vulnerability, which is rated at the highest severity of 10/10. Edge Delta’s algorithm autonomously identified patterns in real-time log data without manual intervention from data teams. The platform’s system detected the threat within 79 seconds.

Features:

- Enhanced Monitoring Automation

- Simplified Root Cause Analysis

- AI/ML Service Monitoring

- AI/ML Anomaly Detection

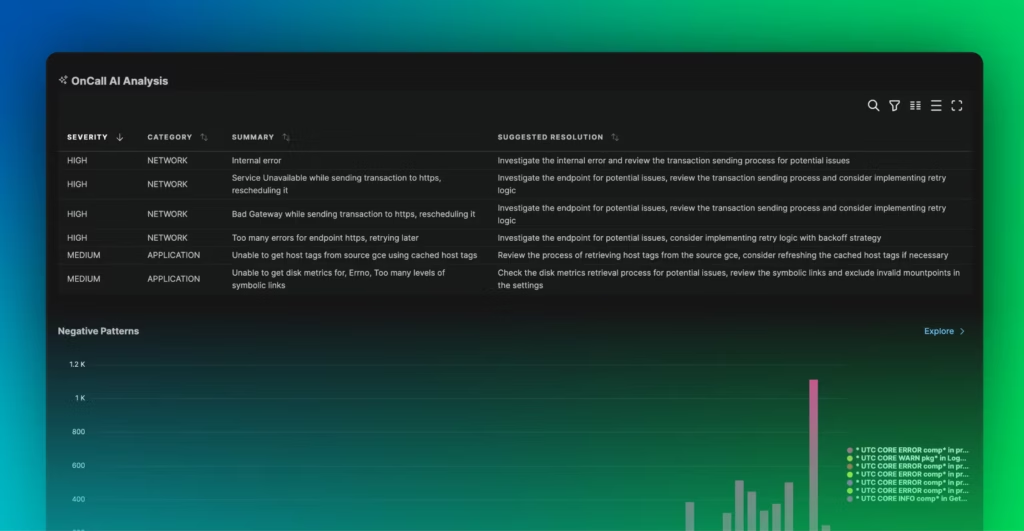

Edge Delta combines AI-driven automation with its distributed architecture. Its OnCall AI helps automate observability, providing organizations with clear summaries of every anomaly and a recommended path to resolution. Additionally, Edge Delta offers cost-effective log storage and search at a petabyte scale. Through these features, Edge Delta sets itself apart from competitors as a comprehensive and scalable solution for organizations of all sizes.

Here are some other log analysis tools in the market using AI/ML:

1. Datadog

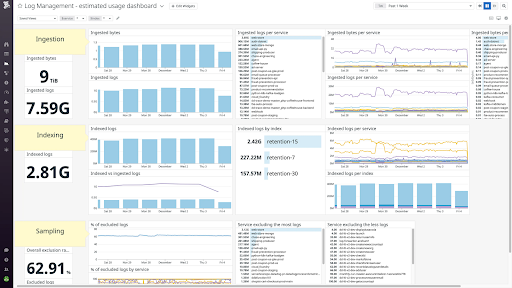

Datadog is a SaaS-based data analytics platform that offers log analysis with the power of machine learning. It helps businesses monitor servers, databases, and tools. Logs are protected by Datadog’s centralized data storage, and machine learning is employed to help find anomalies in log patterns.

2. Sumo Logic

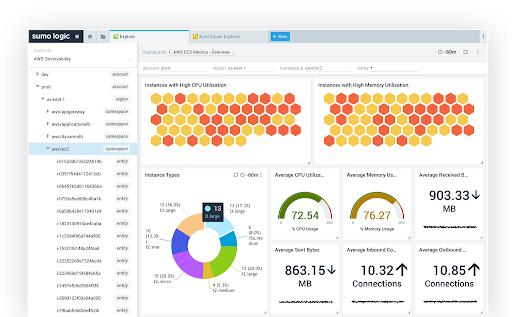

Sumo Logic helps manage logs and secure applications. It has a robust search syntax that resembles UNIX pipes and enables users to define operations effectively. Sumo Logic is a cloud-based data analytics platform that helps businesses find performance issues and improve application rollouts.

3. Zebrium

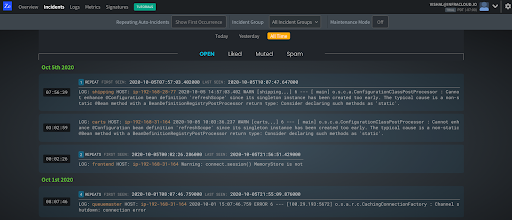

Zebrium uses unsupervised machine learning to monitor log structure and automatically find software incidents. It also shows the root cause of the issues and has AES-256 encryption. Zebrium finds hotspots or patterns of anomalies and correlates them to identify the problem. The platform then alerts the users through Slack.

4. Coralogix

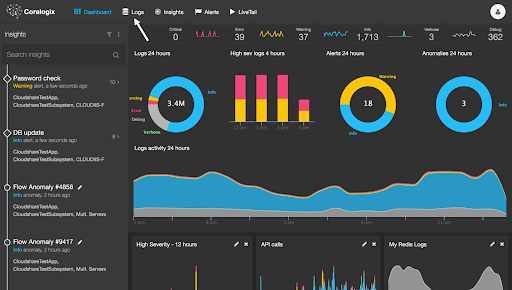

Coralogix aims to bring AI and automation to log analysis. It has an ML-powered remote monitoring and management tool and an analytics tool that enhances the delivery and maintenance process of the network. Coralogix offers an all-in-one platform for users to view live log streams and customized dashboard widgets for maximum control over their data.

5. Logic Monitor

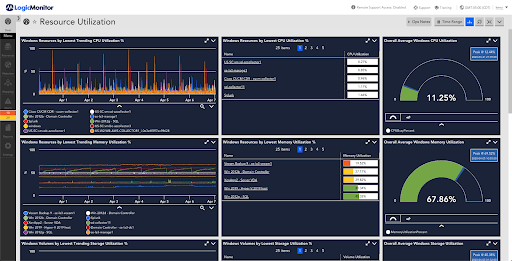

Logic monitor allows users to check on their data in a unified platform. It has full-stack visibility for networks, clouds, and servers so that data teams can focus on monitoring data that matters to their organizations.

Searching for the best tools for log analysis requires understanding what your team needs. These tools can help identify existing issues and potential threats. They can also help solve problems proactively to optimize the performance of systems.

Final Thoughts

The evolution of log analysis from traditional manual processes to AI and machine learning techniques marks a significant leap in technology and data analytics. It has changed how data teams identify patterns and anomalies in log data, so they can spend less time on analysis and focus on more critical matters in their organization, such as product development.

FAQs About AI/ML Powered Log Analysis

How are AI and ML used in data analytics?

AI and machine learning help data analytics by simplifying the process of data ingestion. They also have the power to identify complex patterns in the data so teams can receive alerts more quickly and resolve issues as soon as possible.

What is the purpose of log analysis?

Log analysis gives visibility into the performance and health of an IT infrastructure. Reviewing and interpreting these logs can help data engineers and analysts gain insights on resolving issues and errors within the system and enhancing the overall user experience for the product or service.

How will AI change data analysis?

AI can change data analysis by providing more precise forecasts and helping teams react to threats quickly. Machine learning models can also learn from previous data patterns, which improves their reliability over time.