Telemetry pipelines let you collect data (such as metrics, events, logs, and traces) from multiple sources and process it before routing it to one or more destinations.

As more organizations grapple with the exponential growth of telemetry from sources like containers, microservices, and cloud migrations, the use of telemetry pipelines has emerged as a way to regain control over the associated management and costs.

In this article, we’ll look at the emerging world of telemetry pipelines, how they work, and why a prominent, independent analyst like Gartner would say that “by 2026, 40% of log telemetry will be processed through a telemetry pipeline product.”

Increasing Telemetry Volumes and Costs

If your work intersects with any type of telemetry data, you know volumes are rising. By our own calculations, log data volumes grew fivefold on average between 2021 and 2023. And for 35% of companies, they grew between seven and ten times.

The reasons for these spiraling volumes can, at least partially, be attributed to things like cloud migrations, containerized applications and microservices, digital-first customer experiences, and the use of APIs.

The combination of organizations becoming cloud-first, plus an increasing fleet of technologies that create additional logs, events, metrics, and traces, is ensuring that the spike in telemetry data isn’t going to level off over time; just the opposite. According to Dynatrace’s 2024 State of Observability Report:

- 86% of technology leaders say the amount of data that cloud-native technology stacks produce is beyond any human’s ability to manage

- 85% say the costs of storing and analyzing logs are soaring and outweigh the benefits

- 81% agree that manual approaches to log management and analytics cannot keep pace with the rate of change of tech stacks and the volumes of data being created

If skyrocketing telemetry data volumes didn’t come with inflated costs, a lot of organizations could get by. However, the reality is that telemetry data is becoming exceedingly expensive to manage, precisely because it is incredibly valuable to organizations, which use it to monitor the health, performance, and security of software, applications, and environments.

That’s why the clearest indicator of rising telemetry volumes is your monthly observability or SIEM bill. Whether it’s an observability tool or a SIEM, the solutions used to analyze and monitor telemetry data charge for the amount of telemetry they ingest.

According to Gartner, the cost and complexity associated with managing telemetry data can exceed $10 million annually for large enterprises. Any organization paying $10 million per year to observe its telemetry data is facing monthly costs of around $833,000. Perhaps these expenditures are not an issue for the top one percent of enterprises, but they’re completely unsustainable for everyone else.

Clearly, adjustments need to be made to account for and handle this growing ocean of telemetry data, and teams have been struggling to do just that.

Unsustainable Strategies to Reduce Telemetry Costs

In an effort to avoid completely handing over monthly budgets to monitoring solutions, teams have looked for alternative paths.

A lot of those attempts boil down to sampling, or extracting random selections from raw telemetry data and sending that to their preferred observability or security platforms to get a sense of what’s going on within systems. Alternatively, teams might just outright omit complete portions of their telemetry data, like events. These practices may reduce costs, but they also can lead to blind spots when critical incidents or outages occur.

And even if teams find temporary success this way, it’s not sustainable. As the volume of your telemetry data grows, you’ll end up scrapping larger and larger portions of that data in the name of cost cutting. In the process, you’ll be missing out on more and more valuable signals and issues that need resolution.
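To make the blind-spot risk concrete, here is a minimal sketch of uniform random sampling. The event stream, the 10% rate, and the three error events are all made up for illustration; the point is only that a random sampler has no idea which events matter, so rare critical events are kept or dropped by chance.

```python
import random

def sample(events, rate, seed=0):
    """Keep each event independently with probability `rate`."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    return [e for e in events if rng.random() < rate]

# Hypothetical stream: 10,000 routine events with 3 critical errors mixed in.
events = [{"level": "info", "id": i} for i in range(10_000)]
for pos in (1_234, 5_678, 9_012):
    events[pos] = {"level": "error", "id": pos}

kept = sample(events, rate=0.10)
errors_kept = sum(1 for e in kept if e["level"] == "error")

print(f"kept {len(kept)} of {len(events)} events")
print(f"errors surviving the sample: {errors_kept} of 3")
```

Lowering `rate` to save more money only widens the gap between what happened and what your platform gets to see.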

Telemetry pipelines can help teams break out of these patterns by pre-processing data before it’s sent to a pricey observability or security platform.

When data is processed before it’s routed to an observability or security platform, it can be reformatted or reduced in size. By stripping away extraneous fields from logs, for instance, you can significantly decrease the amount of information you’re asking your premium platforms to ingest and analyze. And that, in turn, can substantially reduce costs.
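As a rough illustration of field stripping, the sketch below keeps only an allow-list of fields and compares the serialized size before and after. The record, the field names, and the allow-list are hypothetical; which fields count as extraneous depends entirely on what your downstream tools actually use.

```python
import json

# Hypothetical allow-list: the only fields the downstream platform needs.
KEEP_FIELDS = {"timestamp", "level", "service", "message"}

def strip_fields(record, keep=KEEP_FIELDS):
    """Return a copy of the record containing only the fields in `keep`."""
    return {k: v for k, v in record.items() if k in keep}

def size_bytes(record):
    """Size of the record when serialized as compact UTF-8 JSON."""
    return len(json.dumps(record, separators=(",", ":")).encode("utf-8"))

# Made-up log record carrying metadata nobody queries downstream.
raw = {
    "timestamp": "2024-05-01T12:00:00Z",
    "level": "error",
    "service": "checkout",
    "message": "payment declined",
    "pod_labels": {"app": "checkout", "pod-template-hash": "abc123"},
    "host_kernel": "5.15.0-105-generic",
    "agent_version": "1.2.3",
}

slim = strip_fields(raw)
saved = 1 - size_bytes(slim) / size_bytes(raw)
print(f"{size_bytes(raw)} -> {size_bytes(slim)} bytes ({saved:.0%} smaller)")
```

Because ingestion is typically billed by volume, a per-record reduction like this compounds across every log line you ship.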

How Telemetry Pipelines Work

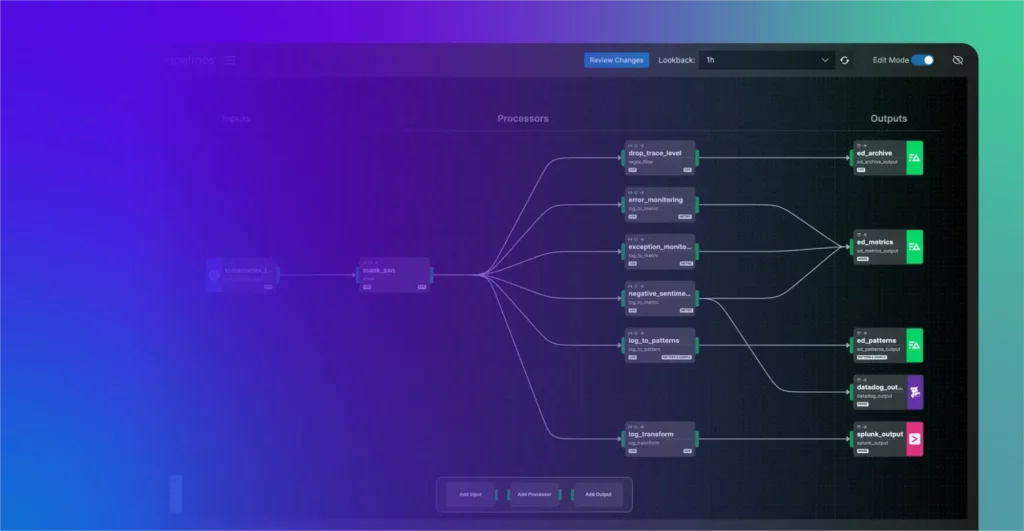

As with any pipe, there are three fundamental components: the input, the interior, and the output. From a visual perspective, telemetry pipelines are similar, but there’s a bit more that happens during the data flow. In this section, we’ll break down each piece of the pipeline.

Sources

Sources are the systems from which data is collected, sometimes with the help of data collection agents. For instance, you could be collecting telemetry from Kubernetes clusters, databases, devices, and applications, to name a few. Ideally, you want any agents to run as close to your resources as possible.

In cases where you don’t own the resource generating telemetry data — such as capturing logs from a content delivery network (CDN) or a load balancer — you can deploy a hosted agent to collect the forwarded data from these external sources.

Processors

This is the section of the pipeline where all the data processing occurs. Depending on the data you’re working with, you can pick and choose which types of processing to apply. Common processors include ones that convert logs to metrics, or that filter, enrich, or mask different parts of your telemetry.
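The processor types mentioned above can be sketched as small functions chained together. This is a toy model, not how any particular pipeline product implements processors: the function names, the `env` tag, and the email pattern are assumptions chosen for illustration.

```python
import re
from collections import Counter

# Simplified pattern for illustration; real PII detection is more involved.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

def drop_debug(record):
    """Filter: discard debug-level records by returning None."""
    return None if record.get("level") == "debug" else record

def add_env(record):
    """Enrich: attach a (hypothetical) environment tag."""
    return {**record, "env": "prod"}

def mask_emails(record):
    """Mask: redact anything that looks like an email address."""
    return {**record, "message": EMAIL.sub("[REDACTED]", record["message"])}

def run(records, processors):
    """Pass each record through the processors in order; None drops it."""
    out = []
    for record in records:
        for proc in processors:
            record = proc(record)
            if record is None:
                break          # record was filtered out
        else:
            out.append(record)  # record survived every processor
    return out

def logs_to_metric(records):
    """Log-to-metric: collapse records into a count per log level."""
    return Counter(r["level"] for r in records)

logs = [
    {"level": "debug", "message": "cache warm"},
    {"level": "error", "message": "login failed for jane@example.com"},
    {"level": "info", "message": "request served"},
]

processed = run(logs, [drop_debug, add_env, mask_emails])
print(processed)
print(logs_to_metric(processed))
```

Keeping each processor as a single-purpose function makes it easy to reorder, add, or remove steps without touching the rest of the chain.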

Destinations

Lastly, as with any pipe, there is a destination at the end. With telemetry pipelines, destinations are where data is routed after passing through the processors. These include observability platforms, security platforms, and storage solutions, or sometimes all three.
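Routing to more than one destination can be pictured as a simple fan-out. In the sketch below, the destination names (`archive`, `observability`, `siem`) and the routing rules are hypothetical; the idea is that every record gets archived cheaply while only selected records reach the premium platforms.

```python
from collections import defaultdict

def route(record):
    """Return the (hypothetical) destination names for one record."""
    destinations = ["archive"]  # every record gets a low-cost copy
    if record.get("level") in {"warn", "error"}:
        destinations.append("observability")
    if record.get("category") == "auth":
        destinations.append("siem")
    return destinations

def dispatch(records):
    """Group records by destination, copying to each one that applies."""
    outbox = defaultdict(list)
    for record in records:
        for destination in route(record):
            outbox[destination].append(record)
    return dict(outbox)

records = [
    {"level": "info", "category": "http", "message": "request served"},
    {"level": "error", "category": "http", "message": "upstream timeout"},
    {"level": "info", "category": "auth", "message": "password reset"},
]

for destination, batch in dispatch(records).items():
    print(destination, len(batch))
```

In a real pipeline each destination would have its own sender and format; here the outbox is just an in-memory dictionary.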

Types of Telemetry Pipelines

It’s worth noting that not all telemetry pipelines are built equally. Here are some examples.

Homegrown Telemetry Pipelines

Some teams build their own pipelines. That could work if you have a large enough organization where you can devote certain team members to strictly managing pipelines, but most companies simply do not have that bandwidth.

Plus, even if you’re handling pipelines in-house, it’s not a straightforward process. Teams must often create separate configuration files that reference each other across various data sources. Manually constructing these extensive configurations introduces complexity, which only increases with scale. Additionally, there is often minimal or no support for validating pipeline configurations or monitoring them after deployment.
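To give a sense of the validation work a homegrown pipeline leaves to you, here is a minimal sketch that checks a pipeline definition for dangling references. The config schema (a `nodes` mapping plus a list of `links`) is invented for this example and does not correspond to any specific tool’s format.

```python
def validate(config):
    """Return a list of problems found in a (hypothetical) pipeline config."""
    errors = []
    nodes = config.get("nodes", {})
    links = config.get("links", [])

    # Every link must point at nodes that are actually defined.
    for src, dst in links:
        for name in (src, dst):
            if name not in nodes:
                errors.append(f"link {src} -> {dst}: unknown node '{name}'")

    # Every defined node should appear in at least one link.
    linked = {name for link in links for name in link}
    for name in nodes:
        if name not in linked:
            errors.append(f"node '{name}' is not connected to anything")

    return errors

config = {
    "nodes": {
        "k8s_logs": "source",
        "mask_pii": "processor",
        "archive": "destination",
    },
    # The second link misspells the destination name.
    "links": [("k8s_logs", "mask_pii"), ("mask_pii", "s3_archive")],
}

for problem in validate(config):
    print(problem)
```

Checks like these are easy to write for one file, but they become their own maintenance burden once configurations span many files and teams.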

Platform-Specific Telemetry Pipelines

Some observability platforms have rolled out their own versions of pipelines. If you opt for a pipeline product from a premium observability vendor, for instance, it may help you gain control over your log volume, but you can be sure the vendor won’t be aiming to reduce your investment in its offerings.

Edge-Based Telemetry Pipelines

Edge-based pipelines are a step forward in pipeline architecture, providing greater flexibility and balance compared to traditional cloud models, which are typically centralized. By moving the processing stage closer to the data sources within your environment, transformation and routing operations become significantly less of a drain on resources.

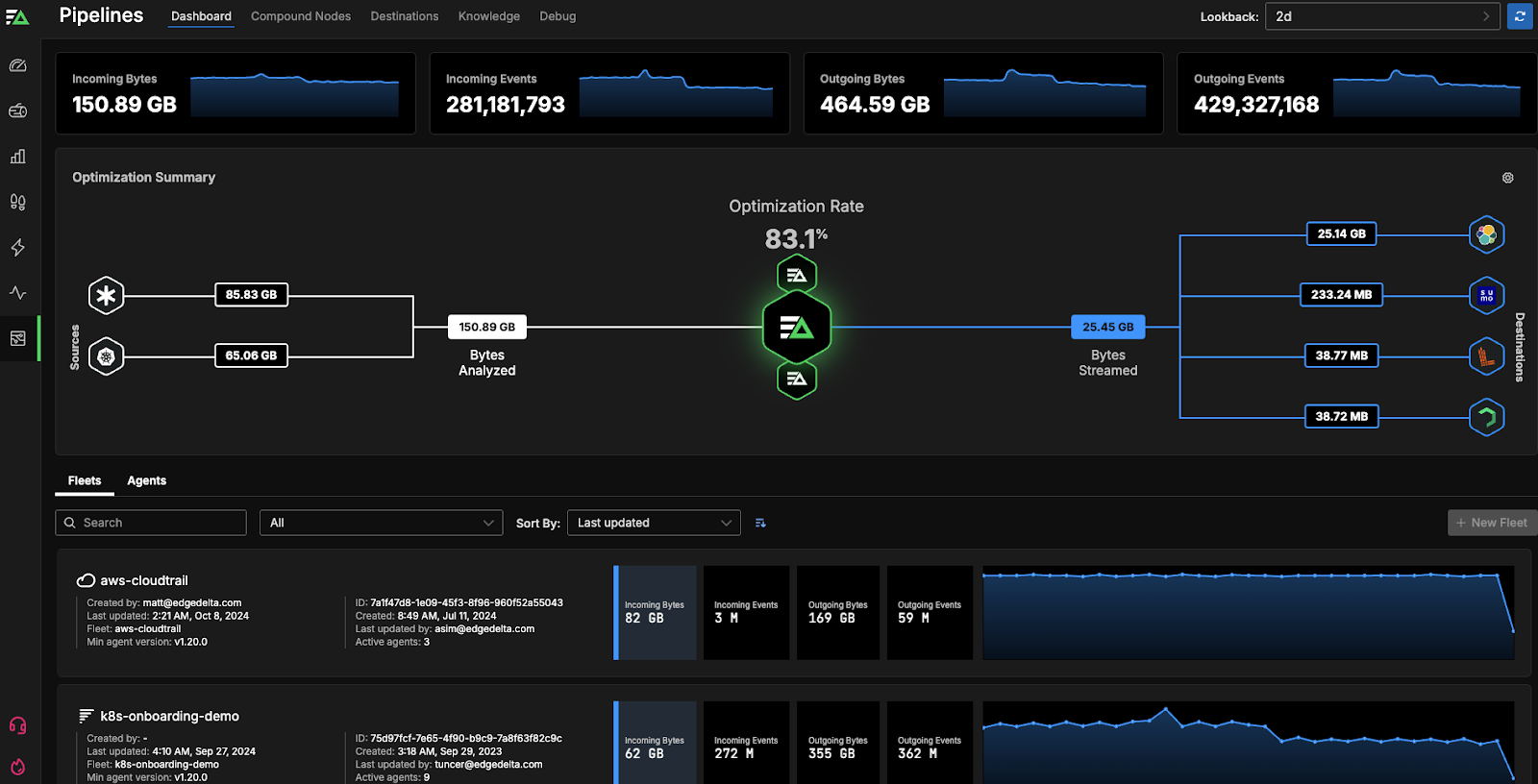

Edge Delta’s Telemetry Pipelines, for example, pull your data straight from its source, as it’s created, which is fundamentally different from most other pipelines.

From there, pipelines can be quickly created by selecting from our extensive library of pre-built processors — including PII masking, log to metric conversion, log event enrichment, and more — to aggregate, enrich, transform, and standardize your telemetry data.

After processing your data, our pipelines allow you to route it — in any format, including OpenTelemetry — to any downstream destination, including:

- Observability platforms

- SIEM platforms

- Cold storage options

Edge Delta’s intuitive, visual interface also allows you to quickly build, test, and monitor telemetry pipelines with self-service features and role-based access control.

Benefits of Telemetry Pipelines

To recap, here are some of the upsides to using telemetry pipelines:

Cost Reduction

By sending only high-value data to premium platforms, and shipping a full copy of all your raw data into affordable storage options, you will drastically reduce your team’s monthly expenses.

More Control and Clarity

Stripping away the noisiest segments of your data, distilling the signals, and extracting higher-level patterns for automated analysis provides a more accurate view of the overall health of your system.

Avoid Vendor Lock-In

If you’re using a vendor-agnostic telemetry pipeline solution, you’ll be free from any rigid proprietary platform standards, and able to use whichever tools best suit your team’s needs.

To get the most benefits from your telemetry pipelines, your best bet is to go with an accessible, vendor-agnostic solution that will:

- Simplify pipeline workflows around logs, metrics, traces, and events

- Provide transparency pre-deployment and in production

- Enable developer self-service

- Give you complete control over your telemetry

- Allow you to route to any downstream destination

If you’re interested in immediately seeing how a solution like that could work, check out our playground.

Do You Need Telemetry Pipelines?

It’s possible the amount of telemetry data your organization is handling is just fine right now. If you’re not draining your budget every month with your observability or SIEM bills, there’s no rush to delve into telemetry pipelines. Even so, remember that data volumes will continue to grow, so even if you don’t need pipelines now, there’s a good chance you will later on.

But if you’re among the many organizations seeing unsustainable monthly observability or security bills, you may want to take a look at your options when it comes to telemetry pipelines. And since you’re already here, consider signing up for a free trial or hopping into our interactive playground.