Anomaly detection (or outlier detection) examines data to find patterns that do not conform to expected behavior. The process provides significant insights into a system’s health, behavior, and security.

By promptly identifying irregularities, anomaly detection helps organizations address potential issues before they escalate into severe problems. It also helps secure the system’s continuity and reliability, making it a vital component of modern data analysis and security strategies.


Continue reading to learn more about what anomaly detection is and why it matters in data management.

What is Meant By Anomaly Detection? Full Definition and Process

Anomaly detection refers to identifying data points, events, or observations that stray from the normal behavior of the dataset. The detected irregularities can indicate critical incidents like security breaches, failing components, or areas that need improvement.

The anomaly detection process works by baselining datasets to understand normal patterns of behavior. From there, your analytics tool can identify when data is trending in an unusual or unexpected manner. Deviations can be identified by setting thresholds on metrics or using advanced AI/ML algorithms.
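Here is a minimal Python sketch of the baseline-and-threshold idea. It assumes the metric is a list of historical latency values in milliseconds. It uses the 99th percentile of that history as an upper threshold. The metric, the percentile, and the function names `learn_threshold` and `flag_anomalies` are hypothetical choices for illustration. They are not part of any product API.

```python
import statistics

def learn_threshold(history, percentile=99):
    """Baseline step: derive an upper threshold from historical metric values.

    statistics.quantiles(..., n=100) returns 99 cut points (p1..p99),
    so the p-th percentile sits at index p - 1 (valid for 1 <= p <= 99).
    """
    cut_points = statistics.quantiles(history, n=100)
    return cut_points[percentile - 1]

def flag_anomalies(new_values, threshold):
    """Detection step: keep only new values above the learned threshold."""
    return [v for v in new_values if v > threshold]

# Hypothetical history: latencies of 1..100 ms observed during normal operation.
history = list(range(1, 101))
threshold = learn_threshold(history)
print(flag_anomalies([50, 120, 99, 101], threshold))  # → [120, 101]
```

A percentile threshold only catches unusually high values. A two-sided band, or one of the ML methods below, is needed when drops matter too.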

Learn more about how anomaly detection works in the next section.

Anomaly Detection Techniques and Algorithms

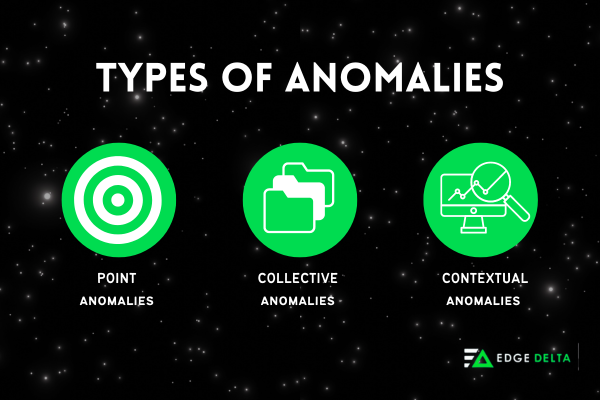

Anomalies generally fall into three types:

- Point Anomalies – occur when individual data points deviate from the rest of the data. These anomalies stand out because their values are unusual or rare compared to most data points in the dataset.

- Collective Anomalies – occur when a collection of related data points deviates from the entire dataset, even though the individual data points within the collection might not be anomalous on their own. This type is often found in time series or spatial data.

- Contextual Anomalies – occur when a data point is anomalous only within a specific context. The context can be a situation, environment, or condition under which the data was generated.
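The difference between a point check and a contextual check can be sketched in a few lines of Python. The example assumes each observation carries a context label. Here that label is a hypothetical "day"/"night" split of request rates. The code learns a separate mean and standard deviation for each context.

A global baseline treats 900 requests per minute as ordinary, because 900 falls between the daytime and nighttime levels. The per-context baseline flags the same value as anomalous at night. The helper names and the 3-standard-deviation cutoff are assumptions for illustration.

```python
import statistics
from collections import defaultdict

def fit_baselines(history):
    """Group (context, value) pairs and learn a (mean, stdev) baseline per context."""
    groups = defaultdict(list)
    for context, value in history:
        groups[context].append(value)
    return {ctx: (statistics.fmean(vals), statistics.pstdev(vals))
            for ctx, vals in groups.items()}

def is_anomalous(value, mean, stdev, k=3.0):
    """True if value lies more than k standard deviations from the mean."""
    if stdev == 0:
        return value != mean  # flat baseline: any change counts as a deviation
    return abs(value - mean) / stdev > k

# Hypothetical requests-per-minute history, labelled with its context.
history = ([("day", v) for v in (1000, 980, 1020, 1010, 990, 1005, 995)]
           + [("night", v) for v in (100, 110, 90, 105, 95)])

all_values = [v for _, v in history]
global_mean, global_sd = statistics.fmean(all_values), statistics.pstdev(all_values)
baselines = fit_baselines(history)

print(is_anomalous(900, global_mean, global_sd))  # point check against all data: False
print(is_anomalous(900, *baselines["night"]))     # contextual check for night: True
```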

There are three ways to identify the aforementioned anomalies: unsupervised, semi-supervised, and supervised. Explore how each technique works below.

- Supervised Anomaly Detection
  - Supervised anomaly detection trains a model on labeled examples of both normal data and anomalies. This technique applies standard classification algorithms to learn the features that distinguish what’s normal from what’s not.
  - The trained model can then label new observations as normal or anomalous. It is most effective when a substantial amount of labeled data is available, since the model learns directly from examples of both classes.

- Semi-Supervised Anomaly Detection
  - Semi-supervised anomaly detection uses a small labeled subset of the data, usually labeled as normal, while the vast majority remains unlabeled.
  - The technique has a model learn what normal data looks like. It then identifies anomalies as deviations from that learned normalcy. This approach is useful when only a limited amount of labeled data is available.

- Unsupervised Anomaly Detection
  - Unsupervised anomaly detection identifies outliers in datasets without any labeled data. It assumes that anomalies are data points that deviate significantly from the bulk of the data distribution. It uses various algorithms to detect them by analyzing the data’s intrinsic structure and patterns. It is especially useful when labeled data is scarce or nonexistent.
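The semi-supervised idea can be sketched with a simple nearest-neighbor distance: learn normal behavior first, then score deviations from it. This is one of many possible techniques. It is used here only because it needs no external libraries.

The sketch assumes the labeled subset contains only normal 2-D points. The threshold is the largest leave-one-out distance from a normal point to its k-th nearest normal neighbor. The function names and `k=3` are hypothetical choices.

```python
import math

def kth_neighbor_distance(point, reference, k):
    """Distance from point to its k-th nearest neighbor in reference."""
    distances = sorted(math.dist(point, other) for other in reference)
    return distances[k - 1]

def fit_threshold(normal, k=3):
    """Learn normalcy: the largest k-NN distance seen among labeled-normal points.

    Each point is scored against the other normal points (leave-one-out),
    so it is not counted as its own neighbor.
    """
    return max(
        kth_neighbor_distance(p, normal[:i] + normal[i + 1:], k)
        for i, p in enumerate(normal)
    )

def score_unlabeled(points, normal, threshold, k=3):
    """Flag unlabeled points that sit farther from the normal data than the threshold."""
    return [kth_neighbor_distance(p, normal, k) > threshold for p in points]

# Hypothetical labeled-normal data: a 5 x 5 grid of readings.
normal = [(x, y) for x in range(5) for y in range(5)]
threshold = fit_threshold(normal)
print(score_unlabeled([(2.5, 2.5), (10, 10)], normal, threshold))  # [False, True]
```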

The anomaly detection techniques rely on various algorithms and methods. Some of the common ones are:

| Algorithm/Method | Description |
|---|---|
| Statistical Methods | These are traditional methods based on statistical models. Examples include the z-score, Grubbs’ test, and the Chi-square test. They are effective for simple, low-dimensional data but struggle with complex, high-dimensional data. |
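As a minimal example of the statistical approach, the z-score method below measures how many standard deviations each value sits from the mean. It flags anything beyond a cutoff. The cutoff of 3 and the sample readings are assumptions for illustration.

The outlier itself inflates the mean and standard deviation. As a result, very small samples, or several large outliers together, can mask one another.

```python
import statistics

def zscore_outliers(values, threshold=3.0):
    """Return (index, value) pairs whose absolute z-score exceeds the threshold."""
    mean = statistics.fmean(values)
    stdev = statistics.pstdev(values)
    if stdev == 0:
        return []  # all values identical: nothing stands out
    return [(i, v) for i, v in enumerate(values) if abs(v - mean) / stdev > threshold]

# Hypothetical sensor readings with one obvious spike at the end.
readings = [10, 11, 9, 10, 12, 10, 11, 9, 10, 10,
            11, 9, 10, 12, 10, 11, 9, 10, 10, 50]
print(zscore_outliers(readings))  # [(19, 50)]
```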

| Algorithm/Method | Description |
|---|---|
| Isolation Forest | This algorithm isolates anomalies instead of profiling normal data points. It randomly selects a feature, then picks a random split value between the maximum and minimum values of that feature, and repeats the process to build a tree. Anomalies are isolated in fewer splits, so they have shorter average path lengths. |
| Local Outlier Factor (LOF) | This method measures the local density deviation of a data point relative to its neighbors. Points with a substantially lower density than their neighbors are identified as outliers. |
| One-Class Support Vector Machine (SVM) | One-Class SVM learns a decision boundary to separate normal data from anomalies. It defines a region that captures most data points and labels points outside that region as outliers. |
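The Isolation Forest idea from the table can be sketched in plain Python. This is a simplified, from-scratch version for illustration, not scikit-learn’s `IsolationForest`. Each tree recursively picks a random feature and a random split between that feature’s minimum and maximum.

A point’s score comes from its average path length, using the standard Isolation Forest normalization s = 2^(-E[h]/c(n)). Scores close to 1 suggest anomalies. The tree count, sample size, seed, and data are assumptions.

```python
import math
import random

EULER_GAMMA = 0.5772156649

def avg_path_length(n):
    """c(n): average path length of an unsuccessful BST search over n points."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    return 2.0 * (math.log(n - 1) + EULER_GAMMA) - 2.0 * (n - 1) / n

def build_tree(points, depth, max_depth, rng):
    """Recursively split on a random feature at a random value in its [min, max] range."""
    if depth >= max_depth or len(points) <= 1:
        return {"size": len(points)}
    feature = rng.randrange(len(points[0]))
    values = [p[feature] for p in points]
    lo, hi = min(values), max(values)
    if lo == hi:  # simplification: stop rather than retry with another feature
        return {"size": len(points)}
    split = rng.uniform(lo, hi)
    return {
        "feature": feature,
        "split": split,
        "left": build_tree([p for p in points if p[feature] < split], depth + 1, max_depth, rng),
        "right": build_tree([p for p in points if p[feature] >= split], depth + 1, max_depth, rng),
    }

def path_length(point, node, depth=0):
    """Depth of the leaf the point falls into, plus c(size) for points left unresolved."""
    if "size" in node:
        return depth + avg_path_length(node["size"])
    branch = "left" if point[node["feature"]] < node["split"] else "right"
    return path_length(point, node[branch], depth + 1)

def isolation_scores(points, n_trees=100, sample_size=64, seed=0):
    """Anomaly score in (0, 1] per point; requires at least two points."""
    rng = random.Random(seed)
    m = min(sample_size, len(points))
    max_depth = math.ceil(math.log2(m))
    trees = [build_tree(rng.sample(points, m), 0, max_depth, rng) for _ in range(n_trees)]
    scores = []
    for p in points:
        mean_depth = sum(path_length(p, t) for t in trees) / n_trees
        scores.append(2.0 ** (-mean_depth / avg_path_length(m)))
    return scores

# Hypothetical data: a tight 8 x 8 grid plus one distant point.
points = [(x * 0.1, y * 0.1) for x in range(8) for y in range(8)] + [(5.0, 5.0)]
scores = isolation_scores(points)
most_anomalous = max(range(len(points)), key=scores.__getitem__)
print(points[most_anomalous], round(scores[most_anomalous], 2))
```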

| Algorithm/Method | Description |
|---|---|
| Autoencoders | An autoencoder trained on normal data learns to compress and reconstruct that data. New data points with a high reconstruction error are considered anomalies, since they differ from the patterns seen during training. |
| Generative Adversarial Networks (GANs) | This method trains a pair of networks, a generator and a discriminator, on normal data. The discriminator can flag anomalies by scoring a sample as unlikely to be real normal data. Anomalies may also be detected when the generator struggles to produce similar instances. |
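Real autoencoders are multi-layer neural networks built with a deep learning framework. The reconstruction-error principle can still be shown with a deliberately tiny stand-in. That stand-in is a linear autoencoder with one hidden unit and tied weights. It is trained by plain gradient descent on centered 2-D data.

It encodes a point as z = w · x and decodes it as x̂ = z w. Because it is linear, it can only learn the dominant direction of the normal data. Treat it as an illustration of the scoring idea, not a practical model. The data, learning rate, epoch count, and tolerance are assumptions.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def reconstruct(x, w):
    """Encode x to a single latent value z = w.x, then decode it back as z * w."""
    z = dot(w, x)
    return [z * wi for wi in w]

def reconstruction_error(x, w):
    """Squared distance between x and its reconstruction."""
    return sum((xi - ri) ** 2 for xi, ri in zip(x, reconstruct(x, w)))

def train_autoencoder(data, lr=0.01, epochs=3000):
    """Full-batch gradient descent on mean squared reconstruction error (tied weights)."""
    dim = len(data[0])
    w = [0.1] * dim  # small non-zero start (hypothetical choice)
    n = len(data)
    for _ in range(epochs):
        grad = [0.0] * dim
        for x in data:
            z = dot(w, x)
            r = [xi - z * wi for xi, wi in zip(x, w)]  # residual x - x_hat
            rw = dot(r, w)
            # gradient of ||x - (w.x) w||^2 with respect to w is -2 * (z * r + (r.w) * x)
            for d in range(dim):
                grad[d] += -2.0 * (z * r[d] + rw * x[d]) / n
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w

# Hypothetical "normal" training data: centered points along the line y = 2x.
normal = [(t / 10, 2 * t / 10) for t in range(-10, 11)]
w = train_autoencoder(normal)

# Hypothetical tolerance above the worst reconstruction error seen in training.
threshold = max(reconstruction_error(x, w) for x in normal) + 0.1
for point in [(0.5, 1.0), (1.0, -2.0)]:
    print(point, reconstruction_error(point, w) > threshold)
```

The point on the learned line reconstructs almost perfectly. The point off the line cannot be rebuilt from a single latent value, so its error exceeds the threshold.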

| Algorithm/Method | Description |
|---|---|
| K-Means Clustering | K-means clustering identifies data points far from their cluster centroids. These points are anomalies because they do not fit well into any cluster. |
| Density-Based Spatial Clustering of Applications with Noise (DBSCAN) | This algorithm groups closely packed points together. It then marks those that lie in low-density regions as outliers. |
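A compact DBSCAN sketch shows how density-based clustering turns low-density points into outliers. A core point has at least `min_samples` neighbors within `eps`, counting itself. Any point that is neither a core point nor reachable from one is labeled noise (-1).

This follows the standard DBSCAN procedure but is written for clarity rather than speed: every neighborhood query scans all points. The `eps` and `min_samples` values and the data are assumptions.

```python
import math

def region_query(points, i, eps):
    """Indices of all points within eps of points[i] (including i itself)."""
    return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

def dbscan(points, eps, min_samples):
    """Label each point with a cluster id, or -1 for noise (outliers)."""
    labels = [None] * len(points)  # None means "not visited yet"
    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_samples:
            labels[i] = -1  # noise for now; may later become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in neighbors if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # border point: joins the cluster but is not expanded
                continue
            if labels[j] is not None:
                continue  # already assigned to a cluster
            labels[j] = cluster
            j_neighbors = region_query(points, j, eps)
            if len(j_neighbors) >= min_samples:  # core point: keep expanding
                queue.extend(j_neighbors)
    return labels

# Hypothetical data: two dense groups and one isolated point.
points = [(0, 0), (0, 1), (1, 0), (1, 1), (0.5, 0.5),
          (10, 10), (10, 11), (11, 10), (11, 11), (10.5, 10.5),
          (5, 20)]
labels = dbscan(points, eps=1.5, min_samples=3)
print(labels)  # [0, 0, 0, 0, 0, 1, 1, 1, 1, 1, -1]
```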

Why is Anomaly Detection Important? Benefits and Use Cases

Anomaly detection is beneficial in cloud computing and big data environments. It helps maintain operational integrity, strengthens system security, and improves overall performance.

Besides cloud computing and big data, anomaly detection is also essential in other industries. Different sectors and domains use anomaly detection to enhance operations, products, and services.

Here are a few use cases of anomaly detection:

- Finance: Banks and other financial companies utilize anomaly detection to identify unusual transactions, often signaling malicious activities such as credit card fraud, insider trading, and money laundering.

- Healthcare: In healthcare, anomaly detection serves to identify unusual patient conditions, irregularities in clinical trials, or unexpected treatment responses.

- Manufacturing: Anomaly detection aids in determining whether products meet quality standards and helps identify areas in the production process that require improvement to prevent defects.

- Cybersecurity: By monitoring patterns and trends, anomaly detection identifies suspicious activities that could affect an IT environment, helping safeguard the system against potential breaches or unnecessary downtime.

- Observability: Anomaly detection can be used in observability and monitoring to trigger alerts. Instead of relying on engineers to define alert conditions and thresholds, anomaly detection can notify users when telemetry data deviates from normal behavior. This helps teams detect issues that could lead to downtime before they impact users.
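For telemetry alerting, the baseline usually has to follow the data as it arrives. This minimal streaming sketch assumes a single numeric metric, such as requests per second. It keeps a sliding window of recent values and raises an alert when a new value is more than `k` standard deviations from the window’s mean.

The class name, window size, warm-up length, and `k` are hypothetical. This is not a description of how Edge Delta or any other product implements alerting.

```python
import statistics
from collections import deque

class RollingDetector:
    """Flags values that deviate from a sliding-window baseline (hypothetical helper)."""

    def __init__(self, window=30, k=3.0, min_points=10):
        self.values = deque(maxlen=window)  # only the most recent observations
        self.k = k
        self.min_points = min_points        # warm-up: no alerts until enough history exists

    def observe(self, value):
        """Return True if value should trigger an alert, then add it to the window."""
        alert = False
        if len(self.values) >= self.min_points:
            recent = list(self.values)
            mean = statistics.fmean(recent)
            stdev = statistics.pstdev(recent)
            alert = stdev > 0 and abs(value - mean) > self.k * stdev
        self.values.append(value)
        return alert

detector = RollingDetector()
stream = [100, 102, 98, 101, 99, 100, 103, 97, 100, 101, 100, 99, 250, 100]
alerts = [i for i, v in enumerate(stream) if detector.observe(v)]
print(alerts)  # [12]
```

Every value enters the window, including anomalous ones, so a single spike widens the baseline for a while afterwards. Excluding alerted values avoids that, but it makes the detector slow to accept a genuine level shift.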

When it comes to anomaly detection, one of the best solutions is Edge Delta. It processes data in real time and uses AI and distributed analytics to identify outliers. Moreover, Edge Delta optimizes alerts based on the actual behavior of a customer’s environment to help reduce false positives.

Edge Delta’s anomaly detection feature helped Peerspace automate its monitoring and troubleshooting processes. The solution identified issues without manual setup or threshold definitions, leading to faster problem resolution.

Experience real-time, scalable, and accurate anomaly identification without the hassle of manually setting everything up. Try Edge Delta’s AI anomaly detection for 7 days to enhance your data processing!

Conclusion

Anomaly detection is a critical component of data analysis. It identifies irregularities that could represent relevant insights or potential threats across IT environments, which makes it central to improving and safeguarding systems.

With solutions like Edge Delta, which uses real-time data processing and AI-driven analytics, businesses can leverage anomaly detection to achieve a more secure and reliable architecture.

Anomaly Detection FAQs

What is the difference between forecasting and anomaly detection?

Forecasting uses historical data to predict future values based on past trends. Anomaly detection, meanwhile, uses available datasets to identify specific data points that deviate from the normal behavior of a system.

How do you tell the difference between anomaly and novelty detection?

Anomaly (outlier) detection finds outliers within an existing dataset, which may already contain anomalies. Novelty detection, on the other hand, identifies new observations that were not present during the model’s training phase and that deviate from the norm the model learned.

Why do you need machine learning for anomaly detection?

Machine learning (ML) is vital for anomaly detection because it automatically learns and adapts to irregular patterns in a dataset without hand-written rules. Its ability to process massive data volumes is also beneficial, especially when spotting anomalies in real time.