In this article, we’ll go over how we use Common Expression Language (CEL) at Edge Delta. CEL lets our users set up Observability Pipeline configurations and process data simply, because CEL is both predictable and easy to read.

But, first, why do we need an expression language in the first place?

It’s critical to create an abstraction between (a) data that passes through a pipeline and (b) the configurations that manipulate or use that data. To create this abstraction, we need an easy way to refer to and operate on the different parts of the data object. This is where an expression language becomes necessary. When you’re trying to get a product off the ground quickly, using an existing expression language saves a lot of time, especially when that language lets you define your own macros to extend its functionality.

Why Common Expression Language?

Using CEL allows us to provide a concise and extensible language that evaluates in linear time, terminates quickly on errors, and is memory-safe. Simply put, CEL is a confined set of grammar rules that, when evaluated on a data object, is guaranteed to produce an output quickly.

CEL is commonly used across the industry, for example in the Kubernetes API. It’s also quite intuitive for users to pick up. However, CEL is not a free-form templating language: users cannot define their own functions, set variables, or write if/else statement blocks. Conditional logic is limited to the ternary operator (condition ? a : b).

Simplicity of Use

To use CEL, all a user needs to know is how to refer to the payloads passing through the application. If an application exposes each payload as item, then writing the expression item["field_to_be_accessed"] accesses the field the user wants. The application code creates a CEL program from that expression and evaluates it on each data item, such as:

```
application_payload {
  field_to_be_accessed: "return_value"
}
```

The output from the expression will be “return_value”, which the application can then use.

If the user’s expression refers to a field that does not exist, the CEL program returns an error to the application code, which can then decide how to handle it. In some cases, it makes sense to return the error to the user so they can update their expressions. In other cases, the application code can fall back to reasonable defaults.
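To make that choice concrete, here is a minimal Python sketch of the application side. It does not call a real CEL library: the hypothetical evaluate_field helper stands in for evaluating item["<field>"] and, like CEL, raises an error when the key is missing. The names MissingFieldError, evaluate_field, and resolve, and the strict flag that picks between surfacing the error and using a default, are illustrative assumptions.

```python
from typing import Any, Mapping


class MissingFieldError(LookupError):
    """Raised when an expression refers to a field the payload does not have."""


def evaluate_field(item: Mapping[str, Any], field: str) -> Any:
    # Hypothetical stand-in for evaluating the CEL expression item["<field>"];
    # like CEL, it reports an error instead of silently returning nothing.
    if field not in item:
        raise MissingFieldError(f"no such key: {field}")
    return item[field]


def resolve(item: Mapping[str, Any], field: str, default: Any = None,
            strict: bool = False) -> Any:
    """Evaluate a field access, then either surface the error or use a default."""
    try:
        return evaluate_field(item, field)
    except MissingFieldError:
        if strict:
            raise  # return the error to the user so they can fix the expression
        return default  # fall back to a reasonable default


payload = {"field_to_be_accessed": "return_value"}
print(resolve(payload, "field_to_be_accessed"))          # return_value
print(resolve(payload, "missing_field", default="n/a"))  # n/a
```

Keeping the decision in one place (here, the strict flag) makes it easy to apply different policies to different kinds of configuration.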

The user can use logical operators, macro functions, and built-in operators to operate on the result of their expression, such as creating a new string or validating the field’s value.

```
retString = "This is the " + item["field_to_be_accessed"]
retBool = item["field_to_be_accessed"] == "return_value"
```

The resulting fields would be:

```
retString = "This is the return_value"
retBool = true
```

If these built-in functions do not suffice, the application can easily extend CEL with its own functions (or macros, as we call them here). It does so by declaring each function’s input and output types and writing its logic in the language of the application code.

One example where this is useful is merging fields that are maps. We can define a function merge(field_a map, field_b map) -> map that takes two maps, merges them, and returns the resulting map.

Given the following:

```
application_payload {
  field_a: {
    "name": "John"
  },
  field_b: {
    "age": 30
  }
}
```

…a user could write merge(item["field_a"], item["field_b"]) to get:

```
result {
  "name": "John",
  "age": 30
}
```

It is important to document the details of this function’s implementation so users understand its behavior, for example, which value wins when both maps contain the same key.
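For illustration, here is one way the host-side logic of merge could look in Python. This is a sketch, not Edge Delta’s implementation; in particular, the rule that keys from the second map overwrite keys from the first is an assumption, and it is exactly the kind of detail worth documenting. Registering the function with a CEL environment is omitted because that depends on the CEL library in use.

```python
from collections.abc import Mapping
from typing import Any, Dict


def merge(field_a: Mapping[str, Any], field_b: Mapping[str, Any]) -> Dict[str, Any]:
    """Return a new map containing the entries of field_a and field_b.

    Assumed collision rule: when both maps contain the same key,
    the value from field_b wins. Neither input is modified.
    """
    if not isinstance(field_a, Mapping) or not isinstance(field_b, Mapping):
        # Mirrors a CEL declaration of merge(map, map) -> map: other types are rejected.
        raise TypeError("merge expects two maps")
    result = dict(field_a)  # copy, so the caller's payload is left untouched
    result.update(field_b)  # one O(1) put per key in field_b
    return result


item = {"field_a": {"name": "John"}, "field_b": {"age": 30}}
print(merge(item["field_a"], item["field_b"]))  # {'name': 'John', 'age': 30}
```

Returning a fresh map rather than mutating field_a keeps the function side-effect free, which matches how CEL expressions are expected to behave.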

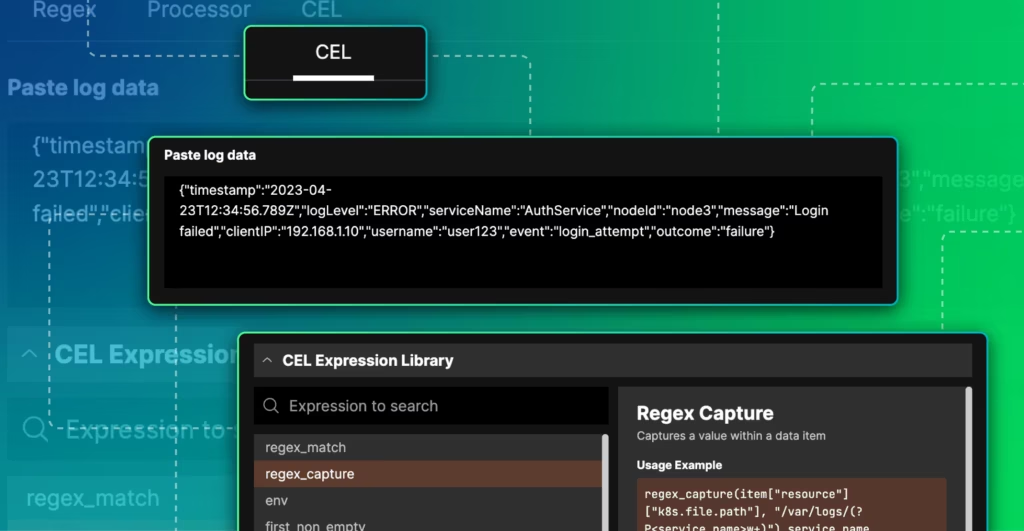

How CEL Powers Edge Delta Configs

Let’s walk through a real-world example of how CEL can simplify an Edge Delta configuration.

Let’s say our source receives an incoming JSON HTTP log like the one below. Our ultimate goal is to create a metric based on certain fields within the log.

```
'{"timestamp":"2024-01-22T12:34:56.789Z","method":"GET","response_time":25, "resp_time": 30, "headers":{"content-type":"application/json","authorization":"Bearer your_token_here"}}'
```

A couple of details about the log that will guide this example:

- Either response_time or resp_time, or both, will be populated.
- The log is a JSON string.
- The headers are nested JSON.

First, let’s unpack all the parts of this JSON log into individual fields. The payloads that pass through our system follow OpenTelemetry (OTEL) standards, so the parsed fields are stored in the payload’s attributes.

We could define a CEL macro that parses the JSON for the user:

```
// parses the JSON at field_path and inserts the resulting object into the attributes
parse_json(field_path)

// example:
parse_json(item["body"])
```

The expression would result in the following:

```
{
  "_type": "log",
  "attributes": {
    "timestamp": "2024-01-22T12:34:56.789Z",
    "method": "GET",
    "response_time": 25,
    "resp_time": 30,
    "headers": {
      "content-type": "application/json",
      "authorization": "Bearer your_token_here"
    }
  },
  "body": '{"timestamp":"2024-01-22T12:34:56.789Z","method":"GET","response_time":25, "resp_time": 30, "headers":{"content-type":"application/json","authorization":"Bearer your_token_here"}}', // incoming log
  ... // other fields
}
```
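The behavior described for parse_json can be sketched in Python with the standard json module. The payload shape (a dict with _type, body, and attributes) mirrors the example above. Unlike the CEL call, which receives item["body"] directly, this hypothetical version takes the whole payload plus a field name so it can write the attributes; merging the parsed keys into any existing attributes is also an assumption.

```python
import json
from typing import Any, Dict


def parse_json(payload: Dict[str, Any], field: str = "body") -> Dict[str, Any]:
    """Parse the JSON string in payload[field] and insert its keys into attributes.

    Returns a new payload; raises ValueError if the field is not a JSON object.
    """
    parsed = json.loads(payload[field])  # json.JSONDecodeError is a ValueError subclass
    if not isinstance(parsed, dict):
        raise ValueError(f"{field} does not contain a JSON object")
    out = dict(payload)
    out["attributes"] = {**payload.get("attributes", {}), **parsed}
    return out


log = {
    "_type": "log",
    "body": '{"timestamp":"2024-01-22T12:34:56.789Z","method":"GET","response_time":25, '
            '"resp_time": 30, "headers":{"content-type":"application/json",'
            '"authorization":"Bearer your_token_here"}}',
}
parsed_log = parse_json(log)
print(parsed_log["attributes"]["method"])                   # GET
print(parsed_log["attributes"]["headers"]["content-type"])  # application/json
```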

Let’s bring the headers up to the top level of the attributes, alongside the other attributes, to make them easier to work with. To do this, we can define a transformation that uses the merge macro defined previously to merge the attributes map with the nested headers map.

```yaml
transformation:
  - field_path: attributes # where the value will get upserted
    operation: upsert # operation applied on the field path
    value: merge(item["attributes"], item["attributes"]["headers"])
```

Here’s what we end up with in the attributes (the rest of the log stays the same):

```
{
  "_type": "log",
  "attributes": {
    "timestamp": "2024-01-22T12:34:56.789Z",
    "method": "GET",
    "response_time": 25,
    "resp_time": 30,
    "headers": { // merge leaves the original nested map in place
      "content-type": "application/json",
      "authorization": "Bearer your_token_here"
    },
    "content-type": "application/json",
    "authorization": "Bearer your_token_here"
  },
  ...
}
```
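Applying this transformation amounts to evaluating the value expression against the item and then upserting the result at field_path. In the Python sketch below, an ordinary function stands in for the CEL expression, apply_upsert is a hypothetical helper, and upsert is assumed to mean “insert the key, or overwrite it if it already exists.”

```python
from typing import Any, Callable, Dict

Item = Dict[str, Any]


def merge(a: Dict[str, Any], b: Dict[str, Any]) -> Dict[str, Any]:
    # Assumed semantics: keys from b overwrite keys from a; inputs are not modified.
    return {**a, **b}


def apply_upsert(item: Item, field_path: str, value: Callable[[Item], Any]) -> Item:
    """Evaluate `value` on the item and upsert the result at a top-level field_path."""
    out = dict(item)
    out[field_path] = value(item)  # insert or overwrite
    return out


item = {
    "_type": "log",
    "attributes": {
        "method": "GET",
        "response_time": 25,
        "resp_time": 30,
        "headers": {"content-type": "application/json",
                    "authorization": "Bearer your_token_here"},
    },
}

# Stand-in for: merge(item["attributes"], item["attributes"]["headers"])
flattened = apply_upsert(item, "attributes",
                         lambda it: merge(it["attributes"], it["attributes"]["headers"]))
print(sorted(flattened["attributes"]))
# ['authorization', 'content-type', 'headers', 'method', 'resp_time', 'response_time']
```

Because merge copies rather than moves, the nested headers map is still present after the upsert; removing it would take a separate operation.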

Suppose we also know that response_time is in seconds and we want it in milliseconds. We could apply a transform such as:

```
item["attributes"]["response_time"] * 1000
```

Next, we want to create a metric from the fields we extracted, based on either response_time or resp_time, depending on which exists in the log. Let’s say we have a CEL macro called first_non_empty that returns the value of the first non-empty field among its arguments. Below is a log-to-metric processor node; the only part to focus on is the section under dimension_groups.

```yaml
nodes:
  - name: http_metric
    type: log_to_metric
    pattern: '"method"\s*:\s*"[^"]+"' # matches all logs with a method field (HTTP logs)
    enabled_stats:
      - avg
    dimension_groups:
      - field_dimensions:
          - item["attributes"]["method"]
        field_numeric_dimension: first_non_empty(item["attributes"]["response_time"], item["attributes"]["resp_time"])
```

Above, we create a string attribute for the metric from the method field, so for our log this attribute is GET. We also create a numeric dimension that tells the metric which field holds its value: response_time or resp_time, whichever is non-empty first.
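The matching and selection steps of this node can be sketched in Python: the regex from pattern decides which logs the node processes, and a hypothetical first_non_empty picks the numeric value. What counts as “empty” (here, missing, None, or an empty string) is an assumption, and this sketch takes the attributes map plus candidate key names so that a missing field is skipped rather than raising; Edge Delta’s macro may define both differently.

```python
import re
from typing import Any, Mapping, Optional

# The node's pattern: a JSON "method" key whose value is a non-empty string.
HTTP_LOG = re.compile(r'"method"\s*:\s*"[^"]+"')


def first_non_empty(attrs: Mapping[str, Any], *keys: str) -> Optional[Any]:
    """Return the value of the first key that is present and not None or ""."""
    for key in keys:
        value = attrs.get(key)
        if value is not None and value != "":
            return value
    return None  # no candidate field was populated


raw = '{"method":"GET","resp_time": 30}'
attrs = {"method": "GET", "resp_time": 30}  # response_time is absent in this log

if HTTP_LOG.search(raw):
    numeric = first_non_empty(attrs, "response_time", "resp_time")
    print(attrs["method"], numeric)  # GET 30
```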

An example output of this processor for the log above (using the raw response_time value of 25) would be a metric item such as:

```
{
  ...
  "_type": "metric",
  "_stat_type": "avg",
  "name": "http_metric.count",
  "kind": "avg",
  "gauge": {
    "value": 25
  },
  "attributes": {
    "method": "GET"
  }
}
```
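To show what enabled_stats: avg implies, here is a Python sketch of the aggregation step: matching logs are grouped by their field dimension (method) and the numeric dimension is averaged per group. The function name, the idea of one call per aggregation interval, and the output layout (modeled loosely on the metric item above) are assumptions, not the agent’s actual implementation.

```python
from collections import defaultdict
from typing import Any, Dict, Iterable, List, Tuple


def aggregate_avg(name: str, rows: Iterable[Tuple[str, float]]) -> List[Dict[str, Any]]:
    """Average the numeric dimension per method, one metric item per group.

    `rows` holds (method, numeric_value) pairs extracted from the logs that
    matched during one aggregation interval.
    """
    sums: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for method, value in rows:
        sums[method] += value
        counts[method] += 1
    return [
        {
            "_type": "metric",
            "_stat_type": "avg",
            "name": name,
            "gauge": {"value": sums[method] / counts[method]},
            "attributes": {"method": method},
        }
        for method in sums
    ]


metrics = aggregate_avg("http_metric", [("GET", 25), ("GET", 35), ("POST", 10)])
for m in metrics:
    print(m["attributes"]["method"], m["gauge"]["value"])
# GET 30.0
# POST 10.0
```

With only the single log from this example, the GET group would average to 25.0, matching the gauge value shown above.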

With the power of CEL, we can transform the data and dynamically create this metric in a way that is robust to changes in the data, such as a log that populates resp_time instead of response_time. This shows how easily a configuration can use parts of the data passing through our system to guide the agent in processing that data into the user’s desired output. Plus, we can hand that power to the user and, wherever it makes sense, apply reasonable defaults on the user’s behalf.

Future Work

There are some challenges with CEL and improvements that can be made.

Macros often have to be narrowly focused, so slight differences in inputs, outputs, or functionality may require a new function definition, resulting in a larger macro library. Sanitizing or guiding user inputs, and strengthening validation on them, would let us extend each macro’s functionality.

It is also hard to validate expressions at compile time: many checks can only run at runtime, because whether a field exists isn’t known until a payload arrives. There are ways to handle this at runtime, such as checking for a field’s existence (for example, with CEL’s has() macro or the in operator, as in "response_time" in item["attributes"]) and applying defaults, or letting users specify the default behavior.

In many cases, expressions are applied to every data item that passes through the application, so it’s worth considering the complexity of custom function implementations. Take our merge macro: each merge of two maps takes O(n) time, because each get or put is O(1) and there are n fields. If each payload item involves O(m) merges in the worst case, we perform O(n * m) operations per payload.
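As a worked version of this estimate, let n be the number of fields per map, m the worst-case number of merges per payload, and P the number of payloads per second (P is introduced here only for illustration):

```latex
T_{\text{payload}} = \sum_{i=1}^{m} O(n) = O(n \cdot m),
\qquad
T_{\text{total}} = P \cdot O(n \cdot m) = O(P \cdot n \cdot m)
```

With illustrative values of n = 20, m = 5, and P = 10,000, that is on the order of 20 × 5 × 10,000 = 1,000,000 field operations per second, so even a linear-time custom function is worth keeping lean.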

Lastly, error handling can be cumbersome when multiple expressions applied to a single data item fail. It is important to report these errors back to the user in a way that is efficient for your application and insightful for the user. It may make sense to accumulate runtime errors and report them at an interval. For some errors, it may be enough to log at debug level and use reasonable defaults.
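One way to accumulate runtime errors and report them at an interval is sketched below in Python. Errors are counted per (expression, message) pair and returned as a deduplicated summary once the interval has elapsed. The class name, interval, and summary format are hypothetical; the clock is injectable so the behavior can be checked without waiting.

```python
import time
from collections import Counter
from typing import Callable, List, Optional


class ErrorAggregator:
    """Collects expression errors and reports a deduplicated summary per interval."""

    def __init__(self, interval_s: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.interval_s = interval_s
        self.clock = clock
        self.counts: Counter = Counter()
        self.last_flush = clock()

    def record(self, expression: str, error: Exception) -> Optional[List[str]]:
        """Record one error; return a summary if the interval has elapsed, else None."""
        self.counts[(expression, str(error))] += 1
        if self.clock() - self.last_flush >= self.interval_s:
            return self.flush()
        return None

    def flush(self) -> List[str]:
        summary = [f"{expr}: {msg} (x{n})" for (expr, msg), n in self.counts.items()]
        self.counts.clear()
        self.last_flush = self.clock()
        return summary


now = [0.0]  # fake clock for the example
agg = ErrorAggregator(interval_s=10, clock=lambda: now[0])
expr = 'item["attributes"]["resp_time"]'
first = agg.record(expr, ValueError("no such key: resp_time"))  # None: interval not elapsed
now[0] = 11.0
summary = agg.record(expr, ValueError("no such key: resp_time"))
for line in summary or []:
    print(line)  # item["attributes"]["resp_time"]: no such key: resp_time (x2)
```

Counting duplicates instead of storing every occurrence keeps memory bounded by the number of distinct failing expressions rather than by traffic volume.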