Author’s Note: This is Part 1 in our series on managing modern telemetry data volumes with Edge Delta’s flow control capabilities. A big thank you to my colleague, Chad Sigler, who has worked alongside me in countless customer interactions to simplify and automate the dynamic sampling approach described in this post.

Telemetry data requirements are inherently dynamic. When your environment is running smoothly, you don’t need to persist every log, metric, and trace in your observability platform — it’s far too costly, especially when working with modern data volumes. But sending everything downstream in full fidelity is necessary during a critical outage or security breach, as doing so ensures you have rich context around impacted services or components for troubleshooting.

Legacy observability solutions aren’t able to support both of these scenarios simultaneously, which forces teams to choose between skyrocketing observability costs and lost data that could have helped during incident response.

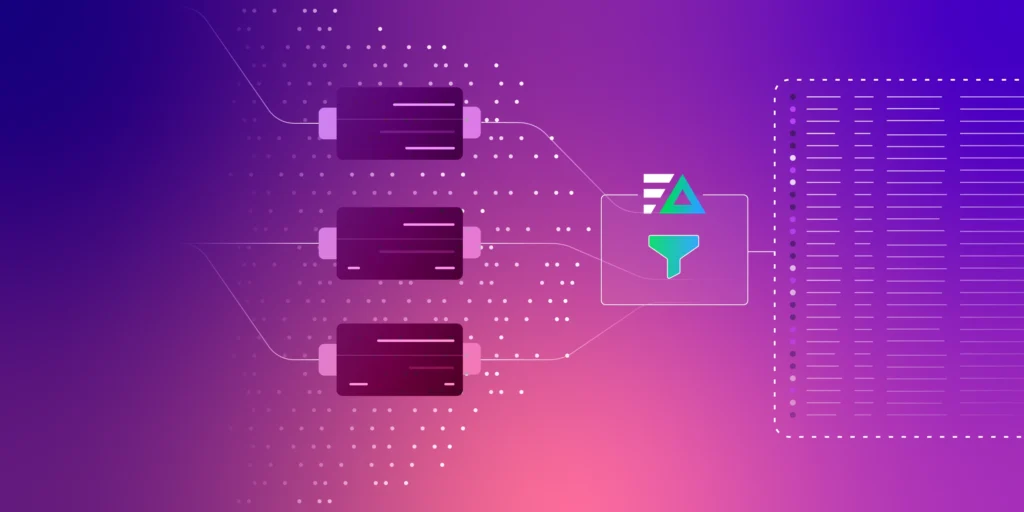

Edge Delta’s intelligent Telemetry Pipelines address this challenge by enabling teams to easily implement dynamic, scenario-based telemetry routing — known as flow control. By automating the transition between baseline volume optimizations and temporary full-fidelity routing, teams can ensure the right data is sent to the right place at exactly the right time. This approach reduces observability costs and alert fatigue, accelerates investigation workflows, and eliminates risky tradeoffs between cost and visibility.

In this post, we’ll dive deep into dynamic rate sampling — a primary implementation technique for flow control — and walk through how Edge Delta makes it easy to automate at scale.

Flow Control with Dynamic Rate Sampling

One of the most popular techniques used to reduce telemetry data volumes is sampling, which involves routing only a pre-defined subset of data to backend platforms to reduce costs without sacrificing significant visibility. But if sampling is too aggressive — especially during an incident — it can filter out the data needed to understand what went wrong, making root cause analysis slower and more difficult.

Dynamic rate sampling gives teams the ability to adjust how much data is sampled based on real-time conditions. With Edge Delta, teams can instantly adjust the sampling rate of their data at the source, dynamically dialing up full-fidelity logging for a deep investigation or dialing it down to control costs. And by integrating Edge Delta monitors into the workflow, teams can automate the switch based on when a specific alert triggers or turns off — ensuring sampling is disabled when they’re actively troubleshooting an issue.

Traditional implementations of dynamic sampling involve running several complex workflows to synchronize external events with telemetry data sampling rates. With Edge Delta, it’s as simple as adding a few processing nodes into a Telemetry Pipeline using our point-and-click interface.

How It Works: A Step-by-Step Breakdown

Dynamic rate sampling operates through a precise sequence of processing steps performed by the Edge Delta agent. Here’s how it works:

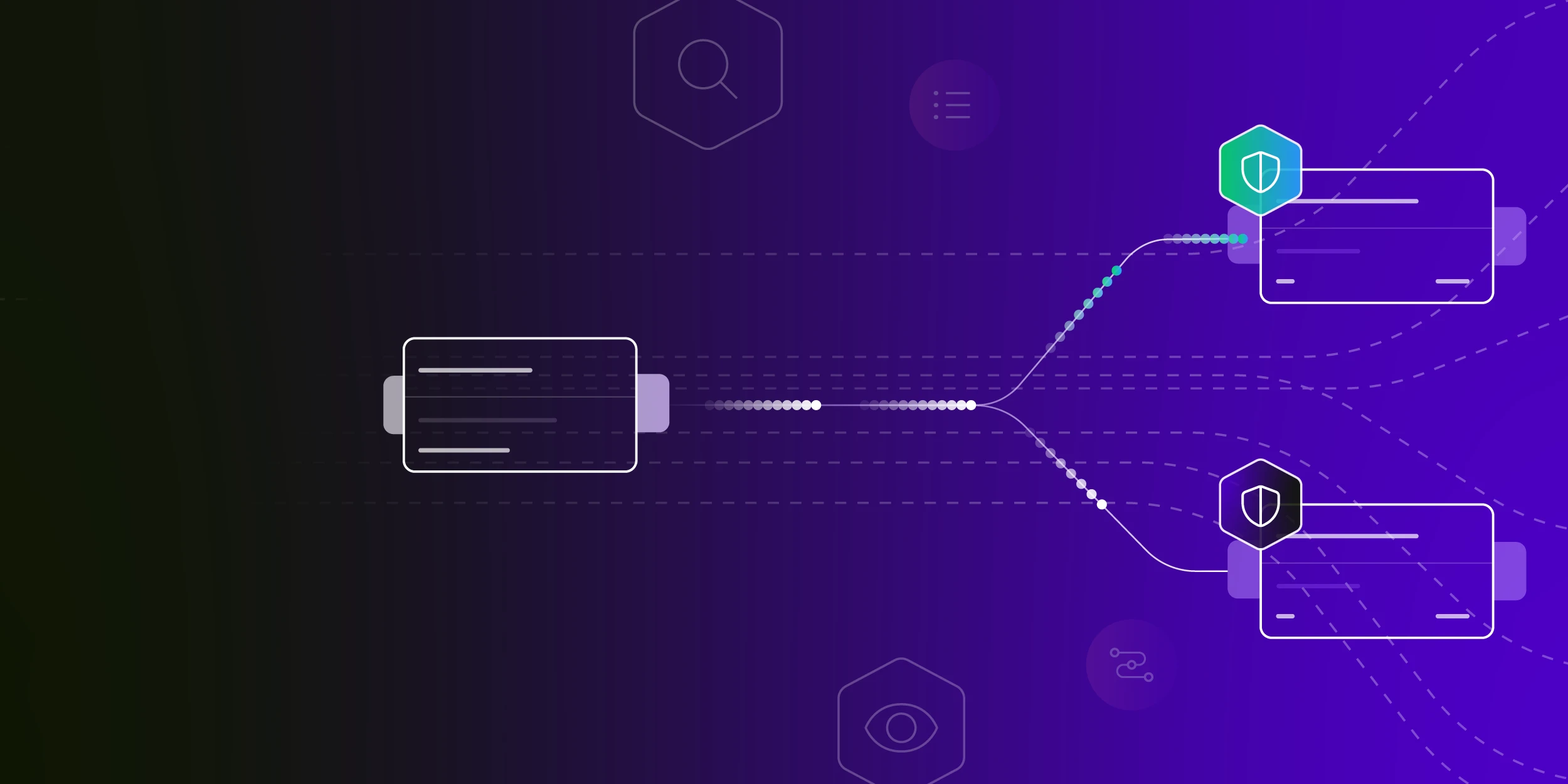

First, a custom OTTL (OpenTelemetry Transformation Language) statement prepares the incoming data by tagging each item with a default rate in its {attributes} section. This initial step is crucial, as it specifies the baseline sample rate that enables dynamic sampling on the specific telemetry objects you define.
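To make this first step concrete, here is a minimal Python sketch that models a telemetry item as a plain dictionary and stamps a default rate into its attributes. The attribute name `rate`, the dictionary layout, and the 10% default are assumptions for illustration only; in the pipeline this work is done by the OTTL statement (something along the lines of `set(attributes["rate"], 10)`), not by Python code.

```python
from typing import Any

# Hypothetical baseline: keep 10% of matching items when no override is active.
DEFAULT_RATE = 10


def tag_default_rate(item: dict[str, Any], default_rate: int = DEFAULT_RATE) -> dict[str, Any]:
    """Stamp the baseline sample rate (0-100) into the item's attributes.

    Models the intent of the first step: every item eligible for dynamic
    sampling leaves this step carrying a rate attribute.
    """
    attributes = item.setdefault("attributes", {})
    attributes["rate"] = default_rate
    return item


log = {"body": "GET /checkout 200", "attributes": {"service": "checkout"}}
tag_default_rate(log)
print(log["attributes"])  # {'service': 'checkout', 'rate': 10}
```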

The next processor in the sequence consults a lookup table to find the current flow rate (0-100%) and its corresponding expiration date. This lets you manage rates for different services or conditions centrally, and switch between the baseline rate established in the first step and an override specified in the lookup table.
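A lookup table like this can be sketched as a small CSV keyed by service. The column names `service`, `rate`, and `expires_at`, the ISO 8601 timestamp format, and keying by service are all assumptions; the post only says that each entry holds a rate (0-100%) and an expiration date.

```python
import csv
import io
from datetime import datetime

# Hypothetical lookup table contents; your real schema is whatever you define.
LOOKUP_CSV = """service,rate,expires_at
checkout,100,2030-01-01T00:00:00+00:00
search,50,2020-01-01T00:00:00+00:00
"""


def load_lookup(csv_text: str) -> dict[str, tuple[int, datetime]]:
    """Parse the table into {service: (rate, expires_at)}, validating the rate range."""
    table: dict[str, tuple[int, datetime]] = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        rate = int(row["rate"])
        if not 0 <= rate <= 100:
            raise ValueError(f"rate out of range for {row['service']}: {rate}")
        table[row["service"]] = (rate, datetime.fromisoformat(row["expires_at"]))
    return table


lookup = load_lookup(LOOKUP_CSV)
print(lookup["checkout"][0])  # 100
```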

The following processor then applies conditional logic to check whether the expiration date specified in the lookup table is in the past. If it is, the default sample rate set in the first step is applied. If the expiration date is still in the future, the system uses the rate pulled from the lookup table and stores it in the telemetry object’s {rate} attribute field.
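The expiration check can be modeled as a single comparison against the current time. This is a minimal sketch assuming items are dictionaries that already carry a default `rate` and a `service` attribute, and a lookup shaped as `{service: (rate, expires_at)}`; these names are hypothetical. Treating a missing entry the same as an expired one is a design choice of this sketch.

```python
from datetime import datetime, timedelta, timezone
from typing import Any


def resolve_rate(item: dict[str, Any],
                 lookup: dict[str, tuple[int, datetime]],
                 now: datetime) -> dict[str, Any]:
    """Overwrite attributes["rate"] with the lookup rate only while it is unexpired."""
    attributes = item["attributes"]
    entry = lookup.get(attributes.get("service"))
    if entry is not None:
        rate, expires_at = entry
        if expires_at > now:
            # Override still active: use the rate from the lookup table.
            attributes["rate"] = rate
    # Otherwise the default rate stamped earlier is left in place.
    return item


now = datetime(2025, 6, 1, tzinfo=timezone.utc)
lookup = {
    "checkout": (100, now + timedelta(hours=1)),  # active override
    "search": (50, now - timedelta(hours=1)),     # expired override
}
active = resolve_rate({"attributes": {"service": "checkout", "rate": 10}}, lookup, now)
expired = resolve_rate({"attributes": {"service": "search", "rate": 10}}, lookup, now)
print(active["attributes"]["rate"], expired["attributes"]["rate"])  # 100 10
```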

Finally, the Sample Processor executes the decision by referring to the rate attribute on the telemetry object. Based on this attribute, it uses probabilistic selection to sample the data, so that, on average, the configured percentage of data flows through the pipeline.
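Probabilistic selection driven by a per-item rate can be sketched as an independent random draw for each item. This is an illustration of the general technique, not Edge Delta's Sample Processor; it assumes every item already carries a `rate` attribute (0-100), as the earlier steps guarantee in the flow described above.

```python
import random
from typing import Any, Iterable


def sample(items: Iterable[dict[str, Any]], rng: random.Random) -> list[dict[str, Any]]:
    """Keep each item independently with probability attributes["rate"] / 100."""
    kept = []
    for item in items:
        rate = item["attributes"]["rate"]
        # rng.random() is uniform in [0, 1), so rate 0 drops everything
        # and rate 100 keeps everything.
        if rng.random() * 100 < rate:
            kept.append(item)
    return kept


rng = random.Random(42)
batch = [{"attributes": {"rate": 25}} for _ in range(10_000)]
kept = sample(batch, rng)
print(len(kept))  # close to 2,500; the exact count depends on the seed
```

A production sampler might instead hash a stable field, such as a trace ID, so that related items are kept or dropped together; this sketch uses independent draws for simplicity.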

The truly powerful part of this design is its simplicity for the end user. Once flow control is configured, there’s no need to edit pipelines or deploy changes: to “flip the switch,” a user simply updates the associated CSV file, either manually or via an automated trigger. This puts dynamic control directly in your hands, without any complex engineering effort.
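Flipping the switch then amounts to upserting one row in the CSV. The sketch below assumes the same hypothetical `service,rate,expires_at` schema and a helper named `set_override`; neither comes from Edge Delta, and how the agent picks up the updated file is outside its scope. Because each override carries its own expiration, it lapses automatically and no cleanup step is needed.

```python
import csv
import io
from datetime import datetime, timedelta, timezone

FIELDS = ["service", "rate", "expires_at"]  # hypothetical schema


def set_override(csv_text: str, service: str, rate: int,
                 duration: timedelta, now: datetime) -> str:
    """Return updated CSV text with a temporary rate override for one service."""
    rows = {r["service"]: r for r in csv.DictReader(io.StringIO(csv_text))}
    # Insert or replace the row for this service.
    rows[service] = {
        "service": service,
        "rate": str(rate),
        "expires_at": (now + duration).isoformat(),
    }
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows.values())
    return out.getvalue()


now = datetime(2025, 6, 1, 12, 0, tzinfo=timezone.utc)
table = set_override("service,rate,expires_at\n", "checkout", 100, timedelta(hours=2), now)
print(table)
# service,rate,expires_at
# checkout,100,2025-06-01T14:00:00+00:00
```

A hypothetical alert handler could call `set_override` with a rate of 100 when a monitor fires, and write an expiration in the past when it resolves to end full-fidelity routing early.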

Get Started with Flow Control

Edge Delta’s intelligent Telemetry Pipelines simplify flow control implementation and automation, enabling teams to dynamically adjust data processing — including rate sampling — to reduce costs, minimize downstream noise, and accelerate troubleshooting. Whether you’re an SRE focused on reliability, a security analyst hunting for threats, or an engineering leader managing a budget, Edge Delta provides the flexibility and control needed to manage observability and security data at scale.

If you’re curious about how flow control can help you manage your telemetry data, schedule a demo with one of our experts. You can also explore our intelligent Telemetry Pipelines for free in our interactive Playground.

In the next post in this series, we’ll show you how flow control solved a real-world crisis for one of our customers. It’ll be out soon — stay tuned!