Back in the 2000s, I fell in love with algorithms by creating a program to solve Sudoku. Recursion, stacks, Knuth’s Dancing Links — I remember watching in awe as my code backtracked through complex Sudoku puzzles in mere milliseconds.

Fast forward to 2025. My IDE now writes entire classes autonomously while I chat with an LLM, and the feeling is the same.

There’s that spark of “Wait, it can do that?”

The first time I connected WhatsApp to an LLM, it even guessed my wife’s name and nailed the perfect gift suggestion.

The advent of MCP is another of those “How is this even possible?” moments, and today I’m thrilled to announce that Edge Delta is joining that journey with the release of our own Edge Delta MCP Server.

What Is MCP, and What Is an MCP Server?

Model Context Protocol (MCP) is an open standard developed by Anthropic that streamlines communication between AI models and external data sources, tools, and environments. MCP follows a client-server architecture, which includes three primary components:

- MCP Hosts: AI-powered applications (e.g., Claude, Windsurf, or OpenAI-powered apps) that collect user prompts and manage MCP clients.

- MCP Clients: Components inside the host, each maintaining a one-to-one connection with an MCP server.

- MCP Servers: Programs that expose tools, resources, and prompts to MCP clients and bridge them to external systems.

With MCP, developers can abstract away the specifics of agent-to-tool API integrations and focus on using AI to enhance their workflows.

Edge Delta’s MCP Server Is Live

Edge Delta’s MCP Server provides the context, tools, and prompts needed to transform user-generated LLM requests into structured, real-time responses. Our server sits between your favorite developer tools and the Edge Delta platform, so you can bring generative AI directly and effectively into your observability workflows.

Why It Matters

By communicating with our MCP Server, you can intelligently sift through telemetry data and optimize pipeline configurations with ease:

- Instant root cause analysis: ask “Why did error X spike?” and get log patterns, metric deltas, and probable root causes in one answer.

- Adaptive pipelines: let the model suggest sampling rules or suppression triggers based on live traffic.

- Effortless cross-tool orchestration: ask once and let the LLM weave Edge Delta anomalies together with Slack conversations and AWS KB fixes, no glue code needed.

Our MCP Server in Action

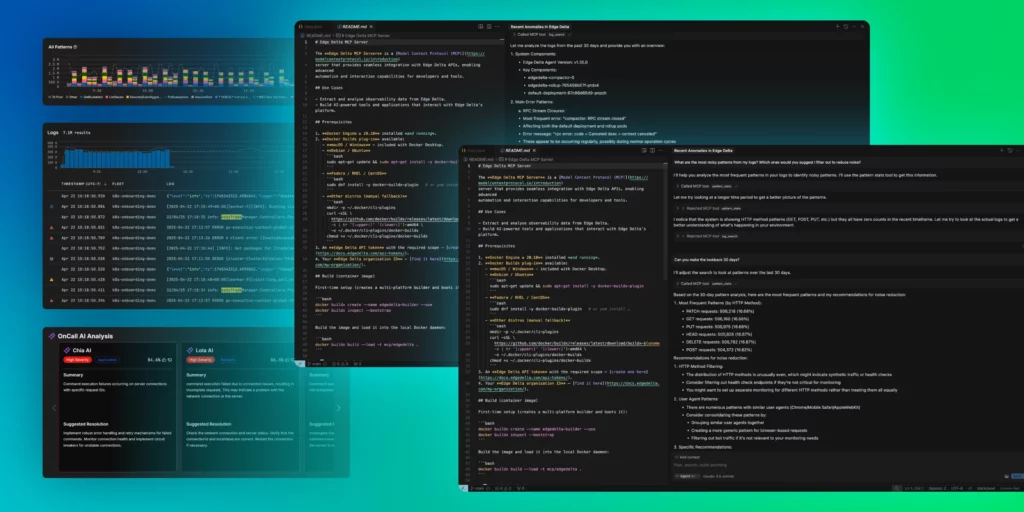

Once our MCP Server is configured, Edge Delta users can inspect their telemetry data from the comfort of their IDE. For instance, a user who wants to learn more about recent anomalies and search the associated logs, or identify noisy log patterns to drop, can write a few short prompts to their LLM and get the results back instantly.
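To make “noisy log patterns” concrete, here is a minimal, hypothetical Go sketch of the kind of grouping involved: mask variable tokens (here, just digit runs) so similar lines collapse into one pattern, then rank patterns by frequency. This is not Edge Delta’s pattern algorithm; the masking rule and the `topPatterns` helper are assumptions chosen for illustration.

```go
package main

import (
	"fmt"
	"regexp"
	"sort"
)

// digits matches runs of decimal digits, which often vary between otherwise identical lines.
var digits = regexp.MustCompile(`\d+`)

type patternCount struct {
	Pattern string
	Count   int
}

// topPatterns masks digit runs as <N>, groups identical results,
// and returns at most n patterns ordered by descending count.
func topPatterns(lines []string, n int) []patternCount {
	counts := map[string]int{}
	for _, l := range lines {
		counts[digits.ReplaceAllString(l, "<N>")]++
	}
	out := make([]patternCount, 0, len(counts))
	for p, c := range counts {
		out = append(out, patternCount{Pattern: p, Count: c})
	}
	// Sort by count descending, breaking ties alphabetically for a deterministic order.
	sort.Slice(out, func(i, j int) bool {
		if out[i].Count != out[j].Count {
			return out[i].Count > out[j].Count
		}
		return out[i].Pattern < out[j].Pattern
	})
	if n < len(out) {
		out = out[:n]
	}
	return out
}

func main() {
	logs := []string{
		"GET /health 200 in 3ms",
		"GET /health 200 in 4ms",
		"GET /health 200 in 2ms",
		"payment 8812 failed: timeout",
	}
	for _, pc := range topPatterns(logs, 2) {
		fmt.Printf("%d  %s\n", pc.Count, pc.Pattern)
	}
}
```

Here the three health-check lines collapse into `GET /health <N> in <N>ms` with a count of 3, the sort of high-volume, low-value pattern a user might ask the LLM to help suppress.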

Under the Hood

Edge Delta’s MCP Server is written in Go and has minimal authentication requirements:

- Language: Go

- MCP layer: github.com/mark3labs/mcp-go

- Auth: Your Org ID + API Token
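The only authentication inputs are the Org ID and API token, which the configuration passes in as `ED_ORG_ID` and `ED_API_TOKEN`. A minimal, hypothetical Go sketch of validating them at startup might look like this. How the server actually sends these values to the Edge Delta API is not described here, so the sketch stops at loading them; `loadCredentials` is an illustrative name, not part of the server.

```go
package main

import (
	"fmt"
	"os"
)

// credentials holds the two values the server needs to call the Edge Delta API.
type credentials struct {
	OrgID    string
	APIToken string
}

// loadCredentials reads ED_ORG_ID and ED_API_TOKEN via getenv and reports
// every missing variable at once. getenv is injectable to simplify testing.
func loadCredentials(getenv func(string) string) (credentials, error) {
	c := credentials{OrgID: getenv("ED_ORG_ID"), APIToken: getenv("ED_API_TOKEN")}
	var missing []string
	if c.OrgID == "" {
		missing = append(missing, "ED_ORG_ID")
	}
	if c.APIToken == "" {
		missing = append(missing, "ED_API_TOKEN")
	}
	if len(missing) > 0 {
		return credentials{}, fmt.Errorf("missing required environment variables: %v", missing)
	}
	return c, nil
}

func main() {
	c, err := loadCredentials(os.Getenv)
	if err != nil {
		fmt.Println("not configured:", err)
		return
	}
	fmt.Println("loaded credentials for org", c.OrgID)
}
```

Failing fast with the names of all missing variables saves a round trip when the IDE configuration is incomplete.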

Get Started With a Single Setting in Your IDE

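To get started with our MCP Server, add the following configuration to your IDE’s MCP server settings (the exact file or settings panel varies by IDE). Because the server runs as a container, Docker must be installed and running: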

```json
{
  "mcpServers": {
    "edgedelta": {
      "command": "docker",
      "args": [
        "run",
        "-i",
        "--rm",
        "-e",
        "ED_ORG_ID",
        "-e",
        "ED_API_TOKEN",
        "ghcr.io/edgedelta/edgedelta-mcp-server:latest"
      ],
      "env": {
        "ED_API_TOKEN": "<YOUR_TOKEN>",
        "ED_ORG_ID": "<YOUR_ORG_ID>"
      }
    }
  }
}
```

I hear the concerns: context‑window limits, latency, cost. But we said the same about Dancing Links on a 200 MHz laptop, and look where we are now. Every breakthrough starts with a prototype.

Stay curious,

Fatih Yildiz

CTO, Edge Delta