Logs are vital records of events, transactions, and operations within software, hardware, and networks. Security, developer, and IT operations teams use logs for various functions, including the following:

- Debugging code

- Monitoring performance

- Ensuring system security

- Maintaining compliance with regulatory standards

Managing logs, however, presents several challenges, primarily due to the volume, velocity, and variety of log data.

Organizations often turn to log management tools and systems to address these challenges. These tools aggregate, store, analyze, and visualize logs to simplify log management and data analysis and provide actionable insights. However, to extract the most value from your logs, it’s beneficial to parse and structure your data.

Learn more about log parsing in this guide, which covers best logging practices, top tools to consider, and more.

Key Takeaways

- Log files are vital records of events, transactions, and operations that engineering teams use to debug code and ensure system security

- Log parsing organizes log entries into fields and relational data sets

- In addition to being machine-parsable, log files should be human-readable

- Logs are frequently the target of data intrusion; as such, it is a good practice not to log sensitive information

- Technology like machine learning, AI, and big data analytics is shaping the future of log parsing.

Understanding What Log Parsing Is and How It Works

Logs are usually plain text, either structured or unstructured, and typically contain fields such as the following:

- Timestamps

- IP addresses

- Error codes

- Usernames

Log management solutions must parse the files to extract meaningful information from logs. Log parsing identifies and categorizes log entries into relevant fields and relational data sets in a structured format, allowing information to be easily searched for and analyzed.

The following are some common log formats:

- JSON

- CSV

- Windows Event Log

- Common Event Format (CEF)

- NCSA Common Log Format

- Extended Log Format (ELF)

- W3C

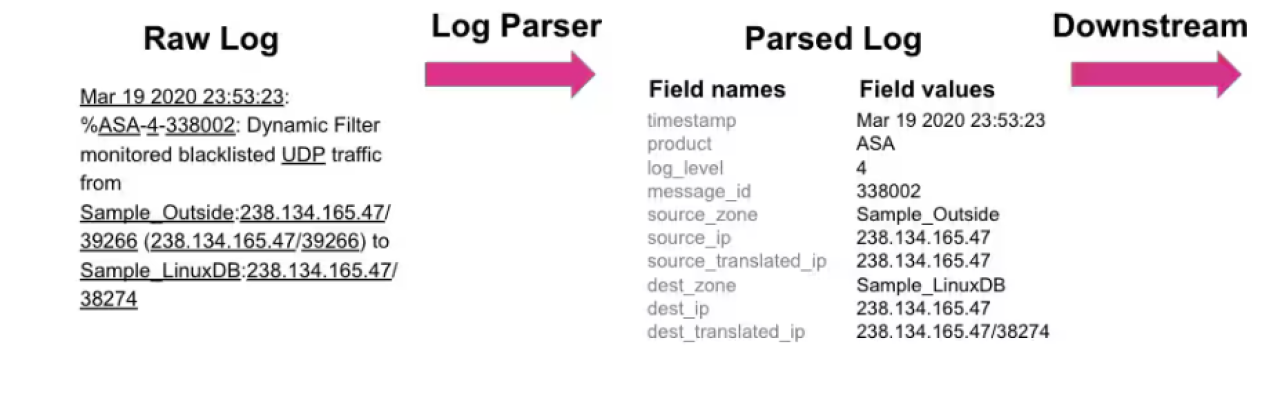

Here’s an example of how log parsing converts log files into a common format that computers can understand:

Source: Splunk

Parsing log files can be helpful to streamline any of the following initiatives:

- Monitoring and troubleshooting

- Security

- Compliance

- Business Analytics

Here’s an overview of how a typical log parser works:

1. Collect Log Files

First, log files from servers, applications, and network devices are collected. At this point, agents or log forwarders reliably and consistently gather logs.

2. Ingest Log Files

After collection, log files are ingested into a log management system. The ingesting process can be done in real-time or in batches.

3. Pattern Recognition

Log parsing tools use regular expressions or predefined patterns to identify and extract relevant data from log entries.

4. Tokenizing

Tokenizing log entries into structured data makes them easier to analyze. Analysts can easily search and filter logs based on specific criteria.

5. Normalizing

The log parsing process normalizes data to keep a consistent format. This process may involve converting timestamps to a standard date-time format or resolving abbreviations.

6. Storage

Following normalization, the extracted and parsed log data is saved in a central repository or database for future analysis.

7. Query and Analysis

Once the log data has been parsed and stored, it can be queried and analyzed to extract insights.

Together, these steps are how a log parser converts raw, often unstructured log data into a structured format that is easier to analyze and understand.
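
To make the pattern recognition, tokenizing, and normalizing steps concrete, here is a minimal Python sketch that parses one line in the NCSA Common Log Format. The pattern and the `parse_clf_line` helper are illustrative assumptions, not the implementation of any particular tool, and the sample line is made up for the example.

```python
import re
from datetime import datetime, timezone
from typing import Optional

# Pattern recognition: one NCSA Common Log Format line looks like
# host ident authuser [timestamp] "request" status bytes
CLF_PATTERN = re.compile(
    r'(?P<host>\S+) (?P<ident>\S+) (?P<user>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-)'
)


def parse_clf_line(line: str) -> Optional[dict]:
    """Return a dict of normalized fields, or None if the line does not match."""
    match = CLF_PATTERN.match(line)
    if match is None:
        return None
    # Tokenizing: named groups become structured fields.
    fields = match.groupdict()
    # Normalizing: convert the timestamp to ISO 8601 in UTC.
    parsed = datetime.strptime(fields["timestamp"], "%d/%b/%Y:%H:%M:%S %z")
    fields["timestamp"] = parsed.astimezone(timezone.utc).isoformat()
    # Normalizing: numeric fields become integers; "-" means no body was sent.
    fields["status"] = int(fields["status"])
    fields["size"] = 0 if fields["size"] == "-" else int(fields["size"])
    return fields


sample = '127.0.0.1 - frank [10/Oct/2000:13:55:36 -0700] "GET /apache_pb.gif HTTP/1.0" 200 2326'
record = parse_clf_line(sample)
```

After parsing, `record` is a plain dictionary that can be stored and queried by field, such as filtering on `record["status"]`, instead of searching raw text.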

Keep reading to learn the best tips and practices for smooth log parsing.

Best Practices for Seamless Log Parsing

Log parsing and analysis are crucial for monitoring performance, troubleshooting issues, and improving security as systems become more complex and generate more data. Organizations can turn log data into actionable intelligence for proactive decision-making and operational efficiency with the right methods and tools.

Here are some best practices for seamless log parsing that can help organizations maximize the utility of their log data:

1. Establish Clear Logging Objectives

Identify your business or operational goals and application core functions to set logging objectives. Determine the key performance indicators (KPIs) necessary to measure progress towards these goals. This clarity allows you to make informed decisions on which events to log and which to monitor via other methods like metrics and traces.

2. Use the Right Matchers

When writing parsing rules, simplicity is key. Often, there’s no need for complex regular expressions to match specific patterns when simple matchers are sufficient.

Consider using the following straightforward matchers:

- notSpace: matches any sequence of characters up to the next space.

- data: matches any sequence of characters (similar to .* in regex).

- word: matches consecutive alphanumeric characters.

- integer: matches and parses decimal integers.

These four matchers can efficiently handle most parsing rules, reducing the need for overly complicated expressions.
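
To see why simple matchers go a long way, the sketch below approximates the four matchers with ordinary regular expressions in Python. The `%{matcher:field}` rule syntax, the `MATCHERS` table, and the sample log line are assumptions made for illustration. Real tools define their own grammar and exact matcher semantics.

```python
import re

# Rough regex equivalents for the four matchers (assumed semantics).
MATCHERS = {
    "notSpace": r"\S+",
    "data": r".*",
    "word": r"[A-Za-z0-9]+",
    "integer": r"[+-]?\d+",
}

# A rule token looks like %{matcher:field_name} (illustrative syntax).
TOKEN = re.compile(r"%\{(\w+):(\w+)\}")


def compile_rule(rule: str):
    """Translate a rule into a compiled regex plus the names of integer fields."""
    regex, int_fields, pos = "", set(), 0
    for token in TOKEN.finditer(rule):
        matcher, name = token.groups()
        regex += re.escape(rule[pos:token.start()])  # literal text between tokens
        regex += f"(?P<{name}>{MATCHERS[matcher]})"
        if matcher == "integer":
            int_fields.add(name)
        pos = token.end()
    regex += re.escape(rule[pos:])
    return re.compile(regex), int_fields


def parse_line(rule: str, line: str):
    """Apply a rule to a line; return the extracted fields or None."""
    pattern, int_fields = compile_rule(rule)
    match = pattern.fullmatch(line)
    if match is None:
        return None
    return {k: int(v) if k in int_fields else v for k, v in match.groupdict().items()}


RULE = "%{notSpace:client} %{word:method} %{notSpace:path} took %{integer:duration_ms}ms: %{data:message}"
parsed = parse_line(RULE, "10.0.0.7 GET /api/users took 125ms: cache miss, fetched from db")
```

The whole rule reads left to right like the log line itself, which is usually easier to maintain than a hand-written regular expression.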

3. Make the Logs Human-Readable

Log files should certainly be machine-parsable. However, they should also be readable by humans.

People will read log entries, likely stressed-out developers troubleshooting a faulty application. Do not complicate their lives by writing difficult-to-read log entries.

Here are some ways to achieve human-readable logs:

- Use a standard date and time format (ISO 8601).

- Add timestamps in UTC or local time, plus offset.

- Use log levels correctly.

- Split logs of different levels into different targets to control their granularity.

- Include the stack trace when logging exceptions.

- For multi-threaded applications, log the thread name.
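
Several of these points can be covered by the log format itself. The following is a minimal sketch using Python's standard `logging` module; the exact layout, the logger name, and the sample message are assumptions chosen for illustration.

```python
import logging
import time


class UtcFormatter(logging.Formatter):
    # Render timestamps in UTC instead of local time.
    converter = time.gmtime


formatter = UtcFormatter(
    # ISO 8601 timestamp, level, thread name, logger name, message.
    fmt="%(asctime)s.%(msecs)03dZ %(levelname)s [%(threadName)s] %(name)s: %(message)s",
    datefmt="%Y-%m-%dT%H:%M:%S",
)

# A record with a fixed timestamp and thread name so the output is reproducible.
record = logging.LogRecord(
    "payments", logging.WARNING, "example.py", 0,
    "retrying charge for order %s", ("A-42",), None,
)
record.created = 0.0
record.msecs = 0.0
record.threadName = "worker-1"

line = formatter.format(record)
# 1970-01-01T00:00:00.000Z WARNING [worker-1] payments: retrying charge for order A-42
```

In an application, you would attach the formatter to a handler with `handler.setFormatter(formatter)`. Calling `logger.exception(...)` inside an `except` block appends the stack trace to the entry.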

4. Log at the Proper Level

Log levels are the most fundamental signal for indicating the severity of the event being recorded. They let you distinguish routine events from those that require further scrutiny.

Here’s a summary of common levels and how they’re typically used:

- INFO: Significant and noteworthy business events.

- WARN: Abnormal situations that may indicate future problems.

- ERROR: Unrecoverable errors that affect a specific operation.

- FATAL: Unrecoverable errors that affect the entire program.

- DEBUG: Denotes specific and detailed data that is primarily used for debugging.

- TRACE: Low-level information on a specific event or context, such as code stack traces. These logs show variable values and error stacks.

Most production environments default to INFO to prevent noisy logs, but INFO-level logs often lack enough detail to troubleshoot some problems.

Some logging frameworks also allow users to alter log levels for specific components or modules within an application rather than globally. This approach provides more granular control and reduces unnecessary log output, even when logging at the DEBUG or TRACE levels.
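
Python's standard `logging` module is one framework that supports this. In the sketch below, the logger names `shop.payments` and `shop.catalog` are hypothetical components used only for illustration.

```python
import logging

# Global default: INFO and above.
logging.getLogger().setLevel(logging.INFO)

# Hypothetical component loggers.
payments_log = logging.getLogger("shop.payments")
catalog_log = logging.getLogger("shop.catalog")

# Raise verbosity for one component without touching the rest.
payments_log.setLevel(logging.DEBUG)

payments_debug_enabled = payments_log.isEnabledFor(logging.DEBUG)  # True
catalog_debug_enabled = catalog_log.isEnabledFor(logging.DEBUG)    # False
```

Only the payments component emits DEBUG entries, so you get detail where you are troubleshooting while the rest of the application stays at INFO.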

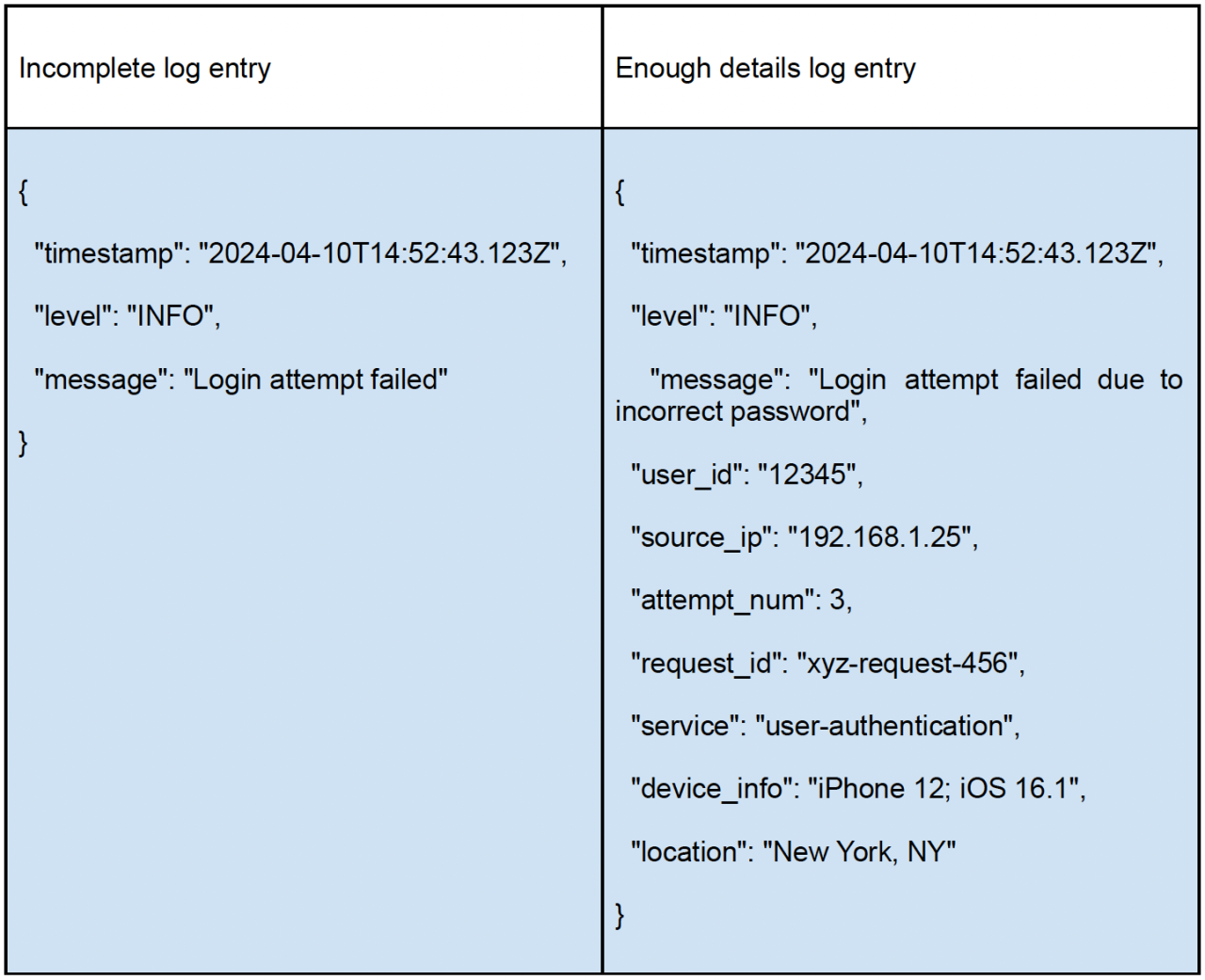

5. Write Meaningful Log Messages

The value of logs is directly tied to the quality of the information they contain. Log entries filled with unnecessary information will be ignored, undermining the entire purpose of logging.

When writing log entry messages, remember that there may be emergency situations in which all you have is the log file to figure out what happened. Write clear and informative messages that precisely document the event being captured.

Including multiple contextual fields within each log entry helps you understand the context in which the record was captured. It lets you link related entries to see the bigger picture. It also lets you quickly identify issues when a customer encounters problems.

Here are some essential details to include:

- Requests or correlation IDs

- User IDs

- Database table names and metadata

- Stack traces for errors

Here is an example of a log entry without sufficient context and one with enough detail:
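
The contrast can be sketched in Python as follows. The `build_entry` helper and every field name and value are hypothetical, chosen only to show the difference between a bare message and one that carries context.

```python
import json


def build_entry(message: str, **context) -> str:
    """Serialize a message and its contextual fields as one JSON log line."""
    return json.dumps({"message": message, **context}, sort_keys=True)


# Without context: hard to act on.
vague = build_entry("Payment failed")

# With context: the entry can be linked to a request, a user, and a table.
detailed = build_entry(
    "Payment failed: card declined by gateway",
    request_id="req-7f3a",
    user_id=1042,
    table="payments",
    error_code="CARD_DECLINED",
)
```

The second entry lets you search for every record that shares the same `request_id` and tells you which user and table were involved.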

6. Write Log Messages in English

Writing log messages in English using ASCII characters is recommended because it ensures readability and compatibility across different systems. ASCII minimizes the risk of corrupted or unreadable logs during transmission or storage.

English is widely understood in the tech community, making logs accessible to a global audience. Log user input accurately, but keep system messages in English to avoid compatibility issues.

Prioritize localizing user interfaces over logs, as logs are primarily for developers who likely understand English. If you do localize logs, use standardized error codes (e.g., APP-S-SUB-CODE) so that users can search for language-independent support and understand issues:

- APP: Three-letter application abbreviation

- S: Severity level (e.g., D for debug, I for info)

- SUB: Part of the application related to the message

- CODE: Numeric code specific to the error
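
As a rough sketch, a code made of the four parts listed above could be built and parsed like this in Python. The `ErrorCode` type, the hyphen separator, the four-digit zero padding, and the sample value `PAY-E-DB-0042` are assumptions for illustration.

```python
from typing import NamedTuple


class ErrorCode(NamedTuple):
    app: str       # three-letter application abbreviation
    severity: str  # one-letter severity, e.g. D for debug, I for info
    sub: str       # part of the application related to the message
    code: int      # numeric code specific to the error

    def __str__(self) -> str:
        return f"{self.app}-{self.severity}-{self.sub}-{self.code:04d}"


def parse_error_code(text: str) -> ErrorCode:
    """Split a formatted code back into its four parts."""
    app, severity, sub, code = text.split("-")
    return ErrorCode(app, severity, sub, int(code))


formatted = str(ErrorCode("PAY", "E", "DB", 42))
round_trip = parse_error_code(formatted)
```

Because the code is the same in every language, a user can paste it into a search engine or support ticket regardless of how the surrounding message was localized.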

7. Avoid Vendor Lock-In

Organize your logging strategy so that you can easily swap out one logging library or framework for another if necessary.

A wrapper can help ensure that your application code does not explicitly mention the third-party tool. Instead, create a logger interface with the necessary methods and a class that implements it. Then, add the code that calls the third-party tool to this class. That way, you isolate your application from the third-party tool. If you ever need to replace it with another one, only a single place in the whole application has to change.
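
Here is a minimal Python sketch of that wrapper idea. The names `AppLogger`, `StdlibLogger`, `InMemoryLogger`, and `place_order` are hypothetical, and the standard `logging` module stands in for the third-party tool.

```python
import logging
from typing import Protocol


class AppLogger(Protocol):
    """The only logging interface application code depends on."""

    def info(self, message: str) -> None: ...
    def error(self, message: str) -> None: ...


class StdlibLogger:
    """Adapter: the single place that touches the concrete logging library."""

    def __init__(self, name: str) -> None:
        self._logger = logging.getLogger(name)

    def info(self, message: str) -> None:
        self._logger.info(message)

    def error(self, message: str) -> None:
        self._logger.error(message)


class InMemoryLogger:
    """A second implementation, useful in tests or as a swap-in example."""

    def __init__(self) -> None:
        self.entries = []

    def info(self, message: str) -> None:
        self.entries.append(("INFO", message))

    def error(self, message: str) -> None:
        self.entries.append(("ERROR", message))


def place_order(order_id: str, log: AppLogger) -> None:
    # Application code sees only the interface, never the library.
    log.info(f"order placed id={order_id}")


memory_log = InMemoryLogger()
place_order("A-1", memory_log)
place_order("A-2", StdlibLogger("shop"))
```

Swapping the logging backend means writing one new class that satisfies `AppLogger`; `place_order` and the rest of the application stay unchanged.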

8. Don’t Log Sensitive Information

Mishandling sensitive information can have severe consequences for compliance and security. Logs are frequently the target of data intrusions, which result in unintentional data disclosure. Keeping sensitive information out of logs can significantly reduce the scope of an attack.

Here is some sensitive information you should not be logging:

- Passwords

- Credit card numbers

- Social security numbers

- Personally identifiable information (PII)

- Authorization tokens
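
The safest approach is not to pass such values to the logger at all. As a safety net, some teams also redact known patterns before entries are written. The following Python sketch shows that idea with a `logging.Filter`. The patterns are deliberately simple illustrations and would not catch every format in real data.

```python
import logging
import re

# Illustrative patterns only; real deployments need patterns tuned to their data.
REDACTIONS = [
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[REDACTED-SSN]"),
    (re.compile(r"\b\d{4}(?:[ -]?\d{4}){3}\b"), "[REDACTED-CARD]"),
    (re.compile(r"(?i)(password|token)=\S+"), r"\1=[REDACTED]"),
]


def redact(text: str) -> str:
    """Replace anything that matches a known sensitive pattern."""
    for pattern, replacement in REDACTIONS:
        text = pattern.sub(replacement, text)
    return text


class RedactingFilter(logging.Filter):
    """Rewrite each record's message before any handler sees it."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.msg = redact(record.getMessage())
        record.args = None  # arguments are already merged into the message
        return True


clean = redact("login failed password=hunter2 ssn=123-45-6789 card=4111 1111 1111 1111")
```

Attach the filter with `logger.addFilter(RedactingFilter())` or add it to a handler so that it applies to everything the handler writes.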

9. Keep Logs Atomic

When logging information that only makes sense with other data, be sure to log everything in a single message. This creates what’s known as atomic logs, which contain all the necessary information in one line and are more effective for use with log management tools.

Remember, logs may not always appear in the order you expect. Many log management tools can reorder entries or even drop some of them. Timestamps are not a fully reliable guide to ordering either, as system clocks can be reset or become unsynchronized across different machines.

Additionally, avoid including newlines within log messages. Many log management systems interpret each new line as a separate message. For error messages that include stack traces (which naturally contain newlines), ensure they are logged in one block to avoid confusion.
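
One way to keep an entry and its stack trace together is to serialize the whole record as a single JSON line, since JSON encoding escapes embedded newlines. The Python sketch below assumes this approach; the `SingleLineJsonFormatter` class and its field names are illustrative.

```python
import json
import logging
import sys


class SingleLineJsonFormatter(logging.Formatter):
    """Emit each record, including any stack trace, as one JSON line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        if record.exc_info:
            # json.dumps escapes the newlines inside the traceback text.
            entry["stack_trace"] = self.formatException(record.exc_info)
        return json.dumps(entry)


# Build a record that carries a real exception.
try:
    1 / 0
except ZeroDivisionError:
    record = logging.LogRecord(
        "calc", logging.ERROR, "example.py", 0,
        "division failed for order %s", ("A-42",), sys.exc_info(),
    )

line = SingleLineJsonFormatter().format(record)
```

The multi-line traceback now travels inside one log line, so a line-oriented collector treats it as a single message.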

10. Implement Log Sampling

Log sampling is an essential cost-control strategy in systems that generate vast data volumes, such as hundreds of gigabytes or terabytes daily. It involves selecting a representative fraction of logs for analysis and discarding the rest without losing valuable insights.

This method significantly reduces storage and processing needs, making logging more economical. Typically, a basic log sampling method captures a fixed percentage of logs at regular intervals. For example, at a 20% sampling rate, only two out of ten identical events within a second are retained.

Advanced techniques offer finer control by adjusting rates based on log content, event severity, or exempting certain log types from sampling. This is particularly useful in high-traffic situations where reducing log volume can prevent high costs without compromising troubleshooting capabilities.

Ideally, integrate log sampling during log aggregation and centralization or implement it within the application if the logging framework supports it. Early adoption of log sampling is crucial to managing costs effectively.
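
If you sample inside the application, a logging filter is one place to do it. The sketch below keeps two out of every ten identical records and never samples away warnings or errors. It counts occurrences instead of using a one-second time window, which is a simplification made so the behavior is deterministic. The `SamplingFilter` class and its defaults are assumptions for illustration.

```python
import logging
from collections import Counter


class SamplingFilter(logging.Filter):
    """Keep `keep` out of every `every` identical records below WARNING."""

    def __init__(self, keep: int = 2, every: int = 10) -> None:
        super().__init__()
        self.keep = keep
        self.every = every
        self._seen = Counter()

    def filter(self, record: logging.LogRecord) -> bool:
        if record.levelno >= logging.WARNING:
            return True  # exempt warnings and errors from sampling
        # The logger name plus message template identifies "identical" events.
        key = (record.name, record.msg)
        position = self._seen[key] % self.every
        self._seen[key] += 1
        return position < self.keep


def make_record(level: int, message: str) -> logging.LogRecord:
    return logging.LogRecord("api", level, "example.py", 0, message, (), None)


sampler = SamplingFilter()
kept_info = sum(sampler.filter(make_record(logging.INFO, "cache hit")) for _ in range(10))
kept_errors = sum(sampler.filter(make_record(logging.ERROR, "db down")) for _ in range(10))
```

Here `kept_info` is 2 (a 20% rate) while all ten error records are retained, which mirrors the severity-based exemption described above.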

11. Configure a Retention Policy

When aggregating and centralizing your logs, a crucial cost-control measure is configuring a retention policy. Log management platforms frequently base their pricing structures on the amount of log data ingested and its retention period.

When dealing with hundreds of gigabytes or terabytes of data, costs can quickly spiral without expiring or archiving logs. To avoid high costs, create a retention policy that meets organizational and regulatory needs.

This policy should specify how long logs must be kept active for immediate analysis and when they can be compressed. Depending on your organization’s needs, your data may be moved to long-term, cost-effective storage solutions or completely purged.

You can also apply different policies to different categories of logs. The most crucial thing is to consider log value over time and ensure that your policy balances accessibility, compliance, and cost.
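
A retention policy like this can be expressed as a small lookup table. In the Python sketch below, the categories, durations, and the `retention_action` helper are hypothetical; real values depend on your organizational and regulatory requirements.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass(frozen=True)
class RetentionPolicy:
    hot: timedelta      # kept active for immediate analysis
    archive: timedelta  # then kept compressed in cheaper storage


# Hypothetical categories and durations, for illustration only.
POLICIES = {
    "debug": RetentionPolicy(hot=timedelta(days=3), archive=timedelta(days=0)),
    "application": RetentionPolicy(hot=timedelta(days=14), archive=timedelta(days=90)),
    "audit": RetentionPolicy(hot=timedelta(days=30), archive=timedelta(days=365)),
}


def retention_action(category: str, age: timedelta) -> str:
    """Decide what to do with a log of the given category and age."""
    policy = POLICIES[category]
    if age <= policy.hot:
        return "keep"
    if age <= policy.hot + policy.archive:
        return "archive"
    return "purge"


week_old_app_log = retention_action("application", timedelta(days=7))
month_old_app_log = retention_action("application", timedelta(days=30))
old_debug_log = retention_action("debug", timedelta(days=4))
```

A scheduled job could call `retention_action` for each stored log batch and then compress, move, or delete it accordingly.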

12. Protect Logs with Access Control

Certain logs, such as database logs, often contain sensitive data. Therefore, you must protect and secure the collected data so that only those who need it (for debugging, for example) can access it. Encrypt logs at rest and in transit using strong algorithms and keys so that they remain unreadable to unauthorized parties, even if intercepted.

Select a log management provider that offers access control and audit logging. This ensures that sensitive log data is accessible only to authorized individuals, and all log interactions are recorded for security and compliance. Furthermore, confirm the provider’s procedures for managing, storing, accessing, and disposing of your log data once it is no longer necessary.

13. Don’t Limit Logging to Troubleshooting

Log messages serve multiple purposes beyond troubleshooting. Efficient uses can include the following:

- Auditing: Capture key management or legal oversight events, such as user logins and activity.

- Profiling: Utilize timestamped logs to profile sections of a program. Logging the start and end of an operation allows you to automatically infer some performance without incorporating additional metrics into the program.

- Statistics: Record events to analyze program usage or behavior trends, potentially integrating alerts for error sequences.
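
For the profiling use case, a small context manager can log the start and end of an operation. This is a minimal Python sketch; the `timed` helper, the message layout, and the operation name are assumptions for illustration.

```python
import logging
import time
from contextlib import contextmanager


@contextmanager
def timed(logger: logging.Logger, operation: str):
    """Log the start and end of an operation along with its duration."""
    start = time.perf_counter()
    logger.info("start operation=%s", operation)
    try:
        yield
    finally:
        elapsed_ms = (time.perf_counter() - start) * 1000
        logger.info("end operation=%s elapsed_ms=%.1f", operation, elapsed_ms)


profile_log = logging.getLogger("profiling-example")

with timed(profile_log, "rebuild_index"):
    total = sum(range(1000))  # stand-in for the real work
```

The two entries bracket the operation, so durations can be derived from the logs alone, even for code that has no dedicated metrics.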

14. Add Context to Your Log

Messages are just noise without context; they add no value and take up space that could have been used for troubleshooting.

Consider the following two log statements:

| Incomplete | Complete Context |
|---|---|
| “The database is unavailable.” | “Failed to Get users’ preferences for user id=1. Configuration Database not responding. Please retry again in 3 minutes.” |

Messages are much more valuable with added context, like the second logline. You can tell what the program was trying to do, which component failed, and if there is a solution. The logline should contain enough information to allow you to understand what happened and the program’s current status.

15. Keep Log Messages Simple and Concise

Include enough information in a log message, but do not overdo it. Too much data slows log searches, increases storage, and complicates debugging. Keep log messages brief and only collect essential data.

When formatting your logs, collect the information needed to debug an error without including every detail about the environment. For example, if an API fails, logging any error messages from the API may be helpful. But, consider omitting details about the application memory.

Take great care so your log messages don’t contain sensitive information. Protecting your customers’ data and avoiding legal issues are two very big reasons to watch for PII leaks into logs.

Top 6 Log Parsing Tools Every Developer Should Know About

A wide range of tools can automate the log parsing process. Log parsing tools can help debug, monitor, and analyze system performance. Here are six of the best log parsing tools to consider:

1. Edge Delta

Edge Delta processes log data in real-time as it’s created. Its distributed architecture allows for significant flexibility in how data is routed and stored, ensuring that performance isn’t sacrificed for scale.

The system enables data shaping, enrichment, formatting, and analysis directly at the source, bypassing centralized processing bottlenecks.

Key Features

- Processes logs in real time for immediate action

- Offers hybrid log search

- Focused data extraction and aggregation capabilities

- User-friendly interface

- Automatically identifies and extracts patterns from log data

- Uses advanced analytics to identify unusual patterns or outliers in log data

Edge Delta is ideal for organizations that collect large amounts of data from multiple sources and need real-time monitoring and troubleshooting. Its approach to log parsing is transformative for organizations looking to scale their data analytics capabilities without incurring prohibitive costs or complexity.

2. Datadog

Datadog provides a cost-effective log management platform for ingesting and parsing your logs. Log parsing with Datadog lets you define parsers to extract all relevant information from your logs.

Key Features

- Auto-tagging and metric correlation

- Create real-time log analytics dashboards in seconds

- Rehydrate logs into indexes in a few clicks

- Support audits or investigations by quickly accessing archived logs

- Apply a modern data compliance strategy with the Sensitive Data Scanner to prevent security breaches

Datadog is highly recommended for organizations seeking a unified log data parsing and analysis solution. It can provide accurate insights into log data viewed from a centralized control panel.

3. Loggly

Loggly automatically parses your logs as soon as it receives them. It extracts useful information and presents it in a structured manner in its Dynamic Field Explorer. This viewer gives an intuitive approach to log analysis, allowing you to browse through the information without typing a single command.

Key Features

- Designed to handle unpredictable log volume spikes, protecting critical logs

- Needs little configuration and no proprietary agents to collect logs from various sources

- Supports a simple query language for trimming down the size of your logs

- Uses charts and other analytics to identify trends over time

Loggly is highly recommended for businesses looking for a reliable log parsing and analysis solution focused on application performance management (APM). It has an exceptional ability to seamlessly integrate with a wide range of sources, as well as the capacity to aggregate data for comprehensive performance analysis.

4. Logz.io

Logz.io is built on the foundations of several open-source monitoring tools that have been combined and integrated into a single, centralized solution. It has excellent search and filtering tools with pre-built dashboards for monitoring.

Key Features

- Automatically parses common log types like Kubernetes

- Offers many pre-built parsing pipelines for a large number of log sources

- Provides anomaly alerting features

- Automatically detects errors, facilitating prompt resolution and mitigation of issues.

Logz.io is recommended for those who want a fast, minimal-effort way to parse their logs. It is a fully managed pipeline and analysis platform, which makes it more than an ordinary log parsing tool.

5. Splunk

Splunk tracks structured, unstructured, and sophisticated application logs using a multi-line approach to collect, store, index, correlate, visualize, analyze, and report machine-generated data.

Using the tool, you can search through real-time and historical log data. It also allows you to set up real-time alerts, with automatic trigger notifications sent through email or RSS. You can also create custom reports and dashboards to better view your data and detect and solve security issues faster.

Key Features

- Designed to manage sudden increases in log volume

- Operates effectively without extensive setup

- Supports an easy-to-use query language for efficiently reducing log size

- Offers the capability to monitor data and events in real-time

- Easily scales from small operations to enterprise-level deployments

- Powerful search and filtering

Splunk is a good choice for those seeking a comprehensive logging tool with a user-friendly interface.

6. Dynatrace

Dynatrace leverages artificial intelligence to correlate log messages and problems your monitors register. Its log management and analytics can reshape incoming log data for better comprehension, analysis, or further processing based on your defined rules.

Key Features

- Unify logs, traces, and metrics of events in real-time

- Monitor Kubernetes easily

- Collect and parse log data in real time without indexing

- Filter, monitor, and transform log fields to meet policy and compliance requirements

- Keep logs for audits for days to years

Dynatrace is recommended for those who need to log information in multiple formats. It allows you to create custom log metrics for smarter and faster troubleshooting and for understanding logs in context.

Final Words

Log parsing plays a vital role in system management by extracting information from raw data. It helps organize your data in a way that makes monitoring, troubleshooting, and security easier.

To increase efficiency, use best practices such as setting clear logging objectives, logging at the appropriate level, and automating parsing strategies. Moreover, top log parsing tools like Edge Delta can offer powerful features for diverse environments and are continually evolving.

Looking ahead, log parsing will most likely utilize AI and machine learning to anticipate problems before they worsen. These advances will further integrate with real-time data analytics to improve system reliability and security.

Log Parsing FAQs

What are the levels of logging?

Most logging frameworks feature various log levels. These levels usually include TRACE, DEBUG, INFO, WARN, ERROR, and FATAL. Each name hints at its purpose. Categorizing logs by type and severity helps users find critical issues quickly.

Why is it important to maintain a good server log?

A good server log is critical to maintaining a website’s security, reliability, and performance. By tracking security threats, website issues, user behavior, and system performance, good server logs enable organizations to improve operations and make informed decisions.

What is a log management policy?

A log management policy governs log data collection, organization, analysis, and disposal. It helps you secure and efficiently manage logs, supports legal and regulatory compliance, and supports monitoring, troubleshooting, and improving system performance and security.