Redis is an open-source, in-memory data store used for high-performance applications that require low-latency data access. It serves as a database, cache, and message broker that stores all data directly in memory, enabling extremely fast read and write operations.

Redis is widely deployed in scenarios that demand rapid access to data, including web applications, gaming backends, and queueing systems, among many others. Unlike most other database solutions, Redis’ combination of speed and durability gives it the ability to support real-time analytics, session management, and high-throughput caching, allowing companies to scale rapidly while maintaining high performance. As a result, organizations that rely on Redis must continuously monitor the health of their Redis infrastructure.

Another benefit of Redis is that it automatically generates a wide variety of logs, which capture critical information about memory usage, database operation errors, slow queries, client connections, and much more. However, analyzing Redis logs is challenging, because users can’t instrument the Redis source code to change the log format. Instead, they must process these logs after they’ve been created and collected, and before performing any log-based analysis.

Want Faster, Safer, More Actionable Root Cause Analysis?

With Edge Delta’s out-of-the-box AI agent for SRE, teams get clearer context on the alerts that matter and more confidence in the overall health and performance of their apps.

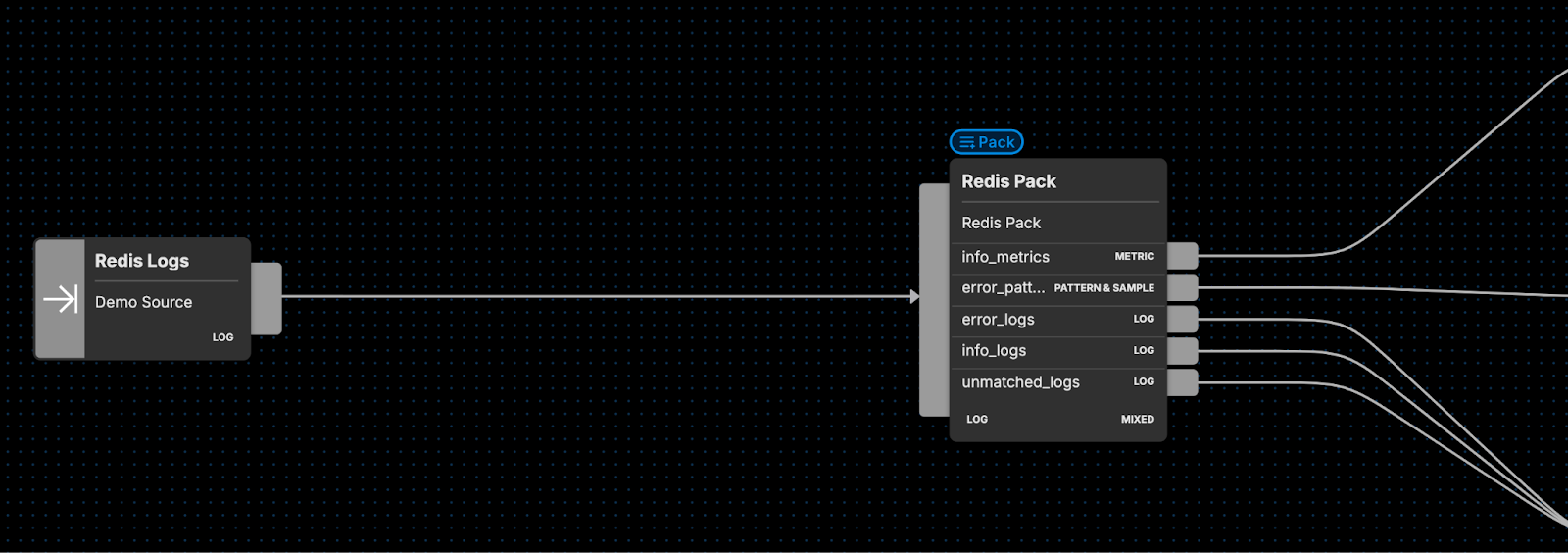

Edge Delta’s Redis Pipeline Pack is a specialized collection of processors built specifically to handle Redis-generated logs, enabling you to transform your log data to fuel analysis. Our packs are built to easily slot into your Edge Delta Telemetry Pipelines: all you need to do is route the source data into the Redis pack and let it begin processing.

If you are unfamiliar with Edge Delta’s Telemetry Pipelines, they are an intelligent pipeline product built to handle all log, metric, trace, and event data. They are also an on-the-edge pipeline solution that begins processing data as it’s created at the source, providing you with greater control over your telemetry data at far lower cost.

How Does the Redis Pack Work?

The Edge Delta Redis Pack streamlines log transformation by automatically processing Redis logs as they’re ingested. Once the processing is finished, these logs can be easily filtered, aggregated, and analyzed within the observability platforms of your choosing.

The pack consists of a few different processing steps, each of which plays a vital role in allowing teams to use Redis log data to ensure database sessions and operations are running smoothly.

Here’s a quick breakdown of the pack’s internals:

Log Parsing

The Redis pack begins with a Grok node, which leverages a Grok pattern to convert the unstructured log message into a structured log item, by:

- Parsing the log message to locate and extract the associated process ID (pid), process role, timestamp, and log level

- Adding a new field in the `attributes` section of the log item for each one, and assigning it the extracted value

Converting to structured data allows for operating directly on the extracted values, which greatly simplifies the log search and analysis processes.

    - name: grok
      type: grok
      pattern:
        '%{INT:pid}:%{WORD:role} %{FULL_REDIS_TIMESTAMP:timestamp} (?:%{DATA:log_level}
        )?%{GREEDYDATA:message}'
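
To make the extraction concrete, here is a minimal Python sketch that approximates the Grok pattern above with a plain regular expression. The timestamp sub-pattern, the `parse_redis_line` helper, the dictionary layout of the log item, and the sample log line are all assumptions made for illustration; the pack itself relies on the Grok node, not on this code.

```python
import re

# Approximation of the Grok pattern as a plain regular expression.
# The timestamp sub-pattern is an assumption based on the layout
# "02 Jan 2006 15:04:05.000" that the pack uses in its next step.
REDIS_LINE = re.compile(
    r"^(?P<pid>\d+):(?P<role>\w+) "
    r"(?P<timestamp>\d{2} \w{3} \d{4} \d{2}:\d{2}:\d{2}\.\d{3}) "
    r"(?:(?P<log_level>.*?) )?"
    r"(?P<message>.*)$"
)


def parse_redis_line(line):
    """Return a structured log item, or None if the line does not match."""
    match = REDIS_LINE.match(line)
    if match is None:
        return None
    # Keep the raw line as the body and store extracted values as attributes.
    return {"body": line, "attributes": match.groupdict()}


# Hypothetical sample line in the usual Redis log layout.
sample = "1234:M 02 Jan 2024 15:04:05.123 * Ready to accept connections"
item = parse_redis_line(sample)
print(item["attributes"])
```

Lines that do not match return `None` here, which loosely mirrors the idea of sending unparsed logs down a separate path.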

Timestamp Transformation

After the structured format conversion, the Redis pack normalizes the timestamp formats across all Redis logs by:

- Converting the `timestamp` field in each log item to Unix milliseconds format via the `convert_timestamp` CEL macro

This normalization process enables teams to get an accurate timeline of log events, which is critical for correlating, analyzing, and diagnosing performance issues and other anomalies over time.

    - name: log_transform
      type: log_transform
      transformations:
        - field_path: timestamp
          operation: upsert
          value:
            convert_timestamp(item["attributes"]["timestamp"], "02 Jan 2006 15:04:05.000",
            "Unix Milli")

Log Level Routing

After the timestamp transformation, the Redis pack leverages a Route node to:

- Check each log for its log level, stored in the `log_level` field

- Create two data streams by separating the `ERROR` level logs from the `INFO` level logs

To catch any logs that have an improperly formatted log_level field, the Route node also checks the log body directly via regex to locate the ERROR or INFO statements within it.

    - name: route
      type: route
      paths:
        - path: error
          condition:
            regex_match(item["body"], "(?i)ERROR") || item["attributes"]["log_level"]
            == "#"
          exit_if_matched: false
        - path: info
          condition:
            regex_match(item["body"], "(?i)INFO") || item["attributes"]["log_level"]
            == "*" || item["attributes"]["log_level"] == "-"
          exit_if_matched: false
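
The two conditions can be read as simple predicates. The Python sketch below mirrors them under two assumptions: that `regex_match` succeeds when the pattern occurs anywhere in the body, and that `exit_if_matched: false` lets a log continue on to later paths, so a single log may land on both. The `route_paths` helper and the item layout are hypothetical.

```python
import re


def route_paths(item):
    """Return the names of every route path whose condition the item satisfies."""
    body = item.get("body", "")
    level = item.get("attributes", {}).get("log_level")
    paths = []
    # Error path: "ERROR" anywhere in the body (case-insensitive) or level "#".
    if re.search(r"(?i)ERROR", body) or level == "#":
        paths.append("error")
    # Info path: "INFO" anywhere in the body (case-insensitive) or level "*" / "-".
    if re.search(r"(?i)INFO", body) or level in ("*", "-"):
        paths.append("info")
    return paths


warning = {
    "body": "1:M 02 Jan 2024 15:04:05.123 # Failed opening the RDB file",
    "attributes": {"log_level": "#"},
}
print(route_paths(warning))  # ['error']
```

Because both checks run independently in this sketch, a body that mentions both words would be returned for both paths.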

Pattern and Metric Conversion

Finally, the Redis pack compresses logs into metrics and patterns to enhance further analysis. More specifically, it:

- Utilizes the Log to Metric node to aggregate and convert `INFO` level logs into metrics, facilitating effective reporting and monitoring

- Utilizes the Log to Pattern node to identify and extract patterns from `ERROR` level logs, to pinpoint issues and anomalies
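
As a rough intuition for these two conversions, the Python sketch below counts logs per one-minute window and groups messages that differ only in their numeric parts. It is a deliberately simplified stand-in: the helper names, the one-minute window, and the digit-masking rule are assumptions for illustration and do not describe how the Log to Metric or Log to Pattern nodes are actually implemented.

```python
import re
from collections import Counter

MINUTE_MS = 60_000


def count_per_minute(timestamps_ms):
    """Count logs per one-minute bucket, keyed by the bucket start in Unix ms."""
    return Counter(ts // MINUTE_MS * MINUTE_MS for ts in timestamps_ms)


def to_pattern(message):
    """Mask runs of digits so messages differing only in numbers collapse."""
    return re.sub(r"\d+", "<num>", message)


def count_patterns(messages):
    """Count how often each masked pattern occurs."""
    return Counter(to_pattern(m) for m in messages)


# Hypothetical error messages used only to exercise the helpers.
errors = ["Client 12 closed", "Client 7 closed", "Out of memory"]
print(count_patterns(errors).most_common(1))
```

Reducing many similar lines to a handful of counted patterns or metric points is what makes the downstream data cheaper to store and quicker to scan.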

Pack Outputs

In total, there are five output paths from the Redis pack:

- `error_logs`: all Redis logs that have been classified as `ERROR` level

- `info_logs`: all Redis logs that have been classified as `INFO` level

- `info_metrics`: generated metrics from the `INFO` level Redis logs

- `error_patterns`: generated patterns from `ERROR` level Redis logs

- `unmatched_logs`: Redis logs that weren’t properly processed in the Log Parsing and Timestamp Transformation steps

For a more in-depth understanding of these processors and the Redis Pipeline Pack, check out our full Redis Pipeline Pack documentation.

Redis Pack in Practice

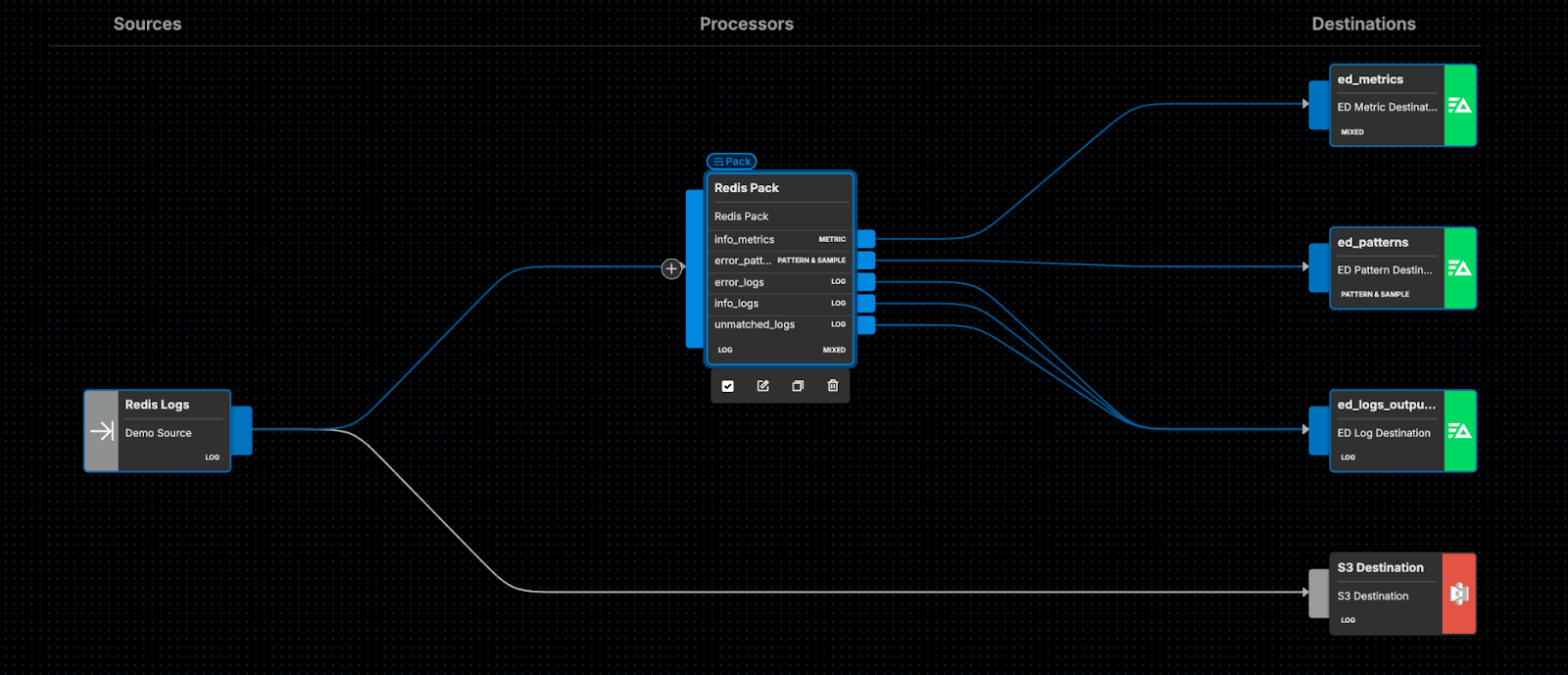

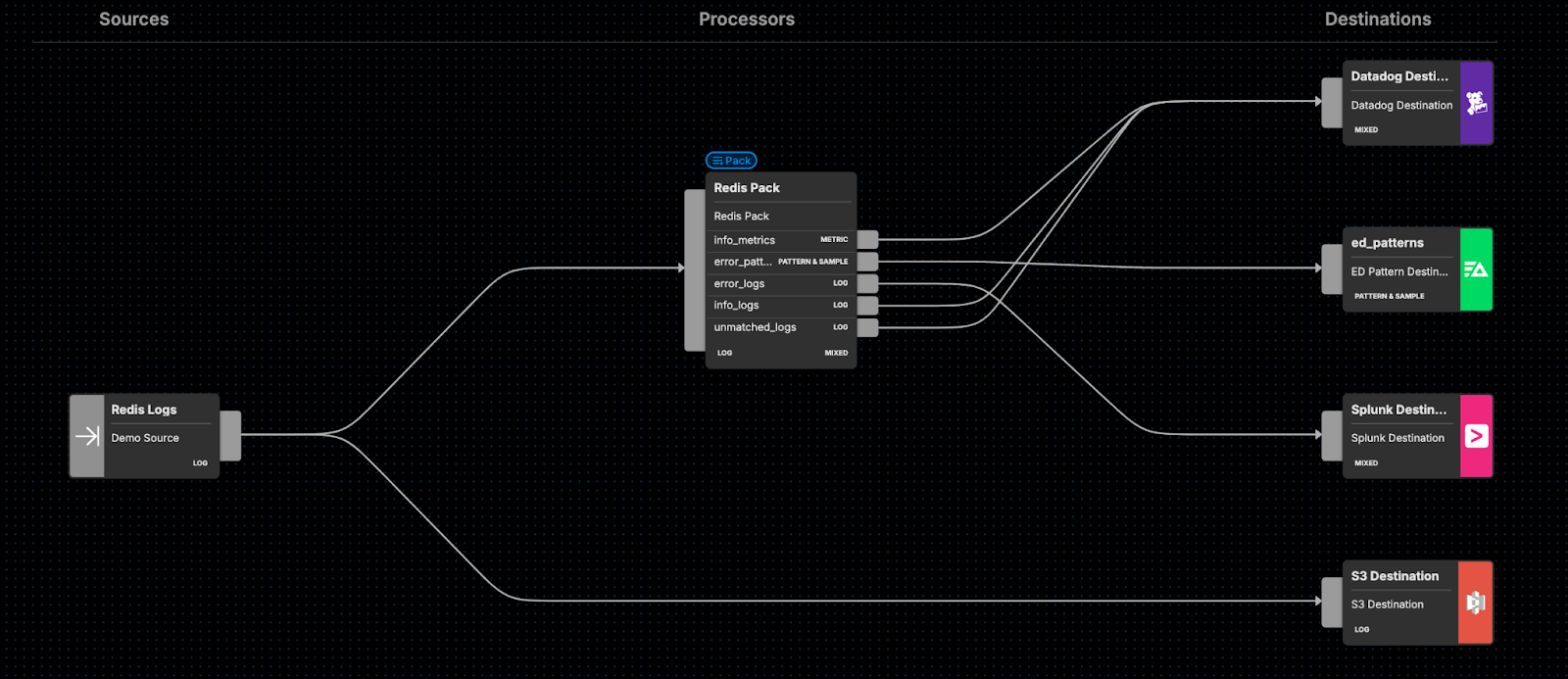

Once you’ve added the Redis pack into your Edge Delta pipeline, you can route the outputted log streams anywhere you choose.

For instance, you can route your error_patterns data flow into Edge Delta’s ed_patterns_output to leverage Edge Delta’s unique pattern analysis, route your info_metrics data flow into ed_metrics_output for metric threshold monitoring, and ship the processed logs into Edge Delta’s log search and analysis tools, as shown below:

Alternatively, you can route your Redis logs to other downstream destinations, including (but not limited to) Datadog and Splunk. As always, you can easily route a full copy of all raw data directly into S3 as well:

Getting Started

Ready to see our Redis pack in action? Visit our pipeline sandbox to try it out for free! Already an Edge Delta customer? Check out our packs list and add the Redis pack to any running pipeline!