Kubernetes (K8s) is one of the industry standards for container orchestration. It automates the deployment, scaling, and management of containerized applications.

In cloud computing, Kubernetes offers automation for engineers to manage modern applications at scale effectively. Understanding Kubernetes and its components can be overwhelming.

This article will explain Kubernetes and its components: containers, pods, nodes, and clusters. Read on to learn more.

Key Takeaways:

- Containers serve as the basic unit of software in Kubernetes, packaging applications with their necessary code, libraries, and dependencies.

- Pods are the smallest deployable units within Kubernetes, each comprising one or more containers that share resources to facilitate efficient communication and resource usage.

- Nodes are the physical or virtual machines that run containers and supply their resources, while clusters are collections of nodes that optimize resource utilization and ensure scalability and high availability.

- Best practices for Kubernetes should be observed to maintain a resilient and optimized container orchestration environment.

An Overview of Kubernetes Pods, Nodes, Containers, and Clusters

Kubernetes is an open-source platform for deploying, scaling, and managing applications with container technology. It lets operations teams schedule and run application containers efficiently across a cluster.

Kubernetes has helped businesses move from monolithic architectures to microservices-based architectures, in which application components can be scaled and deployed separately. This improves both the resilience and the scalability of the system.

In the next sections, we will dive into the different pieces that make up Kubernetes.

What are Kubernetes Containers? The Building Blocks of Application Architecture

Containers are units of software that package applications. They sit at the lowest level of the hierarchy of nodes, pods, and containers. A containerized application is like a box that holds everything the application needs to run, such as:

- Application code

- Libraries

- Dependencies

These containers are widely accepted as a standard and are often packaged as Linux containers. This standardization promotes ease of deployment and sharing, with abundant pre-built images readily available.

The practice of containerization supports streamlined CI/CD pipelines and fosters a microservices architecture. However, managing many containers by hand quickly becomes complex. This is where Kubernetes comes in, helping to manage containers efficiently.

How Do Kubernetes Pods Work? The Atomic Unit of Kubernetes Deployment

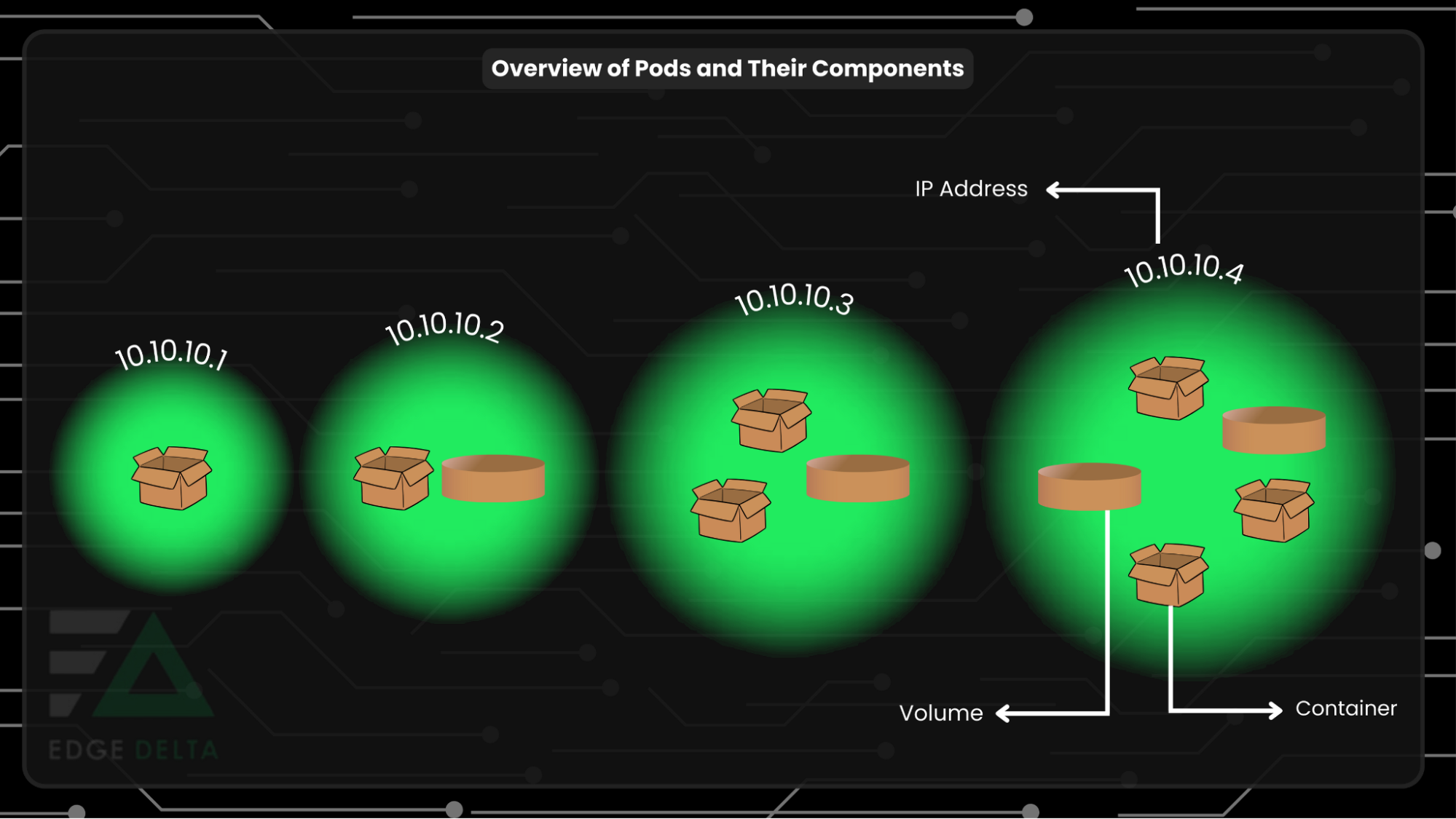

Pods are the smallest deployable units of Kubernetes. In its most basic definition, a Pod is a single running instance of a process in a cluster, possibly comprising several containers that Kubernetes manages as a single unit.

Pods host co-located containers that share resources, such as networking and storage, for efficient communication and resource use. This is especially helpful when, for example, a sidecar container needs to add functionality to the main container.

There are two ways Pods are used in a Kubernetes cluster:

- Running a single container: This is the most common use case. The Pod acts as a wrapper around a single container, and Kubernetes manages the Pod rather than the container directly.

- Running multiple containers: A Pod can encapsulate an application made up of multiple co-located containers that share resources. This is an advanced use case and should only be used when the containers are tightly coupled.
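The multi-container case can be illustrated with a minimal sketch. The Python snippet below builds a Pod manifest as a plain dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The function name `build_pod_manifest`, the container names, the image references, and the mount path are all hypothetical placeholders chosen for illustration, not values from any real deployment.

```python
import json


def build_pod_manifest(name: str, app_image: str, sidecar_image: str) -> dict:
    """Return a Pod manifest with a main container and a logging sidecar.

    All names, images, and paths are hypothetical placeholders.
    """
    shared_volume = {"name": "shared-logs", "emptyDir": {}}
    mount = {"name": "shared-logs", "mountPath": "/var/log/app"}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            # Both containers share the Pod's network namespace and this volume.
            "volumes": [shared_volume],
            "containers": [
                {"name": "app", "image": app_image, "volumeMounts": [dict(mount)]},
                {"name": "log-shipper", "image": sidecar_image, "volumeMounts": [dict(mount)]},
            ],
        },
    }


if __name__ == "__main__":
    pod = build_pod_manifest("web", "example.com/web:1.0", "example.com/log-shipper:1.0")
    print(json.dumps(pod, indent=2))
```

Dropping the second entry from `containers` (and the volume, if nothing else needs it) gives the single-container form, where the Pod is simply a wrapper around one container.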

Generally, a pod remains on its node until one of the following happens:

- The pod’s process is terminated.

- The pod object is deleted.

- The pod is evicted because the node lacks resources.

- The host node fails or is terminated.

Because a pod is scaled as a single unit, it is advisable to limit the number of containers in each pod so that it can be scaled quickly.

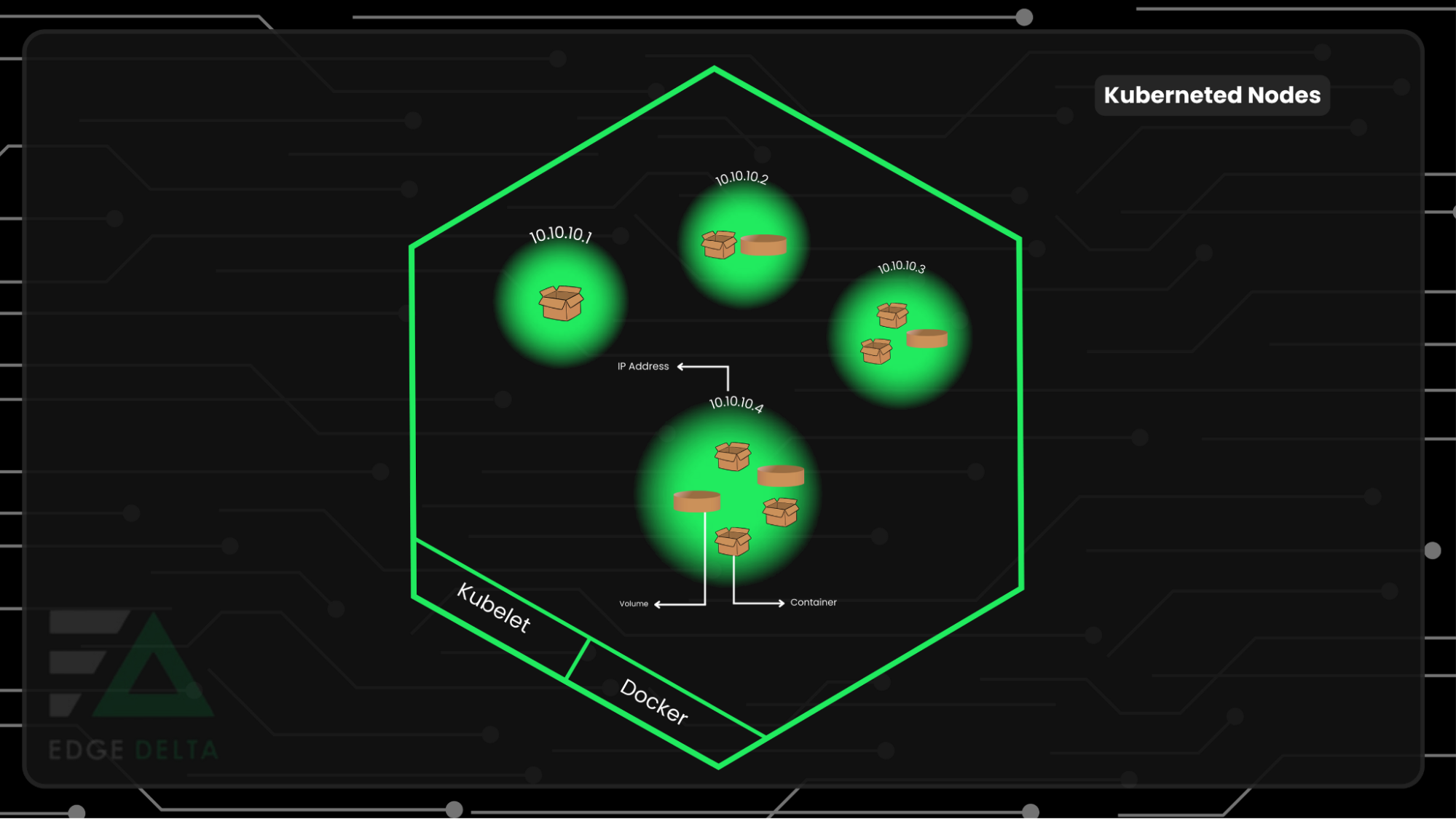

What are Nodes in Kubernetes? The Workhorses of the Kubernetes Ecosystem

In Kubernetes, a node is a physical or virtual machine that supplies the resources needed to run processes. Nodes are the smallest units of computing hardware in the platform, and each node runs the containers that Kubernetes manages.

Key Components of a Kubernetes Node:

- Kubelet: The node agent that runs on every node in the cluster and ensures that containers are running as defined by Kubernetes manifests (configuration files).

- Container Runtime: This is the software that runs containers. Kubernetes supports runtimes that implement the Container Runtime Interface (CRI), such as containerd and CRI-O. Docker Engine can still be used through the cri-dockerd adapter.

- Kubernetes Proxy (kube-proxy): This network proxy runs on each node and maintains the network rules. These rules allow pods to communicate with other network endpoints, whether inside or outside the cluster.

- cAdvisor: Short for “Container Advisor.” It runs on every node, collecting and exposing resource usage and performance metrics for the containers and for the node as a whole.

- Pods: the smallest deployable units in Kubernetes. The pod may contain one or more containers and related network and storage components. The control plane schedules the Pods onto Nodes.

Nodes also have their specific roles in the orchestration. These are the following:

- Execution: Each node executes the assigned tasks, running the containers that make up your applications.

- Networking: Nodes handle communication between Pods, services, and external traffic.

- Storage: Nodes may provide local storage to the containers running on them. This storage can hold temporary data or serve other purposes.

- Monitoring and Reporting: Nodes report status information, including resource usage and other metrics, to the control plane.

Kubernetes Clusters: The Heart of Kubernetes Scalability and Efficiency

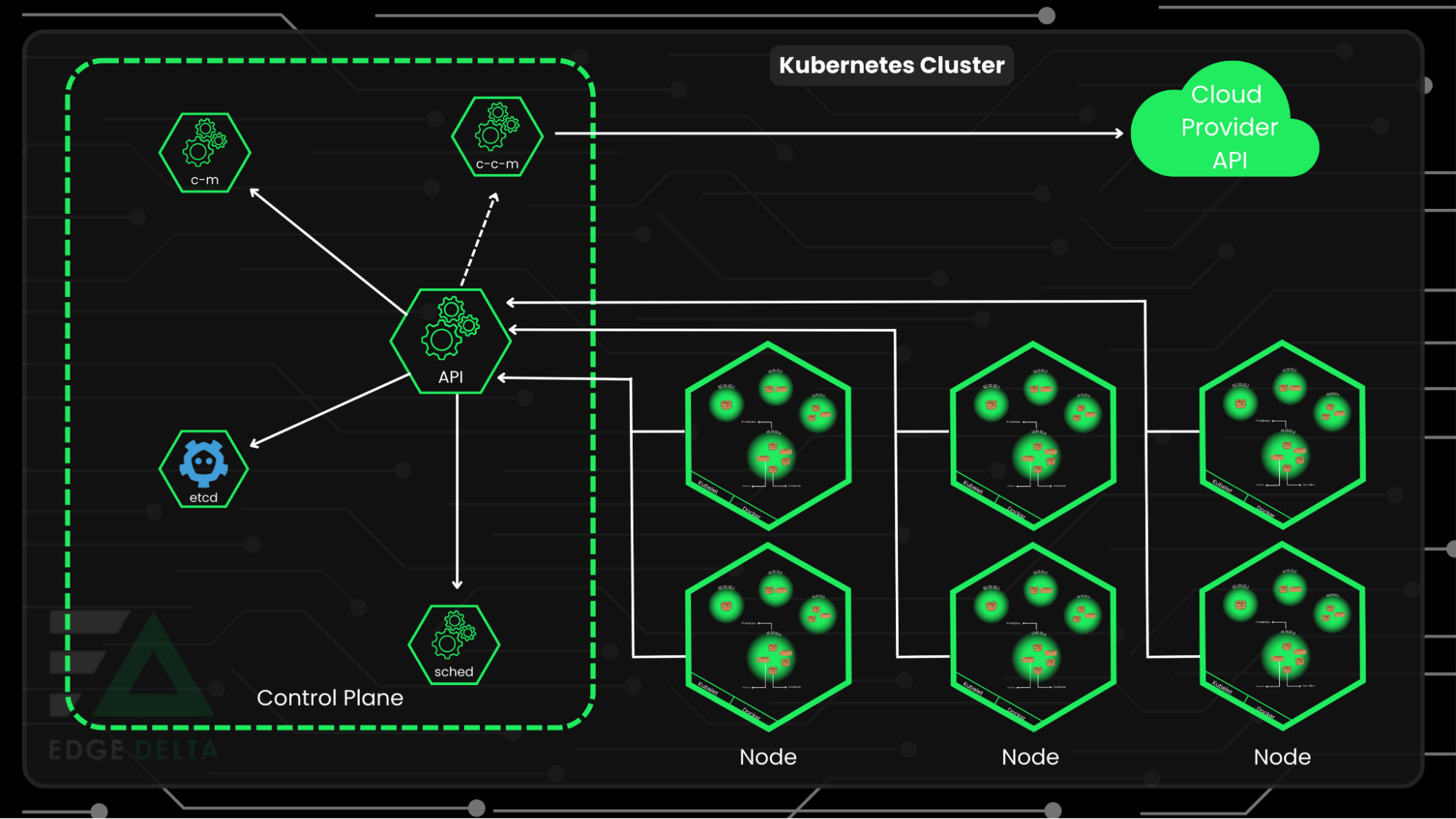

Kubernetes clusters help streamline the deployment and management of applications across different computing environments. A cluster is composed of a control plane (traditionally called the master node) and a set of worker nodes that run applications.

Clusters open the way for scaling applications built from lightweight containers, bringing flexibility and reducing resource consumption. Kubernetes also supports namespaces, which divide cluster resources so that multiple teams can collaborate on complex projects.

A Kubernetes cluster is built on six core components that ensure its functionality and efficiency. These components include:

- API Server: This is the front-end interface of the Kubernetes Control Plane. It acts as the management endpoint of the entire cluster, allowing both users and internal components to query and change the state of the cluster.

- Scheduler: A control plane component that assigns pods to nodes in the cluster. It determines the best node for each pod based on resource requirements, node capacity, and any other constraints or preferences of the pod, so that resources are used effectively.

- Controller Manager: Runs several controller processes, each of which compares the current state of the cluster with the desired state described in the configurations and works to bring the two in line.

- Kubelet: The kubelet instructs the container runtime (e.g., containerd) to start and run the containers of the pods assigned to its node.

- Kube-proxy: It manages network connectivity and applies network rules on each node so that pods can be reached from inside and outside the cluster.

- Etcd: A strongly consistent, distributed key-value store that serves as the cluster’s backing store, holding all configuration and state information for the cluster.
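To make the Scheduler’s job more concrete, here is a toy sketch of the filter-then-score idea: discard nodes that cannot fit the pod, then pick the best of the remainder. It is not the real kube-scheduler algorithm. The `Node` and `PodRequest` classes, the `pick_node` function, and the "most headroom" scoring rule are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


@dataclass
class PodRequest:
    name: str
    cpu_millicores: int
    memory_mib: int


def pick_node(pod: PodRequest, nodes: List[Node]) -> Optional[Node]:
    """Filter out nodes that cannot fit the pod, then pick the one with most headroom."""
    feasible = [
        n for n in nodes
        if n.free_cpu_millicores >= pod.cpu_millicores
        and n.free_memory_mib >= pod.memory_mib
    ]
    if not feasible:
        return None  # no node fits; the pod would remain unscheduled
    # Toy score: prefer the most CPU left after placement, then the most memory.
    return max(
        feasible,
        key=lambda n: (
            n.free_cpu_millicores - pod.cpu_millicores,
            n.free_memory_mib - pod.memory_mib,
        ),
    )


if __name__ == "__main__":
    nodes = [
        Node("node-a", 500, 1024),   # too little CPU
        Node("node-b", 2000, 4096),  # fits
        Node("node-c", 4000, 512),   # too little memory
    ]
    chosen = pick_node(PodRequest("api", 1000, 1024), nodes)
    print(chosen.name if chosen else "unschedulable")
```

In this example only `node-b` passes the filter, so it is selected. A real scheduler weighs many more factors, such as affinity rules and taints, which this sketch leaves out.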

Simply put, nodes pool together to create a more powerful machine. When programs are deployed onto the cluster, it distributes the work across the individual nodes. The cluster adjusts automatically as nodes are added or removed.
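The way a cluster adjusts can be pictured as a reconciliation step: compare the desired state with what is actually running and work out the corrective action. The sketch below is a simplified illustration under that assumption. The `reconcile` function and its return shape are hypothetical and do not correspond to a Kubernetes API.

```python
from typing import Dict, List


def reconcile(desired_replicas: int, running_pods: List[str]) -> Dict[str, object]:
    """Compare desired and observed state and return the corrective actions."""
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        # Too few pods, for example after a node was removed: create replacements.
        return {"create": diff, "delete": []}
    if diff < 0:
        # Too many pods: delete the surplus (here, simply the last ones listed).
        return {"create": 0, "delete": running_pods[diff:]}
    return {"create": 0, "delete": []}


if __name__ == "__main__":
    # A node hosting two of three replicas has gone away, leaving one pod.
    print(reconcile(3, ["web-1"]))
    # The desired count was lowered from three to one.
    print(reconcile(1, ["web-1", "web-2", "web-3"]))
```

A real controller runs this kind of comparison continuously rather than once, which is how the cluster keeps converging on the declared state as nodes come and go.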

Below is a table that describes the main components of Kubernetes: pods, nodes, containers, and clusters, along with their functions.

| Component | Description | Functions |
|---|---|---|
| Pods | The smallest deployable units of computing that can be created and managed in Kubernetes. A pod is a group of one or more containers. | Hosting application instances. Sharing resources and networking. Managing storage volumes. Atomic unit of scaling. |
| Nodes | Physical or virtual machines that make up the Kubernetes cluster. Each node can host multiple pods. | Running pods. Providing necessary resources to pods. Reporting status information to the master components. |
| Containers | Lightweight, executable packages that include everything needed to run a piece of software, including the code, runtime, libraries, etc. | Encapsulating the application workload. Ensuring environment consistency. Enhancing isolation and resource management. |
| Clusters | Sets of nodes pooled together to run your containers and services. | Orchestrating containers across multiple nodes. Managing workload scalability. Ensuring availability and resilience. |

Best Practices for Using K8s

Kubernetes has revolutionized container management and deployment. However, its complexity requires adherence to certain best practices to ensure efficient, secure, and stable operations.

Here are some important best practices for using Kubernetes and its components:

- Ensure efficient resource utilization by limiting the number of pods per node to prevent resource contention.

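- Enforce pod security standards to protect your cluster from unauthorized access and vulnerabilities. Note that the PodSecurityPolicy API was removed in Kubernetes v1.25 and replaced by Pod Security Admission.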

- Maintain node security by implementing role-based access control (RBAC) and updating your nodes with the latest security patches.

- Monitor node health and performance regularly to address potential issues preemptively.

- Containers should be as lightweight and secure as possible. Include only the necessary packages and software to minimize the attack surface.

- Allocate resources efficiently, for example by setting resource requests and limits, to avoid wastage and ensure that no container monopolizes node resources and degrades other containers’ performance.

- Manage your clusters effectively by employing version control for cluster configurations, regularly backing up your cluster data, and having a disaster recovery plan.
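The resource-allocation practice can be sketched with container resource requests and limits. The snippet below builds a container spec with a `resources` section and rejects a request that exceeds its limit. The helper names and the example values are hypothetical, and the quantity parsers are deliberately simplified: they handle only the `m` suffix for CPU and the `Mi` and `Gi` suffixes for memory.

```python
from typing import Dict


def cpu_to_millicores(quantity: str) -> int:
    """Parse a CPU quantity such as "250m" or "2" (only these two forms)."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


def memory_to_mib(quantity: str) -> int:
    """Parse a memory quantity with a Mi or Gi suffix (other suffixes unsupported)."""
    if quantity.endswith("Gi"):
        return int(quantity[:-2]) * 1024
    if quantity.endswith("Mi"):
        return int(quantity[:-2])
    raise ValueError(f"unsupported memory quantity: {quantity}")


def container_with_resources(
    name: str, image: str, requests: Dict[str, str], limits: Dict[str, str]
) -> dict:
    """Build a container spec, rejecting requests that exceed limits."""
    if cpu_to_millicores(requests["cpu"]) > cpu_to_millicores(limits["cpu"]):
        raise ValueError("CPU request exceeds CPU limit")
    if memory_to_mib(requests["memory"]) > memory_to_mib(limits["memory"]):
        raise ValueError("memory request exceeds memory limit")
    return {
        "name": name,
        "image": image,
        "resources": {"requests": dict(requests), "limits": dict(limits)},
    }


if __name__ == "__main__":
    spec = container_with_resources(
        "app",
        "example.com/app:1.0",  # hypothetical image
        requests={"cpu": "250m", "memory": "128Mi"},
        limits={"cpu": "500m", "memory": "256Mi"},
    )
    print(spec["resources"])
```

Requests tell the scheduler how much capacity to reserve for the container, while limits cap what it may consume at runtime, so setting both helps keep one workload from starving its neighbors.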

These practices will help you have a resilient container orchestration environment, harness the full potential of Kubernetes, and maintain optimal operations.

Final Thoughts

Kubernetes stands as a powerful and sophisticated platform in container orchestration. Through its intricate yet efficient system of pods, nodes, containers, and clusters, Kubernetes offers a scalable, resilient, and highly automated ecosystem that adapts to the dynamic demands of modern cloud computing.

Understanding and effectively utilizing Kubernetes components like pods, nodes, containers, and clusters is essential for developers and operations teams aiming to harness the full potential of containerized applications. Whether you are just starting or looking to deepen your understanding, the journey into Kubernetes offers a rewarding path toward mastering container orchestration.

FAQs About Kubernetes

What is Kubernetes, and how does it work?

Kubernetes is an open-source platform designed to automate deploying, scaling, and operating application containers. It groups containers that make up an application into logical units for easy management and discovery.

How does Kubernetes differ from Docker?

Docker is a containerization platform that packages an application and its dependencies into a container that can run on any Linux server. On the other hand, Kubernetes is a container orchestration platform that manages containers deployed across multiple hosts, providing basic mechanisms for deploying, maintaining, and scaling applications.

What are the main components of Kubernetes?

Kubernetes comprises essential components like Pods, which represent running processes, Services for network exposure, and Deployments for managing application deployment and scaling. These components provide a robust framework for deploying, managing, and scaling containerized applications in a Kubernetes cluster.
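As a rough illustration of how a Deployment and a Service fit together, the sketch below builds both manifests as Python dictionaries and prints them as JSON. The function names, the `app` label key, the image reference, and the port numbers are hypothetical choices for this example. The point it shows is that the Service finds its pods through the same labels the Deployment stamps onto them.

```python
import json


def build_deployment(name: str, image: str, replicas: int) -> dict:
    """Return a Deployment manifest that keeps `replicas` copies of a pod running."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels in the pod template below.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": 8080}]}
                    ]
                },
            },
        },
    }


def build_service(name: str, port: int, target_port: int) -> dict:
    """Return a Service manifest that routes traffic to pods labeled app=<name>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": {"app": name},
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_deployment("web", "example.com/web:1.0", 3), indent=2))
    print(json.dumps(build_service("web", 80, 8080), indent=2))
```

Changing `replicas` and re-applying the Deployment is how scaling is expressed declaratively. The Service keeps routing to whichever pods carry the matching label.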