Data transformation involves converting data from one format or structure into another to suit a specific standard. This process allows businesses to derive insights from raw data. In the context of observability, data transformation is the process of converting raw log data into a format that is more suitable for analysis, storage, or visualization.

Successful data transformation relies on scalable, flexible tools that can handle different formats and sources. In observability, this often means using an observability pipeline.

Read on for expert tips on data transformation and how it can benefit an organization. We’ll cover applications of data transformation both inside and outside the context of observability.

Data Transformation Overview

IT teams often start with raw data, such as text, figures, or images, that is not always in the right format or structure for their needs. Data transformation converts unprocessed data from different sources into a consistent, verified format suitable for analysis, storage, and visualization.

The process involves a sequence of actions that cleans, arranges, and prepares the data for analysis. It helps make data more digestible and helpful in deriving insights or taking action based on its findings.

Data transformation is about changing the content or structure of data to make it valuable. It is a vital process in data engineering as it helps firms meet operational objectives and extract useful insights.

The data transformation process has two main stages:

- Data mapping: This stage assigns each component in the source system to its counterpart in the target system and records every change. Complex rules, such as many-to-one or one-to-many mappings, can complicate this stage.

- Code generation: This stage creates a transformation program that can run on several platforms, which is key to seamless operation and compatibility across them.

A transformation can be constructive, destructive, aesthetic, or structural. It can be performed manually, automatically, or through a combination of both.

- Constructive: Enhances data by adding, duplicating, or copying information to improve data quality and analysis for machine learning (ML).

- Destructive: Removes unnecessary records and fields to improve analysis and model performance.

- Aesthetic: Standardizes particular values for coherence and consistency, which keeps data formats and visualizations clear.

- Structural: Modifies the data structure to improve analysis, integrate various sources, enable ML, and improve visualization. An example is renaming, reorganizing, or combining columns for optimization and accessibility.
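
The four kinds of change listed above can be illustrated on a single record. The following is a minimal sketch, not a reference implementation; the sample records and field names (`first`, `last`, `debug`, `customer_id`) are hypothetical.

```python
# Hypothetical sample records; field names are illustrative only.
records = [
    {"id": 1, "first": "Ada", "last": "Lovelace", "country": "uk", "debug": "x"},
    {"id": 2, "first": "Alan", "last": "Turing", "country": "UK ", "debug": "y"},
]

def transform(record):
    out = dict(record)  # work on a copy so the source record is untouched
    # Constructive: add a field derived from existing ones.
    out["full_name"] = f"{out['first']} {out['last']}"
    # Destructive: drop a field that is not needed for analysis.
    out.pop("debug", None)
    # Aesthetic: standardize the formatting of a value.
    out["country"] = out["country"].strip().upper()
    # Structural: rename a column.
    out["customer_id"] = out.pop("id")
    return out

transformed = [transform(r) for r in records]
```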

Although transforming data is time-consuming, the investment yields benefits that ultimately drive better decision-making and operational efficiency. The following section discusses techniques for transforming data.

Did You Know?

Currently, 99% of businesses fund data transformation projects. These companies report active investment in emerging areas in tech like big data and Artificial Intelligence (AI).

Data Transformation Techniques

Numerous issues in data analysis projects can be resolved using various data transformation techniques. The following are common data transformation strategies and brief discussions of how each technique works:

Data Smoothing: Identifies Patterns While Eliminating Unnecessary Data

When noise or fluctuation in the data masks the underlying patterns, smoothing can be helpful. This technique removes noise or irrelevant data from a dataset while uncovering subtle patterns or trends through minor modifications.
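
One common way to smooth a series is a moving average. The sketch below assumes a trailing window of three points and uses made-up latency values; other smoothing methods would work equally well here.

```python
def moving_average(values, window=3):
    """Trailing moving average; early points use however many values exist so far."""
    smoothed = []
    for i in range(len(values)):
        start = max(0, i - window + 1)
        chunk = values[start:i + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed

# Hypothetical latency samples with one noisy spike.
latencies_ms = [100, 102, 250, 101, 99, 103]
smoothed = moving_average(latencies_ms, window=3)
```

The spike at 250 is spread across neighboring points, so the overall level of the series is easier to see.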

Data Aggregation: Combining Data for Accurate Analysis

Aggregation can be helpful in situations like financial analysis, observability, and sales forecasting, where data from many sources needs to be examined together. It consolidates data from various sources into a unified format, facilitating accurate analysis and reporting, particularly for large volumes of data.
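
As a rough illustration, the sketch below sums a numeric field per group. The sales rows and the helper name `total_by` are assumptions made for this example.

```python
from collections import defaultdict

# Hypothetical rows collected from two sources ("web" and "store").
sales = [
    {"region": "east", "source": "web", "amount": 120.0},
    {"region": "east", "source": "store", "amount": 80.0},
    {"region": "west", "source": "web", "amount": 50.0},
]

def total_by(rows, key, value):
    """Sum the `value` field of each row, grouped by the `key` field."""
    totals = defaultdict(float)
    for row in rows:
        totals[row[key]] += row[value]
    return dict(totals)

totals = total_by(sales, "region", "amount")
```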

Discretization: Simplifies Data Into Analysis-Ready Intervals

This technique uses decision tree algorithms to create interval labels for continuous data, turning extensive datasets into concise categorical data. The result is simpler, more efficient analysis.
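
The text mentions decision tree algorithms for choosing intervals. The sketch below deliberately uses a simpler substitute, fixed hand-picked bin edges, to show what the resulting interval labels look like. The edges and label names are assumptions.

```python
from bisect import bisect_right

# Hand-picked bin edges in milliseconds (an assumption, not learned from data).
EDGES = [100, 500, 1000]
LABELS = ["fast", "normal", "slow", "very_slow"]

def discretize(value):
    """Map a continuous value to the label of the interval it falls into."""
    return LABELS[bisect_right(EDGES, value)]

labels = [discretize(v) for v in (50, 100, 999, 1000)]
```

A value equal to an edge falls into the higher interval, so 100 is labeled `normal` rather than `fast`.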

Generalization: Summarizes Detailed Characteristics for Clarity

Concept hierarchies, which convert detailed attributes into higher-level ones, offer a clearer snapshot of the data. Generalization can be helpful in situations like image or speech recognition, where the dataset is too complicated to evaluate directly.
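
A concept hierarchy can be as simple as a pair of lookup tables. This sketch assumes a hypothetical city, country, region hierarchy and rolls a detailed attribute up to a chosen level.

```python
# Hypothetical concept hierarchy: city -> country -> region.
CITY_TO_COUNTRY = {"Paris": "France", "Lyon": "France", "Osaka": "Japan"}
COUNTRY_TO_REGION = {"France": "Europe", "Japan": "Asia"}

def generalize(city, level="country"):
    """Replace a city with its country or region; unmapped values become 'unknown'."""
    country = CITY_TO_COUNTRY.get(city, "unknown")
    if level == "country":
        return country
    return COUNTRY_TO_REGION.get(country, "unknown")

countries = [generalize(c) for c in ("Paris", "Lyon", "Osaka")]
regions = [generalize(c, level="region") for c in ("Paris", "Osaka", "Atlantis")]
```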

Attribute Construction: Creates New Attributes for Deeper Insights

In Attribute Construction, new attributes are generated from existing ones, organizing the dataset more effectively to reveal additional insights. The objective is to create additional data attributes that enhance the machine learning model’s performance and are more indicative of the underlying patterns in the data.
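
For example, an order record with a quantity, a unit price, and a date can yield a total and a weekend flag. The field names below are hypothetical, and the choice of derived attributes is only one possibility.

```python
from datetime import date

def add_attributes(order):
    """Return a copy of the order with two attributes derived from existing fields."""
    enriched = dict(order)
    enriched["total"] = order["quantity"] * order["unit_price"]
    # weekday() returns 5 for Saturday and 6 for Sunday.
    enriched["is_weekend"] = date.fromisoformat(order["date"]).weekday() >= 5
    return enriched

# Hypothetical order; 2024-03-02 was a Saturday.
order = add_attributes({"date": "2024-03-02", "quantity": 3, "unit_price": 4.5})
```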

Normalization: Modifies Data to Facilitate Efficient Analysis

This process standardizes the format and structure of data to ensure consistency. This makes it easier to analyze and compare data.

Manipulation: Alters Data for Readability and Insight

Data manipulation involves modifying data to enhance readability and organization, using tools to identify patterns and turn data into actionable insights. It is necessary to make a dataset precise and dependable for analysis or machine learning models.

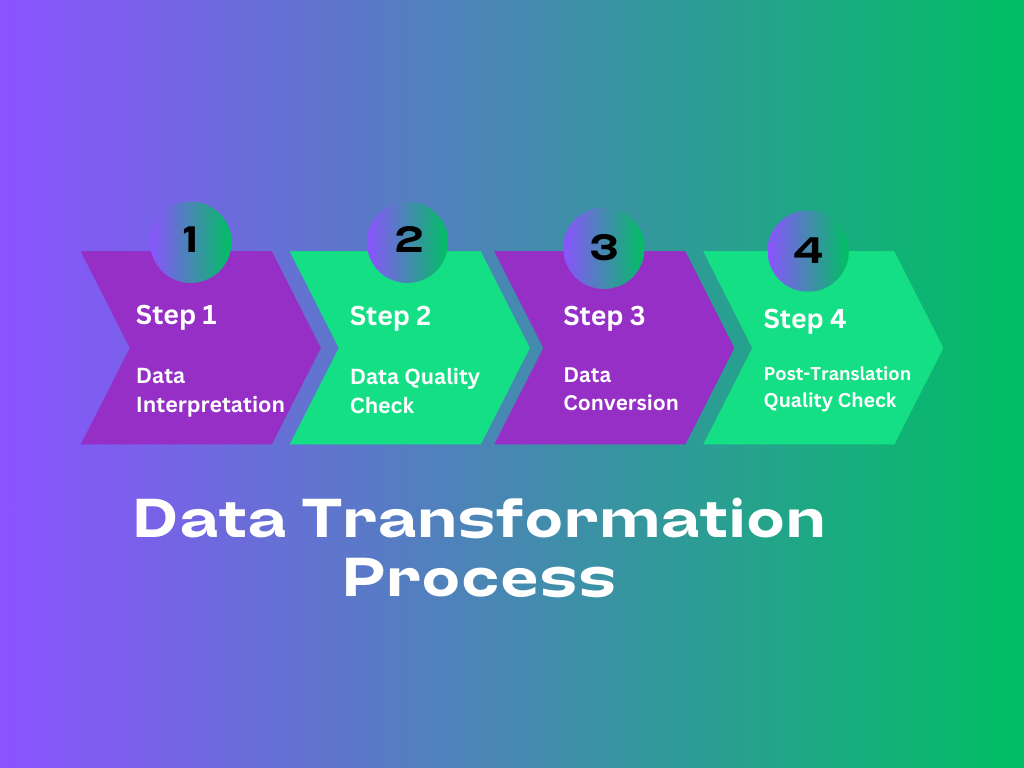

Data Transformation Process

Knowing the steps of the data transformation process makes it easier to identify the best way to fix various data challenges.

Step 1: Data Interpretation

Start by interpreting your data to understand its existing format and what needs to change. This can be challenging due to discrepancies between database table names, file extensions, and actual contents.

Step 2: Data Quality Check

Conduct a thorough check of the source data to uncover anomalies, such as missing or corrupted values. Ensuring the integrity of the data at this stage is crucial for subsequent transformation processes.

Step 3: Data Conversion

Translate source data into the desired format, guaranteeing compatibility with the target format’s requirements. It may involve replacing outdated elements or restructuring the data for optimal organization.

Step 4: Post-Translation Quality Check

Verify the translated data to find any errors or inconsistencies the translation process may have introduced. This step guarantees the reliability and accuracy of the transformed data for future usage.

Following these steps helps you avoid needless and expensive errors.
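
The four steps can be sketched end to end on a tiny dataset. This example assumes a CSV source and a JSON target; the column names and the choice to represent missing values as `None` are illustrative, not prescribed by the process.

```python
import csv
import io
import json

# Hypothetical source data: CSV text in which one value is missing.
SOURCE_CSV = "id,temp_c\n1,21.5\n2,\n3,19.0\n"

def quality_check(rows):
    """Step 2: return the rows that contain an empty field."""
    return [row for row in rows if any(v == "" for v in row.values())]

def convert(rows):
    """Step 3: cast fields to the target types, using None for missing values."""
    return [
        {"id": int(row["id"]),
         "temp_c": float(row["temp_c"]) if row["temp_c"] else None}
        for row in rows
    ]

rows = list(csv.DictReader(io.StringIO(SOURCE_CSV)))  # Step 1: read and inspect
problems = quality_check(rows)
converted = convert(rows)
target_json = json.dumps(converted)

# Step 4: re-read the output and confirm no rows were lost in conversion.
round_trip = json.loads(target_json)
assert len(round_trip) == len(rows)
```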

Data Transformation in Observability

When it comes to observability, there are slight differences in the application of data transformation. Specifically, you will likely need data transformation capabilities for your log events.

In a large-scale environment, you will have many different systems and services that emit different types of logs. Data transformation capabilities can help you standardize and optimize these datasets to ensure efficient observability.

In observability, you can execute the following steps to transform your log data:

Step 1: Parsing Log Data

Log data often arrives in unstructured or semi-structured formats. By parsing your log events, you break down the data into individual fields or attributes, such as timestamp, log level, message, source IP address, user ID, etc. This step makes it easier to work with the data and extract relevant information.
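
A regular expression with named groups is one simple way to parse a log line into fields. The line layout assumed here (timestamp, level, IP address, `user=` field, then message) is hypothetical; real log formats vary by source.

```python
import re

# Assumed line layout: "<timestamp> <LEVEL> <ip> user=<id> <message>"
LOG_PATTERN = re.compile(
    r"(?P<timestamp>\S+) (?P<level>[A-Z]+) (?P<source_ip>\S+) "
    r"user=(?P<user_id>\S+) (?P<message>.*)"
)

def parse_line(line):
    """Return a dict of fields, or None if the line does not match the layout."""
    match = LOG_PATTERN.match(line)
    return match.groupdict() if match else None

event = parse_line("2024-05-01T12:00:00Z ERROR 10.0.0.7 user=42 payment failed")
```

Returning `None` for lines that do not match lets a later step decide whether to drop or quarantine them.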

Step 2: Normalizing Events

Log data may contain inconsistencies or variations in formatting across different sources or systems. Normalization enables you to adopt a consistent format, making it easier to run analytics across datasets.
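
The sketch below normalizes two fields that commonly differ across sources: the log level spelling and the timestamp representation. The alias table is an assumption, and ISO 8601 strings are assumed to carry a UTC offset or a trailing `Z`.

```python
from datetime import datetime, timezone

# Hypothetical spellings mapped to one canonical level name.
LEVEL_ALIASES = {"warn": "WARNING", "warning": "WARNING",
                 "err": "ERROR", "error": "ERROR", "info": "INFO"}

def normalize(event):
    """Return a copy with a canonical level and a UTC ISO 8601 timestamp."""
    out = dict(event)
    level = str(event["level"]).lower()
    out["level"] = LEVEL_ALIASES.get(level, level.upper())
    ts = event["timestamp"]
    if isinstance(ts, (int, float)):
        # Numeric timestamps are treated as epoch seconds.
        parsed = datetime.fromtimestamp(ts, tz=timezone.utc)
    else:
        # Strings are assumed to be ISO 8601 with an offset or a trailing "Z".
        parsed = datetime.fromisoformat(ts.replace("Z", "+00:00")).astimezone(timezone.utc)
    out["timestamp"] = parsed.isoformat()
    return out

a = normalize({"timestamp": 0, "level": "warn"})
b = normalize({"timestamp": "2024-05-01T12:00:00Z", "level": "Err"})
```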

Step 3: Enriching Log Events

Sometimes, additional context or information may be needed to understand log entries fully and/or investigate issues faster. Enrichment involves augmenting the log data with supplementary data from other sources, such as reference tables, databases, or external APIs. This could include adding geographical information based on IP addresses, correlating user IDs with user profiles, or appending metadata about the environment in which the logs were generated.
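
Enrichment often reduces to a lookup against a reference table. In this sketch the tables are in-memory dictionaries with made-up values; a real pipeline might query a database or an external API instead.

```python
# Hypothetical reference data used for illustration only.
USER_PROFILES = {"42": {"plan": "enterprise"}}
ENVIRONMENT = {"env": "production", "cluster": "cluster-a"}

def enrich(event):
    """Return a copy of the event with user profile and environment metadata added."""
    out = dict(event)
    # Unknown users get an empty profile rather than raising an error.
    out["user"] = USER_PROFILES.get(event.get("user_id"), {})
    out.update(ENVIRONMENT)
    return out

enriched = enrich({"user_id": "42", "message": "payment failed"})
```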

Step 4: Filtering and Cleaning

Not all log entries are relevant or useful for analysis. Filtering involves removing irrelevant or redundant entries, while cleaning involves correcting errors, removing duplicates, and handling missing or inconsistent data. In some cases, you may also want to remove specific fields from your logs to reduce their verbosity.
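
A minimal sketch of this step follows. Which levels to drop, which fields to strip, and what counts as a duplicate are all assumptions chosen for the example.

```python
def filter_and_clean(events, drop_levels=("DEBUG",), drop_fields=("stack",)):
    """Drop unwanted levels and fields, fill missing messages, and remove duplicates."""
    seen = set()
    cleaned = []
    for event in events:
        if event.get("level") in drop_levels:
            continue  # filtering: skip irrelevant entries
        slim = {k: v for k, v in event.items() if k not in drop_fields}
        slim.setdefault("message", "")  # cleaning: handle a missing field
        key = (slim.get("timestamp"), slim.get("level"), slim["message"])
        if key in seen:
            continue  # cleaning: skip exact repeats
        seen.add(key)
        cleaned.append(slim)
    return cleaned

# Hypothetical events: one debug line, one duplicate pair, one missing message.
events = [
    {"timestamp": "t1", "level": "DEBUG", "message": "noise"},
    {"timestamp": "t2", "level": "ERROR", "message": "boom", "stack": "trace"},
    {"timestamp": "t2", "level": "ERROR", "message": "boom", "stack": "trace"},
    {"timestamp": "t3", "level": "INFO"},
]
cleaned = filter_and_clean(events)
```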

Step 5: Aggregation

In some cases, it may be beneficial to aggregate log data to summarize or condense information for analysis. This could involve grouping log entries by certain criteria (e.g., time intervals, source, severity) and calculating metrics such as counts, averages, or sums.
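
Grouping by time interval and severity can be sketched with a counter. The example assumes timestamps are ISO 8601 strings, so the first 16 characters identify the minute.

```python
from collections import Counter

def count_by_minute_and_level(events):
    """Count events per (minute, level) pair."""
    counts = Counter()
    for event in events:
        minute = event["timestamp"][:16]  # "YYYY-MM-DDTHH:MM"
        counts[(minute, event["level"])] += 1
    return counts

# Hypothetical normalized events.
events = [
    {"timestamp": "2024-05-01T12:00:05+00:00", "level": "ERROR"},
    {"timestamp": "2024-05-01T12:00:40+00:00", "level": "ERROR"},
    {"timestamp": "2024-05-01T12:01:10+00:00", "level": "INFO"},
]
counts = count_by_minute_and_level(events)
```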

Step 6: Transformation

Finally, data may need to be transformed to meet specific requirements or to enable particular types of analysis or visualization. This could include converting data types, applying mathematical or statistical transformations, or reshaping the data into a different structure, such as pivot tables or time series.
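
Two of the operations mentioned above, reshaping and type conversion, can be sketched as small helpers. The input shape (counts keyed by minute and level) and the millisecond duration field are assumptions.

```python
def pivot(counts):
    """Reshape {(minute, level): n} into a time series {minute: {level: n}}."""
    table = {}
    for (minute, level), n in counts.items():
        table.setdefault(minute, {})[level] = n
    return table

def to_seconds(duration_ms):
    """Convert a millisecond value stored as a string into float seconds."""
    return float(duration_ms) / 1000.0

# Hypothetical aggregated counts.
counts = {("12:00", "ERROR"): 2, ("12:00", "INFO"): 5, ("12:01", "INFO"): 1}
series = pivot(counts)
seconds = to_seconds("250")
```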

Now that you know the key components of the data transformation process, here are some advantages and challenges of implementing this technique across your organization.

Data Transformation’s Role in Businesses

Why is data transformation required in businesses? Enterprises produce large amounts of data daily, but its real worth comes from its capacity to provide insights and foster organizational development. Unlocking this potential requires data transformation, which enables businesses to change unprocessed data into formats that can be used for various tasks.

In today’s data-rich environment, data transformation is crucial for companies, as it facilitates seamless data conversion and enables integration, analysis, and well-informed decision-making. Data pipelines frequently include several transformations that turn raw inputs into high-quality data that businesses can use to meet operational demands.

Unlocking the Power of Data Transformation

One of the main goals of data transformation is to make data usable for analysis and visualization, which is vital to a company’s data-driven decision-making. It is crucial for detailed analysis and modern marketing campaigns, both of which require robust tools for automation.

Ensuring data interoperability across many sources is crucial in big data. Data transformation fills this gap by harmonizing data for seamless integration—often through replication processes for businesses with on-premises data warehouses and specialized integration solutions.

The most significant advantages of data transformation are listed below:

- Improves Data Quality: Ensuring data is correctly formatted enhances data quality and shields programs from potential anomalies, including null values, duplication, imprecise indexing, and incompatible formats.

- Optimizes Performance: Converting data into more efficient formats can lead to faster processing times and improved efficiency.

- Enhances Data Utilization: By standardizing and improving data accessibility, data transformation technologies enable organizations to utilize the data’s potential for insights and decision-making fully.

- Improves Data Organization: Data can be transformed into an organized format, making it easier to work with during analytics.

- Accurate Insights: Data transformation helps organizations reach exact goals by creating enhanced data models and turning them into usable metrics, dashboards, and reports.

- Improves Data Consistency: By resolving metadata errors, data transformation makes data organization and comprehension easier while promoting consistency and clarity among datasets.

- Platform Compatibility: Data transformation facilitates integration and exchange by promoting compatibility across many platforms, applications, and systems.

Data Transformation: Challenges to Face

Although data transformation has excellent business potential, several issues must be carefully considered. You can run into these issues if you don’t have the right technologies in place to manage data transformation centrally.

- High Implementation Costs: Significant costs may be associated with implementing data transformation.

- Expertise Requirements: Effective data transformation requires expertise, typically from data scientists.

- Contextual Awareness: Errors can occur if analysts lack business context, leading to misinterpretation or incorrect decisions.

- Tool Selection: Choosing the appropriate tool requires considering the type of data being transformed as well as the particular needs of the project.

- Complexity of Process: The complexity of the transformation process rises with the volume and variety of data.

Organizations can enhance the effectiveness of their data transformation initiatives by following several best practices despite these challenges.

Data Transformation Best Practices

By investing in effective data transformation practices, companies can clean and analyze large datasets for actionable insights, improving decision-making and customer experiences.

- Identify Your Targets Clearly: Decide what you hope to accomplish through the data transformation before you begin. Whether your goal is to optimize data integration, improve data accessibility, or improve data accuracy, clearly stated objectives help you make progress.

- Profile Data: Assess the state and complexity of your data to gauge transformation requirements. This entails understanding the complexity, quality, and organization of the datasets you’re working with. By profiling the data, you can find any irregularities, discrepancies, or areas that need extra care during the transformation process.

- Cleanse Data: Ensure data quality by addressing formatting and integrity issues early. Effective transformation outcomes depend critically on high-quality data, so prioritize data cleansing to resolve missing values, formatting errors, or integrity problems. This may entail standardizing formats, eliminating duplicates, and validating data against predetermined rules to ensure correctness and reliability.

- Employ the Proper Tools: Make sure the tools suit the tasks. Choosing the correct tools is essential to successfully automating the data transformation process. Consider scalability, flexibility, ease of use, and interoperability with your data sources and targets. Larger-scale transformations might entail an ETL (extract, transform, load) process. For observability, use an observability pipeline to transform data centrally.

Conclusion

Understanding and implementing data transformation best practices are crucial for navigating today’s data-driven world. By guaranteeing data consistency, quality, and usability, companies can obtain a competitive advantage and gain insightful information. In the digital age, embracing efficient data transformation is essential to fostering innovation and long-term growth.

Data Transformation FAQs

How does data transform into information?

To be used in decision-making, data must go through a transformation process that consists of six fundamental steps: data collection, data organization, data processing, data integration, data reporting, and data utilization.

What is an example of a data transformation process?

The process of transforming data from one format into another is known as data transformation. Common examples include converting temperatures between degrees Celsius and degrees Fahrenheit, or converting currency amounts between pounds and dollars.
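
The temperature conversion is a one-line transformation in each direction. The function names below are chosen for this illustration.

```python
def celsius_to_fahrenheit(celsius):
    """Convert degrees Celsius to degrees Fahrenheit: F = C * 9/5 + 32."""
    return celsius * 9 / 5 + 32

def fahrenheit_to_celsius(fahrenheit):
    """Convert degrees Fahrenheit to degrees Celsius: C = (F - 32) * 5/9."""
    return (fahrenheit - 32) * 5 / 9

boiling_f = celsius_to_fahrenheit(100)
freezing_c = fahrenheit_to_celsius(32)
```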

What is the focus of data transformation?

The traditional data transformation methodology aims to enhance data quality and applicability for analysis or modeling by employing a systematic approach.