Those same principles extend to the realm of SRE and DevOps. Each member of these teams needs to work together and do their part to ensure that the next on-call is more manageable than the last. This means increasing observability, improving SLAs, minimizing false positives, and eliminating alert fatigue. Auto-remediation can be a crucial tool for all of that and more. Here is a high-level plan (the “napkin sketch”) that uses a simple three-step process to go from zero to an initial self-healing production service, which you can then evolve into your own custom “blueprint”.

At a high level, here are the three steps required to go from zero to auto-remediation:

- Achieve observability coverage that can reliably indicate a healthy (and unhealthy) production service.

- Identify repeatable remediation steps that consistently solve specific issues and do not require tribal knowledge or deep levels of human intelligence.

- Implement automated remediation that relies on multiple indicators of failure and does not overreach in disaster scenarios.

A healthy visibility stance into KPIs is not a destination but a constant, iterative process. With every new push and version of the product or service, new telemetry may need to be monitored and new KPIs added. Though that is a very important process within a healthy SRE team, there are also parts of production services where the KPIs are already well defined. Auto-remediation is not recommended for new services, where there aren’t yet enough data points to determine what a typical repeatable remediation action might be. For the sake of this topic, we will scope down to those well-defined areas of production.

#1 – Observability Coverage

There are many factors to focus on when improving your observability coverage, and it is important to select a monitoring platform that can support these requirements. If your organization already has an effective, mature monitoring platform, you can probably skip ahead to #2.

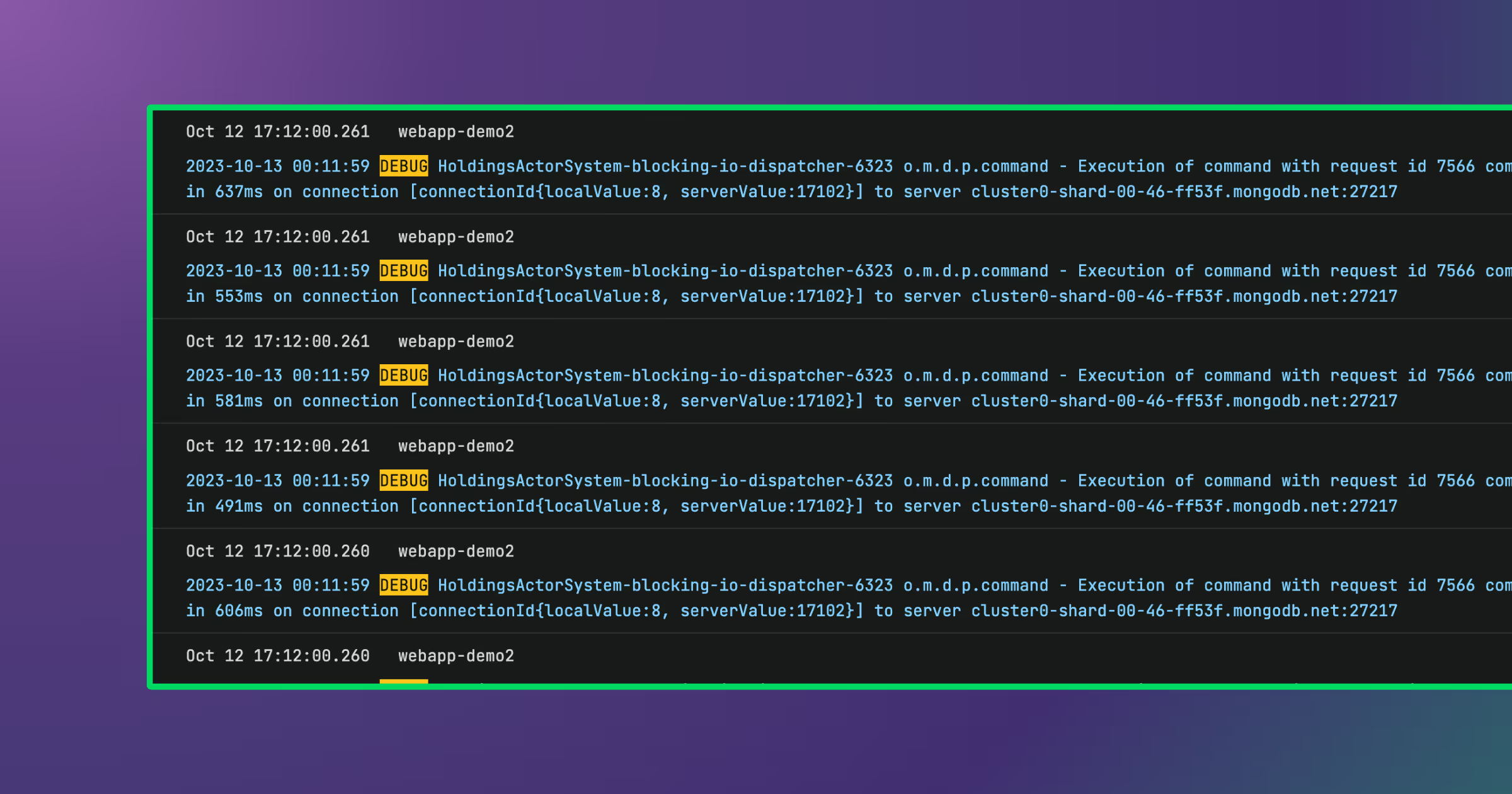

First, it is important to understand which data sets need to be collected so that the relevant indicators can be derived from them. These primarily take the form of metrics, logs, events, and traces that need to be analyzed and aggregated. The rule of thumb is that metrics (and traces) typically tell you “what” is going on, and logs (and events) tell you “why” it is happening. Gather input from teams to determine the most important data sets to cover, and add new data sets any time a service degradation or outage is not caught immediately.
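To make the “what” versus “why” split concrete, here is a minimal Python sketch, assuming request metrics arrive as simple status/latency records and logs as plain-text lines. The `Request` type, the 1% error-rate and 500 ms p99 thresholds, and the `ERROR` log convention are all hypothetical placeholders; a monitoring platform would normally derive these indicators for you.

```python
import math
from collections import Counter
from dataclasses import dataclass


@dataclass
class Request:
    latency_ms: float
    status: int  # HTTP status code


def error_rate(requests):
    """Metric ("what"): fraction of requests that returned a 5xx status."""
    if not requests:
        return 0.0
    return sum(1 for r in requests if r.status >= 500) / len(requests)


def p99_latency(requests):
    """Metric ("what"): 99th-percentile latency using the nearest-rank method."""
    latencies = sorted(r.latency_ms for r in requests)
    if not latencies:
        return 0.0
    rank = math.ceil(0.99 * len(latencies))
    return latencies[rank - 1]


def top_error_reasons(log_lines, n=3):
    """Logs ("why"): most frequent messages that follow an ERROR marker."""
    reasons = Counter(
        line.split("ERROR", 1)[1].strip() for line in log_lines if "ERROR" in line
    )
    return reasons.most_common(n)


def is_healthy(requests, max_error_rate=0.01, max_p99_ms=500.0):
    """Hypothetical health indicator combining two KPIs."""
    return error_rate(requests) <= max_error_rate and p99_latency(requests) <= max_p99_ms


# Usage: 98 fast successes and 2 slow failures in one evaluation window.
window = [Request(100, 200)] * 98 + [Request(900, 503)] * 2
print(error_rate(window), p99_latency(window), is_healthy(window))
print(top_error_reasons(["ERROR db timeout", "INFO ok", "ERROR db timeout", "ERROR cache miss"]))
```

The metrics flag the window as unhealthy; the log summary is what points the on-call toward a cause.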

The speed requirements for these data sets should also be determined. Organizations differ in this regard: some say “every millisecond counts” while others concede “getting an alert 15 minutes faster isn’t a priority for us” (yes, really). It comes down to business impact, and with countless possible business models out there, requirements vary widely. If speed is a priority, consider a stream processing approach, which lets you analyze data in real time. If not, batching can save quite a bit on costs and remove stress from large-scale production systems.
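The difference between the two approaches can be sketched in Python. This is a minimal illustration under simplified assumptions (events are `(timestamp_seconds, is_error)` tuples arriving in time order), not how any particular platform implements streaming: the streaming evaluator updates a sliding-window error rate on every event and can alert the moment a threshold is crossed, while the batch function only produces a result once the whole interval has been collected.

```python
from collections import deque


class SlidingWindowErrorRate:
    """Streaming: re-evaluate the error rate over the last `window_s` seconds on every event."""

    def __init__(self, window_s: float):
        if window_s <= 0:
            raise ValueError("window_s must be positive")
        self.window_s = window_s
        self.events = deque()  # (timestamp, is_error), oldest first
        self.errors = 0

    def add(self, ts: float, is_error: bool) -> float:
        self.events.append((ts, is_error))
        self.errors += int(is_error)
        # Evict events that have fallen out of the window (the newest event always stays).
        while self.events[0][0] <= ts - self.window_s:
            _, old_is_error = self.events.popleft()
            self.errors -= int(old_is_error)
        return self.errors / len(self.events)


def first_streaming_alert(events, window_s, threshold):
    """Return the timestamp at which the windowed error rate first exceeds `threshold`."""
    rate = SlidingWindowErrorRate(window_s)
    for ts, is_error in events:
        if rate.add(ts, is_error) > threshold:
            return ts
    return None


def batch_error_rate(events):
    """Batch: a single error rate computed after the whole interval has been collected."""
    if not events:
        return 0.0
    return sum(int(e) for _, e in events) / len(events)


# Usage: errors start at t=5 in a 10-second interval.
events = [(t, t >= 5) for t in range(10)]
print(first_streaming_alert(events, window_s=5, threshold=0.5))
print(batch_error_rate(events))
```

Here the streaming evaluator alerts at t=7, while the batch job reports a 50% error rate only after the interval closes at t=9. The trade-off is that streaming keeps per-event state and does work on every event, which is where the cost savings of batching come from.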

Next, look at the capabilities required for your use cases. If you are monitoring widely used technologies, many monitoring platforms already understand the formats and structures of popular third-party tools. This can save your team a lot of time that would otherwise go into building the required parsers, dashboards, and, finally, alerts. In addition, if you have complex requirements (anomaly detection, mathematical operators, machine learning, AIOps, etc.), make sure you’re moving in a direction that does not limit the long-term potential of your team.
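As an illustration of the kind of anomaly detection mentioned above, here is a deliberately simple rolling z-score detector in Python. It is an assumed stand-in, not the method any particular platform uses; the window size and the threshold of 3 standard deviations are arbitrary defaults.

```python
import statistics


def zscore_anomalies(values, window=5, threshold=3.0):
    """Return indices whose value deviates from the preceding `window` points
    by more than `threshold` population standard deviations."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mean = statistics.fmean(history)
        stdev = statistics.pstdev(history)
        if stdev == 0:
            # A perfectly flat history: any change at all is treated as anomalous.
            is_anomaly = values[i] != mean
        else:
            is_anomaly = abs(values[i] - mean) / stdev > threshold
        if is_anomaly:
            anomalies.append(i)
    return anomalies


# Usage: CPU utilization samples (percent) with one spike.
cpu = [40, 42, 41, 39, 40, 41, 95, 40]
print(zscore_anomalies(cpu))
```

Note that the spike at index 6 inflates the baseline for the next few points, which is one reason production-grade detectors tend to be more sophisticated than this.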

Once you’ve compiled requirements, try a few different solutions to see what the team prefers. No amount of marketing material and documentation can replace trying these firsthand, and though these platforms are extremely similar in some ways, they are drastically different in many others. Some will win on traits that can be objectively quantified, and some will win on subjective feelings. Both are important: the decision should come down to a combination of the value the solution provides, its cost, and the ROI it will achieve in the long term, with the aforementioned subjective feelings serving as the tie-breaker.
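One way to make that combination explicit is to rank candidates by estimated ROI and fall back on the team’s subjective preference only when ROI is effectively tied. The Python sketch below assumes you can put rough annual value and cost figures on each platform; the candidate names, figures, and rounding-based tie rule are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    annual_value: float   # estimated value delivered per year (hypothetical)
    annual_cost: float    # licensing plus operating cost per year (hypothetical)
    team_preference: int  # subjective score gathered during the POC, e.g. 1-5

    @property
    def roi(self) -> float:
        return (self.annual_value - self.annual_cost) / self.annual_cost


def rank_candidates(candidates, roi_precision=1):
    """Sort by ROI (rounded, so near-equal ROIs tie), then by team preference."""
    return sorted(
        candidates,
        key=lambda c: (round(c.roi, roi_precision), c.team_preference),
        reverse=True,
    )


candidates = [
    Candidate("Platform A", 300_000, 100_000, team_preference=3),
    Candidate("Platform B", 302_000, 100_000, team_preference=5),
    Candidate("Platform C", 200_000, 100_000, team_preference=4),
]
print([c.name for c in rank_candidates(candidates)])
```

Platforms A and B land in the same ROI bucket, so the team’s preference decides between them, while C ranks last despite a higher preference score than A.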

When trying these platforms, especially SaaS offerings, you should be able to get a free trial or a no-cost POC. If a lot of implementation work is required, you could also consider a short paid pilot, but that should be the rare exception. For the most part, vendors in the monitoring/observability space have a technical team that will walk you step by step through implementing and evaluating their platform. At a minimum, you should get one scoped-out use case set up end to end: data collection, indexing, parsing, dashboarding, alerting, and an outbound integration or two.

#2 – Repeatable Actions Identification

Fast-forward: you’ve chosen your platform. You should have set up a few alerts during the POC, but it is extremely important to have a strong set of alerts so that your team does not need “eyes on glass” at all times. If someone told me five years from now that there was a 12-book series just on infrastructure alerting, it would not surprise me in the least. This is a loaded subject, and it is important to get it right so that you don’t burn out your SRE and DevOps teams.

To oversimplify, the main rule I hear time and time again is that alerts should be actionable and should signal an immediate, critical need for a human response. As systems grow more complex, this is easier said than done, but ideally the context within the alert should already point the on-call engineer in the right direction for the remediation they need to perform. Alerts packed with rich logs, events, and other pertinent information can save your on-call engineer, and therefore your production systems, a lot of downtime. We will not dive deeper into alerting strategy here, since this is just the “napkin sketch”, but if you’ve set up your initial alerts and can iterate, adding (or removing) alerts based on learnings, you are set up well for the long term.
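A minimal Python sketch of an alert that carries its own context, assuming logs are plain-text lines tagged with `ERROR` or `WARN`. The `Alert` fields, the severity rule (critical at twice the threshold), and the limit of three log lines are illustrative assumptions rather than any platform’s schema.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Alert:
    name: str
    service: str
    severity: str
    summary: str
    context_logs: List[str] = field(default_factory=list)


def build_alert(name, service, metric, value, threshold, log_lines, max_logs=3):
    """Build an alert whose body already points the on-call toward a likely cause."""
    # Keep only the most recent error/warning lines as context.
    relevant = [line for line in log_lines if "ERROR" in line or "WARN" in line][-max_logs:]
    # Hypothetical severity rule: critical once the value reaches twice the threshold.
    severity = "critical" if value >= 2 * threshold else "warning"
    summary = f"{service}: {metric} is {value} (threshold {threshold})"
    return Alert(name, service, severity, summary, relevant)


logs = [
    "INFO request served",
    "WARN connection pool at 90%",
    "ERROR db timeout after 30s",
    "WARN retrying query",
    "ERROR db timeout after 30s",
]
alert = build_alert("checkout-5xx", "checkout", "5xx_rate_pct", 12, 5, logs)
print(alert.severity, alert.summary)
for line in alert.context_logs:
    print("  ", line)
```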

“Oh, go ask Daenerys, she’s the only one with the tribal knowledge to fix that service” is not a recipe for success. To solve this, you need playbooks/runbooks, which describe the actions the on-call might need to take and are usually specific to an alert or set of alerts. Playbooks and runbooks can save a lot of precious time during an outage and give the on-call the confidence to remediate without needing multiple additional opinions.
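Keeping runbooks as structured data, rather than only as free-form pages, makes it straightforward to look them up by alert and, later, to spot steps that could be automated. This Python sketch assumes a simple in-memory registry keyed by alert name; the alert name, the steps, and the `automatable` flag are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Step:
    instruction: str
    automatable: bool = False  # marks mundane steps as future automation candidates


@dataclass
class Runbook:
    alert: str
    steps: List[Step]

    def render(self) -> str:
        lines = [f"Runbook for {self.alert}:"]
        for i, step in enumerate(self.steps, start=1):
            marker = " [automatable]" if step.automatable else ""
            lines.append(f"{i}. {step.instruction}{marker}")
        return "\n".join(lines)


RUNBOOKS: Dict[str, Runbook] = {
    "consumer-lag-high": Runbook(
        alert="consumer-lag-high",
        steps=[
            Step("Check the consumer lag dashboard for the affected partition"),
            Step("Restart the consumer service", automatable=True),
            Step("Escalate to the owning team if lag keeps growing"),
        ],
    )
}


def runbook_for(alert_name: str) -> str:
    runbook = RUNBOOKS.get(alert_name)
    return runbook.render() if runbook else f"No runbook for {alert_name}; escalate."


print(runbook_for("consumer-lag-high"))
```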

Over time, you will identify areas where your playbook rarely changes or needs updates. This is very common with third-party tools, where you may not have access to the source code, so even the long-term strategy may involve some sort of “turn it off and back on again” approach. Your team will notice that some of these remediation actions are mundane and repetitive. When hunting for good candidates for automated remediation, unchanging, mundane, and repetitive are fantastic traits.

You will also begin to notice trends in certain production issues, specifically in how long it takes a human to respond. If slow manual response times are hurting your SLAs, those issues also make good auto-remediation candidates.
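Both signals can be pulled from incident history. The Python sketch below assumes each resolved incident is recorded with the alert that fired, the remediation action taken, and the minutes until a human responded; the thresholds (at least 5 occurrences, the same action at least 90% of the time, a median response slower than 15 minutes) are hypothetical and would need tuning per team.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from statistics import median


@dataclass
class Incident:
    alert: str
    action: str              # remediation the on-call actually performed
    response_minutes: float  # time until a human started remediating


def remediation_candidates(incidents, min_occurrences=5, min_consistency=0.9,
                           slow_response_minutes=15.0):
    """Map alert -> (dominant action, consistency, slow_response) for repetitive fixes."""
    by_alert = defaultdict(list)
    for inc in incidents:
        by_alert[inc.alert].append(inc)

    candidates = {}
    for alert, incs in by_alert.items():
        if len(incs) < min_occurrences:
            continue  # not enough data points yet
        action, count = Counter(i.action for i in incs).most_common(1)[0]
        consistency = count / len(incs)
        if consistency < min_consistency:
            continue  # the fix varies, so it likely needs human judgment
        slow = median(i.response_minutes for i in incs) > slow_response_minutes
        candidates[alert] = (action, consistency, slow)
    return candidates


history = (
    [Incident("disk-full", "rotate logs", 20)] * 6
    + [Incident("api-latency", "scale up", 5)] * 3
    + [Incident("api-latency", "rollback", 5)] * 3
    + [Incident("cert-expiry", "renew cert", 60)] * 2
)
print(remediation_candidates(history))
```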

Automation as a concept is another loaded topic, but it is extremely important for many reasons. Beyond the obvious, quantifiable efficiency improvements, reducing the number of repetitive tasks your team needs to do reduces frustration and burnout and increases fulfillment among team members. The concern about “automating oneself out of a job” sometimes comes up, but the reality is nearly always that you’re able to automate the mundane tasks, letting the team focus on the more meaningful and exciting ones.

When you have identified good candidates for automated remediation, you can proceed to implementation.

#3 – Implement Automated Remediation

There is now a lot of writing on this napkin, and we’ve only just gotten to the most loaded topic: implementing the so-called “self-healing” automation. There are many valid approaches here, but it is important to balance upside with risk. In software development, the bar for quality can always be raised. On one end of the spectrum you have “move fast and break things,” which allows tremendous progress but exposes the team to additional risk. In a modern production environment, where much of your revenue and many of your users most likely depend on real-time digital services, this is generally a bad idea. On the other end of the spectrum is a more measured approach.

Since we’ve already done the work to identify areas where the mitigation steps are concrete, we should aim to implement auto-remediation using the more measured approach. Here is an approach that has been successful:

- Write your initial (first-order) alerts to an index/partition. This enables correlation and a higher-level understanding of the current state, which in turn allows for better intelligence/logic around remediation (second-order). It is also the most effective way to reduce false positives, which matters so that automation is not kicked off prematurely.

- Enrich your alerts with additional information. Priorities, tags, and notes can all easily be added as additional columns within the index, allowing second-order logic to be applied to it.

Your auto-remediation should account for the possibility that at any moment three dragons could split up and strategically fly through the three datacenter availability zones where your production happens to be running (very unlucky indeed), that a human is concurrently interacting with the systems in an unrelated way, or that an on-call is trying to remediate manually in parallel. Thinking through scenarios and testing diligently will reduce the risk of cascading failures. Suffering a catastrophic outage because your automation ran faster and wider than your team could possibly keep up with while it obliterated your infrastructure will considerably set back your team’s view of auto-remediation (and your long-term prospects with that team).

- Create your second-order alerts. This means that the logic that triggers remediation does not rely on a single indicator. For example, is there currently an anomaly in CPU usage at the node or service level? Write it to your alert index. Is there a lack of data from another microservice? Write it to your alert index. Now you can run simple logic in that index that looks for the combination of those two (or more) correlated events in order to trigger auto-remediation.

- Layer your auto-remediation. Your initial triggers/scripts should have lower thresholds (more sensitive) and perform less harsh actions. If you see sustained degradation or worsening of services, more invasive actions can then be triggered. For example, the first trigger may restart a service, run a query, or execute a script. This can be powerful because in many setups the relevant data, or the state of the node when the issue occurred, is otherwise no longer accessible by the time anyone looks into it. If the issue then worsens, the trigger may restart the node entirely (or spin up a new one).

- If all layers of auto-remediation fail to improve production, the on-call should be paged at that point, and if they are aware of the layers of auto-remediation (as they should be), they can rightly conclude that the issue is potentially serious.
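The approach above (an enriched alert index, second-order triggers, and layered actions that end in a page) can be sketched together in Python. Everything here is an illustrative assumption: the in-memory `AlertIndex` stands in for whatever index/partition your platform provides, the alert and action names are hypothetical, and the hourly action cap and manual-remediation flag are one simple way to keep automation from running faster and wider than the team can follow.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class AlertRecord:
    """A first-order alert as written to the index, with enrichment columns."""
    name: str
    service: str
    ts: float
    priority: int = 3                            # enrichment column
    tags: Set[str] = field(default_factory=set)  # enrichment column


class AlertIndex:
    """In-memory stand-in for an alert index/partition."""

    def __init__(self):
        self.records: List[AlertRecord] = []

    def write(self, record: AlertRecord) -> None:
        self.records.append(record)

    def recent(self, service: str, now: float, window_s: float) -> List[AlertRecord]:
        return [r for r in self.records
                if r.service == service and 0 <= now - r.ts <= window_s]


def second_order_triggered(index, service, required, now, window_s=300.0):
    """Fire only when every required first-order alert is present for the service."""
    seen = {r.name for r in index.recent(service, now, window_s)}
    return set(required) <= seen


class LayeredRemediator:
    """Escalates from gentle to invasive actions, then pages a human."""

    def __init__(self, layers, max_actions_per_hour=3):
        self.layers = list(layers)  # mildest action first
        self.level = 0
        self.max_actions_per_hour = max_actions_per_hour
        self.action_times: List[float] = []
        self.manual_remediation_in_progress = False

    def step(self, now: float, degraded: bool) -> Optional[str]:
        if not degraded:
            self.level = 0  # recovered: reset the escalation
            return None
        if self.manual_remediation_in_progress:
            return None  # do not act while an on-call is remediating by hand
        recent = [t for t in self.action_times if now - t < 3600]
        if self.level >= len(self.layers) or len(recent) >= self.max_actions_per_hour:
            return "page_oncall"  # layers exhausted or safety cap reached
        action = self.layers[self.level]
        self.level += 1
        self.action_times.append(now)
        return action


# Usage: two correlated first-order alerts trigger the first remediation layer.
index = AlertIndex()
index.write(AlertRecord("cpu_anomaly", "checkout", ts=100.0, priority=2))
index.write(AlertRecord("missing_data", "checkout", ts=150.0, tags={"downstream"}))
remediator = LayeredRemediator(["restart_service", "replace_node"])
if second_order_triggered(index, "checkout", {"cpu_anomaly", "missing_data"}, now=200.0):
    print(remediator.step(now=200.0, degraded=True))
```

Resetting the escalation level only when the service recovers, and paging once either the layers or the hourly cap run out, means a human is brought in only after automation has tried and failed or has hit its safety limit.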

How do you know if it worked?

Fix-First is the goal here, but it’s not a substitute for post-mortem investigations. Still, an on-call will be much happier to look into why something was triggered at their desk after a breakfast burrito and coffee than while squinting at a screen looking for the “lower brightness” button at 3am. In the long term, you should also look into auto-remediation that is triggered too often. Though this can be difficult, especially with third-party technologies, ideally you’d like to prevent the underlying issue from happening at all. As a rule of thumb, if a fixed auto-remediation action gets triggered and resolves the issue a significant number of times, it might be time to fix the issue at the source rather than relying on auto-remediation.
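Spotting remediation that fires too often can itself be automated. This Python sketch assumes each auto-remediation run is logged as `(day, action, resolved)`; the 30-day window and the limit of 10 successful runs are hypothetical thresholds.

```python
from collections import Counter


def flag_for_source_fix(runs, today, window_days=30, max_resolved=10):
    """Return actions that resolved the issue more than `max_resolved` times in the window.

    Frequent success suggests a recurring root cause worth fixing at the source.
    """
    counts = Counter(
        action
        for day, action, resolved in runs
        if resolved and 0 <= today - day < window_days
    )
    return sorted(action for action, n in counts.items() if n > max_resolved)


runs = [(day, "restart_consumer", True) for day in range(80, 92)]  # 12 recent fixes
runs += [(day, "clear_cache", True) for day in (85, 88, 90)]       # 3 recent fixes
runs += [(day, "restart_consumer", True) for day in range(0, 20)]  # old, outside window
print(flag_for_source_fix(runs, today=95))
```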

Still, a lot of engineers swear by auto-remediation, and for very good reason. Although auto-remediation has a lot of prerequisites before a specific use case can be identified, the rewards are typically substantial. Improving SLAs, minimizing false positives, and eliminating alert fatigue can have positive business impacts ranging from increased revenue to better employee retention. It is very common to take a task that a human needed to perform multiple times a week and turn it into one they need to investigate once a year. This can be a massive long-term value add for nearly any organization.

Since this is a napkin sketch, we have had to oversimplify throughout, but let’s end with a final rule of thumb: if your auto-remediation is solving more problems than it’s creating, it’s a net positive and a success. How you determine that will differ for each team and is ultimately up to you.