Large Language Models (LLMs) are rapidly gaining traction with the rise of generative AI. These models reduce complicated tasks to straightforward prompt-response pairs. With a CAGR of 33.2%, the LLM market is projected to grow by USD 36.1 billion by 2030. This growth is driven mainly by the increasing demand for natural language processing (NLP).

As LLMs are deployed more widely, the issues they encounter multiply, which makes LLM observability crucial. Observability means gaining visibility into how an LLM performs so that teams can diagnose and resolve the issues it encounters. It also helps improve performance and unlock the model’s full potential. Knowing how these models behave is essential for managing and applying them effectively.

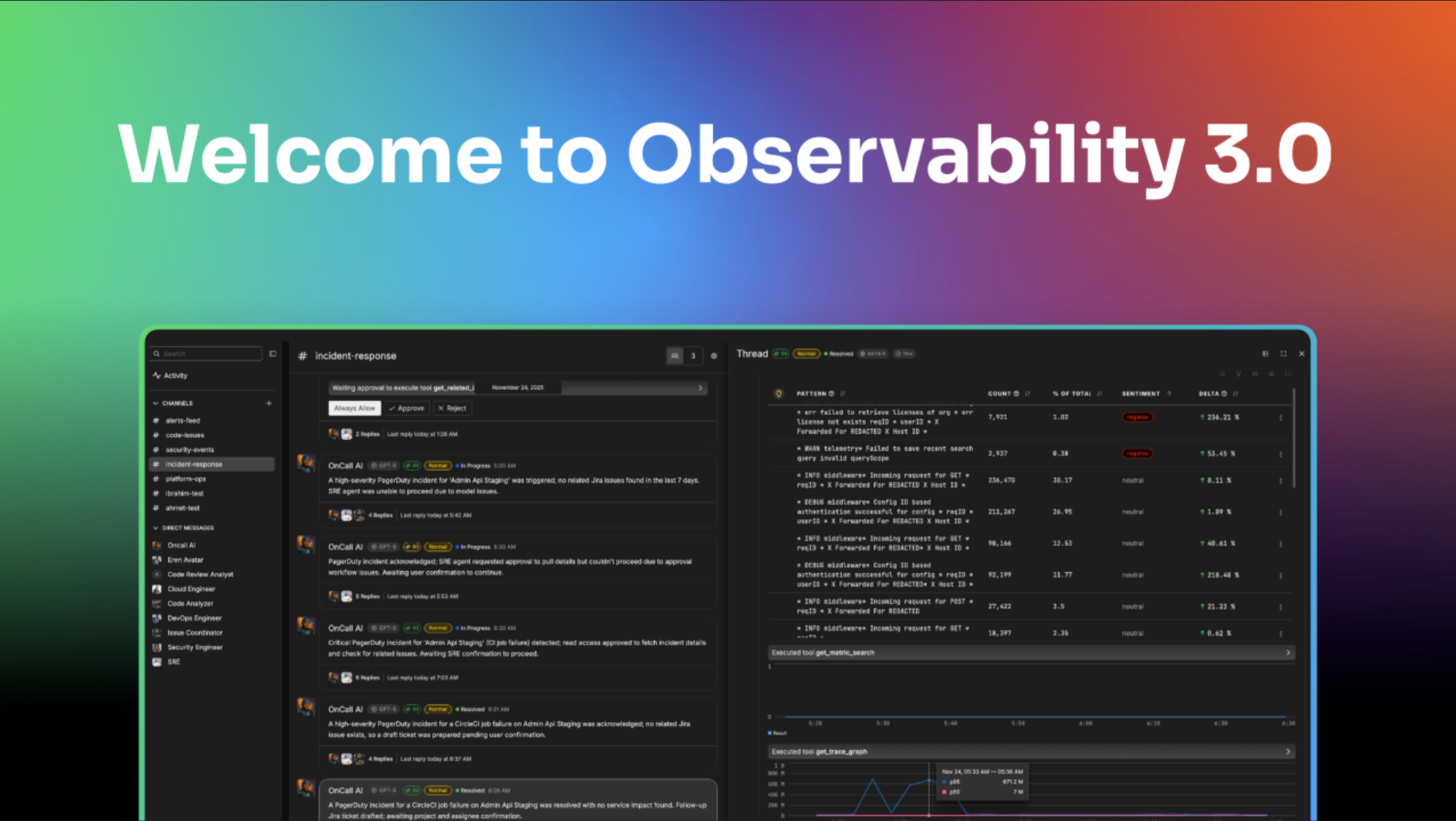

This article discusses how to approach LLM observability. It covers the issues observability addresses, why it matters, the tools available, and best practices.

LLMs in a Nutshell

Large Language Models (LLMs) are sophisticated machine learning models trained on extensive datasets to mimic human-like text generation. They possess diverse capabilities, from text completion and summarization to translation tasks.

Understanding LLM Observability And The Issues It Solves

LLM observability is the practice of tracking and understanding how complex AI models behave, so that organizations can verify that the models produce correct answers or predictions. It includes tools, techniques, and strategies that help teams do the following:

- Manage and understand LLM apps and their performance

- Discover biases or drifts in the outputs they produce

- Fix issues before they affect the operations or the end-users

LLM observability helps organizations thoroughly examine how these models operate. With this process, app developers get insights that help them make decisions. It also shows ways to fix issues and opportunities to boost the LLMs’ performance.

LLM monitoring and observability include tracking LLM accuracy, errors, unfair behavior, and potential biases. This tracking capability helps concerned teams address common LLM application issues like:

- Hallucinations: Output that sounds plausible but is false or fabricated

- LLM Cost and Performance: Slow third-party APIs, inconsistencies, and high costs due to high data volumes

- Prompt Hacking: User manipulation of LLM apps to produce specific content

- Privacy and Security: Data leaks, and biased outputs caused by flaws in training data

- User Prompt and Response Inaccuracy: Inaccurate answers due to varying user prompts

Through vigilant monitoring and thorough assessment, organizations can help ensure that their LLMs operate efficiently and produce dependable outcomes.

The following section shows how to deal with LLM observability using ten tried and tested solutions.

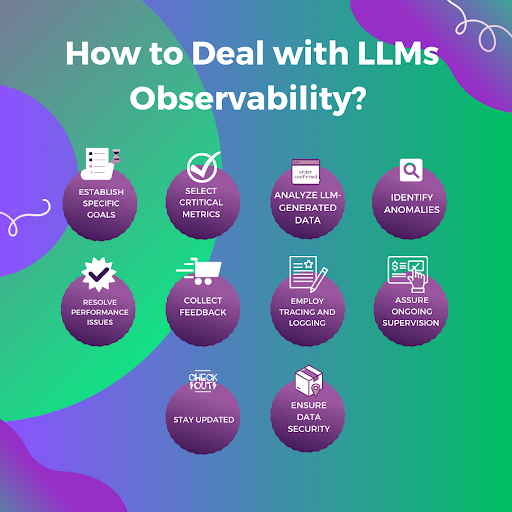

10 Best Practices to Achieve LLM Observability

Achieving observability for large language models means putting techniques and tools in place to track, evaluate, and improve these models’ output. The following practices can help:

1. Establish specific goals that you want your LLMs to achieve.

Understanding the specific objectives of your LLMs is necessary to optimize their performance. These goals help you identify the Key Performance Indicators (KPIs) relevant to your LLM, such as the quality, fluency, and range of the text it produces.

2. Find the best metrics to track your LLM’s efficiency.

Once you have a goal, find suitable metrics that can help you track your LLM’s efficiency. These metrics include accuracy, precision, recall, and fairness. With these metrics, you can evaluate how well your LLM works and detect problems it may have.
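As a rough illustration of how such metrics can be computed, the sketch below scores a batch of LLM outputs that have already been judged against labeled examples. It assumes each output has been reduced to a yes/no decision (for instance, "did the model flag this ticket as urgent?"); the function name `score` and the sample data are hypothetical, and real evaluations often need task-specific metrics on top of these.

```python
from dataclasses import dataclass


@dataclass
class Counts:
    tp: int = 0  # predicted positive, labeled positive
    fp: int = 0  # predicted positive, labeled negative
    fn: int = 0  # predicted negative, labeled positive
    tn: int = 0  # predicted negative, labeled negative


def score(predictions: list[bool], labels: list[bool]) -> dict[str, float]:
    """Compute accuracy, precision, and recall for paired boolean lists."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    c = Counts()
    for pred, label in zip(predictions, labels):
        if pred and label:
            c.tp += 1
        elif pred and not label:
            c.fp += 1
        elif not pred and label:
            c.fn += 1
        else:
            c.tn += 1
    total = len(labels)
    return {
        "accuracy": (c.tp + c.tn) / total if total else 0.0,
        "precision": c.tp / (c.tp + c.fp) if (c.tp + c.fp) else 0.0,
        "recall": c.tp / (c.tp + c.fn) if (c.tp + c.fn) else 0.0,
    }


# Hypothetical evaluation batch: model decisions vs. human labels.
predictions = [True, True, False, True, False]
labels = [True, False, False, True, True]
metrics = score(predictions, labels)
print(metrics)
```

Tracking these numbers per release or per prompt version makes regressions visible early; fairness usually requires computing the same metrics separately for each user group and comparing them.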

3. Analyze LLM-generated data for troubleshooting and optimization.

Collect and examine the data generated by your LLM to find inefficiencies or areas that need improvement. Analyzing this data reveals patterns, trends, and anomalies. The results can help you troubleshoot and optimize the system proactively.

4. Identify anomalies using detection tools and systems.

Set up anomaly detection systems to spot odd behavior or departures from the norm. These anomalies may indicate several problems like the following:

- Model Drift: A progressive decline in the model’s performance

- Data Drift: A change in the data’s properties over time

- Performance Loss: A result of outside influences
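One simple way to spot departures from the norm is a rolling z-score over a metric you already collect, such as response latency or an evaluation score. The sketch below is a minimal example under that assumption; the function name `find_anomalies`, the window size, and the threshold are illustrative choices, not a recommendation from any particular tool.

```python
from statistics import mean, stdev


def find_anomalies(values: list[float], window: int = 5, threshold: float = 3.0) -> list[int]:
    """Return indexes whose value deviates from the preceding `window`
    values by more than `threshold` standard deviations."""
    anomalies = []
    for i in range(window, len(values)):
        baseline = values[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # Flat baseline: treat any change at all as a deviation.
            if values[i] != mu:
                anomalies.append(i)
            continue
        if abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical per-request latencies in seconds; index 6 is a spike.
latencies = [1.0, 1.1, 0.9, 1.0, 1.2, 1.1, 6.0, 1.0]
print(find_anomalies(latencies))
```

A rolling baseline like this catches sudden spikes; a slow decline such as model drift is easier to see by comparing the current window against a fixed reference period instead.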

Note

Consider using third-party tools like Edge Delta for anomaly detection. With this tool, you can easily spot anomalies using AI/ML and enjoy quick troubleshooting with AI recommendations. It also correlates data to alert, so you’ll know if there’s something in your LLM that’s worth looking into.

5. Resolve performance issues by immediately fixing the detected errors.

Carefully review the data you’ve collected to find any errors or irregularities. Since these errors cause performance issues, you must immediately take corrective action. In most cases, these problems are due to factors like:

- Hardware problems

- Data biases

- Incorrect settings

Resolving these issues can boost performance and keep your LLM from encountering more problems.

6. Collect feedback to gain better insights on what to improve.

User feedback is crucial for improving your LLM and achieving observability, so request input from users, stakeholders, and subject matter experts. This feedback can help you assess the quality and relevance of the LLM’s output. Use it to improve and fine-tune the model.
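If feedback is collected as simple thumbs-up or thumbs-down ratings, it can be summarized per feature to show where the model needs the most attention. The following is a minimal sketch under that assumption; the `approval_rates` function, the tag names, and the sample ratings are hypothetical.

```python
from collections import defaultdict


def approval_rates(feedback: list[tuple[str, bool]]) -> dict[str, float]:
    """Map each tag to the share of thumbs-up ratings it received."""
    ups: dict[str, int] = defaultdict(int)
    totals: dict[str, int] = defaultdict(int)
    for tag, thumbs_up in feedback:
        totals[tag] += 1
        if thumbs_up:
            ups[tag] += 1
    return {tag: ups[tag] / totals[tag] for tag in totals}


# Hypothetical ratings: (feature the user was using, did they approve?).
feedback = [
    ("summarize", True),
    ("summarize", False),
    ("translate", True),
    ("summarize", True),
]
rates = approval_rates(feedback)
print(rates)
```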

7. Employ tracing and logging to obtain comprehensive LLM data.

Logs generated by your LLM contain data that can help you detect anomalies. Thus, by leveraging traces and logs, you can get comprehensive data on the following:

- Model Inference Requests

- Processing Durations

- Dependencies

With this data, you can debug and troubleshoot your LLMs more effectively. However, managing it can be challenging, since LLM applications generate high volumes of logs.
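To make the idea concrete, the sketch below wraps a model call and emits one structured JSON log record per inference request, including its processing duration. It uses only the Python standard library; `traced_call`, the field names, and the stand-in `fake_model` are assumptions for illustration, and a production setup would more likely rely on a dedicated tracing library.

```python
import json
import logging
import time
import uuid
from typing import Callable

logger = logging.getLogger("llm.trace")


def traced_call(model_fn: Callable[[str], str], prompt: str, model_name: str) -> tuple[str, dict]:
    """Call `model_fn`, then log one JSON record describing the request."""
    record = {
        "request_id": str(uuid.uuid4()),
        "model": model_name,
        "prompt_chars": len(prompt),  # log sizes, not raw text, to limit exposure
    }
    start = time.perf_counter()
    try:
        response = model_fn(prompt)
        record["status"] = "ok"
        record["response_chars"] = len(response)
        return response, record
    except Exception as exc:
        record["status"] = "error"
        record["error"] = type(exc).__name__
        raise
    finally:
        # Runs on success and failure, so every request gets a duration.
        record["duration_ms"] = round((time.perf_counter() - start) * 1000, 2)
        logger.info(json.dumps(record))


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM client call."""
    return prompt.upper()


response, record = traced_call(fake_model, "hello", "demo-model")
```

Emitting one JSON object per request keeps the logs machine-readable, which makes it easier to filter and aggregate them later despite the volume.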

✅ Pro Tip

Using third-party observability platforms like Edge Delta can improve your log analysis. Its AI and ML capabilities can sort data quickly, detect issues immediately, and optimize your LLM resource allocation.

8. Always monitor and improve your LLM’s performance.

To sustain optimal performance, continuously monitor and improve your LLMs. Review your monitoring plan regularly to maintain your LLM’s reliability and validity. Act quickly when problems appear, and adjust the plan as your LLM’s needs change.

9. Stay updated with the latest developments on LLMs.

Stay informed about new observability tools, approaches, and LLM research developments. It’s a good practice to connect with AI communities. These groups can give helpful insights into developing LLM trends and observability practices.

10. Protect data security and privacy to ensure compliance.

Put safeguards in place to secure sensitive data and to ensure you follow privacy laws. This practice involves protecting your LLM inputs, outputs, and model internals. Use security features like encryption, access controls, and secure data handling procedures.
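One example of a secure data handling step is redacting obvious personal data from prompts and responses before they are logged or stored. The sketch below assumes regex-based masking of email addresses and phone-like numbers; the patterns and the `redact` helper are illustrative, deliberately loose, and not a substitute for a proper data-loss-prevention tool.

```python
import re

# Simplified email pattern; it does not cover every valid address.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Loose pattern for phone-like digit runs; it will miss some formats
# and may match long numbers that are not phone numbers.
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


prompt = "Contact jane.doe@example.com or +1 (555) 123-4567 today"
print(redact(prompt))
```

Running redaction before logging means that downstream systems such as dashboards, log stores, and alerting never see the raw values.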

Did You Know?

LLMs, trained through self-supervised or semi-supervised methods, predict the next word based on the text they are given. They can produce many kinds of writing, including songs, stories, poetry, code, and essays.

By following these recommended practices, you can effectively manage and optimize your LLMs. These practices help ensure LLMs’ dependability, correctness, and better performance in various applications.

If you want to achieve LLM Observability, open-source tools are available. These tools can help you monitor and observe your LLM apps. Continue reading to learn more.

Open-Source LLM Observability Tools: Best Options to Consider

Plenty of open-source tools can help you maximize your LLM monitoring and observability. Here are some of them:

LangKit

LangKit is an open-source toolkit explicitly designed for LLM monitoring. With this tool, you can analyze your LLM’s sentiment and toxicity. It also helps in detecting hallucination errors and in evaluating text quality.

Prometheus

Prometheus is an open-source monitoring system and time-series database. It is not specific to LLMs, but it can collect and query metrics from LLM applications, such as request counts, latency, and error rates, in near real time.

AllenNLP Interpret

AllenNLP Interpret is a toolkit for interpreting and visualizing the predictions of NLP models. With this tool, you can generate model explanations and debug across models for better interpretation.

Grafana

Grafana is an open-source visualization and dashboarding platform that works on top of data sources like Prometheus and Elasticsearch. With this tool, you can build dashboards and alerts for evaluating and displaying LLM metrics and logs.

AI Fairness 360

AI Fairness 360 is an open-source toolkit for examining machine-learning models, which can include LLMs, for bias. With this tool, you can detect, report, and reduce discrimination and bias across the stages of model development.

Conclusion

Observability is essential for Large Language Models (LLMs) to function at their best and be productive in various applications. When an organization correctly implements the observability practices above, it can better understand how its LLMs behave and mitigate problems as they arise.

By applying best practices and having a firm grasp of LLM observability, you’ll be able to confidently and effectively leverage the potential of modern AI systems. To optimize the impact of Large Language Models (LLMs) in your projects, remain watchful, flexible, and dedicated to ongoing improvement.

With more operations relying on LLMs, achieving observability becomes crucial. This process ensures your LLMs operate smoothly and avoid any issues. Besides implementing the best practices, leveraging observability platforms like Edge Delta can improve your LLM performance. This tool can automate your observability process using AI, reducing costs while ensuring your LLM works well.

LLM Observability FAQs

What are the top 3 Large Language Models?

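Frequently cited LLMs include GPT-4 and Llama 2. ChatGPT is often listed alongside them, although it is a chatbot application built on OpenAI’s GPT models rather than a model itself.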

Does ChatGPT use Large Language Models?

Yes. ChatGPT is a chatbot service powered by OpenAI’s GPT models. GPT stands for Generative Pre-trained Transformer, which is a family of Large Language Models (LLMs).

Which LLM is the most advanced today?

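There is no single settled answer, because rankings change with each new release and depend on the task being measured. One example is Claude v1, which offers a more sophisticated design than earlier LLMs. This design allows it to handle data quickly and produce more accurate predictions.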