With the increasing complexity of system infrastructure, observability has become a fundamental element of modern software development. 90% of software leaders and engineers consider observability paramount to business strategies.

Since it emerged in the 1960s, observability has become increasingly important in IT. The concept has evolved and branched out into various segments. However, two terms have long been a source of confusion: observability data and data observability.

Want Faster, Safer, More Actionable Root Cause Analysis?

With Edge Delta’s out-of-the-box AI agent for SRE, teams get clearer context on the alerts that matter and more confidence in the overall health and performance of their apps.

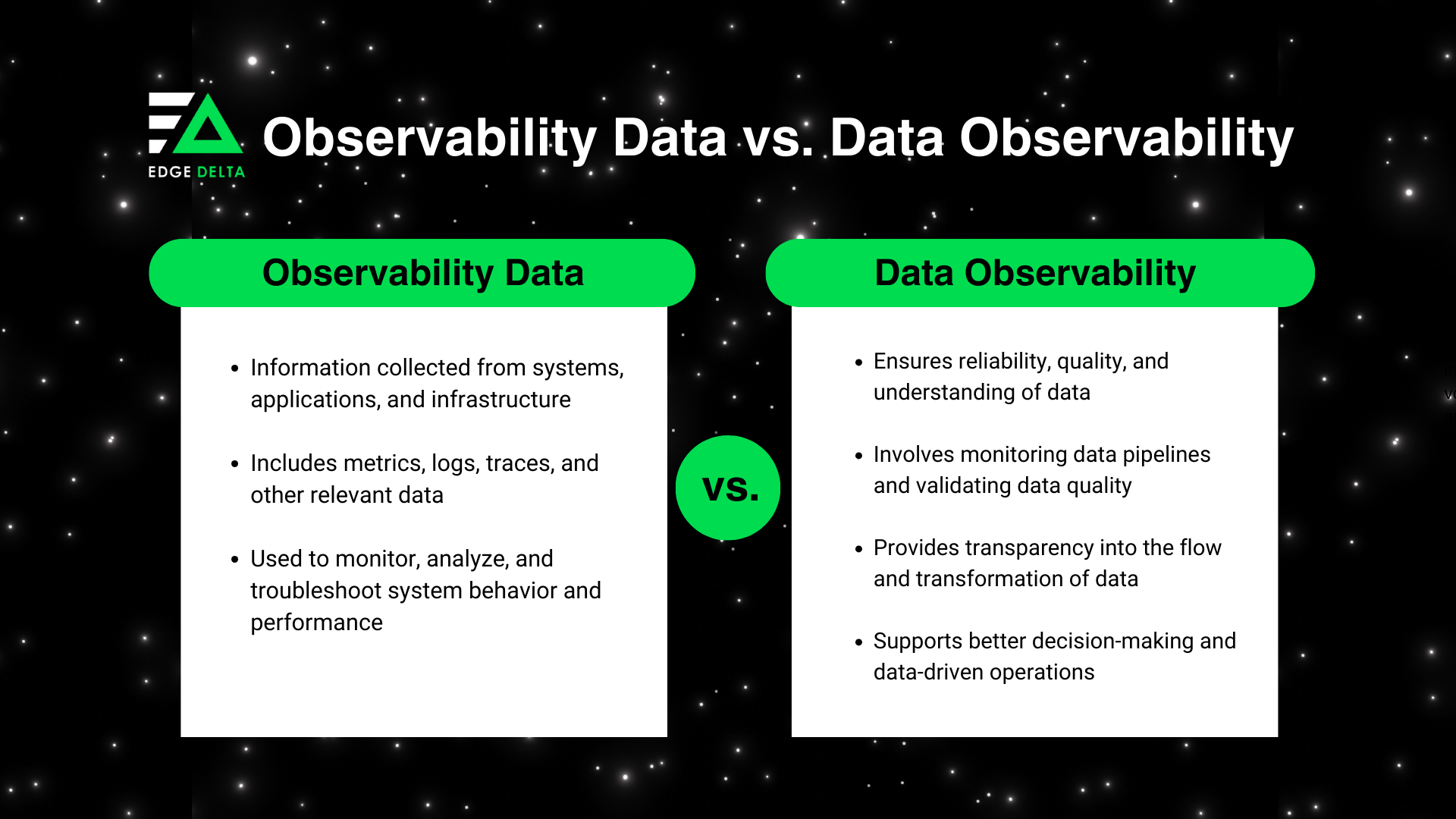

Learn MoreObservability data involves information and insights about a system’s health and behavior. Meanwhile, data observability refers to comprehensive visibility into a system’s entire data lifecycle.

Explore the differences between data observability and observability data to see which concept works for you. Let’s dive in.

Key Takeaways

- Observability data focuses on system health and behavior, while data observability ensures the reliability of data-driven processes.

- DevOps teams are the target users of observability data, while data observability serves ETL programmers, data warehouse admins, and data engineers.

- Observability data is used for monitoring system performance and health, and data observability maintains high data quality and reliability standards.

- Observability data is predicted to incorporate ML and AI, strengthen cybersecurity measures, and shift toward real-time analytics.

- Data observability is expected to place more emphasis on data quality monitoring, comprehensive data lineage mapping, automated anomaly detection, and scalability.

Key Differences between Observability Data and Data Observability

Observability data involves a system’s logs, metrics, traces, and other telemetry data. It gathers information about the system’s performance, health, and behavior.
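To make the three signal types concrete, here is a minimal sketch in Python. It is not tied to any particular observability tool: the `handle_request` function, the in-memory `telemetry` store, and the metric name are all hypothetical and exist only to show how a log, a metric, and a trace span might be recorded for the same request.

```python
import time
import uuid


def new_telemetry():
    """Create an in-memory store for the three signal types (illustrative only)."""
    return {"logs": [], "metrics": {}, "traces": []}


def handle_request(path, telemetry):
    """Serve a hypothetical request and record one log, one metric, and one span."""
    trace_id = uuid.uuid4().hex  # ties the log line and the span together
    start = time.perf_counter()

    # Stand-in for real work: only "/missing" fails in this sketch.
    status = 404 if path == "/missing" else 200

    duration_ms = (time.perf_counter() - start) * 1000.0

    # Log: a discrete, timestamped record of what happened.
    telemetry["logs"].append({
        "timestamp": time.time(),
        "level": "INFO" if status == 200 else "WARN",
        "message": f"GET {path} -> {status}",
        "trace_id": trace_id,
    })

    # Metric: a numeric aggregate, here a request counter per status code.
    key = f"requests_total{{status={status}}}"
    telemetry["metrics"][key] = telemetry["metrics"].get(key, 0) + 1

    # Trace: a timed span describing one unit of work.
    telemetry["traces"].append({
        "trace_id": trace_id,
        "span": "handle_request",
        "duration_ms": duration_ms,
    })
    return status
```

In a real system these records would be shipped to a telemetry backend rather than kept in a dictionary. The shared `trace_id` is what lets an engineer jump from a warning log to the span that produced it.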

Data observability pertains to monitoring the data flow in a system to guarantee its trustworthiness, consistency, and availability. This approach emphasizes visibility into the entire data pipeline.

Observability data is used by DevOps teams that are responsible for system security. It helps them spot threats and understand the system. Data observability serves ETL programmers, data warehouse administrators, and data engineers.

In a nutshell, observability data examines a system’s health and behavior, while data observability scrutinizes data quality and reliability. Both concepts are essential for optimizing complex systems, especially data-driven ones.

Check out more distinctions between observability data and data observability in the following sections.

Goals and Core Objectives

The objectives of observability data revolve around understanding and optimizing complex systems. Observability data allows organizations to identify, diagnose, and resolve issues for better system reliability and user experience.

Here are several objectives of observability data:

- Enhanced Visibility into System Health: Provide a deep understanding of system behavior and interactions

- Proactive Issue Identification: Enable the early detection of potential issues or anomalies

- Optimization and Performance Improvement: Support the identification of bottlenecks and areas for improvement

- User Experience Enhancement: Provide data on the product experience to help boost overall user satisfaction

- Operational Efficiency: Streamline operational processes and reduce resolution time

Data observability is centered around maintaining a high standard of data quality. It ensures that organizations can trust and derive value from their data. It aims to establish robust data monitoring, validation, and governance practices.

Below is a list of data observability goals:

- Data Quality Assurance: Ensure that the data is accurate, complete, and consistent

- Trustworthiness and Reliability: Build trust in the data to enable confident decision-making

- Timely Detection of Data Issues: Allow prompt detection and resolution of data issues

- Data Governance and Compliance: Ensure that data governance policies and regulatory requirements are followed

- Collaboration and Accessibility: Foster collaboration among team members and facilitate effortless data access
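The quality-related goals above (accuracy, completeness, consistency, and timely detection) can be sketched as a small batch check. This is only an illustration under stated assumptions: the `check_batch` function, the `amount` and `updated_at` fields, and the rule that amounts must be non-negative are hypothetical and not taken from any specific data observability product.

```python
from datetime import datetime, timedelta, timezone


def check_batch(rows, required_fields, max_age, now):
    """Return a list of human-readable issues found in a batch of records."""
    issues = []
    for i, row in enumerate(rows):
        # Completeness: every required field must be present and non-null.
        missing = [f for f in required_fields if row.get(f) is None]
        if missing:
            issues.append(f"row {i}: missing {', '.join(missing)}")

        # Consistency (assumed business rule): amounts cannot be negative.
        amount = row.get("amount")
        if amount is not None and amount < 0:
            issues.append(f"row {i}: negative amount {amount}")

    # Freshness: the newest record must be recent enough.
    timestamps = [r["updated_at"] for r in rows if r.get("updated_at") is not None]
    if not timestamps or now - max(timestamps) > max_age:
        issues.append("batch is stale")
    return issues


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
rows = [
    {"order_id": 1, "amount": 10.0, "updated_at": now - timedelta(minutes=5)},
    {"order_id": 2, "amount": None, "updated_at": now - timedelta(minutes=3)},
    {"order_id": 3, "amount": -4.0, "updated_at": now - timedelta(minutes=1)},
]
issues = check_batch(rows, ["order_id", "amount", "updated_at"], timedelta(hours=1), now)
# issues == ["row 1: missing amount", "row 2: negative amount -4.0"]
```

Passing `now` in explicitly, instead of reading the clock inside the function, keeps the check deterministic and easy to test.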

Advantages

Observability data is central to improving system performance. A major benefit is its ability to provide real-time system insights. Teams can quickly identify and resolve issues, reducing operational inefficiencies and downtime.

Also, observability data allows organizations to make informed decisions based on a comprehensive understanding of system behavior. It helps teams anticipate and resolve issues.

The transparency offered by observability data fosters a culture of continuous improvement, as teams can iteratively refine systems based on actionable insights, resulting in more robust and resilient architectures.

Data observability ensures the reliability and quality of data-driven processes within a system. A central benefit is its ability to detect anomalies in data flows. By monitoring data pipelines, teams can detect discrepancies early and prevent system-wide errors.

Moreover, data observability enhances data lineage tracking, allowing businesses to trace how data transforms across various stages. This transparency aids in compliance with regulatory requirements and boosts confidence in data-driven decision-making.

Challenges and Limitations

Data observability and observability data come with multiple advantages that help organizations make informed and confident decisions. However, both concepts have limitations.

The challenges associated with data have become increasingly complex, so understanding and addressing them is crucial to harnessing the full potential of observability.

Analyzing observability data can be challenging due to the following:

- Data Volume: By some estimates, about 328.77 million terabytes of data are created every day. Observability tools may become overwhelmed by the immense volume of data modern systems produce. Handling and analyzing massive datasets may lead to performance bottlenecks.

- Data Quality: Inaccurate or incomplete data can cloud analysis, making a system’s behavior hard to understand. With low-quality data, an organization draws incorrect insights. Fixing data quality issues in real time is also a complex task.

- Data Security and Privacy: Handling sensitive data can raise concerns about privacy and security compliance. Complying with data security regulations can limit access to specific information, which reduces the scope of observability and impedes threat detection.

- Data Latency: Real-time observability requires low-latency data processing. Delays in data collection, transmission, or analysis can compromise the ability to respond promptly to critical events.

- Integration Complexity: Modern systems use multiple technologies and architectures. Integrating observability into every layer of a complex system can be intricate and time-consuming. Incomplete integration may leave blind spots in the view of system behavior and performance.

Helpful Article:

Data is growing rapidly, and organizations are struggling to keep up. Many companies are starting to limit log ingestion to cut costs, which affects observability. Gain more insights into the challenges associated with the management of observability data by reading this report on the current state of observability.

Understanding the lineage and context of data is fundamental for maintaining a clear picture of dynamic and distributed systems. However, data observability also comes with its own set of limitations. These include:

- Data Lineage and Context: Tracking the data lineage is crucial for data observability. However, maintaining accuracy in tracking the data lineage of dynamic and distributed systems is difficult. Lack of comprehensive data lineage can make it hard to understand data changes.

- Automated Anomaly Detection: Adapting to the evolving patterns of system behavior and distinguishing anomalies is a complex task. Data observability may be compromised by false positives that trigger unnecessary alerts or false negatives that overlook critical issues.

- Scalability: As the volume of data grows, the scalability of data observability solutions becomes crucial. Ensuring that tools can handle increasing amounts of data without sacrificing performance is challenging. Inadequate scalability can limit the scope of data observability, preventing organizations from effectively monitoring and managing their expanding data ecosystems.

- Operational Overhead: Implementing data observability practices often requires additional resources, including skilled personnel and advanced technologies. Without proper investment and commitment, teams may struggle to leverage data observability effectively.
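The false-positive and false-negative trade-off mentioned in the list above can be illustrated with a simple z-score check on daily row counts. This is a sketch, not how any particular tool detects anomalies: the `is_anomalous` function, the sample counts, and the thresholds are all assumptions chosen for illustration.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, threshold=3.0):
    """Flag `latest` if it lies more than `threshold` sample standard
    deviations away from the mean of `history`."""
    if len(history) < 2:
        raise ValueError("need at least two historical values")
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # No variation in history: any different value counts as anomalous.
        return latest != mu
    return abs(latest - mu) / sigma > threshold


# Hypothetical daily row counts for a table loaded by a pipeline.
row_counts = [1000, 1020, 980, 1010, 990]

is_anomalous(row_counts, 400)                    # True: a large drop
is_anomalous(row_counts, 1030)                   # False at the default threshold
is_anomalous(row_counts, 1030, threshold=1.5)    # True: a stricter threshold flags it
```

The last two calls show the tuning problem: lowering the threshold catches more real issues but also flags ordinary variation, while raising it reduces noise at the risk of missing genuine problems.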

Future Trends

As technology advances, observability and its components will also progress. Here is what you can anticipate for observability data in the future:

Machine Learning and AI Integration

Observability data will be analyzed with machine learning and artificial intelligence to obtain actionable insights. This integration will allow automated anomaly detection and predictive analysis, reducing manual effort and making it easier to improve overall system performance.

Enhanced Security Observability

Observability will play a crucial role in enhancing cybersecurity measures. Additional observability features will be added so that teams can identify and react to security threats promptly and protect the system proactively.

Real-time Analytics and Streaming Data

With the rise of event-driven architectures, observability solutions must handle and analyze data streams as they arrive. This demand marks a shift towards real-time analytics and streaming data processing.

Event-Driven Architecture Definition

Event-driven architecture (EDA) is a software design pattern in which applications interact by sharing events that signify important changes or incidents in the system. Unlike traditional request-response methods, EDA decouples services so that they can run and scale independently.
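As a rough illustration of the pattern, the following Python sketch implements a tiny in-process event bus. Production systems typically use a message broker instead; the `EventBus` class, the `order_placed` event name, and the handlers here are hypothetical.

```python
class EventBus:
    """Minimal in-process publish/subscribe bus (illustrative only)."""

    def __init__(self):
        self._handlers = {}

    def subscribe(self, event_type, handler):
        """Register a callable to run whenever `event_type` is published."""
        self._handlers.setdefault(event_type, []).append(handler)

    def publish(self, event_type, payload):
        """Deliver `payload` to every subscriber and return how many were notified."""
        handlers = self._handlers.get(event_type, [])
        for handler in handlers:
            handler(payload)
        return len(handlers)


bus = EventBus()
shipments = []
emails = []

# Two independent services react to the same event without knowing about each other.
bus.subscribe("order_placed", lambda order: shipments.append(order["id"]))
bus.subscribe("order_placed", lambda order: emails.append(order["customer"]))

notified = bus.publish("order_placed", {"id": 42, "customer": "ada@example.com"})
# notified == 2, shipments == [42], emails == ["ada@example.com"]
```

The publisher never calls the shipping or email code directly, which is why each consumer can be added, removed, or scaled without changing the producer.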

Data observability is undergoing a deep transformation, marked by a growing emphasis on data quality. Foreseen trends for the approach include:

Data Quality Monitoring

The importance of data quality will increase over the years. This will lead to data observability tools incorporating advanced monitoring capabilities to ensure data accuracy, completeness, and consistency.

Data Lineage and Dependency Mapping

Data observability will evolve to provide comprehensive data lineage and dependency mapping. Understanding data flows and dependencies will be crucial for compliance, data governance, and analytical insights.
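Dependency mapping is essentially a graph problem. The sketch below assumes lineage is stored as a dictionary that maps each dataset to the datasets it is built from; the `upstream_sources` function and the dataset names are hypothetical.

```python
def upstream_sources(lineage, dataset):
    """Return every dataset that `dataset` depends on, directly or indirectly,
    as a sorted list."""
    seen = set()
    stack = list(lineage.get(dataset, []))
    while stack:
        parent = stack.pop()
        if parent not in seen:  # also protects against cycles in the mapping
            seen.add(parent)
            stack.extend(lineage.get(parent, []))
    return sorted(seen)


# Hypothetical lineage: each key is derived from the datasets in its list.
lineage = {
    "revenue_dashboard": ["daily_revenue"],
    "daily_revenue": ["orders_clean", "fx_rates"],
    "orders_clean": ["orders_raw"],
}

sources = upstream_sources(lineage, "revenue_dashboard")
# sources == ["daily_revenue", "fx_rates", "orders_clean", "orders_raw"]
```

With a mapping like this, a team investigating a wrong number on the dashboard can list every upstream table that might have introduced it.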

Automated Data Anomaly Detection

Advanced algorithms and ML will be applied to detect anomalies in data patterns automatically. This will help organizations spot unexpected data behavior changes, speed up response times, and prevent issues.

Scalability for Big Data Environments

As organizations deal with increasing data volumes, data observability solutions will continue to evolve to handle scalability challenges. This includes efficient processing, analysis, and storage of large datasets.

How Observability Data and Data Observability Affect Decision-Making

Observability data and data observability are pivotal in enhancing decision-making processes across various domains. Together, they provide a clear picture of how the system works and ensure the data is trustworthy and accurate.

By collecting and analyzing observability data, organizations gain valuable insights into performance metrics, user behavior, and system health, enabling them to make data-driven decisions. This real-time visibility supports proactive problem-solving, minimizes downtime, and improves operational efficiency.

On the other hand, data observability safeguards the quality and reliability of data to avoid flawed decision-making. Data observability tools help organizations monitor and ensure the integrity of their data. When decision-makers trust the data they are working with, they are more likely to make confident and accurate choices.

Conclusion

Both observability data and data observability are crucial for optimizing complex systems. The former focuses on system health and behavior, and the latter guarantees the reliability and quality of data-driven processes.

Integrating the two concepts enhances decision-making processes. Doing so offers organizations and decision-makers a clear picture of system functionality and trustworthy data for informed choices.

Observability Data vs. Data Observability FAQs

What is the difference between data observability and ML observability?

Data observability concentrates on ensuring data quality and reliability, while ML observability specifically monitors a machine learning model’s overall performance during deployment.

Is data observability part of data governance?

Data observability is not inherently part of data governance. The latter is a broader framework that includes policies for managing and protecting data assets.

Who uses observability?

Observability is mostly used by software developers, IT operations, and security teams.