A staggering 98% of organizations worldwide currently use cloud-computing technologies to streamline their computing operations, typically through popular third-party providers like AWS, Microsoft Azure, and Google Cloud. Of course, cloud environments have their weaknesses. According to a recent IBM report, around 45% of security breaches are cloud-based, which is a significant concern in particular for companies that work with sensitive data. As a result, organizations are constantly looking to implement tools and practices to improve their overall security.

One popular technology teams can leverage to help protect their infrastructure from security threats is the Virtual Private Cloud (VPC). VPCs are isolated cloud segments contained within a public cloud that grant users full control over their cloud-based network infrastructure. Teams can use VPCs to protect their network by configuring their networking infrastructure in a number of different ways, including privatizing subnets, implementing route tables, and creating security groups.

For organizations using AWS, Amazon offers its own VPC service, which lets users create and manage VPCs hosted within the cloud. Amazon VPC components also generate VPC logs that capture information about the IP traffic going to and from the network interfaces within your VPC. However, these logs aren’t properly structured, which makes it extremely difficult to rely on log-based analysis to identify and prevent network issues.

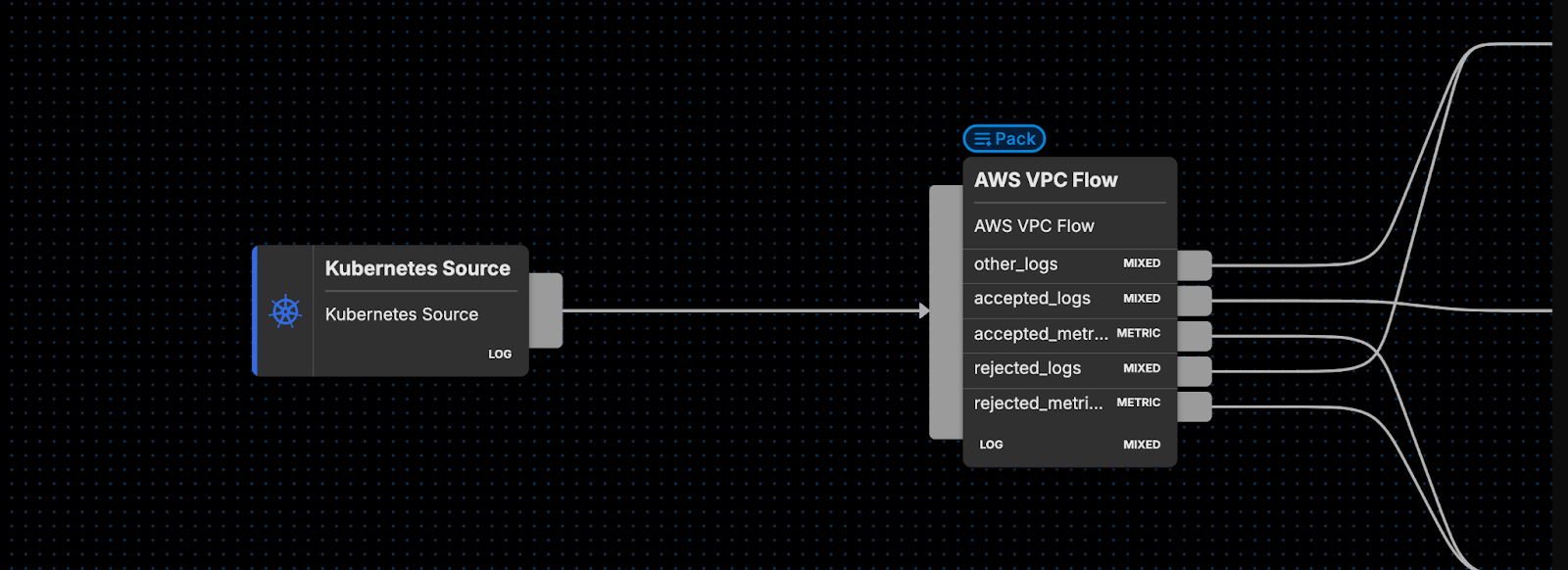

Edge Delta’s AWS VPC JSON Pack is a specialized collection of processors built specifically for automatically converting AWS VPC logs into a more structured format, enabling you to use platforms like Datadog or Edge Delta to fuel analysis — no instrumentation needed. Our packs are built to easily slot into your Edge Delta Telemetry Pipelines — all you need to do is route the source data into the AWS VPC JSON Pack and let it begin processing.

If you are unfamiliar with Edge Delta’s Telemetry Pipelines, they are an intelligent pipeline product built to handle log, metric, trace, and event data. They run at the edge and begin processing data as it’s created at the source, giving you greater control over your telemetry data at far lower cost.

How Does the AWS VPC JSON Pack Work?

The Edge Delta AWS VPC JSON Pack streamlines log transformation by automatically processing AWS VPC logs as they’re ingested. Once the processing is finished, these logs can be easily filtered, aggregated, and analyzed within the observability platforms of your choosing.

The pack consists of a few different processing steps, each of which plays a vital role in allowing teams to use VPC log data to ensure their networks are protected and operating smoothly.

Here’s a quick breakdown of the pack’s internals:

Data Filtering

The AWS VPC JSON Pack begins by using an Edge Delta Regex Filter Node to filter out the extraneous logs that begin their message with the word “version”, by:

- Utilizing a regular expression `^version` to match all log lines that begin with the word `version`
- Removing all matched logs from the primary flow

This filter ensures that only the highest-value data continues through the pack’s subsequent processing steps.

- name: omit_header_data
  type: regex_filter
  pattern: ^version
  negate: true
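
To see the effect of this filter outside a pipeline, here is a minimal Python sketch that mimics it with the standard `re` module. The sample lines and the `drop_header_lines` helper are made up for illustration; this is not how Edge Delta implements the node.

```python
import re

# Same pattern as the pack's regex_filter node.
HEADER_PATTERN = re.compile(r"^version")


def drop_header_lines(lines):
    """Keep only the lines that do NOT start with the word 'version'."""
    return [line for line in lines if not HEADER_PATTERN.search(line)]


# Hypothetical sample: a header row followed by one flow log record.
sample = [
    "version account-id interface-id srcaddr dstaddr srcport dstport "
    "protocol packets bytes start end action log-status",
    "2 123456789010 eni-1235b8ca123456789 172.31.16.139 172.31.16.21 "
    "20641 22 6 20 4249 1418530010 1418530070 ACCEPT OK",
]

kept = drop_header_lines(sample)
```

Only the second line survives, so later steps never have to parse the header row.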

Field Extraction

The pack then leverages Edge Delta’s Grok Node to convert the unstructured log message into a structured log item, by:

- Parsing the log message via a Grok expression to locate and extract the associated fields, including but not limited to the `vpc_version`, `aws_account_id`, and `network_source_ip` fields
- Adding new fields in the `attributes` section of the log item for each one, and assigning to them the extracted values

Converting to structured data allows for operating directly on the extracted values, which greatly simplifies the log search and analysis processes.

- name: grok_extract_fields
  type: grok
  pattern: '%{INT:vpc_version} %{NOTSPACE:aws_account_id} (?:%{NOTSPACE:network_interface}|-)
    (?:%{NOTSPACE:network_source_ip}|-) (?:%{NOTSPACE:network_destination_ip}|-)
    (?:%{INT:network_client_port}|-) (?:%{INT:network_destination_port}|-) (?:%{NOTSPACE:network_protocol}|-)
    (?:%{INT:network_packet}|-) (?:%{INT:network_bytes_written}|-) %{INT:vpc_interval_start}
    %{INT:vpc_interval_end} (?:%{WORD:vpc_action}|-) %{WORD:vpc_status}.*'
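
For readers who want to experiment with the same extraction locally, the Grok expression can be approximated with a plain Python regular expression. This is a rough sketch: the mapping of `INT`, `NOTSPACE`, and `WORD` to the character classes below is an approximation of the Grok primitives, the `parse_flow_log` helper is hypothetical, and the sample record is illustrative.

```python
import re

# Approximate Python equivalents of the Grok primitives used by the pack.
INT, NOTSPACE, WORD = r"[+-]?\d+", r"\S+", r"\w+"

# (field name, primitive, wrapped in "(?:...|-)" in the Grok pattern)
FIELDS = [
    ("vpc_version", INT, False),
    ("aws_account_id", NOTSPACE, False),
    ("network_interface", NOTSPACE, True),
    ("network_source_ip", NOTSPACE, True),
    ("network_destination_ip", NOTSPACE, True),
    ("network_client_port", INT, True),
    ("network_destination_port", INT, True),
    ("network_protocol", NOTSPACE, True),
    ("network_packet", INT, True),
    ("network_bytes_written", INT, True),
    ("vpc_interval_start", INT, False),
    ("vpc_interval_end", INT, False),
    ("vpc_action", WORD, True),
    ("vpc_status", WORD, False),
]


def _part(name, primitive, optional):
    group = f"(?P<{name}>{primitive})"
    return f"(?:{group}|-)" if optional else group


FLOW_LOG = re.compile(" ".join(_part(*field) for field in FIELDS) + ".*")


def parse_flow_log(message):
    """Return a log item with an 'attributes' dict, or None if no match."""
    match = FLOW_LOG.match(message)
    if match is None:
        return None
    # Groups that fell through to the literal "-" branch are None; skip them.
    attributes = {k: v for k, v in match.groupdict().items() if v is not None}
    return {"attributes": attributes}


item = parse_flow_log(
    "2 123456789010 eni-1235b8ca123456789 172.31.16.139 172.31.16.21 "
    "20641 22 6 20 4249 1418530010 1418530070 ACCEPT OK"
)
```

Extracted values are strings at this point, so numeric fields such as `network_bytes_written` need an explicit conversion before any arithmetic.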

Data Routing

After the structured format conversion, the AWS VPC JSON Pack then utilizes an Edge Delta Route Node to create two distinct data streams, by:

- Evaluating the `vpc_status` field under the `attributes` section of each log item
- Establishing two separate data streams by only forwarding logs which have a `vpc_status` ≠ `NODATA` for further processing

All logs with a vpc_status of NODATA are still saved, and routed out of the pack in a separate rejected_logs output.

- name: skip_nodata
  type: route
  paths:
    - path: all_non_nodata
      condition: item["attributes"]["vpc_status"] != "NODATA"
      exit_if_matched: true
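
The routing condition is easy to reason about as a simple split. The following Python sketch applies the same `vpc_status` check to a few hand-written log items; the `route_by_status` helper and the sample items are assumptions for illustration only.

```python
def route_by_status(items):
    """Split items into those forwarded for processing and NODATA records."""
    forwarded, nodata = [], []
    for item in items:
        # Mirrors the node's condition: vpc_status != "NODATA".
        if item["attributes"].get("vpc_status") != "NODATA":
            forwarded.append(item)
        else:
            nodata.append(item)
    return forwarded, nodata


# Hypothetical parsed items, one per status value.
items = [
    {"attributes": {"vpc_status": "OK", "vpc_action": "ACCEPT"}},
    {"attributes": {"vpc_status": "NODATA"}},
    {"attributes": {"vpc_status": "SKIPDATA"}},
]

forwarded, nodata = route_by_status(items)
```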

Timestamp Transformation

After isolating the desired logs, the pack then performs timestamp normalization using the Log Transform Node by:

- Converting the `vpc_interval_end` field from Unix seconds to Unix milliseconds
- Inserting the converted value into the `timestamp` field using the Convert Timestamp Macro

This normalization process enables teams to get an accurate timeline of log events, which is critical for correlating, analyzing, and diagnosing performance issues and other anomalies over time.

- name: log_transform_timestamp
  type: log_transform
  transformations:
    - field_path: item["timestamp"]
      operation: upsert
      value: convert_timestamp(item["attributes"]["vpc_interval_end"], "Unix Second", "Unix Milli")

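The arithmetic behind this step is small enough to check by hand. The Python sketch below reproduces the seconds-to-milliseconds conversion on an illustrative value; `unix_seconds_to_millis` is a hypothetical stand-in for the macro, not its implementation.

```python
from datetime import datetime, timezone


def unix_seconds_to_millis(seconds):
    """Convert a Unix timestamp in seconds (string or int) to milliseconds."""
    return int(seconds) * 1000


# Illustrative item: vpc_interval_end arrives as a string from the parser.
item = {"attributes": {"vpc_interval_end": "1418530070"}}

# Equivalent of the upsert into item["timestamp"].
item["timestamp"] = unix_seconds_to_millis(item["attributes"]["vpc_interval_end"])

# Human-readable form of the new timestamp, in UTC, as a sanity check.
readable = datetime.fromtimestamp(
    item["timestamp"] / 1000, tz=timezone.utc
).isoformat()
```
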
Data Routing

One last routing step is performed, separating the logs into two distinct streams depending on whether their vpc_action field is set to REJECT or ACCEPT. Once separated, the pack routes the logs as follows:

- Logs with `vpc_action` set to `REJECT` are routed to the `rejected_l2m` and `rejected_logs` nodes
- Logs with `vpc_action` set to `ACCEPT` are routed to the `accepted_l2m` and `accepted_logs` nodes
- Logs that do not match either condition are routed to the `other_logs` node

This classification allows you to differentiate between accepted and rejected requests, which helps in isolating and investigating network security events. By routing logs based on specific actions, you can streamline your monitoring processes and focus on the logs that are most relevant to your network’s security, ensuring quicker identification and resolution of issues.

- name: action_router
  type: route
  paths:
    - path: rejected
      condition: item["attributes"]["vpc_action"] == "REJECT"
      exit_if_matched: true
    - path: accepted
      condition: item["attributes"]["vpc_action"] == "ACCEPT"
      exit_if_matched: true
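
As a rough mental model, the router behaves like a first-match-wins classifier with a fallback. The sketch below expresses that in Python; the `route_by_action` helper and the `other` label for unmatched items are illustrative assumptions.

```python
def route_by_action(item):
    """Return the name of the first path whose condition matches."""
    action = item["attributes"].get("vpc_action")
    if action == "REJECT":
        return "rejected"
    if action == "ACCEPT":
        return "accepted"
    # Neither condition matched (for example, the action was logged as "-").
    return "other"


# Hypothetical items covering all three outcomes.
paths = [
    route_by_action({"attributes": {"vpc_action": "ACCEPT"}}),
    route_by_action({"attributes": {"vpc_action": "REJECT"}}),
    route_by_action({"attributes": {}}),
]
```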

Log to Metric Conversions

Finally, the AWS VPC JSON Pack derives key metrics from the processed logs, including network bytes written, network packets, and VPC interval duration, via the Edge Delta Log to Metric Node, to enhance further analysis. More specifically, it:

- Converts logs flowing through the `accepted_l2m` output into key metrics, facilitating effective reporting and monitoring
- Converts logs flowing through the `rejected_l2m` output into key metrics, to pinpoint issues and anomalies
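
To make the idea of deriving metrics from logs concrete, here is a small Python sketch that sums bytes, packets, and interval duration over a batch of parsed items. The aggregation choices (simple sums), the `summarize` helper, and the sample values are assumptions for illustration; the pack's actual metric definitions are in the Edge Delta documentation.

```python
def summarize(items):
    """Aggregate a batch of parsed flow log items into simple totals."""
    totals = {
        "count": 0,
        "network_bytes_written": 0,
        "network_packet": 0,
        "interval_seconds": 0,
    }
    for item in items:
        attrs = item["attributes"]
        totals["count"] += 1
        # Parsed values are strings, so convert before adding.
        totals["network_bytes_written"] += int(attrs.get("network_bytes_written", 0))
        totals["network_packet"] += int(attrs.get("network_packet", 0))
        totals["interval_seconds"] += (
            int(attrs["vpc_interval_end"]) - int(attrs["vpc_interval_start"])
        )
    return totals


# Hypothetical accepted items.
accepted = [
    {"attributes": {"network_bytes_written": "4249", "network_packet": "20",
                    "vpc_interval_start": "1418530010", "vpc_interval_end": "1418530070"}},
    {"attributes": {"network_bytes_written": "1000", "network_packet": "5",
                    "vpc_interval_start": "1418530010", "vpc_interval_end": "1418530040"}},
]

accepted_metrics = summarize(accepted)
```

Running the same function over the rejected stream gives a second set of totals that can be compared against the accepted ones.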

Pack Outputs

In total, there are five outputs from the AWS VPC JSON Pack:

- `accepted_logs`: all logs that have `vpc_action` set to `ACCEPT`
- `rejected_logs`: all logs that have `vpc_action` set to `REJECT`
- `other_logs`: all logs that don’t meet the specific conditions for acceptance or rejection
- `accepted_metrics_output`: metrics derived from the accepted logs
- `rejected_metrics_output`: metrics derived from the rejected logs

For more in-depth coverage of these processors and the AWS VPC JSON Pack, check out our full documentation.

AWS VPC JSON Pack in Practice

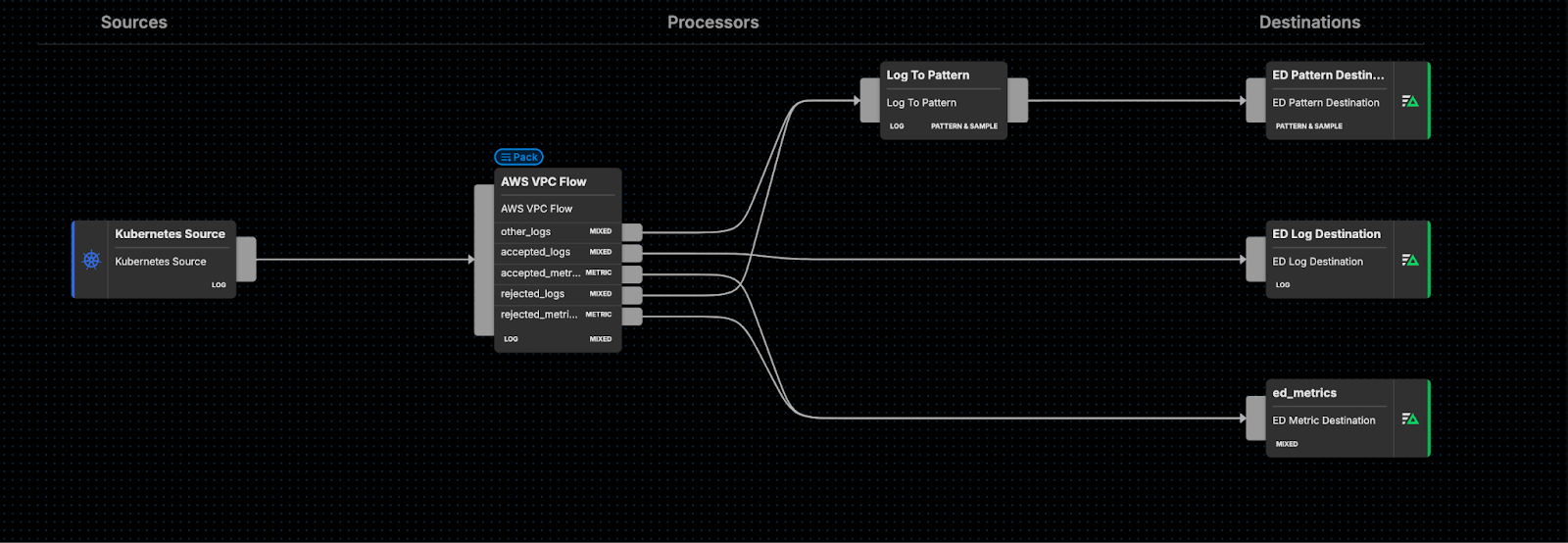

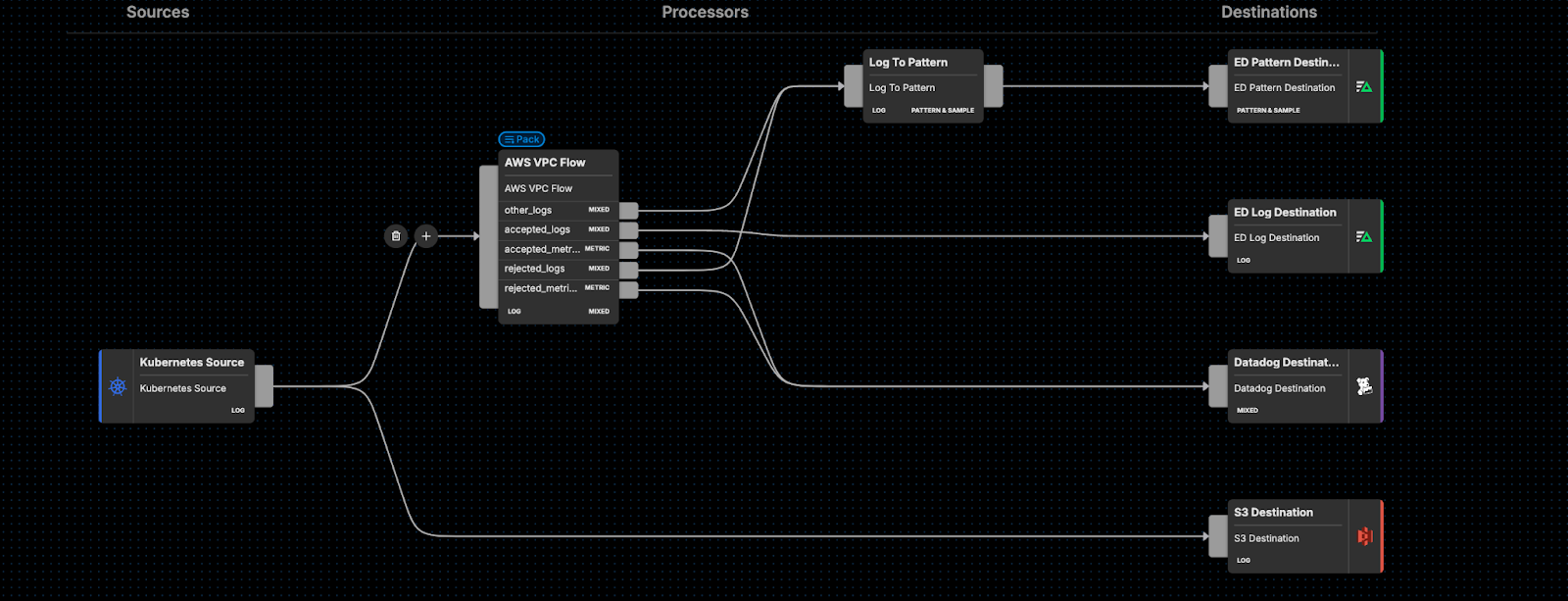

Once you’ve added the AWS VPC JSON Pack into your Edge Delta Pipeline, you can route any output to any destination of your choosing.

For instance, you can convert the {other} and {rejected} logs into patterns, and route them along with the derived metrics into Edge Delta’s backend for further analysis, as shown below:

Alternatively, you can use Edge Delta to store your VPC flow logs, and send only the derived metrics into a more expensive downstream destination like Datadog, while sending a full copy of raw data into S3 for compliance:

Getting Started

Ready to see our pack in action? Visit our pipeline sandbox to try it out for free! Already an Edge Delta customer? Check out our packs list and add the AWS VPC JSON Pack to any running pipeline!