Typically, log data is not evenly distributed. Certain datasets consume a much larger percentage of your resources than others. And among the data you index in your observability platform, a large share is of little quantifiable value.

Frequently, we find that as much as half of a customer’s log data could be categorized as low-value. It simply doesn’t make sense to send this data to a premium storage tier, like your observability index. Instead, it can be routed to archive/object storage, sent to a lower-cost log search platform, or discarded altogether (depending on how you use the data).

In this article, I’m going to walk through three steps to identify low-value data and move it to the proper storage destination:

- Identify and remove unnecessary or redundant log messages

- Remove unneeded information from log messages

- Standardize your application logs

For the purposes of this article, I’m defining a “dataset” as a type of log (for example, NGINX logs). In reality, you can categorize your log data further (for example, if NGINX is being used across multiple applications, environments, and architectures), but that isn’t necessary for now.

Before You Start: Identify the Top Datasets by Volume

Most logging and observability tools provide visibility into volumes of data via specific metadata tags. Look into a seven-day, or better yet, 30-day window of your data to measure your highest-volume datasets, since these numbers can vary seasonally. Once you’ve identified your highest-volume datasets, you know where you’ll see the largest returns on your optimization efforts.
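As a rough illustration of this ranking, here is a minimal Python sketch. It assumes you can export log records as `(dataset, message)` pairs; that record shape, the sample data, and the function name `top_datasets_by_volume` are hypothetical and not tied to any particular platform.

```python
from collections import Counter


def top_datasets_by_volume(records, n=5):
    """Return the n datasets with the most bytes, largest first.

    `records` is an iterable of (dataset, message) pairs. This shape is an
    assumption; adapt it to whatever your platform exports.
    """
    volume = Counter()
    for dataset, message in records:
        # Count encoded bytes rather than characters, since ingestion is
        # usually billed by size.
        volume[dataset] += len(message.encode("utf-8"))
    return volume.most_common(n)


# Hypothetical sample records for illustration only.
sample = [
    ("nginx", "GET /api/healthcheck 200"),
    ("nginx", "GET /checkout 500"),
    ("app", "DEBUG cache miss"),
]

for dataset, size in top_datasets_by_volume(sample):
    print(dataset, size)
```

In practice you would run this over a 7- or 30-day export, or use the equivalent group-by query in your platform, rather than an in-memory list.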

The steps below assume that no dataset can be omitted in its entirety. If you are sending such a dataset to your logging platform, you can of course remove it entirely.

For each of your highest-volume datasets, perform the following steps.

Step #1: Identify and Remove Unnecessary or Redundant Log Messages

Once you’ve identified your highest-volume datasets, the lowest-hanging fruit is to remove log messages from your log index that are either unneeded or redundant. In my experience, removing these messages from your index can reduce data volumes by over 30%.

Example #1: Access Logs

Your access logs capture all requests sent to a specific service (e.g., NGINX, Apache, or a load balancer). Within your access logs, identify specific endpoints that receive requests at a regular interval, such as health checks (/api/healthcheck) or pings (/ping). The volume of data these checks generate can be significant, especially if they occur every minute or more often. These logs are rarely useful, since most teams handle health checking through other means.

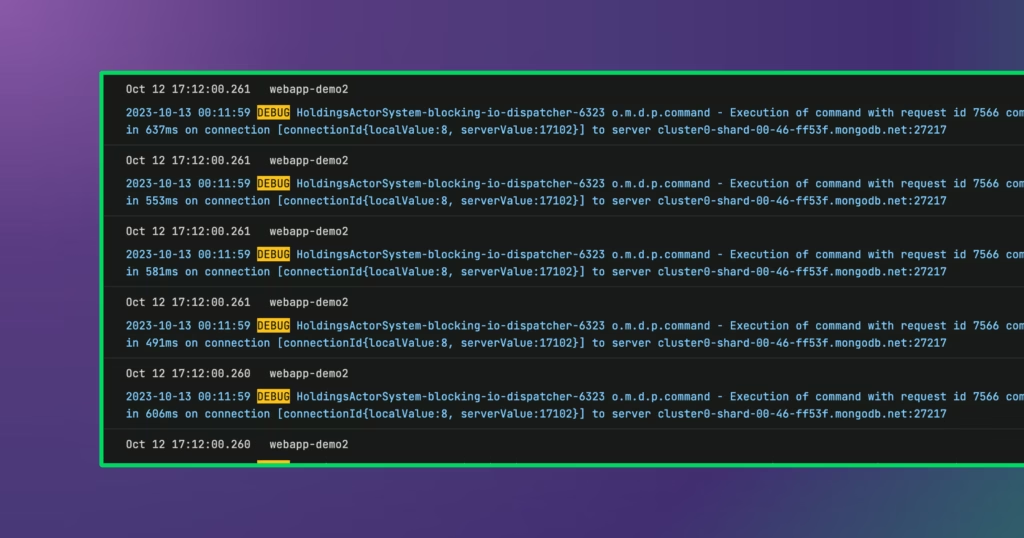
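To make the filter concrete, here is a minimal Python sketch that drops access log lines for periodic endpoints. It assumes lines where the request is the first double-quoted field, as in the common and combined access log formats; the `NOISY_PATHS` set and the function names are hypothetical.

```python
from urllib.parse import urlsplit

# Hypothetical list of endpoints that are hit on a fixed cadence.
NOISY_PATHS = {"/api/healthcheck", "/ping"}


def request_path(line):
    """Return the request path from an access log line, or None.

    Assumes the request ('GET /ping HTTP/1.1') is the first quoted field.
    """
    try:
        request = line.split('"')[1]
        target = request.split()[1]
    except IndexError:
        return None
    # Strip any query string so /ping?x=1 still matches /ping.
    return urlsplit(target).path


def keep(line):
    """True if the line should still be sent to the index."""
    return request_path(line) not in NOISY_PATHS


# Hypothetical sample lines for illustration only.
lines = [
    '10.0.0.1 - - [10/Oct/2024:13:55:36 +0000] "GET /api/healthcheck HTTP/1.1" 200 2',
    '10.0.0.2 - - [10/Oct/2024:13:55:37 +0000] "GET /checkout?id=7 HTTP/1.1" 500 512',
]
for line in filter(keep, lines):
    print(line)
```

Lines that can’t be parsed are kept rather than dropped, so a format change fails toward sending more data instead of silently losing it.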

Example #2: DEBUG Logs

Often, users turn on DEBUG logs for a specific purpose and forget to turn them off. This action causes many unexpected spikes in log volumes. In an ideal world, you would stop logging DEBUG messages altogether. However, that is often an extreme measure.

Instead, analyze your DEBUG messages, measuring their volume and how frequently they are queried (if at all). Subsets that are infrequently used can be filtered out. Alternatively, you can use Edge Delta to centrally turn DEBUG logs on when you need them and off when you don’t.
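One way to start this analysis is to break DEBUG volume down by source. The Python sketch below assumes a hypothetical line layout of `<timestamp> <LEVEL> <logger> <message>`; your format will differ, and query frequency would have to come from your platform’s own usage data.

```python
from collections import Counter


def debug_volume_by_logger(lines):
    """Sum the bytes of DEBUG lines per logger name.

    Assumes space-separated lines: timestamp, level, logger, message.
    Lines that don't match that layout are ignored.
    """
    volume = Counter()
    for line in lines:
        parts = line.split(" ", 3)
        if len(parts) == 4 and parts[1] == "DEBUG":
            volume[parts[2]] += len(line.encode("utf-8"))
    return volume


# Hypothetical sample lines for illustration only.
sample_lines = [
    "2024-10-10T13:55:36Z DEBUG cache miss key=42",
    "2024-10-10T13:55:37Z DEBUG cache miss key=43",
    "2024-10-10T13:55:38Z DEBUG db query took 3ms",
    "2024-10-10T13:55:39Z INFO api request served",
]

for logger, size in debug_volume_by_logger(sample_lines).most_common():
    print(logger, size)
```

The loggers at the top of this list that nobody queries are the first candidates for filtering.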

Step #2: Remove Unneeded Information from Log Messages

Once you complete Step #1 and remove the “low-hanging fruit” from your log index, you can cut down the verbosity of your log messages. It’s becoming increasingly common for excessive tagging/enveloping to inflate log volumes. Within the log messages, you can identify sizable chunks of information that aren’t used and can be discarded.

Example: Kubernetes Logs

Logs from Kubernetes clusters are often enriched with Kubernetes tags (e.g., container, pod, and node-level information). Rarely do teams need these tags to search their logs. Additionally, you’ll often find redundant tags that you can consolidate, like pod_id and pod_name.

Note: this step is a bit more involved than Step #1. Before making any changes, it’s important to analyze your users’ queries to understand both the tags they use and the information they are seeking from their logs. This is not an exact science and you may want to talk to your “power users” from each dataset to confirm your findings.

Once you identify specific tags or parts of the message that can be removed, you can do the following:

- Change the agent configuration to remove unneeded tags, since tagging typically happens at the agent level.

- Mask parts of your message that can be removed at the agent level. Usually, you would mask sensitive information, but masking can also be used to help you cut log volume. Simply mask the unneeded parts of your message with empty strings.

- Change the logging itself to log only what is relevant. (Note: this action is the hardest of the three to execute since it involves code and config changes.)
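The first two options can be sketched in a few lines of Python. This is an illustration of the idea, not any agent’s actual configuration: the event shape (a dict with `message` and `tags`), the `KEEP_TAGS` allow-list, and the `trace_ctx=` segment are all hypothetical.

```python
import re

# Hypothetical allow-list: the tags your users actually query.
KEEP_TAGS = {"namespace", "pod_name", "container"}

# Hypothetical pattern for a message segment that nobody reads.
UNUSED_SEGMENT = re.compile(r"\s*trace_ctx=\S+")


def slim(event):
    """Return a copy of the event with unused tags and text removed."""
    tags = {k: v for k, v in event.get("tags", {}).items() if k in KEEP_TAGS}
    # "Mask" the unused segment by replacing it with an empty string.
    message = UNUSED_SEGMENT.sub("", event.get("message", ""))
    return {**event, "tags": tags, "message": message}


# Hypothetical sample event for illustration only.
event = {
    "message": "order placed id=7 trace_ctx=abc123",
    "tags": {
        "namespace": "shop",
        "pod_name": "cart-1",
        "pod_id": "9f3",  # redundant with pod_name in this example
        "node": "n1",
        "container": "cart",
    },
}
print(slim(event))
```

An allow-list is used here instead of a deny-list so that newly introduced tags are dropped by default until someone shows they are needed.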

Step #3: Standardize Your Application Logs

Step #3 builds on the previous step, since we have already shaped the logs we produce to an extent. While this step applies primarily to application logs, it is also relevant for middleware and off-the-shelf data.

Shaping your log data is the most involved step detailed in this post. To execute this step, you need to involve your application owners.

Here, you will create a logging standard that defines what to log, and in what format, to drive greater efficiency. This includes standardizing metadata, but also the schema and contents of the logs themselves.
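As one possible shape for such a standard, the Python sketch below uses the standard library’s `logging` module to emit every record as JSON with a fixed set of fields. The field names and the required metadata (`service`, `env`) are hypothetical; your own standard would define them.

```python
import json
import logging

# Hypothetical metadata that the standard requires on every log line.
STANDARD_FIELDS = ("service", "env")


class StandardFormatter(logging.Formatter):
    """Render each record as one JSON object with a fixed schema."""

    def format(self, record):
        payload = {
            "ts": self.formatTime(record, "%Y-%m-%dT%H:%M:%S%z"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        for field in STANDARD_FIELDS:
            # Values passed via `extra=` become attributes on the record.
            payload[field] = getattr(record, field, "unknown")
        return json.dumps(payload)


handler = logging.StreamHandler()
handler.setFormatter(StandardFormatter())
logger = logging.getLogger("checkout")
logger.addHandler(handler)

logger.warning("payment retry", extra={"service": "checkout", "env": "prod"})
```

Because the formatter only emits the fields named in the standard, anything else attached to a record is left out of the output, which keeps the schema and the volume predictable.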

Like many things, log optimization follows an 80/20 rule. Steps #1 and #2 in this blog post account for roughly 20% of the effort and 80% of the return on investment. This step is the inverse, and candidly, many teams opt to skip it.

However, by establishing logging standards, you are not only eliminating low-value data but also improving the usability of your data. In essence, you’re capturing the exact information you want and equipping everyone to find what they are looking for.

Many customers are seeking greater efficiency from their log indexes. They recognize that not all data is of equal value, and it’s not sustainable to route everything to a premium storage tier. The steps outlined above provide a good starting point for you to optimize your log index and drive greater cost efficiency across the organization.