Hello world! I’m David, Principal Sales Architect at Edge Delta.

Wait, Who Are You?

I’ve spent seven of the last nine years at Google Cloud. During this time, I worked with all kinds of companies to modernize their applications, take advantage of the cloud, and increase developer velocity. Before that, I spent two years at Sumo Logic, working directly with customers on improving their observability practice.

I’m writing this blog post to explain why – after nearly a decade away from observability – I’ve decided to re-enter this space.

Observability Remains Vital, But Innovation Has Stagnated

When I first joined Google Cloud, the technical landscape looked entirely different from how it looks today. There was no Kubernetes. There was no Spanner or Athena or CosmosDB. Rust and Go were just getting off the ground. Google Cloud didn’t even have a Private Interconnect option available.

In short, the amount of change I saw while I was there was absolutely massive.

You would expect that observability and monitoring matched this pace. But instead, I’m struck by how little has changed.

The major players in the space are the same, with a few acquisitions here and there. Their messaging is still the same. The fundamental problems of growing data volumes and making meaning out of heaps of information are still the same (and, if anything, less solved over time).

Yes, more features keep emerging in observability tools. But the fundamental practice of observability is still the same, and it is not rising to the challenge of today’s software needs.

So, I decided to join Edge Delta for a few key reasons:

Edge Delta Takes a Fundamentally Different Approach to Observability

The old observability model is based almost entirely on the following steps:

- Get all the data from applications and machines to a central service

- Apply as much compute as possible to extract meaning from the data

This is a reasonable approach for reasonable data volumes. But for services that need to scale, its limits start to show… whether from an economic, performance, or pure manageability perspective.

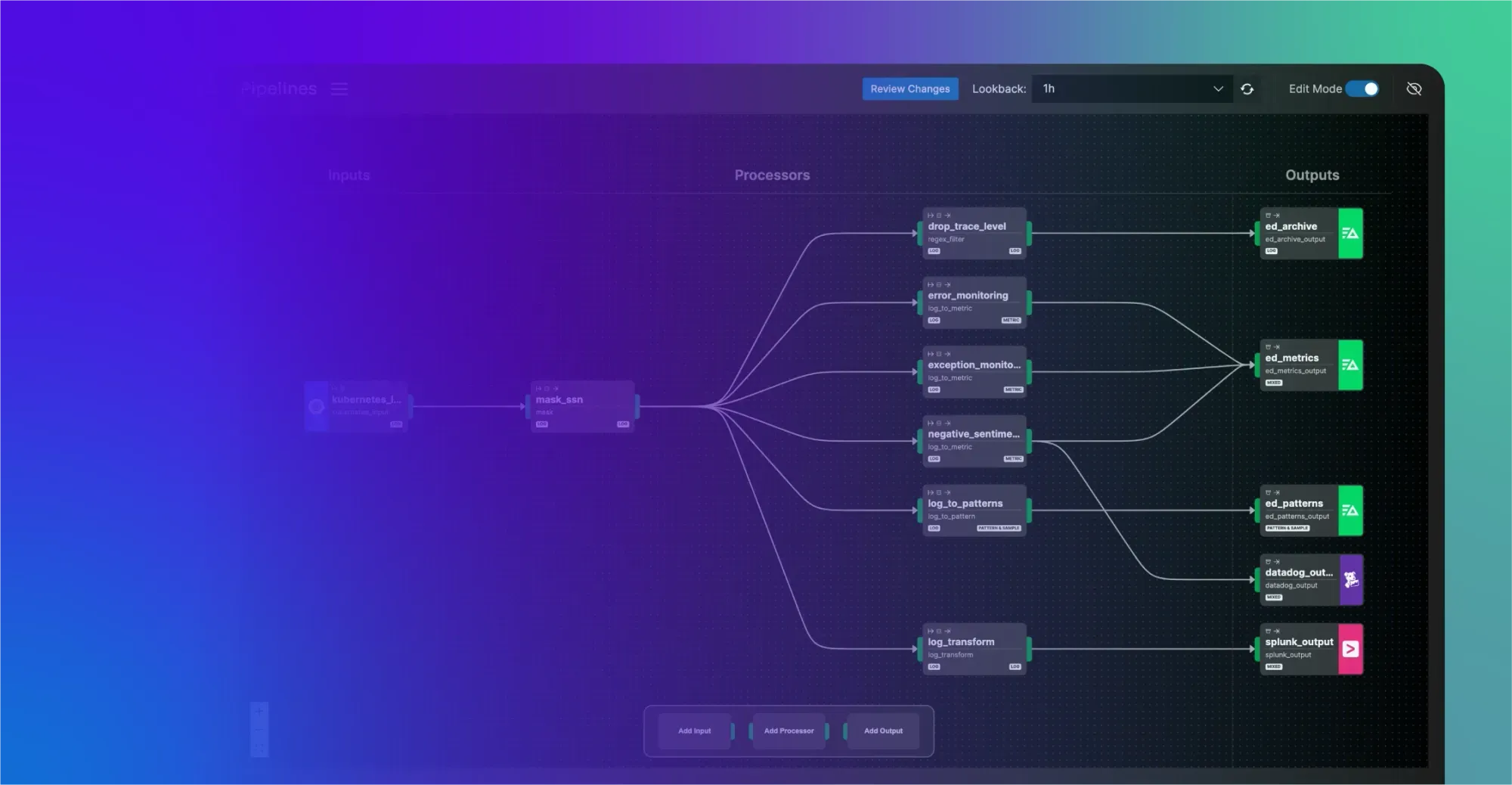

Edge Delta turns this model on its head, looking to pack as much analysis as possible into the first step of this process. That means processing and analyzing data where it’s created, rather than waiting on a mountain of centralized compute before getting any actionable information. The software that captures the data can route and transform it in flight, in a scalable and configurable way.
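To make the idea concrete, here is a minimal Python sketch of what "analysis in the first step" could look like. It is purely illustrative and is not Edge Delta's actual agent or API: the `EdgeProcessor` class, its field names, and the assumed `LEVEL message` log format are all made up for this example. The processor counts routine log lines locally and forwards only the lines that need attention, so the central service receives a compact summary instead of every raw line.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class EdgeProcessor:
    """Hypothetical edge-side processor (illustration only, not a real product API)."""

    # Log levels that are forwarded in full; everything else is only counted.
    forward_levels: frozenset = frozenset({"ERROR", "FATAL"})
    counts: Counter = field(default_factory=Counter)
    forwarded: list = field(default_factory=list)

    def process(self, line: str) -> None:
        # Assumed log format: "<LEVEL> <message>".
        level, _, message = line.partition(" ")
        self.counts[level] += 1
        if level in self.forward_levels:
            self.forwarded.append({"level": level, "message": message})

    def flush(self) -> dict:
        # Build the compact payload that would be shipped upstream, then reset.
        summary = {"counts": dict(self.counts), "events": list(self.forwarded)}
        self.counts.clear()
        self.forwarded.clear()
        return summary


processor = EdgeProcessor()
for line in [
    "INFO request served",
    "INFO request served",
    "ERROR upstream timeout",
]:
    processor.process(line)

summary = processor.flush()
print(summary)
```

In this toy run, three raw lines collapse into one payload holding per-level counts plus the single error event. A real pipeline would add routing, sampling, and richer transforms, but the shape of the trade-off is the same: do the cheap analysis where the data is created, and ship only what is worth shipping.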

After all, all problems in computer science can be solved with another layer of indirection. At Edge Delta, we’re making a layer that was previously untweakable into another lever in the observability toolbox.

Which means as part of that…

Edge Delta is on the Road to Remake Observability in a Better Way

We can’t keep using centralized, early-2000s techniques to approach the observability needs of the 2020s and beyond. We need to enable a practice that helps teams meet the challenges of more data, more distributed decision making, and a faster pace of change than ever before. Keeping the lights on and keeping them green is the goal, but the task is getting more and more complex each year.

Observability needs to be more dynamic.

As part of that, teams that engage with observability tools will need every option at their disposal to build a practice that works for them. Edge Delta is enabling more capabilities in a neglected area of the stack so that teams can get the information they need, when they need it, without compromises.

The Future is Uncertain, but it WILL Be Better

As of this writing, AI is taking the tech world by storm. Organizations are just getting comfortable in the cloud and with containers, but the change is only accelerating. Keeping an eye on it all requires a shift in approach.

I’m confident that the future can be better. That’s why I’ve come back to observability, and to Edge Delta in particular, to help chart the path into a more dynamic future.