Amazon Simple Storage Service (Amazon S3) is an object storage service known for its scalability, data availability, security, and performance. One of its key features is server access logging, which is essential for monitoring and auditing bucket activity.

S3 access logs capture detailed information about every request made to a bucket. This includes PUT actions, GET actions, and DELETE actions. The logs also contain essential metadata, like the following:

- Bucket name

- Operation type

- Requestor identity

- Timestamp for each action

Analyzing S3 access logs is a recommended security best practice. It helps maintain compliance standards and identify unauthorized data access. These logs are essential in data breach investigations, providing detailed insights into data access patterns.

This article shows you how to use S3 access logs. It covers configuration, manual and automated analysis, best practices, and common challenges with their solutions.


Understanding S3 Access Logs

S3 access logs provide detailed records of the requests made to your S3 buckets. These logs can help you analyze your S3 usage patterns, diagnose issues, and ensure security and compliance. Each access log entry contains several components that provide information about the request.

The following table explains each field’s purpose and gives an example of its contents in S3 access logs.

| Component | Description | Example Value |
| --- | --- | --- |
| Bucket Owner | Canonical user ID of the owner of the source bucket | 79a59df4-8c7e-4eaa-bc55-99d123456789 |
| Bucket | Name of the bucket that the request was processed against | my-bucket |
| Time | Time when the request was received (in UTC) | [12/Jul/2023:12:34:56 +0000] |
| Remote IP Address | IP address of the requester | 192.0.2.3 |
| Requester | Canonical user ID of the requester, or Anonymous | 79a59df4-8c7e-4eaa-bc55-99d123456789 |
| Request ID | Request ID associated with the request | 3E57427F3EXAMPLE |
| Operation | Operation that the request attempted | REST.GET.OBJECT |
| Key | Key of the object that the request was made for | photos/2023/07/12/image.jpg |
| Request-URI | Request-URI part of the HTTP request message | GET /photos/2023/07/12/image.jpg HTTP/1.1 |
| HTTP Status | HTTP status code of the response | 200 |
| Error Code | S3 error code, if any | NoSuchKey |
| Bytes Sent | Number of response bytes sent, excluding HTTP headers | 1024 |
| Object Size | Total size of the object in bytes | 2048 |
| Total Time | Time taken to serve the request, in milliseconds | 18 |
| Turn-Around Time | Time taken to respond to the request, in milliseconds | 14 |
| Referrer | Value of the HTTP Referrer header | https://example.com/start.html |
| User-Agent | Value of the HTTP User-Agent header | Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36 |
| Version Id | Version ID of the object that was requested | 3HL4kqtJlcpXrof3BJ-BqEJ1q9tdMIhS |

The S3 access logs are structured to provide detailed information about each request made to your S3 buckets. Each log entry is a single line that records a single request and contains several fields that offer crucial details about the request. The fields are space-delimited and follow a specific order.

Here is an example of an S3 access log format entry:

79a59df4-8c7e-4eaa-bc55-99d123456789 my-bucket [12/Jul/2023:12:34:56 +0000] 192.0.2.3 79a59df4-8c7e-4eaa-bc55-99d123456789 3E57427F3EXAMPLE REST.GET.OBJECT photos/2023/07/12/image.jpg "GET /photos/2023/07/12/image.jpg HTTP/1.1" 200 - 1024 2048 18 14 "https://example.com/start.html" "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36" 3HL4kqtJlcpXrof3BJ-BqEJ1q9tdMIhS
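Because the timestamp is wrapped in brackets and several fields are quoted, splitting on spaces alone does not recover the fields cleanly. The following is a minimal Python sketch of one way to parse the example entry above with a regular expression. The names `LOG_LINE` and `parse_log_line` are illustrative, the pattern covers only the fields listed in the table, and it assumes quoted fields contain no embedded double quotes.

```python
import re
from datetime import datetime

# Matches the leading fields described in the table above. Anything after
# the version ID is captured in "rest" and left unparsed in this sketch.
LOG_LINE = re.compile(
    r'^(?P<bucket_owner>\S+) (?P<bucket>\S+) \[(?P<time>[^\]]+)\] '
    r'(?P<remote_ip>\S+) (?P<requester>\S+) (?P<request_id>\S+) '
    r'(?P<operation>\S+) (?P<key>\S+) "(?P<request_uri>[^"]*)" '
    r'(?P<http_status>\S+) (?P<error_code>\S+) (?P<bytes_sent>\S+) '
    r'(?P<object_size>\S+) (?P<total_time>\S+) (?P<turnaround_time>\S+) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)" (?P<version_id>\S+)'
    r'(?P<rest>.*)$'
)


def parse_log_line(line):
    """Return a dict of fields for one log line, or None if it does not match."""
    match = LOG_LINE.match(line.strip())
    if match is None:
        return None
    entry = match.groupdict()
    # Convert the bracketed timestamp into a timezone-aware datetime.
    entry["time"] = datetime.strptime(entry["time"], "%d/%b/%Y:%H:%M:%S %z")
    # Numeric fields hold "-" when no value applies; map those to None.
    for name in ("bytes_sent", "object_size", "total_time", "turnaround_time"):
        entry[name] = None if entry[name] == "-" else int(entry[name])
    return entry


sample = (
    '79a59df4-8c7e-4eaa-bc55-99d123456789 my-bucket '
    '[12/Jul/2023:12:34:56 +0000] 192.0.2.3 '
    '79a59df4-8c7e-4eaa-bc55-99d123456789 3E57427F3EXAMPLE REST.GET.OBJECT '
    'photos/2023/07/12/image.jpg "GET /photos/2023/07/12/image.jpg HTTP/1.1" '
    '200 - 1024 2048 18 14 "https://example.com/start.html" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
    '(KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36" '
    '3HL4kqtJlcpXrof3BJ-BqEJ1q9tdMIhS'
)
entry = parse_log_line(sample)
print(entry["operation"], entry["http_status"], entry["bytes_sent"])
```

Returning `None` for lines that do not match lets a caller skip malformed entries instead of failing on the first one.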

Configuring S3 Access Logs

S3 offers scalable storage for vast amounts of data. Monitoring access to your S3 buckets is crucial for security, troubleshooting, and compliance. Configuring S3 access logs allows you to track and analyze requests made to your buckets, providing valuable insights into usage patterns.

Keep reading to learn how to enable S3 access logging and configure log delivery.

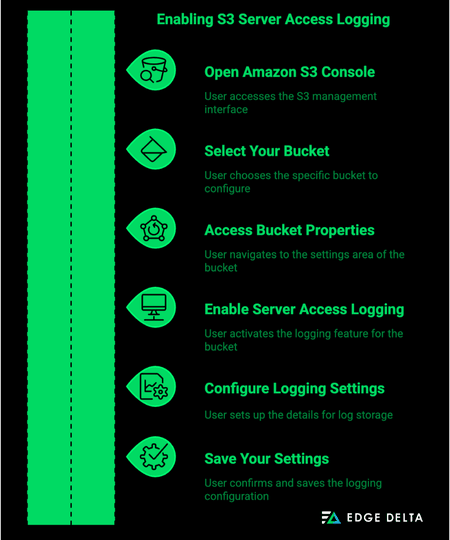

Enabling S3 Access Logging

Amazon S3 bucket logging provides detailed information on object requests and requesters, even if they use your root account. Follow these steps to enable S3 server access logging:

Step 1: Open the Amazon S3 Console

- Sign in to your AWS Management Console.

- Navigate to the Amazon S3 console by searching for S3 in the AWS Services search bar.

Step 2: Select Your Bucket

- Locate the bucket you want to enable logging for from the list of your S3 buckets.

- Click on the bucket name to access its settings.

Step 3: Access Bucket Properties

- Go to the ‘Properties’ tab in the selected bucket’s menu.

Step 4: Enable Server Access Logging

- Scroll down to the ‘Server Access Logging’ section in the Properties tab.

- Click on ‘Edit’ to modify the logging settings.

Step 5: Configure Logging Settings

- Enable logging by toggling the switch to the ‘On’ position.

- Choose a target bucket for storing the access logs. Ensure that:

  - The target bucket is different from the source bucket.

  - Both buckets are in the same AWS Region.

- Specify a prefix for your logs to distinguish them from other objects in the target bucket (e.g., “access-logs/”).

Step 6: Save Your Settings

- Review your settings to ensure everything is configured correctly.

- Click on ‘Save changes’ to apply the logging configuration.

Some important considerations

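- Separate Buckets: The target bucket (where logs are stored) should be different from the source bucket (where logging is enabled). Logging a bucket to itself generates additional log entries for every log delivery. The target bucket must be in the same AWS Region as the source bucket.

- Log Availability: Logs will typically be available for downloading within 24 hours after enabling logging. Delivery is best-effort, so an occasional record may arrive late or be missing.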
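The same console steps can also be scripted. Here is a minimal sketch, assuming the boto3 SDK and its `put_bucket_logging` call. The helper names, bucket names, and default prefix are illustrative rather than part of any AWS API, and the S3 client is passed in so the function can be exercised without credentials.

```python
def build_logging_status(target_bucket, target_prefix="access-logs/"):
    """Build the BucketLoggingStatus payload that put_bucket_logging expects."""
    return {
        "LoggingEnabled": {
            "TargetBucket": target_bucket,
            "TargetPrefix": target_prefix,
        }
    }


def enable_access_logging(s3_client, source_bucket, target_bucket,
                          target_prefix="access-logs/"):
    """Turn on server access logging for source_bucket."""
    if source_bucket == target_bucket:
        # Logging a bucket to itself produces log entries about log deliveries.
        raise ValueError("use a separate target bucket for access logs")
    s3_client.put_bucket_logging(
        Bucket=source_bucket,
        BucketLoggingStatus=build_logging_status(target_bucket, target_prefix),
    )


# Example usage (needs the boto3 package and AWS credentials):
#   import boto3
#   enable_access_logging(boto3.client("s3"), "my-bucket", "my-bucket-logs")
```

The target bucket still needs a policy that lets the S3 logging service write to it before any logs arrive.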

Configuring Log Delivery


To configure the destination bucket for S3 access logs, you need to enable logging on the source S3 bucket and specify a target bucket where the logs will be delivered.

Follow the steps below to enable log delivery for Amazon S3 access logs:

Step 1: Create the Destination Bucket

- Log into AWS Management Console and navigate to S3.

- Create a new S3 bucket for storing access logs:

  - Ensure the bucket is in the same AWS Region and owned by the same account as the source bucket.

  - Ensure the bucket does not have an S3 Object Lock default retention period configured or Requester Pays enabled.

Step 2: Set Bucket Permissions

- Navigate to the new bucket’s “Permissions” tab.

- Edit the bucket policy to allow log delivery:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": {
            "Service": "logging.s3.amazonaws.com"
          },
          "Action": "s3:PutObject",
          "Resource": "arn:aws:s3:::YOUR-DESTINATION-BUCKET-NAME/*"
        }
      ]
    }

- Replace YOUR-DESTINATION-BUCKET-NAME with your bucket name.

Step 3: Enable Access Logging on the Source Bucket

- Navigate to the source bucket’s “Properties” tab.

- Enable Server Access Logging:

  - Specify the destination bucket.

  - Optionally, specify a target prefix (e.g., logs/) for log file organization.

Step 4: Verify the Configuration

- Check the destination bucket after some time to ensure logs are being delivered.

This setup ensures that Amazon S3 access logs are correctly delivered to and organized within the specified destination bucket.
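The verification step can be scripted as well. This is a small sketch, assuming boto3's `list_objects_v2` call. The function name `delivered_log_keys` and the default `logs/` prefix are illustrative, and the client is passed in by the caller.

```python
def delivered_log_keys(s3_client, destination_bucket, prefix="logs/", max_keys=10):
    """Return up to max_keys object keys found under the log prefix."""
    response = s3_client.list_objects_v2(
        Bucket=destination_bucket,
        Prefix=prefix,
        MaxKeys=max_keys,
    )
    # "Contents" is absent from the response when nothing matches the prefix.
    return [obj["Key"] for obj in response.get("Contents", [])]


# Example usage (needs the boto3 package and AWS credentials):
#   import boto3
#   keys = delivered_log_keys(boto3.client("s3"), "my-bucket-logs")
#   print("logs delivered" if keys else "no logs yet")
```

An empty result shortly after enabling logging is not necessarily an error, since delivery can lag behind the requests being logged.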

Manually analyzing S3 access logs using command-line tools involves downloading the log files from the S3 bucket to a local or remote system and using tools like ‘grep’, ‘awk’, ‘sed’ and ‘sort’ to filter, parse, and analyze the log entries.

By employing these utilities, users can search for specific patterns, extract relevant fields, and perform aggregations or sorting to:

- Understand access patterns

- Identify unauthorized access attempts

- Monitor usage metrics

Analyzing S3 Access Logs

Analyzing S3 access logs is crucial for monitoring and understanding access patterns, detecting unauthorized access, and ensuring data security. This guide explores two primary methods for analyzing S3 access logs: manual analysis using command-line tools and leveraging specialized log analysis tools.

1. Manual Log Analysis

Manual log analysis uses command-line tools to filter, search, and extract relevant information from S3 access logs. This method is beneficial for quick, ad-hoc analysis and smaller log files.

You can efficiently analyze log data using command-line tools like grep, awk, and sed. Here’s a brief overview of these tools and how they can be used:

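grep: Searches for specific patterns within log files. For example, to find requests that S3 denied:

    grep 'AccessDenied' s3_access_logs.log

awk: Extracts specific fields from log entries. Because the bracketed timestamp spans two space-separated fields, the remote IP is field 5 and the operation is field 8:

    awk '{print $5, $8}' s3_access_logs.log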

sed: Performs basic text transformations on log data.

sed 's/old_string/new_string/g' s3_access_logs.log

Here’s a table summarizing common commands for log analysis:

| Command | Description |
| --- | --- |
| grep | Search for specific patterns within log files |
| awk | Extract specific fields from log entries |
| sed | Perform basic text transformations on log data |
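When a one-liner is not enough, the same filtering and counting can be done in a short script. The sketch below is one possible approach: it counts 403 responses per remote IP address. The names `FIELDS` and `count_denied_by_ip` and the sample lines are illustrative, and the pattern assumes the field order described earlier in this article.

```python
import re
from collections import Counter

# Captures remote IP, operation, and HTTP status from the leading fields.
FIELDS = re.compile(
    r'^\S+ \S+ \[[^\]]+\] (?P<ip>\S+) \S+ \S+ (?P<operation>\S+) \S+ '
    r'"[^"]*" (?P<status>\S+) '
)


def count_denied_by_ip(lines):
    """Count HTTP 403 responses per remote IP address."""
    counts = Counter()
    for line in lines:
        match = FIELDS.match(line)
        if match and match.group("status") == "403":
            counts[match.group("ip")] += 1
    return counts


# Hypothetical sample lines; real input would come from a downloaded log file:
#   with open("s3_access_logs.log") as handle:
#       print(count_denied_by_ip(handle).most_common(5))
sample_lines = [
    'owner my-bucket [12/Jul/2023:12:34:56 +0000] 192.0.2.3 - REQ1 '
    'REST.GET.OBJECT a.jpg "GET /a.jpg HTTP/1.1" 403 AccessDenied 243 - 7 - "-" "curl/8.0" -',
    'owner my-bucket [12/Jul/2023:12:35:10 +0000] 192.0.2.3 - REQ2 '
    'REST.GET.OBJECT b.jpg "GET /b.jpg HTTP/1.1" 403 AccessDenied 243 - 6 - "-" "curl/8.0" -',
    'owner my-bucket [12/Jul/2023:12:36:00 +0000] 198.51.100.7 - REQ3 '
    'REST.GET.OBJECT a.jpg "GET /a.jpg HTTP/1.1" 200 - 1024 1024 18 14 "-" "curl/8.0" -',
]
denied = count_denied_by_ip(sample_lines)
print(denied.most_common(5))
```

A `Counter` keeps the aggregation step short and makes "top N offenders" questions a single `most_common` call.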

2. Using Log Analysis Tools

For large-scale or continuous log analysis, utilizing specialized log analysis tools can provide advanced capabilities including real-time monitoring, complex queries, and visualizations. The table below summarizes some log analysis tools for analyzing S3 access logs.

| Tool | Description | Key Features |
| --- | --- | --- |
| AWS Athena | Serverless query service for analyzing logs | SQL-based queries, integration with S3 |
| Splunk | Enterprise-level log management solution | Real-time search, dashboards |
| ELK Stack | Open-source log analysis and visualization | Elasticsearch, Logstash, Kibana |
| Sumo Logic | Cloud-native machine data analytics service | Continuous intelligence, dashboards |

By combining manual log analysis techniques with the advanced capabilities of specialized tools, you can effectively monitor and analyze S3 access logs.

Setting Up Automated Log Analysis

Logs record events and activities within systems and play a vital role in monitoring them. However, manually sifting through logs to identify issues is time-consuming and error-prone.

The following sections show how to set up automated log analysis so you can catch problems with little manual effort.

1. Using AWS Athena

The prerequisite for using AWS Athena to query Amazon S3 access logs is to define date-based partitioning for your S3 structure. This process involves organizing your S3 bucket with a folder structure that maps to a timestamp column, typically in the format /YYYY/MM/DD.

Here’s a step-by-step guide to setting up AWS Athena for querying S3 access logs:

- Step 1: Enable Server Access Logging:

Turn on server access logging for your S3 bucket. Note the Target bucket and Target prefix values for use in Athena.

- Step 2: Set Up Date-Based Partitioning:

Organize your S3 bucket with a date-based folder structure (e.g., /YYYY/MM/DD).

- Step 3: Open Amazon Athena Console:

Set up a query result location in an S3 bucket.

- Step 4: Create Database:

Run this query in the Athena Query editor:

CREATE DATABASE s3_access_logs_db;

- Step 5: Create Table Schema:

Define your table with the following schema:

    CREATE EXTERNAL TABLE s3_access_logs_db.mybucket_logs(
      bucketowner STRING,
      bucket_name STRING,
      requestdatetime STRING,
      remoteip STRING,
      requester STRING,
      requestid STRING,
      operation STRING,
      key STRING,
      request_uri STRING,
      httpstatus STRING,
      errorcode STRING,
      bytessent BIGINT,
      objectsize BIGINT,
      totaltime STRING,
      turnaroundtime STRING,
      referrer STRING,
      useragent STRING,
      versionid STRING,
      hostid STRING,
      sigv STRING,
      ciphersuite STRING,
      authtype STRING,
      endpoint STRING,
      tlsversion STRING,
      accesspointarn STRING,
      aclrequired STRING)
    PARTITIONED BY (`timestamp` STRING)
    ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.RegexSerDe'
    WITH SERDEPROPERTIES ('input.regex'='...')
    STORED AS INPUTFORMAT 'org.apache.hadoop.mapred.TextInputFormat'
    OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'
    LOCATION 's3://your-bucket-name/prefix/';

The partition column name is wrapped in backticks because timestamp is a reserved word in Athena DDL statements.

- Step 6: Preview Table:

In the Athena console, under Tables, choose your table and click “Preview table” to ensure the data appears correctly.

Here are some examples of Athena queries:

- Find Deleted Object Requests

When managing an Amazon S3 bucket, tracking deletion operations is often crucial to ensure data integrity and security. Use the following query to find the request for a deleted object:

    SELECT *
    FROM s3_access_logs_db.mybucket_logs
    WHERE key = 'images/picture.jpg'
      AND operation LIKE '%DELETE%';

- 403 Access Denied Errors

To investigate bucket access denied, you can search your logs for details on 403 Access Denied events. The following query fetches relevant details, including the request datetime, requester, operation, request ID, and host ID:

    SELECT requestdatetime, requester, operation, requestid, hostid
    FROM s3_access_logs_db.mybucket_logs
    WHERE httpstatus = '403';

- HTTP 5xx Errors in a Specific Time:

Server errors, indicated by HTTP 5xx status codes, can significantly impact the availability and reliability of your S3 services. To diagnose these errors, you may need to focus on a specific time period to understand the context and frequency of these issues. The following query retrieves Amazon S3 request IDs, object keys, and error codes for all HTTP 5xx errors encountered within a specified time frame:

    SELECT requestdatetime, key, httpstatus, errorcode, requestid, hostid
    FROM s3_access_logs_db.mybucket_logs
    WHERE httpstatus LIKE '5%'
      AND timestamp BETWEEN '2024/01/29' AND '2024/01/30';
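Queries like these can also be run on a schedule instead of by hand in the console. The following is a rough sketch, assuming boto3's Athena client and its `start_query_execution`, `get_query_execution`, and `get_query_results` calls. The function name `run_athena_query`, the output location, and the polling interval are illustrative choices.

```python
import time


def run_athena_query(athena_client, sql, database, output_location, poll_seconds=1.0):
    """Run a query, wait for it to finish, and return rows as lists of strings."""
    started = athena_client.start_query_execution(
        QueryString=sql,
        QueryExecutionContext={"Database": database},
        ResultConfiguration={"OutputLocation": output_location},
    )
    query_id = started["QueryExecutionId"]

    # Poll until the query reaches a terminal state.
    while True:
        status = athena_client.get_query_execution(QueryExecutionId=query_id)
        state = status["QueryExecution"]["Status"]["State"]
        if state in ("SUCCEEDED", "FAILED", "CANCELLED"):
            break
        time.sleep(poll_seconds)
    if state != "SUCCEEDED":
        raise RuntimeError(f"Athena query {query_id} ended in state {state}")

    # Only the first page of results is read in this sketch; for SELECT
    # queries the first row holds the column names.
    results = athena_client.get_query_results(QueryExecutionId=query_id)
    return [
        [cell.get("VarCharValue") for cell in row["Data"]]
        for row in results["ResultSet"]["Rows"]
    ]


# Example usage (needs the boto3 package and AWS credentials):
#   import boto3
#   rows = run_athena_query(
#       boto3.client("athena"),
#       "SELECT requester, operation FROM mybucket_logs WHERE httpstatus = '403'",
#       "s3_access_logs_db",
#       "s3://your-query-results-bucket/athena/",
#   )
```

A production version would page through results and cap the total wait time rather than polling indefinitely.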

2. Integrating with AWS CloudWatch

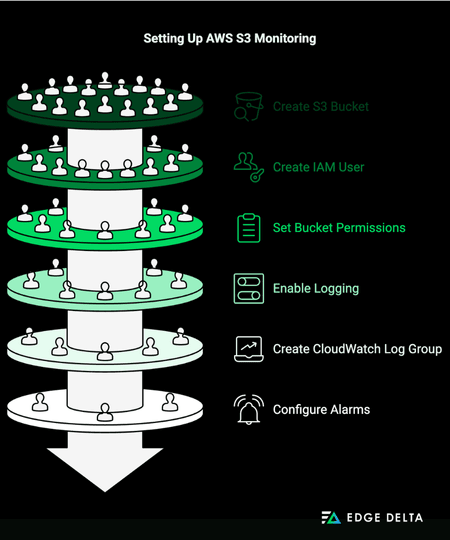

Integrating S3 access logs with AWS CloudWatch enables you to monitor metrics and set up alerts for various activities and events related to your S3 buckets. Here’s a step-by-step guide to setting up this integration:

Step 1: Create an Amazon S3 Bucket

- Go to the S3 Console: Open the Amazon S3 console at https://console.aws.amazon.com/s3/.

- Create a Bucket: Click “Create bucket,” name it, choose a region, and click “Create bucket.”

Step 2: Create an IAM User with Full Access

- Open the IAM Console: Go to https://console.aws.amazon.com/iam/.

- Create an IAM User: Click “Add user,” choose “Programmatic access,” and attach the AmazonS3FullAccess and CloudWatchLogsFullAccess policies. Save the access keys.

Step 3: Set Permissions on the S3 Bucket

- Select the Bucket: In the S3 console, select your bucket.

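- Set Bucket Policy: Go to “Permissions” and set a policy that allows the S3 logging service to write objects (s3:PutObject). Avoid a wildcard principal here, because "Principal": "*" would let anyone write to the bucket. Here’s a sample policy:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": {
            "Service": "logging.s3.amazonaws.com"
          },
          "Action": "s3:PutObject",
          "Resource": "arn:aws:s3:::your-log-bucket/*"
        }
      ]
    }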

Step 4: Enable S3 Access Logging

- Enable Logging: In the S3 console, select your bucket, go to “Properties,” and enable logging. Choose the bucket created for logs and save changes.

Step 5: Create CloudWatch Log Group

- Open CloudWatch Console: Go to https://console.aws.amazon.com/cloudwatch/.

- Create Log Group: Navigate to “Logs” and create a log group (e.g., /aws/s3/access-logs).

Step 6: Create a Lambda Function to Process Logs

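- Create Function: In the Lambda console, create a function with Python 3.9. Attach an execution role that can read from the log bucket and write to CloudWatch Logs, and add an S3 object-created trigger on the log bucket so the function runs when new log files arrive.

- Add Code: Use the following example code to read S3 access logs and send them to CloudWatch:

    import json
    import re
    import urllib.parse
    from datetime import datetime

    import boto3

    s3 = boto3.client('s3')
    logs = boto3.client('logs')

    LOG_GROUP = '/aws/s3/access-logs'
    LOG_STREAM = 's3-log-stream'
    # Matches the bracketed request time, e.g. [12/Jul/2023:12:34:56 +0000]
    TIME_PATTERN = re.compile(r'\[(\d{2}/\w{3}/\d{4}:\d{2}:\d{2}:\d{2} [+-]\d{4})\]')

    def to_epoch_millis(line):
        """Return the request time of a log line as epoch milliseconds, or None."""
        match = TIME_PATTERN.search(line)
        if match is None:
            return None
        parsed = datetime.strptime(match.group(1), '%d/%b/%Y:%H:%M:%S %z')
        return int(parsed.timestamp() * 1000)

    def lambda_handler(event, context):
        # Create the log group and stream on first use; ignore "already exists".
        try:
            logs.create_log_group(logGroupName=LOG_GROUP)
        except logs.exceptions.ResourceAlreadyExistsException:
            pass
        try:
            logs.create_log_stream(logGroupName=LOG_GROUP, logStreamName=LOG_STREAM)
        except logs.exceptions.ResourceAlreadyExistsException:
            pass

        log_events = []
        for record in event['Records']:
            bucket_name = record['s3']['bucket']['name']
            # Object keys arrive URL-encoded in S3 event notifications.
            key = urllib.parse.unquote_plus(record['s3']['object']['key'])
            response = s3.get_object(Bucket=bucket_name, Key=key)
            # Server access log files are delivered as plain text, not gzip.
            log_data = response['Body'].read().decode('utf-8')
            for line in log_data.splitlines():
                timestamp = to_epoch_millis(line)
                if timestamp is not None:
                    log_events.append({'timestamp': timestamp, 'message': line})

        if log_events:
            # put_log_events expects events in chronological order; very large
            # files would also need to be split into smaller batches.
            log_events.sort(key=lambda e: e['timestamp'])
            logs.put_log_events(
                logGroupName=LOG_GROUP,
                logStreamName=LOG_STREAM,
                logEvents=log_events
            )

        return {
            'statusCode': 200,
            'body': json.dumps('Logs processed successfully!')
        }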

Step 7: Configure CloudWatch Alarms

- Create Metrics Filter: In the CloudWatch console, navigate to the log group and click “Create metric filter.” Define a filter pattern and create the metric.

- Set Up Alarms: Create an alarm based on the metric, set thresholds, and configure notifications.
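The metric filter from Step 7 can be created programmatically too. The sketch below assumes boto3's CloudWatch Logs `put_metric_filter` call and a space-delimited filter pattern in which the bracketed time and the quoted request URI each count as a single field. The filter name, metric name, and namespace are hypothetical, and the pattern is worth checking against your own log lines before relying on it.

```python
# Field names in the pattern are labels chosen for this sketch; only their
# position matters. The tenth field is the HTTP status.
DENIED_PATTERN = (
    "[owner, bucket, time, ip, requester, request_id, "
    "operation, key, uri, status=403, ...]"
)


def create_denied_requests_filter(logs_client, log_group="/aws/s3/access-logs"):
    """Create a metric filter that counts access log lines with status 403."""
    logs_client.put_metric_filter(
        logGroupName=log_group,
        filterName="S3AccessDenied",              # hypothetical name
        filterPattern=DENIED_PATTERN,
        metricTransformations=[
            {
                "metricName": "AccessDeniedCount",         # hypothetical name
                "metricNamespace": "Custom/S3AccessLogs",  # hypothetical namespace
                "metricValue": "1",                        # add 1 per matching line
            }
        ],
    )


# Example usage (needs the boto3 package and AWS credentials):
#   import boto3
#   create_denied_requests_filter(boto3.client("logs"))
```

An alarm can then be attached to the resulting metric in the same way as to any other CloudWatch metric.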

To analyze S3 access logs with tools outside the AWS ecosystem, implement flexible and robust Telemetry Pipelines, like the ones pioneered by Edge Delta. Edge Delta’s pipelines can ingest log data directly from S3, process it in-flight, and route it to any number of destinations, including Datadog, Splunk, New Relic, Edge Delta’s observability backend, and more.

Best Practices for Using S3 Access Logs

Amazon S3 access logs provide a wealth of information about requests made to your S3 buckets. By leveraging these logs, you can gain valuable insights into usage patterns, detect anomalies, and ensure security and compliance. However, managing these logs effectively requires adhering to certain best practices to maximize their utility and minimize potential overhead.

To utilize the capabilities of S3 access logs, best practices such as log centralization and efficient log rotation must be implemented. Read on to explore these best practices in more detail.

1. Centralizing Log Storage

Centralizing log storage is crucial for simplifying log management and enabling comprehensive analysis. Organizing logs in one place makes it easier to search, analyze, and correlate log data across buckets and services.

Effective destinations for centralized logs include a central S3 bucket, Amazon CloudWatch Logs, Splunk, or Elastic. By configuring all S3 buckets to deliver their access logs to a single destination, you create a unified repository for all log data.

Example S3 bucket policy for centralized logging (the principal is the S3 logging service, logging.s3.amazonaws.com):

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": {
            "Service": "logging.s3.amazonaws.com"
          },
          "Action": "s3:PutObject",
          "Resource": "arn:aws:s3:::central-logging-bucket/*",
          "Condition": {
            "StringEquals": {
              "aws:SourceAccount": "YOUR_AWS_ACCOUNT_ID"
            },
            "ArnLike": {
              "aws:SourceArn": "arn:aws:s3:::source-bucket-name"
            }
          }
        }
      ]
    }

2. Implementing Log Rotation and Retention

Log rotation and retention policies are essential for managing log file sizes and ensuring compliance with data governance policies. Without proper rotation and retention, log files can grow indefinitely, leading to increased storage costs and potential difficulties in log analysis.

Using S3’s lifecycle policies, you can automate log rotation and retention. This process ensures that old log files are archived or deleted according to your organization’s data retention policies.

Here’s an example of a log rotation configuration:

    {
      "Rules": [
        {
          "ID": "RotateAndRetainLogs",
          "Filter": {
            "Prefix": "logs/"
          },
          "Status": "Enabled",
          "Transitions": [
            {
              "Days": 30,
              "StorageClass": "GLACIER"
            }
          ],
          "Expiration": {
            "Days": 365
          }
        }
      ]
    }

In this example, logs are moved to S3 Glacier after 30 days, reducing storage costs, and are permanently deleted after 365 days. Automating rotation this way helps you comply with data retention policies.
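The same rule can be applied from code. Here is a minimal sketch, assuming boto3's `put_bucket_lifecycle_configuration` call. The helper names and default values are illustrative, and the rule uses the `Filter` form of the prefix.

```python
def build_log_lifecycle(prefix="logs/", archive_after_days=30, expire_after_days=365):
    """Build a lifecycle configuration that archives, then expires, log objects."""
    if expire_after_days <= archive_after_days:
        raise ValueError("expiration must come after the Glacier transition")
    return {
        "Rules": [
            {
                "ID": "RotateAndRetainLogs",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": archive_after_days, "StorageClass": "GLACIER"}
                ],
                "Expiration": {"Days": expire_after_days},
            }
        ]
    }


def apply_log_lifecycle(s3_client, bucket, **options):
    """Replace the bucket's lifecycle configuration with the log rotation rule."""
    s3_client.put_bucket_lifecycle_configuration(
        Bucket=bucket,
        LifecycleConfiguration=build_log_lifecycle(**options),
    )


# Example usage (needs the boto3 package and AWS credentials):
#   import boto3
#   apply_log_lifecycle(boto3.client("s3"), "my-access-log-bucket")
```

This call replaces any lifecycle rules already on the bucket, so existing rules would need to be merged into the configuration first.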

3. Securing Log Data

Ensuring the security of your Amazon S3 buckets is critical to preventing unauthorized access and data breaches. Here are some best practices to enhance the security of your S3 buckets:

- Use encryption: Enable server-side encryption to protect log data at rest, and require HTTPS (for example, with a bucket policy condition on aws:SecureTransport) to protect data in transit.

- Implement Access Controls: Restrict access to S3 access logs using IAM and S3 bucket policies.

- Set Retention Policies: Implement appropriate data retention policies to manage the lifecycle of log data.

- Set Monitoring and Alerts: Use AWS CloudWatch and GuardDuty to monitor and set up alerts for suspicious activities related to access logs.

Here’s an example of a security configuration that enables default server-side encryption (SSE-S3) on the log bucket:

    aws s3api put-bucket-encryption --bucket my-access-log-bucket --server-side-encryption-configuration '{
      "Rules": [
        {
          "ApplyServerSideEncryptionByDefault": {
            "SSEAlgorithm": "AES256"
          }
        }
      ]
    }'
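Encryption at rest covers only half of the first recommendation; requiring HTTPS is usually handled with a bucket policy. The following sketch builds a deny statement keyed on the `aws:SecureTransport` condition and applies it with boto3's `put_bucket_policy`. The helper names and the statement ID are illustrative.

```python
import json


def build_https_only_statement(bucket):
    """Build a statement that denies any request not sent over HTTPS."""
    return {
        "Sid": "DenyInsecureTransport",  # illustrative statement ID
        "Effect": "Deny",
        "Principal": "*",
        "Action": "s3:*",
        "Resource": [
            f"arn:aws:s3:::{bucket}",
            f"arn:aws:s3:::{bucket}/*",
        ],
        "Condition": {"Bool": {"aws:SecureTransport": "false"}},
    }


def apply_policy(s3_client, bucket, statements):
    """Replace the bucket policy with the given list of statements."""
    policy = {"Version": "2012-10-17", "Statement": list(statements)}
    s3_client.put_bucket_policy(Bucket=bucket, Policy=json.dumps(policy))


# Example usage (needs the boto3 package and AWS credentials). Because
# put_bucket_policy overwrites the existing policy, pass the log delivery
# statement alongside the HTTPS-only statement:
#   import boto3
#   apply_policy(boto3.client("s3"), "my-access-log-bucket",
#                [log_delivery_statement, build_https_only_statement("my-access-log-bucket")])
```

Here `log_delivery_statement` stands for the statement that allows the S3 logging service to write to the bucket; dropping it would stop log delivery.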

4. Setting Up Alerts and Notifications

To ensure timely responses to critical events in your S3 access logs, it’s essential to implement a robust alerting and notification system. This system will help you quickly identify and address security breaches, performance issues, and operational anomalies, minimizing potential damage and downtime.

Here are some best practices for setting up alerts and notifications to stay on top of your S3 access log events:

- Enable Access Logging: Activate access logging on your S3 buckets using the AWS Management Console or CLI.

- Configure CloudWatch: To organize your logs, create a CloudWatch log group and log streams. Set up alarms to detect specific patterns or thresholds, such as multiple failed access attempts.

- Example Alert Configuration:

  Alarm Condition: High number of PUT requests in a short period.

  Action: Send an SNS notification.

  PutRequests is an S3 request metric, so request metrics must be enabled on the bucket first. The FilterId dimension is the ID of that metrics configuration (EntireBucket in this example).

    aws cloudwatch put-metric-alarm --alarm-name HighPutRequests \
      --metric-name PutRequests \
      --namespace AWS/S3 \
      --statistic Sum \
      --period 300 \
      --threshold 100 \
      --comparison-operator GreaterThanThreshold \
      --dimensions Name=BucketName,Value=my-bucket Name=FilterId,Value=EntireBucket \
      --evaluation-periods 1 \
      --alarm-actions arn:aws:sns:us-east-1:123456789012:my-sns-topic

- Integrate Third-Party Tools: Use tools for advanced monitoring and customizable alerts.

By following these steps, you can ensure critical events in your S3 access logs trigger immediate notifications, allowing for swift responses to potential security issues.

5. Regularly Reviewing Logs

Regularly reviewing logs is crucial for maintaining a proactive approach to system monitoring. Logs contain valuable information about the health and performance of your applications and infrastructure. By regularly analyzing these logs, you can identify and resolve issues before they escalate, ensuring the smooth operation of your systems. Proactive log review helps in spotting unusual patterns, understanding system behavior, and improving overall security posture.

Creating a structured log review schedule ensures that log analysis becomes a consistent part of your monitoring routine. Here’s an example of how you might structure such a schedule:

| Review Frequency | Logs Reviewed | Activities |
| --- | --- | --- |
| Daily | Application logs, Security logs | Check for error messages, failed login attempts, and unusual activity |
| Weekly | System logs, Database logs | Analyze performance metrics, resource usage, and backup statuses |
| Monthly | Network logs, Audit logs | Review for policy violations, network anomalies, and compliance checks |
| Quarterly | Comprehensive log audit | Conduct a thorough review of all logs, identify long-term trends, and update monitoring strategies |

Regular log reviews not only help in early detection of potential issues but also contribute to ongoing system optimization and security improvements.

Challenges and Solutions in Using S3 Access Logs

Amazon S3 access logs provide detailed records of requests made to an S3 bucket. These logs are invaluable for understanding usage patterns, diagnosing issues, and ensuring security compliance. However, leveraging S3 access logs effectively can pose several challenges.

Read on for specific challenges and solutions to maximize your S3 access logs.

Common Challenges

Businesses worldwide rely on Amazon S3 for its exceptional flexibility and robust security in object storage. However, managing and utilizing S3 access logs can present several challenges. Understanding these challenges is crucial for effective log management and security.

Here are some of the typical challenges faced:

- High Volume of Log Data

High-volume buckets can generate massive quantities of log data. For businesses with extensive usage, this translates to an overwhelming amount of data to store and analyze. Managing this volume can strain storage resources and complicate data management efforts.

- Ensuring Log Security and Privacy

Each file stored in S3 represents a potential target for threat actors. If access logs are not securely managed, sensitive information could be exposed. Ensuring log security involves implementing robust AWS Identity and Access Management (IAM) configurations and minimizing public access to logs. It’s critical to follow security best practices to mitigate these risks.

- Efficiently Parsing and Analyzing Log Data

Extracting meaningful insights from S3 access logs requires data analysis skills and a thorough understanding of AWS services. Logs are delivered in a raw text format that must be parsed efficiently before they become actionable data. This process takes time and resources and requires specialized tools and knowledge.

- Cost Considerations

Storing and analyzing large amounts of log data can be expensive. For high-traffic buckets, the expenses associated with long-term storage and the computational power needed for analysis can add up quickly. Businesses need to balance the benefits of detailed logging with the associated costs to maintain a sustainable approach.

Overcoming Challenges

To address these challenges, businesses can implement several strategies:

- Log Aggregation and Filtering

Combine logs from different sources into one system to simplify management and analysis. Tools like AWS CloudWatch Logs and the ELK Stack (Elasticsearch, Logstash, and Kibana) can help filter and aggregate log data, making it easier to handle.

- Log Security with Encryption and Access Controls

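Secure your logs using server-side encryption (SSE) and enforce strict IAM policies to ensure that only authorized users have access. Note that a bucket receiving server access logs supports default encryption with SSE-S3 only. AWS Key Management Service (KMS) can still manage encryption keys for logs that you copy to other buckets for analysis, adding an extra layer of security there.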

- Advanced Log Analysis

Use AWS Athena to query S3 logs directly with SQL, simplifying the analysis process. Machine learning models can also automatically detect unusual patterns and potential security threats, enhancing your ability to respond to issues quickly.

- Automating Log Management

Use AWS Lambda to automate the processing and movement of log files, significantly reducing manual effort. Implementing S3 lifecycle policies to transition older logs to more cost-effective storage options like S3 Glacier can help manage storage costs efficiently.

- Cost Management

Monitor and manage the costs associated with log storage and analysis regularly. Tools like AWS Cost Explorer and AWS Budgets can help track expenses and set up alerts for cost overruns, ensuring a balanced approach to log management. Tools like Edge Delta’s Telemetry Pipelines can enable you to intelligently process data before routing it to more cost-effective analysis tools, reducing your overall spend.

- Security Enhancements

Continuously update and review security measures to protect log data. Conduct security assessments and audits to identify vulnerabilities, and implement multi-factor authentication (MFA) to enhance access security.

By using these strategies, businesses can effectively manage their S3 access logs, gain valuable insights, and maintain both security and cost efficiency.

Conclusion

Using S3 access logs is crucial for monitoring and troubleshooting in AWS. The steps provided guide you through setup and configuration. Analyzing the data helps you implement best practices for performance and security. These insights help solve common S3 access logging challenges. This gives you better visibility and control over storage activities. Mastering S3 access logs enhances security and optimizes operations. It also helps you understand your S3 usage better.

FAQs for How To Use S3 Access Logs

How to read logs in S3?

To read S3 logs, you first need to retrieve them and parse them into a readable form. While you can do this manually, AWS services like Amazon Athena are more efficient. Create a database in Athena, define a table for your logs, and use SQL queries to read the entries and extract insights.

How do you check who accessed the S3 bucket?

To check who accessed an S3 bucket, enable Amazon S3 server access logging to capture information about requests to your buckets and objects. You can also use Amazon Athena to analyze these logs and AWS CloudTrail to track API calls to your S3 resources.

How do I grant read access to my S3 bucket?

To grant read access to your S3 bucket, create an IAM policy with s3:GetObject permissions (plus s3:ListBucket if users need to list objects) and attach it to the relevant IAM user, group, or role. Ensure the bucket policy allows access for these IAM entities. Verify that network access controls permit access to S3 endpoints. These steps will enable secure read access to your S3 bucket.

How long do S3 access logs take?

S3 access logs typically become available from a few minutes to a few hours after the request or event occurs. Amazon S3 periodically collects and aggregates the logs before delivering them to the specified S3 bucket. Delivery is best-effort, and the delay can depend on several factors, including the volume of requests, the configuration of the logging system, and the overall load on AWS services.
