Python logs are records of a Python-based program’s execution. This data helps developers understand how the program behaves and spot errors or missed opportunities. To use this data, developers must first add logging to their programs.

Implementing Python logging provides insights into a program or application’s errors and conditions. Since the Python standard library includes a logging module, programmers can add logging using only a few code lines.

Here’s why logging is essential in software development:

- Gain insights into errors and events, making it easier to find and fix issues

- Monitor applications and programs in real-time

- Alert developers to potential problems before they worsen

- Create a record of events and actions, which is crucial for regulatory compliance

- Provide data for analyzing and improving application performance

Understanding the importance of logging helps in implementing and managing Python-based programs. This article covers Python logging, its implementation, and best practices for effective logging.

Key Takeaways

- Python logging captures details about a Python-based program’s script and execution. This process helps developers spot errors and opportunities for quick action.

- With a robust logging system, programmers can improve applications. It also allows real-time event tracking, issue debugging, and performance monitoring.

- Since Python’s standard library already has a logging module, developers can add logging with only a few script lines.

- A basic Python logging setup helps track events during code execution, recording every event to track errors or opportunities.

- With advanced configurations, Python logging can enhance log management and analysis.

- Effective Python logging requires implementing the best practices for proper execution. These practices include solving challenges like handling large log files and ensuring data accuracy.

Understanding Python Logging

Logging in software development lets developers see what is happening inside their app while it is running. It records information about a program’s execution, creating log entries. These entries help developers understand the program’s behavior, identify issues, and monitor performance.

Effective use of logging is essential for development and debugging. Developers can keep track of performance metrics, execution flow, and errors. This process helps developers better understand the program and discover unexpected scenarios.

Here’s an example of how to create a log entry using the logging module:

import logging |
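As a minimal sketch of such a log entry, the snippet below emits one message at each standard level. The logger name `example_app` and the message texts are assumptions made for illustration.

```python
import logging

# Show every level from DEBUG upward; the default threshold is WARNING.
logging.basicConfig(level=logging.DEBUG)

# "example_app" is a hypothetical logger name chosen for this sketch.
logger = logging.getLogger("example_app")

logger.debug("Detailed diagnostic information")
logger.info("The application started as expected")
logger.warning("Something unexpected happened")
logger.error("An operation failed")
logger.critical("The application may be unable to continue")
```

Each call creates one log entry, and the method name determines the level recorded with it.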

Here’s a table summarizing the Python logging levels, their descriptions, and their numeric values:

| Log Level | Numeric Value | Description |
| --- | --- | --- |
| Debug | 10 | Detailed information that’s crucial to diagnostic processes |
| Info | 20 | Confirmation that things work as expected |
| Warning | 30 | Indication of an unexpected event that occurred or might occur |
| Error | 40 | Alert for a severe problem that can affect the system’s function |
| Critical | 50 | Alert for serious errors that can lead to system termination |

Logging is crucial for monitoring, debugging, and maintaining applications for several reasons:

- Monitoring: Logs allow continuous monitoring of an application’s health and performance. Developers and administrators can use them to find unusual behavior or performance issues.

- Debugging: Logs give detailed insights into an application’s flow and state, aiding in diagnosing and fixing bugs. Without them, debugging is like navigating in the dark.

- Maintenance: Everything your application does is recorded in logs. When something goes wrong, you can check the logs to find and fix the problem, helping to keep the application running smoothly.

- Security: Logs keep track of security events, assisting in identifying and responding to potential threats. They also provide evidence of unauthorized access attempts or suspicious activities.

- Compliance: Many industries require maintaining logs for legal or regulatory reasons. Proper logging records all necessary information and allows for audits when needed.

Setting Up Python Logging

Logging is essential for any application. It helps developers track events, debug issues, and monitor performance. Python’s built-in logging module provides a flexible framework for collecting log messages from Python programs. This module lets you create log messages and control the output and format of log messages. With proper logging setup, you can make your application easier to understand and fix.

Keep reading to learn how to set up Python logging.

1. Basic Configuration

Setting up basic logging in Python allows you to easily track events that happen while your code runs. Here’s how to configure basic logging:

python |
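A basic configuration might look like the following sketch. The format string, date format, and messages are illustrative choices, not the only valid ones.

```python
import logging

# Configure the root logger once, near program start-up.
logging.basicConfig(
    level=logging.INFO,  # ignore DEBUG messages
    format="%(asctime)s - %(name)s - %(levelname)s - %(message)s",
    datefmt="%Y-%m-%d %H:%M:%S",
    force=True,  # optional (Python 3.8+): replace handlers configured earlier
)

logging.info("Application started")
logging.debug("This message is filtered out at the INFO level")
logging.warning("Disk space is running low")
```

Passing `filename="app.log"` to `basicConfig()` would send the same messages to a file instead of the console.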

The following conceptual diagram illustrates the flow of log messages from the application to different outputs, such as the console or a log file.

    [ Application ]
           |
           v
      [ Logger ]
           |
           v
     [ Handlers ] --> [ Console / Stream ]
           |
           v
     [ Handlers ] --> [ File ]
           |
           v
     [ Handlers ] --> [ Email / HTTP ]

This flow shows how the logger receives messages from the application and passes them to its handlers. Each handler then directs the messages to its destination based on the configuration.

2. Advanced Configuration

For more complex applications, you might need advanced logging configurations, such as multiple handlers and formatters. This approach allows more flexibility in managing how and where log messages are output.

Here’s an example of an advanced logging configuration:

import logging |
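One way to sketch such a configuration is with two handlers that have different thresholds. The logger name and the log file location below are assumptions made for illustration.

```python
import logging
import os
import tempfile

# Hypothetical logger name and log file location, chosen for this sketch.
logger = logging.getLogger("advanced_example")
logger.setLevel(logging.DEBUG)  # let the handlers decide what to keep

formatter = logging.Formatter(
    "%(asctime)s - %(name)s - %(levelname)s - %(message)s"
)

# Console handler: WARNING and above.
console_handler = logging.StreamHandler()
console_handler.setLevel(logging.WARNING)
console_handler.setFormatter(formatter)

# File handler: ERROR and above.
log_path = os.path.join(tempfile.mkdtemp(), "advanced_example.log")
file_handler = logging.FileHandler(log_path)
file_handler.setLevel(logging.ERROR)
file_handler.setFormatter(formatter)

logger.addHandler(console_handler)
logger.addHandler(file_handler)

logger.info("Not shown anywhere: below both handler levels")
logger.warning("Shown on the console only")
logger.error("Shown on the console and written to the file")
```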

In this example, the logger is configured to handle messages with different levels of severity, outputting warnings to the console and errors to a file. This setup helps ensure that critical information is captured and appropriately directed to facilitate troubleshooting.

To better understand the variety of handlers available for logging in Python, here’s a table summarizing standard log handlers and their descriptions:

| Handler | Description |
| --- | --- |
| StreamHandler | Sends log messages to streams (e.g., console) |
| FileHandler | Writes log messages to a file |
| RotatingFileHandler | Writes log messages to a file with rotation |
| SMTPHandler | Sends log messages via email |
| HTTPHandler | Sends log messages to an HTTP server |

Using Advanced Logging Features

Advanced logging features help manage and analyze logs more effectively. With file-based logging and log rotation, you can organize and manage log files to keep your application running smoothly.

Keep reading for a detailed discussion on using these advanced logging features.

1. Logging Configuration from a File

You can set up logging using module and class functions or by creating a configuration file or dictionary. Load these with fileConfig() or dictConfig(). Using a config file keeps your logging setup organized and easy to adjust. This method is helpful if you need to change your logging configuration while running the application.

Here’s how to set it up with a configuration file:

- Create a configuration file named logging.conf:

[loggers] |

- Next, load this configuration in your Python code:

import logging |
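The two steps can be sketched together as follows. The file contents are a hypothetical example that follows the section layout `fileConfig()` expects. The sketch writes the file to a temporary directory only to stay self-contained.

```python
import logging
import logging.config
import os
import tempfile

# Hypothetical contents for logging.conf; the section and key names follow
# the format that logging.config.fileConfig() expects.
CONFIG_TEXT = """
[loggers]
keys=root

[handlers]
keys=consoleHandler

[formatters]
keys=simpleFormatter

[logger_root]
level=DEBUG
handlers=consoleHandler

[handler_consoleHandler]
class=StreamHandler
level=DEBUG
formatter=simpleFormatter
args=(sys.stdout,)

[formatter_simpleFormatter]
format=%(asctime)s - %(name)s - %(levelname)s - %(message)s
"""

# In a real project the file would sit next to the application code.
config_path = os.path.join(tempfile.mkdtemp(), "logging.conf")
with open(config_path, "w") as config_file:
    config_file.write(CONFIG_TEXT)

# Load the configuration; keep loggers created before this call enabled.
logging.config.fileConfig(config_path, disable_existing_loggers=False)

logger = logging.getLogger("config_example")  # hypothetical logger name
logger.debug("Logging configured from %s", config_path)
```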

2. Log Rotation

Log rotation is crucial to prevent log files from becoming too large and consuming too much disk space. You can set it up using the RotatingFileHandler. Here’s an example:

import logging |
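A rotation setup along these lines could be sketched as follows. The logger name, message text, and temporary directory are assumptions. The file name and limits match the description in this section.

```python
import logging
import os
import tempfile
from logging.handlers import RotatingFileHandler

# app.log is placed in a temporary directory to keep the sketch self-contained.
log_dir = tempfile.mkdtemp()
log_path = os.path.join(log_dir, "app.log")

logger = logging.getLogger("rotation_example")  # hypothetical logger name
logger.setLevel(logging.INFO)

# Rotate before app.log reaches 2000 bytes; keep at most 5 backups
# (app.log.1 through app.log.5).
handler = RotatingFileHandler(log_path, maxBytes=2000, backupCount=5)
handler.setFormatter(
    logging.Formatter("%(asctime)s - %(levelname)s - %(message)s")
)
logger.addHandler(handler)

# Write enough messages to trigger several rotations.
for i in range(500):
    logger.info("Log message number %d", i)

handler.close()
print(sorted(os.listdir(log_dir)))
```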

This log rotation setup ensures that app.log will rotate once it reaches 2000 bytes, keeping up to 5 backup files.

Best Practices for Python Logging

Effective logging is essential for robust Python applications since it provides valuable insights into your code’s behavior and performance. Further, it helps troubleshoot issues and monitor your application’s health.

By following Python logging best practices, you can create comprehensive and valuable logs. These practices make debugging easier and help keep your operations running smoothly.

Read on to learn the best practices for logging in Python, which make your system reliable and easy to maintain.

1. Consistent Log Format

One of the most essential best practices for log formatting is maintaining a consistent format throughout your logs. Using the same format every time makes logs easy to read and analyze. This practice helps quickly find and understand log messages and makes it easier to use log management tools.

To standardize Python logs, configure the logging module to include timestamps, log levels, module names, and message content. Here’s how you can set it up:

import logging |
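One possible way to apply a single format everywhere is to attach one formatted handler to the root logger, as in this sketch. The helper `configure_logging` and the logger names `orders` and `payments` are hypothetical.

```python
import logging
import sys

LOG_FORMAT = "%(asctime)s - %(levelname)s - %(name)s - %(message)s"
DATE_FORMAT = "%Y-%m-%d %H:%M:%S"


def configure_logging(stream=sys.stderr, level=logging.INFO):
    """Attach one handler with the shared format to the root logger."""
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter(LOG_FORMAT, datefmt=DATE_FORMAT))
    root = logging.getLogger()
    root.setLevel(level)
    root.addHandler(handler)
    return handler


configure_logging()

# Loggers in different modules share the format through the root handler.
logging.getLogger("orders").info("Order created")
logging.getLogger("payments").warning("Payment retry scheduled")
```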

This configuration ensures that every log entry contains the necessary details for effective debugging and monitoring. Here’s a breakdown of the format components to help you understand their significance:

- %(asctime)s: Timestamp when the log entry was created

- %(levelname)s: Level of the message

- %(name)s: Name of the logger that generated the message

- %(message)s: The actual log message

2. Logging Levels Management

Log levels denote the severity or importance of log messages. In Python, these are DEBUG, INFO, WARNING, ERROR, and CRITICAL. Choosing the right logging level helps reduce unnecessary or redundant logs that can slow down your backend or consume excessive disk space.

Proper logging management is essential to capture helpful information without filling log files with too much data. By setting different logging levels, you can control the details of the logs, ensuring you gather essential data while avoiding unnecessary clutter.

Each logging level serves a specific purpose and helps capture the correct information. Here are the typical logging levels in Python:

- DEBUG: This level captures detailed, valuable information for diagnosing problems. It includes extensive information about the application’s state and is generally used during development or troubleshooting specific issues.

- INFO: This level provides general information about the application’s normal operations. It helps track the application’s flow without the granularity of debug logs.

- WARNING: This level indicates potential issues that are not immediately harmful but may require attention. It helps identify problems before they escalate. Other logging frameworks often call this level WARN.

- ERROR: This level logs errors that prevent an operation from completing, although the application may be able to continue running. It is crucial for identifying issues that need immediate attention.

- CRITICAL: This level captures severe error events that can lead to the application’s termination. It helps post-mortem analysis to understand why the application failed. Other logging frameworks often call this level FATAL.

To effectively manage logging levels, it’s essential to adjust them according to the environment in which your application is running. Here are some guidelines:

Development Environment

Enable detailed logging to capture extensive information about the application’s state and behavior. Detailed logs help developers identify and fix issues quickly.

logger.setLevel(logging.DEBUG)

Testing/Staging Environment

Reduce the verbosity to capture essential information about the application’s operations while providing enough context for testing.

logger.setLevel(logging.INFO)

Production Environment

Limit logging to warnings and errors to minimize log file size and focus on critical issues that affect application performance and stability.

logger.setLevel(logging.WARNING)

Here’s an example using a configuration file:

import logging |
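One way to tie the level to the environment is to choose it at start-up. The sketch below uses a configuration dictionary passed to `dictConfig()` rather than a file. The environment variable `APP_ENV`, the `LEVEL_BY_ENV` mapping, and the `build_config` helper are assumptions made for illustration.

```python
import logging
import logging.config
import os

# Hypothetical mapping from environment name to log level.
LEVEL_BY_ENV = {
    "development": "DEBUG",
    "staging": "INFO",
    "production": "WARNING",
}


def build_config(env):
    """Return a dictConfig dictionary for the given environment name."""
    level = LEVEL_BY_ENV.get(env, "INFO")  # fall back to INFO if unknown
    return {
        "version": 1,
        "disable_existing_loggers": False,
        "formatters": {
            "standard": {
                "format": "%(asctime)s - %(name)s - %(levelname)s - %(message)s"
            },
        },
        "handlers": {
            "console": {
                "class": "logging.StreamHandler",
                "formatter": "standard",
                "level": level,
            },
        },
        "root": {"handlers": ["console"], "level": level},
    }


# APP_ENV is an assumed variable name; default to development when unset.
env = os.environ.get("APP_ENV", "development")
logging.config.dictConfig(build_config(env))

logger = logging.getLogger("env_example")  # hypothetical logger name
logger.warning("Logging configured for the %s environment", env)
```

Keeping the mapping in one place means the application code never needs to call `setLevel()` differently per environment.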

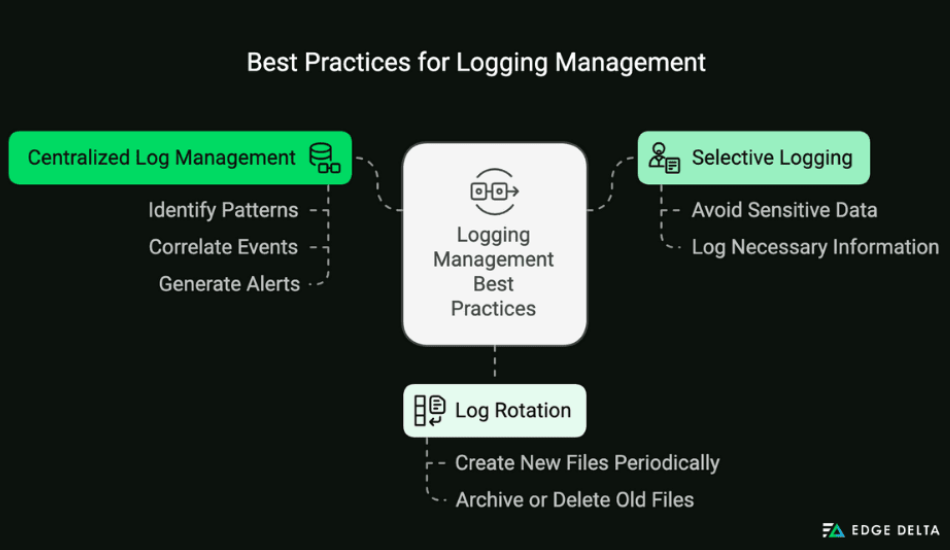

Here are some of the best practices for managing logging levels:

- Selective Logging: Log only the necessary information at each level. Avoid logging sensitive data and excessive details that do not add value.

- Log Rotation: Implement log rotation to manage log file size and prevent storage issues. This practice involves creating new log files periodically and archiving or deleting old ones.

- Centralized Log Management: Use centralized log management solutions to aggregate and analyze logs from multiple sources. These solutions help identify patterns, correlate events, and generate alerts.

3. Avoiding Sensitive Information in Logs

Log data frequently contains critical information about your applications, infrastructure, and databases. Logging sensitive information can pose significant security and compliance risks. Understanding why sensitive information should not be in logs is critical for implementing effective log management strategies.

Here’s why it’s essential to avoid logging sensitive data and how to achieve it:

- Compliance: Privacy regulations require organizations to handle personal data with care. Logging sensitive data can complicate compliance with these rules, making it harder to control and delete the data when needed.

- Security: Logs are often targets for data breaches. If sensitive data is logged, it can be exposed during an attack, leading to severe consequences such as identity theft or financial loss.

Knowing what types of data should be excluded from your logs is vital to protect sensitive information. Here’s a table summarizing sensitive information to avoid in logs:

| Information Type | Description |
| --- | --- |
| Passwords | Do not log user passwords. Passwords are highly sensitive and should always be kept secure and confidential. Exposure can lead to unauthorized access. |
| Personal Data | Avoid logging personal information like Social Security Numbers (SSNs), addresses, or phone numbers. This type of data can be used for identity theft and fraud. |
| API Keys | Do not log API keys or tokens. API keys grant access to services and should be protected to prevent unauthorized usage. |
| Credit Card Numbers | Avoid logging credit card numbers. Credit card details are critical financial information that must be protected to prevent fraud. |

To effectively avoid logging sensitive data, follow the best practices below, each of which addresses a specific aspect of log management:

Encrypt Data in Transit and at Rest

The first step in protecting sensitive information is encryption. Ensure that all data, especially sensitive information, is encrypted while being transmitted and stored. This way, even if logs are accessed, the data remains protected.

Isolate Sensitive Data

Isolating sensitive data can significantly reduce the risk of accidental exposure. Use a data privacy vault to store sensitive information separately from your application logs. This approach ensures sensitive data is not included in logs, backups, or database dumps.

Tokenize Sensitive Data

Tokenization offers a practical solution for including references to sensitive data without logging it directly. Replace sensitive data with tokens before logging. This practice allows you to keep a reference to the data without exposing it directly in the logs. If necessary, tokens can be converted back to the original data (detokenization).

Keep Sensitive Data Out of URLs

Avoid placing sensitive information in URLs, as web servers often log them. Instead, use unique identifiers, like UUIDs, to represent sensitive data.

Mask or Redact Sensitive Data

Masking or redacting sensitive data is essential in cases where some information is needed. Data masking hides parts of the data, such as showing only the last four digits of a credit card number. Redaction completely removes sensitive information from logs.
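Masking can be sketched with a `logging.Filter` that rewrites each message before it is emitted. The pattern below is a deliberately simplified assumption that only recognizes 16-digit numbers. The names `CARD_PATTERN`, `mask`, and `MaskCardNumbers` are hypothetical.

```python
import logging
import re

# Simplified pattern for 16-digit card numbers, optionally separated by
# spaces or hyphens. Real card detection is more involved.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){12}(\d{4})\b")


def mask(text):
    """Replace card-like numbers with asterisks plus the last four digits."""
    return CARD_PATTERN.sub(r"************\1", text)


class MaskCardNumbers(logging.Filter):
    """Mask card-like numbers in every record that passes through."""

    def filter(self, record):
        # Render the final message first so that values passed as
        # arguments are masked too, then clear the arguments.
        record.msg = mask(record.getMessage())
        record.args = ()
        return True  # keep the record


handler = logging.StreamHandler()
handler.addFilter(MaskCardNumbers())

logger = logging.getLogger("checkout")  # hypothetical logger name
logger.setLevel(logging.INFO)
logger.addHandler(handler)

# Only the last four digits of the number reach the output.
logger.info("Charging card %s", "4111 1111 1111 1111")
```

Attaching the filter to the handler, rather than to one logger, applies it to every record that handler emits.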

Conduct Code Reviews

Regular code reviews help catch potential issues early. During code reviews, check for logging statements that might include sensitive data. Use pull request templates to ensure this step is not overlooked.

Implement Structured Logging

Structured logging, using formats like JSON, can simplify the identification and exclusion of sensitive data. It organizes log data into key/value pairs, making it easier to identify and filter out sensitive information.
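A minimal sketch of structured logging with the standard library is a custom formatter that emits one JSON object per line and drops known sensitive keys. The `context` attribute, the `SENSITIVE_KEYS` set, and the `JsonFormatter` class are assumptions made for illustration.

```python
import json
import logging

# Hypothetical list of keys that must never reach the logs.
SENSITIVE_KEYS = {"password", "api_key", "card_number"}


class JsonFormatter(logging.Formatter):
    """Format each record as one JSON object per line."""

    def format(self, record):
        payload = {
            "timestamp": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # "context" is a hypothetical attribute supplied through `extra`.
        context = getattr(record, "context", None) or {}
        safe = {k: v for k, v in context.items() if k not in SENSITIVE_KEYS}
        if safe:
            payload["context"] = safe
        return json.dumps(payload)


handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())

logger = logging.getLogger("structured_example")  # hypothetical logger name
logger.setLevel(logging.INFO)
logger.addHandler(handler)

# The password key is dropped before the entry is written.
logger.info(
    "User logged in",
    extra={"context": {"user_id": 42, "password": "secret"}},
)
```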

Set Up Automated Alerts

Automated alerts provide an additional layer of security. Deploy automated tools to scan logs for sensitive information and alert the team if any is found. This method helps quickly address any issues that might arise.

4. Using Contextual Information

Contextual logging adds extra “context” data to log entries to provide meaningful information about application events. This approach enhances traditional logging by embedding relevant metadata within log entries.

Enhancing log messages with contextual information dramatically improves the debugging process. Here are the key benefits:

- Improved Clarity and Detail: Contextual logs provide additional details, such as user IDs, session IDs, and transaction IDs.

- Easier Event Correlation: Contextual data helps correlate related log entries. For example, a transaction ID links all messages related to a specific transaction. It aids in tracing actions and understanding the application’s behavior.

- Enhanced Error Analysis: Contextual logs give a detailed picture of errors. They reveal patterns and pinpoint root causes. For instance, knowing which user actions led to an error can identify specific problematic features or inputs.

- Reduced Debugging Time: Contextual information allows developers to find the cause of an issue quickly. Contextual logging minimizes the need to sift through unrelated log entries, speeding up debugging and reducing downtime.

- Better User Tracking: Contextual logs track individual user interactions. This practice helps debug user-specific issues. For example, a session ID can find all related log entries for a user-reported problem.

- Context Preservation in Multi-Threaded Environments: In multi-threaded applications, contextual logging includes information specific to each thread. This contextual logging maintains clarity and prevents confusion when multiple users or processes generate logs simultaneously.

- Facilitation of Automated Monitoring and Alerts: Contextual information enables precise monitoring and alerting. Alerts can trigger on specific data, such as the number of errors for a particular user or session. This information helps detect and address issues proactively.

- Enhanced Performance Analysis: Including context like response times and load details helps identify performance bottlenecks. This data is crucial for optimizing performance and ensuring a smooth user experience.

Here’s a simple example of how to implement contextual logging in Python using the logging module:

import logging |
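One way to sketch contextual logging is with `logging.LoggerAdapter`, which attaches the same extra fields to every record. The field names `user_id` and `session_id`, the helper `logger_for`, and the sample values are assumptions made for illustration.

```python
import logging

# The format references two custom fields, user_id and session_id, which
# are hypothetical names chosen for this sketch.
formatter = logging.Formatter(
    "%(asctime)s - %(levelname)s - "
    "user=%(user_id)s session=%(session_id)s - %(message)s"
)
handler = logging.StreamHandler()
handler.setFormatter(formatter)

base_logger = logging.getLogger("context_example")
base_logger.setLevel(logging.INFO)
base_logger.addHandler(handler)
base_logger.propagate = False  # avoid duplicate output through the root logger


def logger_for(user_id, session_id):
    """Return an adapter that adds the given context to every record."""
    return logging.LoggerAdapter(
        base_logger, {"user_id": user_id, "session_id": session_id}
    )


request_logger = logger_for(user_id="u-123", session_id="s-456")
request_logger.info("Profile page requested")
request_logger.error("Failed to load profile picture")
```

Because the context lives in the adapter, individual log calls stay short while every entry for the request carries the same identifiers.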

5. Regular Log Monitoring and Alerts

Monitoring logs regularly and setting up alerts for critical issues are crucial for maintaining the health of your applications. Logs provide a detailed record of application activities, errors, and other significant events. By monitoring these logs consistently, you can gain the following:

- Early Detection of Issues: Regular log checks help spot issues before they become big problems.

- Performance Optimization: By identifying log patterns, you can fix issues before they impact users.

- Security Monitoring: Monitoring logs helps detect unusual activities, enhancing security.

- Easier Debugging: Detailed logs make troubleshooting simpler when problems arise.

- Compliance and Auditing: Regular log monitoring ensures you meet industry regulations and are ready for audits.

Setting up alerts for critical issues is an essential aspect of log monitoring. Alerts give you a heads-up about any significant problems that require immediate attention. Here are the best practices for setting up alerts:

- Identify Critical Events: Determine which log events are critical for your application. These might include system errors, failed transactions, security breaches, or performance degradation. Further, define what constitutes a critical event to avoid alert fatigue.

- Use Thresholds: Set thresholds for different metrics to trigger alerts. For example, you might want to receive an alert if the error rate exceeds a certain percentage or if response times exceed acceptable limits.

- Integrate with Notification Systems: Integrate your logging system with notification services such as email, SMS, Slack, or other messaging platforms. This practice ensures that alerts reach the appropriate team members in real-time.

- Automated Responses: Where possible, configure automated responses to specific alerts. For example, restart a service if it crashes or scale up resources if the load exceeds a specific threshold.

- Regular Review and Tuning: Review and fine-tune your alerting rules regularly. As your application evolves, the nature and thresholds of critical events may change. Continuous tuning ensures that your alerts remain relevant and practical.

Here’s a basic example of implementing log monitoring and alerts in Python using the logging module with email notifications:

import logging |
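Email alerts can be sketched with `SMTPHandler` from `logging.handlers`. Every host name, address, and the `SMTP_PASSWORD` environment variable below is a placeholder. The call that would send an email is commented out so the sketch runs without a mail server.

```python
import logging
import os
from logging.handlers import SMTPHandler

# All host names, addresses, and credentials below are placeholders.
smtp_handler = SMTPHandler(
    mailhost=("smtp.example.com", 587),
    fromaddr="alerts@example.com",
    toaddrs=["oncall@example.com"],
    subject="Application error alert",
    # Read the password from the environment instead of hard-coding it.
    credentials=("alerts@example.com", os.environ.get("SMTP_PASSWORD", "")),
    secure=(),  # empty tuple: use STARTTLS without a client certificate
)
smtp_handler.setLevel(logging.ERROR)  # only email ERROR and CRITICAL
smtp_handler.setFormatter(
    logging.Formatter("%(asctime)s - %(name)s - %(levelname)s - %(message)s")
)

logger = logging.getLogger("alerting_example")  # hypothetical logger name
logger.setLevel(logging.INFO)
logger.addHandler(logging.StreamHandler())  # INFO and above go to the console
logger.addHandler(smtp_handler)  # ERROR and above also trigger an email

logger.info("Service started")  # console only
# logger.error("Database connection lost")  # would attempt to send an email
```

Because `SMTPHandler` sends one email per record, a threshold of ERROR or higher helps avoid flooding the inbox.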

Common Challenges and Solutions

Log data is crucial for maintaining application performance, security, and reliability. However, handling log files presents several challenges, especially as applications scale and generate larger volumes of data.

Read on to learn common challenges and practical solutions for Python logging.

1. Handling Large Log Files

One of the main challenges in log management is handling large log files. As applications grow, the amount of log data can become overwhelming. This large volume of data makes it hard to store, process, and analyze logs effectively. Large log files can slow down the system, use too much disk space, and make it harder to find issues quickly.

Here are some potential solutions to address large log files:

Rotating Log Files

Implementing log rotation helps manage large log files by creating a new log file when a specific size limit is reached. This practice prevents any single log file from becoming too large.

Implement log rotation in Python by using the RotatingFileHandler from the logging.handlers module. This handler automatically rotates logs when they reach a specified size, keeping them manageable.

from logging.handlers import RotatingFileHandler |

Log Compression

Compressing old log files can save disk space while keeping historical data accessible. This process reduces file sizes, freeing up storage without losing important information. It is beneficial for long-term data storage.
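Compression can be combined with rotation through the `namer` and `rotator` attributes of the rotating handlers. In this sketch, the helper names, file name, size limit, and backup count are illustrative assumptions.

```python
import gzip
import logging
import os
import shutil
import tempfile
from logging.handlers import RotatingFileHandler


def gzip_namer(default_name):
    """Give rotated files a .gz suffix, for example app.log.1.gz."""
    return default_name + ".gz"


def gzip_rotator(source, dest):
    """Compress the just-closed log file into dest, then delete the original."""
    with open(source, "rb") as f_in, gzip.open(dest, "wb") as f_out:
        shutil.copyfileobj(f_in, f_out)
    os.remove(source)


log_dir = tempfile.mkdtemp()  # temporary directory keeps the sketch self-contained
handler = RotatingFileHandler(
    os.path.join(log_dir, "app.log"), maxBytes=2000, backupCount=3
)
handler.namer = gzip_namer
handler.rotator = gzip_rotator

logger = logging.getLogger("compression_example")  # hypothetical logger name
logger.setLevel(logging.INFO)
logger.addHandler(handler)

# Write enough messages to trigger several rotations.
for i in range(500):
    logger.info("Log message number %d", i)

handler.close()
print(sorted(os.listdir(log_dir)))
```

Only the active file stays uncompressed. Each backup is compressed once, at the moment it is rotated out.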

Centralized Log Management

Centralized log management systems collect logs from various sources in one place. This process makes it easier to manage and analyze large amounts of data.

You can quickly search and filter logs, identify patterns, and generate reports. This approach helps you find issues across different applications and systems more efficiently.

2. Ensuring Log Data Accuracy

Accurate log data is crucial for effective monitoring and troubleshooting. It offers a clear timeline of events during system issues, helping with root cause analysis and prevention strategies. Accurate log data allows for tracking performance metrics like response times and resource usage, which aids in optimization and capacity planning.

On the other hand, inaccurate or inconsistent logs can cause incorrect issue diagnoses, delayed responses, and more significant problems in the application. Here are methods to ensure log data accuracy:

| Method | Description |
| --- | --- |
| Time Synchronization | Ensuring server time is synchronized across all systems to maintain consistent log timestamps |
| Log Format Consistency | Using consistent log formats across applications to simplify log parsing and analysis |
| Error Handling | Implementing robust error handling to ensure logs capture all relevant information without omissions |
| Validation Mechanisms | Using validation mechanisms to check the integrity and accuracy of log entries |

Conclusion

Logging is essential for maintaining and debugging applications. Combining a sound Python logging setup with advanced configurations and best practices simplifies debugging and improves reliability. Effective Python logging also enhances system observability, which makes troubleshooting easier and helps systems run smoothly.

FAQs on Python Logging

What is the purpose of logging in Python?

Logging in Python records events that happen during a program’s execution, which helps with debugging, monitoring, and troubleshooting. It provides a way to output messages that can be used to track the program’s flow and diagnose issues.

How do you manage logging in Python?

The `logging` module manages logging in Python. It allows you to configure loggers and handlers and control the logging behavior. You can set log levels to determine the severity of messages to be logged and specify output destinations, such as the console or log files.

Why use Python logging instead of print?

The logging module offers flexible message logging to multiple outputs, such as consoles, files, and network destinations. Unlike print, which simply writes to standard output, logging lets you filter messages by severity, attach timestamps and other context, and change the output destination without editing every call.

What are the logging standards in Python?

Python’s logging standards involve using the `logging` module with levels like `DEBUG`, `INFO`, `WARNING`, `ERROR`, and `CRITICAL`. Key components include loggers (`logging.getLogger(name)`), handlers (e.g., `FileHandler`, `StreamHandler`), and formatters to customize log message formats. Configuration can be done through basic setups, configuration dictionaries, or files.
