CI/CD automation testing is the practice of embedding automated validation directly into continuous integration and continuous delivery pipelines. These tests are not run in isolation or on the side. They execute within the workflow that determines whether code progresses or is blocked.

Most modern teams already run automated tests, but that alone does not mean they are practicing CI/CD automation testing effectively. Pipelines can contain numerous checks and still feel slow, noisy, or unreliable. Failures may halt releases without clearly signaling risk. Engineers rerun jobs, ignore results, or bypass automation altogether.

This article explains CI/CD automation testing from a systems perspective. It defines the concept, examines how it behaves in real pipelines, and explores how teams design automation that supports delivery rather than obstructing it. The focus is not on tools or frameworks, but on architecture, intent, and operational behavior.

Key Takeaways

- CI/CD automation testing is about controlling flow. Tests matter because they decide whether a change moves forward, pauses, or stops.

- Signal quality beats quantity. A few fast, deterministic tests are more effective than many unreliable ones.

- CI and CD serve different goals. CI focuses on protecting integration with quick feedback, while CD focuses on release confidence.

- Test results are signals, not absolute truth. Pipelines are binary, but the risks they represent are not.

- Test placement shapes behavior. The same test can be helpful early and harmful late in the pipeline.

- Flaky and ambiguous failures erode trust. When signals are unclear, engineers rerun or bypass gates.

- Effective CI/CD automation testing is architectural. It depends on intent, ownership, and observability, not just tools.

What Is CI/CD Automation Testing?

CI/CD automation testing refers to automated checks that run as an integral part of the delivery pipeline. These checks are not advisory. Their outcomes directly determine whether a change progresses, pauses, or is stopped.

This is what distinguishes CI/CD automation testing from traditional test automation. Traditional tests can exist without affecting delivery. CI/CD automation tests operate on the critical path, where their results shape flow, timing, and risk.

Because of this placement, even simple tests can have an outsized impact. Test sophistication matters less than where and how a test influences delivery decisions.
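As a minimal sketch of that critical-path role, the function below collapses per-check outcomes into one of the three pipeline decisions named above. The outcome strings (`"pass"`, `"fail"`, `"pending"`), the `Decision` enum, and the `gate` function are hypothetical illustrations, not the API of any particular CI system.

```python
from enum import Enum


class Decision(Enum):
    PROCEED = "proceed"  # change moves forward
    PAUSE = "pause"      # waiting on checks that have not reported yet
    STOP = "stop"        # at least one check failed


def gate(outcomes: dict[str, str]) -> Decision:
    """Collapse per-check outcomes ("pass", "fail", "pending") into one decision."""
    unknown = set(outcomes.values()) - {"pass", "fail", "pending"}
    if unknown:
        raise ValueError(f"unrecognized outcomes: {sorted(unknown)}")
    if "fail" in outcomes.values():
        return Decision.STOP
    if "pending" in outcomes.values():
        return Decision.PAUSE
    return Decision.PROCEED


print(gate({"unit": "pass", "lint": "pass"}))            # Decision.PROCEED
print(gate({"unit": "pass", "integration": "pending"}))  # Decision.PAUSE
print(gate({"unit": "fail", "integration": "pending"}))  # Decision.STOP
```

Note that a failure wins over a pending check: once any gate has failed, there is no reason to wait for the rest before stopping.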

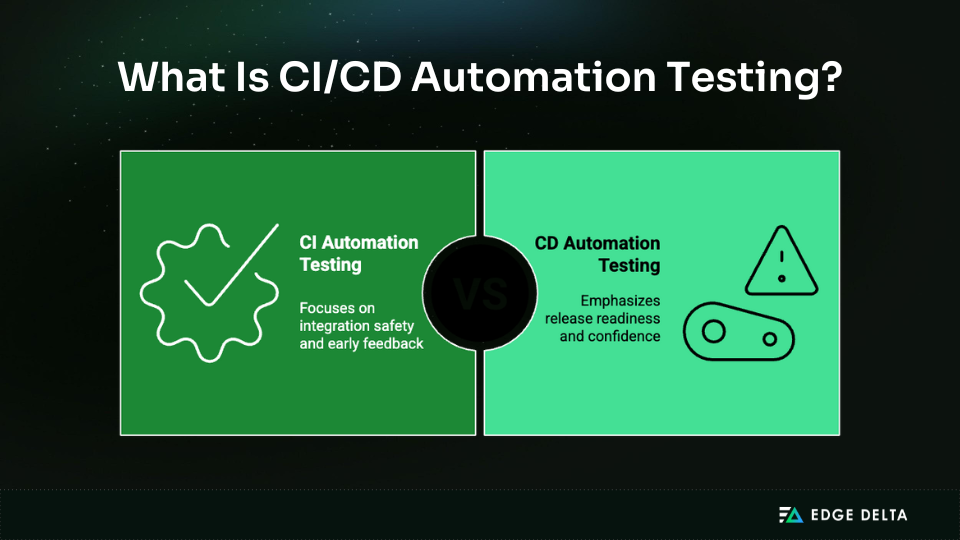

CI Automation Testing

In continuous integration, automation testing exists to protect integration boundaries. Tests run immediately after code is merged or proposed for merge, when changes are small and the context is still fresh.

CI tests are intentionally constrained. They optimize for speed and determinism. Their purpose is not to prove correctness, but to detect when a change violates assumptions that other parts of the system depend on.

Failures at this stage are expected. A failing CI test is not an incident; it is early feedback that prevents more expensive failures later.
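One way to express "fast, deterministic, and expected to fail early" is a fail-fast stage runner: checks run in order, and the first failure ends the stage and names the assumption that was violated. This is a sketch under stated assumptions; `run_ci_stage` and the callable checks are hypothetical stand-ins for real test commands.

```python
from typing import Callable, Optional

Check = Callable[[], bool]


def run_ci_stage(checks: list[tuple[str, Check]]) -> tuple[bool, Optional[str]]:
    """Run cheap checks in order; stop at the first failure and report its name."""
    for name, check in checks:
        if not check():
            return False, name  # fail fast: later checks never run
    return True, None


calls = []


def make_check(name: str, result: bool) -> Check:
    """Build a fake check that records when it runs (illustration only)."""
    def check() -> bool:
        calls.append(name)
        return result
    return check


ok, failed = run_ci_stage([
    ("lint", make_check("lint", True)),
    ("unit", make_check("unit", False)),
    ("contract", make_check("contract", True)),
])
print(ok, failed, calls)  # False unit ['lint', 'unit']
```

Stopping at the first failure keeps feedback short and points the author at one concrete problem instead of a cascade of downstream errors.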

CD Automation Testing

Continuous delivery introduces different concerns. Here, automation testing shifts from integration safety to release readiness.

Tests that run closer to production carry more authority. A failure may block promotion entirely, not because the system is definitely broken, but because confidence is insufficient to proceed safely.

This distinction explains why the same test behaves differently depending on where it runs. Noise tolerated in CI becomes an unacceptable risk in CD. Pipelines that ignore this difference tend to become brittle and slow.
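A small policy table makes this stage-dependent interpretation concrete. The sketch assumes a hypothetical third outcome, `"inconclusive"` (for example, a timeout or an unavailable test environment). CI treats an inconclusive result as a warning, while CD treats it as a reason to hold promotion because confidence is insufficient. The stage names and the policy itself are illustrative assumptions, not a recommendation for every pipeline.

```python
# Hypothetical policy: how each stage treats each outcome.
# "inconclusive" means the check could not produce a clear pass/fail signal.
STAGE_POLICY = {
    "ci": {"pass": "proceed", "fail": "block", "inconclusive": "warn"},
    "cd": {"pass": "proceed", "fail": "block", "inconclusive": "block"},
}


def interpret(stage: str, outcome: str) -> str:
    """Return 'proceed', 'warn', or 'block' for an outcome at a given stage."""
    try:
        return STAGE_POLICY[stage][outcome]
    except KeyError:
        raise ValueError(f"no policy for stage={stage!r}, outcome={outcome!r}") from None


print(interpret("ci", "inconclusive"))  # warn
print(interpret("cd", "inconclusive"))  # block
```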

CI/CD Automation Testing and Continuous Testing

CI/CD automation testing is often discussed alongside continuous testing, but the two are not interchangeable.

Continuous testing refers to the practice of validating software continuously throughout the development lifecycle. CI/CD automation testing is a subset of that practice. It focuses specifically on tests that influence delivery decisions inside pipelines.

Not every automated test is a CI/CD automation test. A test becomes part of CI/CD automation testing only when its result governs whether the change can move forward. This distinction matters because it determines how much authority a test carries and how carefully it must be designed.
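The distinction between advisory and governing checks can be encoded directly. In the sketch below, which uses a hypothetical `CheckResult` type with a `gating` flag, only failing gating checks block the change. Failing advisory checks are still reported, but they carry no authority over progression.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CheckResult:
    name: str
    passed: bool
    gating: bool  # True only if this check's result governs progression


def evaluate(results: list[CheckResult]) -> tuple[bool, list[str], list[str]]:
    """Return (may_progress, blocking_failures, advisory_failures)."""
    blocking = [r.name for r in results if r.gating and not r.passed]
    advisory = [r.name for r in results if not r.gating and not r.passed]
    return not blocking, blocking, advisory


results = [
    CheckResult("unit", passed=True, gating=True),
    CheckResult("contract", passed=False, gating=True),
    CheckResult("visual-diff", passed=False, gating=False),
]
print(evaluate(results))  # (False, ['contract'], ['visual-diff'])
```

Making the `gating` flag explicit forces a deliberate choice about which checks earn authority, which is where the design care described above is concentrated.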

Why CI/CD Automation Testing Matters

CI/CD automation testing matters because it is one of the few scalable ways to balance delivery speed with operational safety. As deployment frequency increases, manual review becomes slower and less reliable. The system needs a way to enforce expectations consistently, even under pressure.

Automation embedded in pipelines allows teams to move faster without increasing cognitive load. Decisions happen continuously rather than being deferred to high-stress release moments. When designed well, this reduces risk rather than concentrating it.

Adoption alone, however, is not enough. Many organizations technically practice CI/CD while still struggling with delivery performance. The gap is rarely tooling; more often, it is signal quality.

CI/CD Automation Testing as Decision Infrastructure

At scale, CI/CD automation testing functions as decision infrastructure. Pipelines continuously answer questions such as whether a change can be merged, whether it can be promoted, or whether it should be held back.

Automated tests supply the evidence that those decisions rely on. When the evidence is consistent and meaningful, delivery flows smoothly.

When it is noisy or ambiguous, teams compensate by rerunning jobs, adding retries, or bypassing checks altogether. This behavior is not a discipline problem, but a predictable response to low-quality signals.

How CI/CD Automation Testing Works in Practice

In practice, CI/CD automation testing works by producing signals that control pipeline flow. A passing result allows progression. A failing result stops movement until uncertainty is resolved.

The critical nuance is that test outcomes are signals, not proof. A pass does not guarantee correctness, and a failure does not always indicate a defect. The value of automation lies in how strongly failures correlate with real risk.

How Pipelines Interpret Test Outcomes

Pipeline logic is binary, but the reality it represents is probabilistic. A failure may reflect a regression, a test defect, or an environmental issue.

High-performing pipelines are designed so failures are interpretable. When a gate fails, engineers should be able to quickly understand what kind of risk is being signaled and what response is appropriate.
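Interpretability can start with a coarse triage of each failure into the three causes listed above. The heuristics below (matching a few infrastructure error strings, and treating a pass on an identical rerun as evidence of a test defect) are illustrative assumptions, not a standard classification scheme; a real pipeline would tune them against its own failure history.

```python
from enum import Enum


class FailureKind(Enum):
    ENVIRONMENT = "environment"  # infrastructure or dependency problem
    TEST_DEFECT = "test_defect"  # nondeterministic or broken test
    REGRESSION = "regression"    # likely caused by the change itself


# Hypothetical markers of infrastructure trouble; tune per pipeline.
ENV_MARKERS = ("connection refused", "timed out", "no space left on device")


def classify(error_text: str, passed_on_identical_rerun: bool) -> FailureKind:
    """Rough first-pass triage of a single failure."""
    text = error_text.lower()
    if any(marker in text for marker in ENV_MARKERS):
        return FailureKind.ENVIRONMENT
    if passed_on_identical_rerun:
        # Same code, different result: the signal itself is unreliable.
        return FailureKind.TEST_DEFECT
    return FailureKind.REGRESSION


print(classify("Error: Connection refused by db:5432", False))   # FailureKind.ENVIRONMENT
print(classify("AssertionError: expected 200, got 500", True))   # FailureKind.TEST_DEFECT
print(classify("AssertionError: expected 200, got 500", False))  # FailureKind.REGRESSION
```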

Where Tests Typically Run in CI/CD Pipelines

Most pipelines concentrate automated testing in two zones:

- Early integration stages, where fast feedback prevents broken changes from spreading

- Pre-release stages, where higher-confidence validation supports promotion decisions

The same test can be appropriate in one zone and harmful in the other. Placement determines cost, urgency, and interpretation.

It helps to think about placement in economic terms. Pipelines consume time, compute, and attention, but the most constrained resource is usually engineers' trust. Once that trust is exhausted, automation loses its authority.

Test Categories as Pipeline Signals (Conceptual)

Many teams talk about test categories such as unit, integration, regression, performance, or security testing. In CI/CD automation testing, these categories matter less as labels and more as signal types.

Each category produces a different kind of signal:

- Some detect local correctness issues quickly

- Others surface cross-system assumptions

- Some indicate long-term operational or security risk

The mistake is treating all categories as equally authoritative at all pipeline stages. Effective pipelines use different signal types at different points, based on the decision being made. This framing keeps the system focused on risk rather than taxonomy.
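The idea that each signal type carries different authority at different stages can be written as a lookup table. The signal types, the two stage names (mirroring the early-integration and pre-release zones described earlier), and every entry in the table are illustrative assumptions.

```python
# Hypothetical authority matrix: (signal type, stage) -> how the result is used.
#   "block"    = failure stops the pipeline
#   "advisory" = failure is reported but does not stop the pipeline
#   "skip"     = the check does not run at this stage
AUTHORITY = {
    ("unit", "early"): "block",
    ("integration", "early"): "block",
    ("dependency_scan", "early"): "advisory",
    ("integration", "pre_release"): "block",
    ("performance", "pre_release"): "block",
    ("dependency_scan", "pre_release"): "block",
}


def authority(signal_type: str, stage: str) -> str:
    """Anything not listed explicitly does not run at that stage."""
    return AUTHORITY.get((signal_type, stage), "skip")


def checks_for(stage: str) -> dict[str, str]:
    """List the signal types that run at a stage and their authority."""
    return {sig: auth for (sig, st), auth in AUTHORITY.items() if st == stage}


print(authority("performance", "early"))  # skip
print(checks_for("pre_release"))
```

Keeping the matrix in one place makes the authority of each signal reviewable, rather than implied by wherever a job happens to be defined.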

How to Do CI/CD Automation Testing Effectively

“How to do it” does not mean adding more tests. It means designing a system that produces reliable signals at the right points in the delivery flow.

Effective CI/CD automation testing depends on clear intent, deterministic behavior, and intentional distribution of validation cost.

1. Start With Pipeline Intent

Each pipeline stage exists to answer a specific question. Continuous integration protects shared history. Continuous delivery protects users and production systems.

Automation should be selected based on the decision each stage is responsible for making. When intent is unclear, pipelines accumulate checks without purpose. Failures become confusing rather than informative.
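Stage intent can be made explicit by having each stage declare the decision it exists to make and each check declare the decision it informs. The sketch below, built on a hypothetical `Stage` type and decision labels (`"merge"`, `"promote"`), flags checks whose declared purpose does not match their stage. This is one way to notice checks that have accumulated without purpose.

```python
from dataclasses import dataclass, field


@dataclass
class Stage:
    name: str
    decision: str  # the question this stage answers, e.g. "merge" or "promote"
    checks: dict[str, str] = field(default_factory=dict)  # check name -> decision it informs


def misplaced_checks(stage: Stage) -> list[str]:
    """Return checks that inform a different decision than the stage makes."""
    return sorted(name for name, informs in stage.checks.items() if informs != stage.decision)


ci = Stage("ci", decision="merge", checks={
    "unit": "merge",
    "api-contract": "merge",
    "load-test": "promote",  # belongs closer to release
})
print(misplaced_checks(ci))  # ['load-test']
```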

2. Optimize for Determinism Before Coverage

In CI/CD automation testing, reliability matters more than breadth. A small, deterministic test suite provides more value than a large, flaky one.

Flaky tests break the link between failure and risk. Once engineers stop trusting failures, reruns become routine and gates lose authority. In this sense, determinism is not just a quality concern; it is a governance requirement.
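Determinism can also be measured rather than assumed. A minimal sketch, assuming run history is available as hypothetical `(test, commit, passed)` tuples: any test that both passed and failed on the same commit is producing a signal that does not depend only on the code under test.

```python
from collections import defaultdict
from typing import Iterable


def find_flaky(runs: Iterable[tuple[str, str, bool]]) -> list[str]:
    """Return tests that produced both a pass and a fail for the same commit."""
    outcomes = defaultdict(set)  # (test, commit) -> set of observed results
    for test, commit, passed in runs:
        outcomes[(test, commit)].add(passed)
    return sorted({test for (test, _commit), seen in outcomes.items() if len(seen) > 1})


history = [
    ("test_login", "a1b2c3", True),
    ("test_login", "a1b2c3", False),    # same commit, different result
    ("test_checkout", "a1b2c3", False),
    ("test_checkout", "d4e5f6", True),  # different commits: not evidence of flakiness
]
print(find_flaky(history))  # ['test_login']
```

Comparing results only within a single commit avoids mistaking a genuine fix or regression between commits for flakiness.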

3. Distribute Test Cost Across the Pipeline

Not all validation belongs on the critical path. Some checks are fast and cheap. Others are slow, brittle, or resource-intensive.

Effective pipelines distribute validation based on decision value. Cheap signals run early to catch obvious issues. Expensive validation runs later, where the cost of delay is justified by reduced risk.
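A simple way to sketch this distribution is a greedy split: the cheapest checks fill an early-stage time budget, and everything else moves to a later stage. Duration is used here as a stand-in for cost; a real pipeline would likely also weigh how much risk each check reduces. The check names, durations, and the 120-second budget are assumptions.

```python
def distribute(checks: list[tuple[str, float]], early_budget_s: float) -> tuple[list[str], list[str]]:
    """Greedily place the cheapest checks early until the time budget is used up."""
    early, late = [], []
    used = 0.0
    for name, duration in sorted(checks, key=lambda c: c[1]):
        if used + duration <= early_budget_s:
            early.append(name)
            used += duration
        else:
            late.append(name)  # too costly for the critical path; run before promotion
    return early, late


checks = [("e2e", 600), ("unit", 30), ("contract", 90), ("lint", 10)]
print(distribute(checks, early_budget_s=120))  # (['lint', 'unit'], ['contract', 'e2e'])
```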

CI/CD Automation Testing and Security Signals

Security testing increasingly participates in CI/CD automation testing, not as a separate discipline but as another form of risk signal.

Vulnerability scanning, dependency checks, and policy enforcement become CI/CD automation tests when their outcomes gate delivery. Like any other signal, their value depends on determinism, clarity of remediation, and placement in the pipeline.

Security gates that produce ambiguous failures or unclear action paths behave like flaky tests. They slow delivery without improving safety. Well-designed security signals, by contrast, provide clear decisions and predictable impact.
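The sketch below illustrates a security gate whose failures come with a clear action. It blocks only on findings at or above a severity threshold and pairs each blocking finding with a remediation line. The `Finding` type, the four-level severity scale, the package names, and the message wording are hypothetical; this is not the output format of any specific scanner.

```python
from dataclasses import dataclass
from typing import Optional

SEVERITY_RANK = {"low": 0, "medium": 1, "high": 2, "critical": 3}


@dataclass(frozen=True)
class Finding:
    package: str
    severity: str
    fixed_version: Optional[str] = None


def security_gate(findings: list[Finding], threshold: str = "high") -> tuple[bool, list[str]]:
    """Block on findings at or above `threshold`; pair each with a concrete action."""
    limit = SEVERITY_RANK[threshold]
    blocking = [f for f in findings if SEVERITY_RANK[f.severity] >= limit]
    actions = []
    for f in blocking:
        if f.fixed_version:
            actions.append(f"upgrade {f.package} to {f.fixed_version}")
        else:
            actions.append(f"{f.package}: no fixed version; mitigate or record an exception")
    return not blocking, actions


ok, actions = security_gate([
    Finding("libfoo", "critical", "2.4.1"),
    Finding("libbar", "medium", "1.0.3"),  # below threshold: not blocking
    Finding("libbaz", "high"),
])
print(ok)       # False
print(actions)  # ['upgrade libfoo to 2.4.1', 'libbaz: no fixed version; mitigate or record an exception']
```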

Common Failure Modes in CI/CD Automation Testing

Most CI/CD automation problems are systemic rather than accidental. They emerge gradually as systems scale and delivery pressure increases.

The most common failure patterns include:

- Flaky tests that fail without code changes

- Pipelines overloaded with slow validation on every change

- Tests that lack ownership and drift over time

Each pattern reduces signal quality. Over time, pipelines stop guiding behavior and start obstructing it.
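Some of these patterns can be caught with a periodic audit of test metadata. The sketch below flags two of the patterns listed above: checks without an owner, and slow checks that run on every change. It assumes a hypothetical `CheckMeta` catalog entry and a 300-second threshold; both are illustrative.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CheckMeta:
    name: str
    owner: Optional[str]
    runs_on_every_change: bool
    median_duration_s: float


def audit(catalog: list[CheckMeta], slow_threshold_s: float = 300.0) -> list[str]:
    """Flag missing ownership and slow validation on the per-change path."""
    issues = []
    for c in catalog:
        if not c.owner:
            issues.append(f"{c.name}: no owner")
        if c.runs_on_every_change and c.median_duration_s > slow_threshold_s:
            issues.append(f"{c.name}: {c.median_duration_s:.0f}s on every change")
    return issues


catalog = [
    CheckMeta("unit", owner="payments-team", runs_on_every_change=True, median_duration_s=45),
    CheckMeta("full-e2e", owner=None, runs_on_every_change=True, median_duration_s=1500),
]
print(audit(catalog))  # ['full-e2e: no owner', 'full-e2e: 1500s on every change']
```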

CI/CD Automation Testing and Feedback Latency

Automation always adds time. Every gate introduces waiting, even when it passes.

The goal is not to eliminate latency, but to ensure that latency buys meaningful risk reduction rather than consuming time and compute without improving decision confidence. When a delay does not increase confidence, it becomes friction.

As pipelines scale, feedback latency becomes visible even when failure rates drop. This is a structural property of gated systems, not a sign of malfunction. Optimizing for early, reliable signals often matters more than minimizing total pipeline duration.
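A small calculation shows why early, reliable signals can matter more than total duration. Assuming stages run sequentially (an assumption; many pipelines parallelize), the time until a failure is reported is the cumulative duration up to and including the failing stage. The stage names and durations below are hypothetical.

```python
from itertools import accumulate


def time_to_signal(stages: list[tuple[str, int]], failing_stage: str) -> int:
    """Seconds until a failure in `failing_stage` is reported, with stages run sequentially."""
    names = [name for name, _ in stages]
    elapsed = list(accumulate(duration for _, duration in stages))
    return elapsed[names.index(failing_stage)]


fast_first = [("unit", 120), ("integration", 600), ("e2e", 1800)]
slow_first = list(reversed(fast_first))

# Same total duration (2520 s) either way, but very different feedback for a unit failure.
print(time_to_signal(fast_first, "unit"))  # 120
print(time_to_signal(slow_first, "unit"))  # 2520
```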

CI/CD Automation Testing and Observability

As pipelines become more complex, failures become harder to interpret. A failing check may reflect a logic error, an infrastructure issue, or an environmental dependency.

Observability provides the context needed to tell these causes apart, separating data quality problems and environmental behavior from genuine defects. Logs, metrics, and execution context allow teams to classify failures instead of guessing.

Without observability, CI/CD automation testing degrades into trial and error. Engineers debug the wrong layer, add retries, and further reduce signal quality.
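A minimal sketch of what "execution context" can mean in practice: each failure is emitted as one structured event carrying the details needed to classify it later. The field names are assumptions for illustration, not a schema from any particular observability product.

```python
import json
from datetime import datetime, timezone


def failure_event(check: str, stage: str, commit: str, runner: str,
                  exit_code: int, duration_s: float, log_tail: list[str]) -> str:
    """Serialize one failure with the context needed to classify it later."""
    return json.dumps({
        "event": "check_failed",
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "check": check,
        "stage": stage,
        "commit": commit,
        "runner": runner,             # which agent ran it: helps spot infrastructure issues
        "exit_code": exit_code,
        "duration_s": duration_s,     # unusually long runs often point to timeouts
        "log_tail": log_tail[-20:],   # keep only the last lines so events stay small
    }, sort_keys=True)


print(failure_event("integration", "ci", "a1b2c3", "runner-07", 1, 312.4,
                    ["connecting to db...", "Error: connection refused"]))
```

Because every failure carries the same fields, failures can be grouped by runner, stage, or check, which turns "is it the code or the environment?" into a query rather than a guess.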

Trade-Offs Teams Cannot Avoid

CI/CD automation testing involves unavoidable trade-offs because speed, assurance, and maintainability pull in different directions.

| Trade-off | What you gain | What you risk |
|---|---|---|
| Faster feedback | Higher developer flow | Less validation depth |
| Stronger gates | Higher release confidence | More blocking and triage |
| Broader coverage | Fewer blind spots | Higher maintenance cost |
| More environments | Greater realism | More ambiguous failures |

The goal is not to eliminate these trade-offs, but to make them explicit so pipelines are designed intentionally rather than by accident.

Conclusion

CI/CD automation testing is not just about running tests automatically. It is about designing a delivery system that governs change through reliable signals.

When automation is aligned with intent, deterministic enough to trust, and observable enough to interpret, pipelines turn raw execution data into decision-ready signals, accelerating delivery while managing risk. When those conditions are missing, teams compensate in ways that slow progress and increase exposure.

Understanding what CI/CD automation testing is and how to do it means treating it as architecture, not mechanics.

Frequently Asked Questions

What is CI/CD automation testing in simple terms?

It is automated validation that runs inside the CI/CD pipeline and determines whether a change can move forward, pause, or stop.

How is CI testing different from CD testing?

CI testing protects integration and shared history with fast feedback. CD testing protects release readiness and carries higher authority closer to production.

Why do flaky tests cause so many delivery problems?

They break the link between failure and risk. When engineers cannot trust a gate, they rerun or bypass it, which slows delivery and weakens governance.

What does “how to do it” mean if this is not a tutorial?

It means designing the pipeline as a decision system: aligning tests to stage intent, prioritizing deterministic signals, distributing validation cost, assigning ownership, and instrumenting failures.

How do teams keep pipelines fast without losing confidence?

By optimizing for early, reliable signals and reserving slower validation for later decisions where the cost is justified.