Businesses rely heavily on real-time monitoring across many use cases, as shown by the 80% of companies that report revenue increases from real-time analytics. Real-time monitoring is also a crucial part of observability, given that an hour of downtime can cost six figures or more.

Effective real-time monitoring requires engineering teams to constantly analyze logs and metrics to understand the health and performance of their systems. It's essential for optimizing resources, preventing system failures, and more.

Whether you work in observability or simply want to learn more about it, this article explains real-time monitoring and its benefits.

Key Takeaways

- Real-time monitoring is the continuous analysis of telemetry data, delivered with low latency.

- Monitoring data in real time lets teams quickly detect anomalies, receive alerts, discover optimization opportunities, and resolve issues.

- Real-time monitoring involves collecting, transmitting, processing, analyzing, alerting on, and visualizing data. Following best practices keeps data processing efficient and avoids wasted resources.

- Edge Delta’s real-time monitoring works out of the box using AI-enabled anomaly detection and eBPF metrics.

Understanding Real-Time Monitoring: Its Importance, Process, and Use Cases

Real-time monitoring is the continuous, instantaneous analysis and reporting of data or events as they occur. With zero to low latency, there is only a tiny delay between data collection and analysis. This allows immediate detection of anomalous behavior or other changes that may indicate a production issue.

With real-time monitoring, organizations can enjoy:

- Stable and well-maintained operations

- Enhanced and up-to-date security

- Efficient delivery of data-driven services

Most industries use real-time monitoring to analyze events and respond to them immediately. In observability, you need to understand the current state of your production systems to deliver the best customer experience. As a result, real-time monitoring is a crucial part of a successful observability practice. It also provides the basis for alerts or signals when system-related issues or errors occur.

Real-time monitoring significantly improves data collection and decision-making with its low-latency data stream. Thanks to this process, engineers can quickly detect and resolve serious issues.

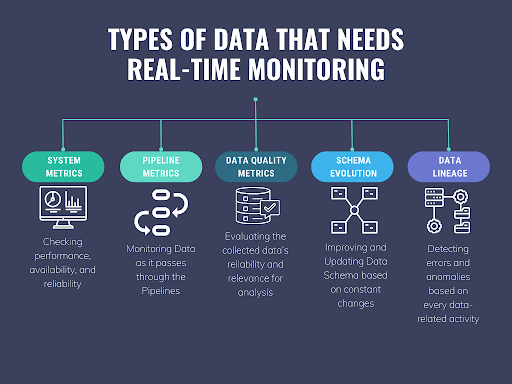

Types of Data That Work Well with Real-Time Monitoring

Real-time monitoring makes little sense for historical or archived data, because that data doesn't require immediate analysis. It applies to data streams where immediate action is necessary.

Here are some system-related data types that require real-time monitoring:

System Metrics – For Performance, Availability, and Reliability Monitoring

System metrics are data related to performance, availability, and reliability. These metrics are analyzed in real time to reflect a system’s overall performance and health. Some system metrics include:

- CPU Usage

- Memory Utilization

- Disk I/O

- Network Traffic

System metrics serve as a basis for determining whether a system performs well. With real-time monitoring of this data, you can detect any system issues or errors and provide immediate solutions to them.
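A simple way to picture this is a threshold check applied to each incoming metric sample. The sketch below assumes hypothetical metric names (`cpu_percent`, `memory_percent`, `disk_io_percent`) and example thresholds; real tools typically combine static thresholds like these with baselines or anomaly detection.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical alert thresholds in percent; tune these for your own systems.
THRESHOLDS = {"cpu_percent": 90.0, "memory_percent": 85.0, "disk_io_percent": 80.0}


@dataclass
class MetricSample:
    host: str
    name: str     # metric name, e.g. "cpu_percent"
    value: float  # latest reading in percent


def check_sample(sample: MetricSample) -> Optional[str]:
    """Return an alert message if the sample breaches its threshold, else None."""
    limit = THRESHOLDS.get(sample.name)
    if limit is not None and sample.value > limit:
        return f"{sample.host}: {sample.name}={sample.value:.1f} exceeds {limit:.1f}"
    return None


samples = [
    MetricSample("web-1", "cpu_percent", 97.5),
    MetricSample("web-1", "memory_percent", 62.0),
    MetricSample("db-1", "disk_io_percent", 83.0),
]
# Keep only the samples that produced an alert message.
alerts = [m for m in (check_sample(s) for s in samples) if m is not None]
for msg in alerts:
    print(msg)
```

Metrics without a configured threshold (such as network traffic in this example) are simply skipped.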

Pipeline Metrics – For Monitoring Data on Pipelines

You can also collect metrics to measure the status and health of your data throughout pipeline stages. Since data pipelines continuously stream data, they also need real-time monitoring. Some crucial metrics are:

- Data Volume

- Streaming Latency

- Error Rate

With these metrics, you can check whether your data flow remains efficient and reliable. They are also crucial for detecting issues or errors in the pipelines.
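As a rough illustration, the three pipeline metrics above can be computed over a short window of events. This sketch assumes each event carries a hypothetical `created_at` timestamp from the source and a `received_at` timestamp from the pipeline stage, so streaming latency is the difference between them.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class PipelineEvent:
    size_bytes: int
    created_at: float   # epoch seconds when the source produced the record (assumed field)
    received_at: float  # epoch seconds when the pipeline stage received it (assumed field)
    failed: bool        # True if the stage failed to process the record


def window_metrics(events: List[PipelineEvent]) -> Dict[str, float]:
    """Compute data volume, average streaming latency, and error rate for one window."""
    if not events:
        return {"volume_bytes": 0, "avg_latency_s": 0.0, "error_rate": 0.0}
    volume = sum(e.size_bytes for e in events)
    avg_latency = sum(e.received_at - e.created_at for e in events) / len(events)
    error_rate = sum(e.failed for e in events) / len(events)
    return {"volume_bytes": volume, "avg_latency_s": avg_latency, "error_rate": error_rate}


events = [
    PipelineEvent(512, 100.0, 100.2, False),
    PipelineEvent(1024, 100.5, 101.0, False),
    PipelineEvent(256, 101.0, 101.8, True),
    PipelineEvent(2048, 101.2, 101.4, False),
]
print(window_metrics(events))
```

In practice you would compute these per time window (for example, every 10 seconds) and alert when latency or error rate drifts above an agreed limit.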

Data Quality Metrics – For Evaluating Collected Data

Data quality refers to the current state of data used in operations. These metrics determine whether the data is reliable and valuable for any analysis. Some of the data quality metrics are:

- Accuracy

- Completeness

- Timeliness

- Validity

- Consistency

- Uniqueness

Real-time data quality monitoring ensures reliable and efficient processing and analysis. Without it, bad data can slip through, leading to wasted resources and poor business decisions.
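Several of these dimensions can be scored as simple ratios over a batch of records. The sketch below covers completeness, validity, and uniqueness only; the required fields, the `id` key, and the deliberately simplified email rule are hypothetical stand-ins for your own schema and validation rules.

```python
import re
from typing import Dict, List

REQUIRED_FIELDS = ("id", "email", "amount")                # hypothetical schema
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")       # simplified validity rule


def quality_report(records: List[Dict]) -> Dict[str, float]:
    """Score a batch of records on completeness, validity, and uniqueness (0.0 to 1.0)."""
    total = len(records)
    if total == 0:
        return {"completeness": 1.0, "validity": 1.0, "uniqueness": 1.0}
    # Completeness: every required field is present and not None.
    complete = sum(all(r.get(f) is not None for f in REQUIRED_FIELDS) for r in records)
    # Validity: the email field is a string that matches the simplified pattern.
    valid = sum(isinstance(r.get("email"), str) and bool(EMAIL_RE.match(r["email"]))
                for r in records)
    # Uniqueness: share of distinct ids relative to the number of records.
    unique_ids = len({r.get("id") for r in records})
    return {
        "completeness": complete / total,
        "validity": valid / total,
        "uniqueness": unique_ids / total,
    }


records = [
    {"id": 1, "email": "a@example.com", "amount": 10.0},
    {"id": 2, "email": "not-an-email", "amount": 5.0},
    {"id": 2, "email": None, "amount": 7.0},
    {"id": 3, "email": "d@example.com", "amount": 1.0},
]
print(quality_report(records))
```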

Schema Evolution – For Improving and Updating Data Schema

Schema evolution refers to changes in a data schema over time, and those changes need constant checking. Analyzing them keeps everything compatible and consistent across the different processing stages. Some schema elements that need real-time monitoring are:

- Table Names

- Data Fields

- Data Types

- Relationship of Each Entity in the Schema

Real-time monitoring of schema evolution enables immediate analysis of changes and recommended updates. Without continuous monitoring, a system can encounter data loss, incompatibility, errors, and inconsistencies.
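At its core, schema monitoring compares the schema you expect with the one you actually observe. This minimal sketch assumes schemas are represented as hypothetical `{field_name: type_name}` dictionaries and reports removed fields, added fields, and type changes; it does not cover table names or entity relationships.

```python
from typing import Dict, List


def diff_schema(expected: Dict[str, str], observed: Dict[str, str]) -> List[str]:
    """List the differences between an expected and an observed field->type schema."""
    changes = []
    for name in sorted(expected.keys() - observed.keys()):
        changes.append(f"removed field: {name}")
    for name in sorted(observed.keys() - expected.keys()):
        changes.append(f"added field: {name} ({observed[name]})")
    for name in sorted(expected.keys() & observed.keys()):
        if expected[name] != observed[name]:
            changes.append(f"type changed: {name} {expected[name]} -> {observed[name]}")
    return changes


expected = {"user_id": "int", "email": "string", "created_at": "timestamp"}
observed = {"user_id": "string", "email": "string", "signup_source": "string"}
changes = diff_schema(expected, observed)
for change in changes:
    print(change)
```

A removed field or a type change usually signals a breaking change worth alerting on, while an added field may only need a downstream mapping update.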

Data Lineage – For Error and Anomaly Detection

Data lineage is the record of how data flows from beginning to end. It allows businesses to see what happened when there's an error or anomaly in the system. Some of the most common entities in data lineage are:

- Source and collection process

- Data transformations and actions such as formatting, deleting, or editing

- Destinations such as storage

Real-time monitoring of data lineage lets businesses see the entire lifecycle of each piece of data. With this lineage collected instantly, it becomes easier to pinpoint where an error occurred and find the best solution.
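To make "pinpoint where an error occurred" concrete, the sketch below records each stage a record passes through and returns the first failing stage. The stage names and the `LineageRecord` structure are hypothetical; production lineage systems track much richer metadata.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class LineageStep:
    stage: str       # hypothetical stage label, e.g. "source:orders-db"
    ok: bool = True
    detail: str = ""


@dataclass
class LineageRecord:
    record_id: str
    steps: List[LineageStep] = field(default_factory=list)

    def add(self, stage: str, ok: bool = True, detail: str = "") -> None:
        """Append one hop (source, transformation, or destination) to the lineage."""
        self.steps.append(LineageStep(stage, ok, detail))

    def first_failure(self) -> Optional[LineageStep]:
        """Return the earliest failed step, which is usually the root of the problem."""
        return next((s for s in self.steps if not s.ok), None)

    def path(self) -> str:
        return " -> ".join(s.stage for s in self.steps)


rec = LineageRecord("order-42")
rec.add("source:orders-db")
rec.add("transform:parse-json")
rec.add("transform:currency-convert", ok=False, detail="unknown currency code 'XXY'")
rec.add("sink:warehouse", ok=False, detail="upstream step failed")

failure = rec.first_failure()
print(rec.path())
print(failure.stage, "-", failure.detail)
```

Looking for the earliest failure, rather than any failure, avoids blaming the destination for an error that started upstream.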

Together, these real-time data types help improve the observability of data systems. With real-time monitoring, businesses can immediately detect issues and maintain data processing pipelines.

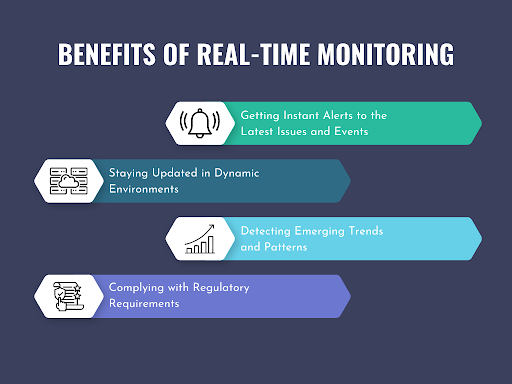

The Importance of Real-Time Monitoring for Observability

Real-time monitoring is crucial to observability since it offers instant insights into a system’s health and performance. With quick issue detection and response, organizations can proactively maintain system health to avoid downtime and optimize the user experience.

Here are some of the main benefits of real-time monitoring:

Getting Instant Alerts to the Latest Issues and Events

Real-time monitoring processes rely on analyzing logs and metrics as they are generated. Typically, there would be a delta between the time your data is created and when it is processed. However, when you begin processing data upstream, you can minimize this delta. Once data is analyzed, monitoring tools can send alerts for anomalies, negative behaviors, and other events that indicate there’s an issue.

Effective real-time monitoring can help you reduce critical metrics such as mean time to detect (MTTD) and mean time to respond (MTTR). As a result, it helps maintain high service availability and reliability.
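Both metrics are averages over incident timestamps. The sketch below assumes each incident is recorded as a hypothetical `(started, detected, resolved)` tuple and measures MTTR from detection to resolution; definitions vary between teams, and some measure from incident start instead. For two incidents detected after 12 and 4 minutes, MTTD is (12 + 4) / 2 = 8 minutes.

```python
from datetime import datetime, timedelta
from typing import Iterable, List, Tuple

# (started, detected, resolved) timestamps for one incident (assumed record format)
Incident = Tuple[datetime, datetime, datetime]


def mean_minutes(deltas: Iterable[timedelta]) -> float:
    values = [d.total_seconds() / 60 for d in deltas]
    return sum(values) / len(values) if values else 0.0


def mttd(incidents: List[Incident]) -> float:
    """Mean time to detect: average of (detected - started), in minutes."""
    return mean_minutes(detected - started for started, detected, _ in incidents)


def mttr(incidents: List[Incident]) -> float:
    """Mean time to respond, measured here as average (resolved - detected), in minutes."""
    return mean_minutes(resolved - detected for _, detected, resolved in incidents)


incidents = [
    (datetime(2024, 1, 1, 10, 0), datetime(2024, 1, 1, 10, 12), datetime(2024, 1, 1, 10, 45)),
    (datetime(2024, 1, 1, 14, 0), datetime(2024, 1, 1, 14, 4), datetime(2024, 1, 1, 14, 30)),
]
print(f"MTTD: {mttd(incidents):.1f} min, MTTR: {mttr(incidents):.1f} min")
```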

Staying Informed with Updates and Deployments in Dynamic Environments

Modern, fast-paced environments receive frequent changes and continuous system updates. With new data being created all the time, it's crucial to monitor in real time as these changes occur. This way, all your engineers can stay informed about the health of their mission-critical systems as they make constant updates and deployments.

Beyond keeping teams informed about updates, real-time monitoring in dynamic environments also helps with:

- Optimizing computing resources

- Focusing only on active systems

- Reducing overload in processing tasks

- Avoiding unnecessary data and backlogs

Real-time monitoring works well with systems dealing with new data all the time. It focuses on relevant and new data, allowing efficient processing workflow.

Detecting Emerging Trends and Patterns

The continuous nature of real-time monitoring allows for quick detection of emerging patterns and trends. This offers organizations benefits such as:

- Detecting known and unknown production issues

- Adapting to constant deployments and changes

- Making informed decisions based on real-time data

With advanced algorithms and predictive analytics, this process can help you spot issues you may not have seen before or identify opportunities for optimization.
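One of the simplest algorithms in this family is a rolling z-score: compare each new value with the mean and standard deviation of a sliding window of recent values. This is a generic, illustrative technique, not the algorithm any particular product uses; the window size and threshold below are example values.

```python
from collections import deque
from statistics import mean, pstdev


class RollingZScoreDetector:
    """Flag values that deviate strongly from a sliding window of recent values."""

    def __init__(self, window: int = 20, threshold: float = 3.0):
        self.values = deque(maxlen=window)  # oldest values drop off automatically
        self.threshold = threshold

    def observe(self, value: float) -> bool:
        is_anomaly = False
        if len(self.values) >= 2:
            mu = mean(self.values)
            sigma = pstdev(self.values)
            # z-score: how many standard deviations the new value is from the recent mean
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        self.values.append(value)
        return is_anomaly


latencies_ms = [100, 102, 101, 99, 100, 103, 98, 100, 250, 101]
detector = RollingZScoreDetector(window=8, threshold=3.0)
flags = [detector.observe(v) for v in latencies_ms]
print([v for v, flagged in zip(latencies_ms, flags) if flagged])
```

Because the window keeps moving, the baseline adapts as normal behavior shifts after deployments, which a fixed threshold cannot do.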

Complying with Regulatory Requirements

Dealing with data involves complying with regulatory requirements. With real-time monitoring, organizations can use tools to detect any non-compliance issues, such as data that includes PII. This instant detection promotes immediate corrective actions and prevents further issues from happening.

With instant data collection and analysis, real-time monitoring improves a business's accountability and transparency. It helps organizations ensure that every process adheres to regulatory requirements.
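As an illustration of PII detection, the sketch below scans a log line for two example patterns and masks any matches before the line is stored or forwarded. The patterns (a basic email shape and the US SSN `123-45-6789` format) are simplified assumptions; real compliance tooling needs broader, locale-aware rules.

```python
import re
from typing import List, Tuple

# Simplified example patterns; not an exhaustive PII rule set.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def scan_and_mask(line: str) -> Tuple[str, List[str]]:
    """Return the line with PII masked, plus the kinds of PII that were found."""
    found = []
    for name, pattern in PII_PATTERNS.items():
        if pattern.search(line):
            found.append(name)
            line = pattern.sub(f"[REDACTED:{name}]", line)
    return line, found


masked, kinds = scan_and_mask("user=jane.doe@example.com ssn=123-45-6789 action=login")
print(masked)
print(kinds)
```

The list of detected kinds can drive a compliance alert, while the masked line is what continues down the pipeline.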

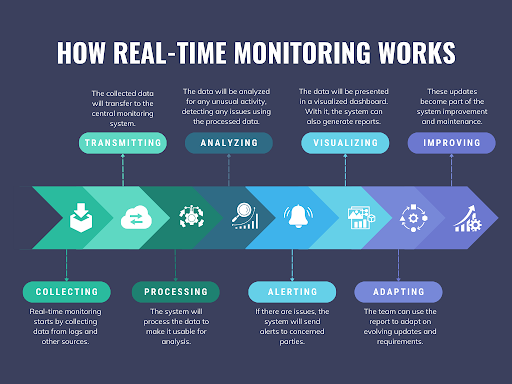

Seeing Real-Time Monitoring in Action

Real-time monitoring comes in different approaches depending on the industry. However, every method follows similar principles and steps. Here’s a general view of how real-time monitoring works:

Step 1: Collecting data from logs, metrics, and other data types

Every real-time monitoring process begins with collecting new data. Machine data typically comes in the form of logs, metrics, and traces, but it can also come from other sources. This step involves automatically collecting newly created machine data, often using a software agent.

Unlike other providers in the market, Edge Delta also begins processing your data at the agent level, enabling faster and more proactive insights.

Step 2: Transmitting data into the central monitoring system

After collecting data, your agent transmits it to your monitoring system. This transmission happens over the network, which may be wired or wireless depending on the setup.

Sometimes, you may ship data to intermediary tools, like a data processing pipeline, before transferring it to your observability platform. The most common approach, though, is sending the data directly to the monitoring system for immediate analysis.

Step 3: Processing collected data for analysis

Using traditional observability tools, processing begins once the data reaches the central monitoring system. In this step, you’ll have to use filtering, parsing, combining, and even wrangling tools. Such tools automate data transformation, making all the raw data suitable for analysis.

Edge Delta takes a more modern approach and pushes many of these steps upstream.
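Whether it runs centrally or upstream in an agent, processing usually starts with parsing raw lines into structured records and filtering out what you don't need. This sketch assumes a hypothetical `timestamp LEVEL service message` log format and keeps only `WARN` and above; unparseable lines are dropped here, though a real pipeline might route them elsewhere for inspection.

```python
import re
from typing import Dict, Iterable, Iterator

# Hypothetical log format: "2024-05-01T12:00:01Z ERROR payment-svc timeout after 3000ms"
LOG_RE = re.compile(r"^(?P<ts>\S+)\s+(?P<level>[A-Z]+)\s+(?P<service>\S+)\s+(?P<message>.*)$")
LEVELS = ["DEBUG", "INFO", "WARN", "ERROR"]


def process(raw_lines: Iterable[str], min_level: str = "WARN") -> Iterator[Dict[str, str]]:
    """Parse raw log lines and yield structured records at or above min_level."""
    threshold = LEVELS.index(min_level)
    for line in raw_lines:
        match = LOG_RE.match(line.strip())
        if match is None:
            continue  # drop lines that don't match the expected format
        record = match.groupdict()
        if record["level"] in LEVELS and LEVELS.index(record["level"]) >= threshold:
            yield record


raw = [
    "2024-05-01T12:00:00Z INFO checkout-svc request ok",
    "2024-05-01T12:00:01Z ERROR payment-svc timeout after 3000ms",
    "garbled line",
    "2024-05-01T12:00:02Z WARN payment-svc retrying",
]
parsed = list(process(raw))
for rec in parsed:
    print(rec)
```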

Step 4: Analyzing processed data to detect issues

Once the data becomes consistent, uniform, and clean, it’s subject to analysis. This process detects any trends or patterns that need immediate attention.

Note:

While analysis can take many approaches, the most effective is to automate it using artificial intelligence and machine-learning algorithms. The goal is to surface optimization insights or identify errors that need immediate repair.

Step 5: Alerting based on analyzed data

Alerting happens when analysis detects a critical event. This step uses triggers to notify concerned parties via Slack, Teams, email, or other communication channels.

With this step, all concerned team members will know if they must attend to anything. This practice helps teams address issues as they occur – potentially before they lead to an incident.
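A small routing layer decides who hears about an event and suppresses repeats so responders aren't flooded. The sketch below only formats the outgoing notifications rather than calling any chat or email API; the channel names, severities, and 300-second cooldown are hypothetical examples.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Alert:
    service: str
    severity: str  # e.g. "critical" or "warning"
    summary: str


# Hypothetical routing table: severity -> notification channels
ROUTES: Dict[str, List[str]] = {
    "critical": ["slack:#oncall", "email:oncall@example.com"],
    "warning": ["slack:#service-alerts"],
}


class AlertRouter:
    def __init__(self, routes: Dict[str, List[str]], cooldown_s: float = 300.0):
        self.routes = routes
        self.cooldown_s = cooldown_s
        self._last_sent: Dict[Tuple[str, str], float] = {}  # (service, summary) -> last send time

    def dispatch(self, alert: Alert, now: float) -> List[str]:
        """Return formatted notifications, or [] if this alert repeats within the cooldown."""
        key = (alert.service, alert.summary)
        last = self._last_sent.get(key)
        if last is not None and now - last < self.cooldown_s:
            return []  # duplicate within the cooldown window: suppress
        self._last_sent[key] = now
        text = f"[{alert.severity.upper()}] {alert.service} - {alert.summary}"
        return [f"{channel}: {text}" for channel in self.routes.get(alert.severity, [])]


router = AlertRouter(ROUTES)
alert = Alert("payment-svc", "critical", "error rate 12% > 5%")
first = router.dispatch(alert, now=0.0)
second = router.dispatch(alert, now=60.0)   # suppressed: inside the 300 s cooldown
third = router.dispatch(alert, now=400.0)   # sent again: cooldown has expired
print(first, second, third, sep="\n")
```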

Step 6: Visualizing and reporting based on analyzed data

Besides alerts, the analyzed data also undergoes visualization, presenting it in a digestible form via dashboarding. Dashboards can include charts, graphs, maps, and more.

With this step, every team member gets an overview of the data and understands everything at a glance. This process may also automatically create detailed insights or reports based on the analyzed data.

Step 7: Adapting the system based on evolving updates and requirements

Traditional real-time monitoring systems require ongoing adaptation because the environments they monitor keep evolving. Based on feedback and insights, teams manually update the system and the process to keep everything working. This step can involve manually changing alerts, updating software, and introducing new data sources.

Edge Delta helps automate some of these steps. For example, it will automatically refine your alerts for you.

Step 8: Improving the system based on metrics and data requirements

Real-time monitoring requires continuous improvements due to its constantly evolving nature. With historical data and the system’s metrics, concerned teams can optimize the process by updating specific approaches. This manual process keeps the monitoring system functional, allowing it to work with new updates and requirements continuously.

⏳ In a Nutshell

Real-time monitoring provides a proactive approach to operations. It also improves decision-making and offers immediate insights into critical events. With these steps, real-time monitoring becomes effective in detecting and fixing errors and finding ways to optimize every operation.

Best Practices for More Efficient Real-Time Monitoring

Real-time monitoring involves many automated steps, so getting everything right is crucial. Before starting, you must have a plan to ensure effectiveness and avoid wasting resources.

Here are some of the best practices you need to consider when doing real-time monitoring:

Defining specific goals

Real-time monitoring uses many resources, so you must ensure that every process is relevant. Defining specific goals is therefore the first best practice.

While it's easy to gather every possible data point, doing so can burden your system and tools and create noise for your team. You'll also waste time and resources gathering data you don't need or tracking metrics that don't matter to your uptime and performance goals. For this reason, you should determine your goal for real-time monitoring. In observability, this is usually your service level objectives (SLOs).

Once you have a goal, selecting key metrics and performance indicators will be easier. You'll also have a guide for filtering out unnecessary data.

With your goals set, you can identify the components you need to check and determine the right approach for your monitoring tasks.

Choosing the suitable tools

Using the right tools is key to the success of any real-time monitoring process. Thus, it’s crucial to ensure that the tool you’ll use for this task meets the criteria you need.

Some of the factors to consider when choosing a real-time monitoring tool are:

- Flexibility – to work with other related and necessary tools

- Ease of Use – to ease managing real-time data

- Compatibility – to support many data formats

- Scalability – to remain consistent for upgrades and developments

Pick a tool that meets all of these criteria for your goals. This way, you can avoid spending resources on unnecessary tools.

✅ Pro Tip:

When choosing a real-time monitoring tool, always ensure it offers the following features and functions:

- Real-time data collection

- Automated alerting

- Out-of-the-box dashboards

- Intelligent analysis capabilities

Setting up the monitoring system

Proper system setup is also crucial as it’s the key to efficient real-time monitoring. In this practice, you must carefully deploy your monitoring agents and sensors. It also involves establishing secure connections to whatever network you’re using.

Configuring the key metrics

Choosing the right metrics gets the most out of every step in your real-time monitoring. When configuring them, focus on performance, health, and security. Some useful metrics are:

- Bandwidth usage

- Latency

- Error states

- Response times

- CPU usage

- Network traffic volume

With these metrics, you can create triggers that send alerts when the system encounters unusual conditions.
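A common refinement is to fire a trigger only when a condition persists, so a single noisy sample doesn't page anyone. This sketch assumes an example latency threshold of 500 ms that must be exceeded for three consecutive samples; both numbers are placeholders.

```python
class SustainedThresholdTrigger:
    """Fire once when a metric stays above a threshold for `required` consecutive samples."""

    def __init__(self, threshold: float, required: int = 3):
        self.threshold = threshold
        self.required = required
        self.streak = 0  # consecutive samples above the threshold

    def update(self, value: float) -> bool:
        self.streak = self.streak + 1 if value > self.threshold else 0
        # Fire only at the moment the streak first reaches `required`, not on every sample after.
        return self.streak == self.required


latency_ms = [120, 480, 510, 530, 90, 520, 540, 560, 580]
trigger = SustainedThresholdTrigger(threshold=500.0, required=3)
fired = [i for i, value in enumerate(latency_ms) if trigger.update(value)]
print(fired)
```

Here the brief spike at samples 2 and 3 is ignored, while the sustained breach starting at sample 5 fires once at sample 7.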

Setting up system notifications and alerts

Another key real-time monitoring practice is setting up notifications or alerts. In this task, you'll determine who receives alerts for specific events or conditions.

✅ Pro Tip:

Make every alert concise and timely, stating what triggered it and what to do next. This way, your automated notifications lead to prompt responses and repairs.
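One way to enforce this is to give every alert the same small structure: a short title, the basis (what triggered it), and a call to action. The field names, the 80-character title limit, and the runbook URL below are hypothetical conventions, not a standard format.

```python
from dataclasses import dataclass

MAX_TITLE_CHARS = 80  # hypothetical limit to keep alert titles scannable


@dataclass
class AlertMessage:
    title: str
    basis: str           # what triggered the alert: metric, value, threshold, duration
    call_to_action: str  # the first thing the responder should do
    runbook_url: str     # hypothetical link to a runbook

    def __post_init__(self) -> None:
        if len(self.title) > MAX_TITLE_CHARS:
            raise ValueError(f"alert title longer than {MAX_TITLE_CHARS} characters")

    def render(self) -> str:
        return (f"{self.title}\n"
                f"Why: {self.basis}\n"
                f"Action: {self.call_to_action}\n"
                f"Runbook: {self.runbook_url}")


msg = AlertMessage(
    title="payment-svc p95 latency above SLO",
    basis="p95 latency 820 ms > 500 ms for 5 min",
    call_to_action="Check recent payment-svc deploys; roll back if latency rose after a release",
    runbook_url="https://runbooks.example.com/payment-svc/latency",
)
print(msg.render())
```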

Integrating monitoring tools with other systems

Proper integration with other systems optimizes a real-time monitoring system's effectiveness. In this practice, you'll make your monitoring system work with other relevant tools such as:

- Incident Management Systems

- Ticketing Systems

- Diagnostic Systems

- Service Management Platforms

- Application Programming Interfaces (APIs)

This practice allows you to enjoy better incident responses and seamless data sharing. When done correctly, you can get the best real-time insights with the help of other systems.

Performing data visualization

Visuals are crucial in real-time monitoring because they make every data piece easier to understand.

✅ Pro Tip:

Leverage pre-built dashboards to get up and running more quickly. This step lets you quickly detect patterns, trends, anomalies, and opportunities – without investing in a bunch of undifferentiated work.

Defining response procedures

With well-defined response procedures, you can address issues detected with real-time monitoring. This practice also involves careful assigning of responsibilities and proper protocol establishment.

When done correctly, these procedures make the repairs and actions recommended during critical events faster and more effective.

Reviewing and optimizing the monitoring system

Real-time data evolves, so constant reviews and optimization will benefit your monitoring system. Without regular updates, a monitoring system can lose its efficiency, so it’s crucial to constantly check on the following:

- Monitoring Parameters

- Alerting Thresholds

- Response Procedures

By doing these reviews, you can always ensure that your real-time monitoring system remains compatible with the constant evolution of data and requirements.

Note:

This practice is best done manually by training members to interpret data and adjust components based on feedback.

Best Real-Time Monitoring Tools and Their Specialties

Tools are crucial for real-time monitoring since they automate data collection, analysis, and visualization. However, each tool has its own specialty, and no single tool fits every monitoring task. Thus, it's crucial to carefully pick the right tool based on your needs and objectives.

Here’s a list of the best real-time monitoring tools available in the market:

| Tool | Special Feature/Function |
|---|---|
| Edge Delta | Processing of data immediately – upstream, as it’s created – for real-time insights; AI to detect anomalies and guide root-cause analysis |
| TrueSight Capacity Optimization | Using investigative studies to visualize system capacity and performance in near real-time |
| Cisco AppDynamics | Using real-time performance monitoring in public, private, or multi-cloud environments |
| Datadog Real User Monitoring | Offering end-to-end visibility of real-time activity and individual user experience |
| New Relic APM 360 | Providing a unified full-stack view for real-time correlation of app performance with trends |
| Splunk App for Infrastructure | Unifying and correlating logs and metrics for a unified approach to infrastructure monitoring |

How Edge Delta Can Help in Your Real-Time Monitoring Needs

Real-time monitoring identifies and addresses issues, optimizes performance, and ensures operational efficiency. However, it’s only effective with the right tool for your monitoring needs.

With Edge Delta, real-time monitoring is easier because it processes data within the agent. As an observability platform, it collects data such as logs and metrics in one place and displays it in a digestible way for easy troubleshooting. It also applies machine-learning pattern recognition at the agent, making the data usable for analysis.

Real-Time Monitoring FAQs

How important is real-time data?

Real-time data is crucial for engineering teams to understand the health and performance of their systems. By analyzing this data, organizations can detect and even prevent downtime, ultimately protecting revenue.

How do I monitor real-time data?

To monitor data in real time, you would typically define critical metrics, instrument applications to emit these metrics, and choose an analytics tool to derive insight from your data. From there, you can create real-time dashboards and set up actionable alerts. You can also regularly update the monitoring setup based on incident analysis and performance trends to ensure continuous improvement.

Edge Delta can help you automate many of these steps, streamlining your observability experience. Specifically, Edge Delta…

- Collects metrics without any code changes using eBPF

- Provides powerful, pre-built dashboards

- Detects and alerts on anomalies automatically

- Eliminates ongoing monitoring toil, including refining alerts

What’s an example of real-time observability?

One example of real-time monitoring is system management. In this area, businesses use real-time monitoring tools to track metrics like CPU performance, network traffic, and memory usage. This lets staff detect and fix anomalies, maintaining the system's performance.